Pranav Inani

ALT-Pilot: Autonomous navigation with Language augmented Topometric maps

Oct 03, 2023

Abstract: We present an autonomous navigation system that operates without assuming HD LiDAR maps of the environment. Our system, ALT-Pilot, relies only on publicly available road network information and a sparse (and noisy) set of crowdsourced language landmarks. With the help of onboard sensors and a language-augmented topometric map, ALT-Pilot autonomously pilots the vehicle to any destination on the road network. We achieve this by leveraging vision-language models pre-trained on web-scale data to identify potential landmarks in a scene, incorporating vision-language features into the recursive Bayesian state estimation stack to generate global (route) plans, and a reactive trajectory planner and controller operating in the vehicle frame. We implement and evaluate ALT-Pilot in simulation and on a real, full-scale autonomous vehicle and report improvements over state-of-the-art topometric navigation systems by a factor of 3x on localization accuracy and 5x on goal reachability.

Frontier Based Exploration for Autonomous Robot

Jun 10, 2018

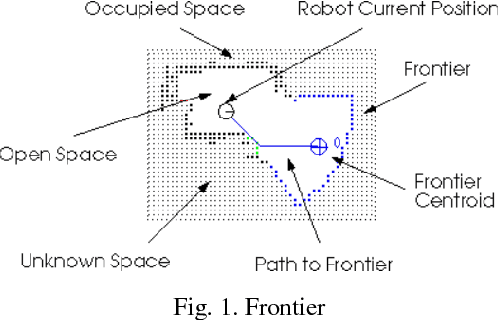

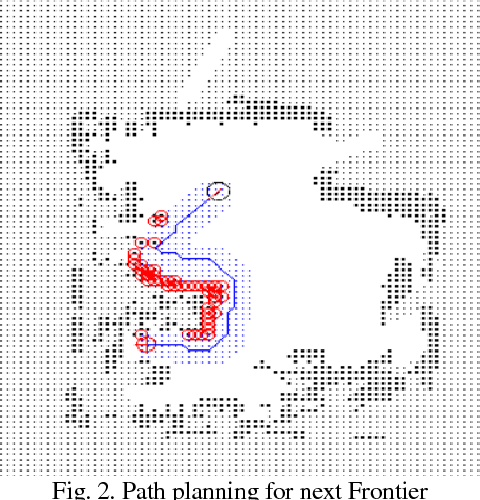

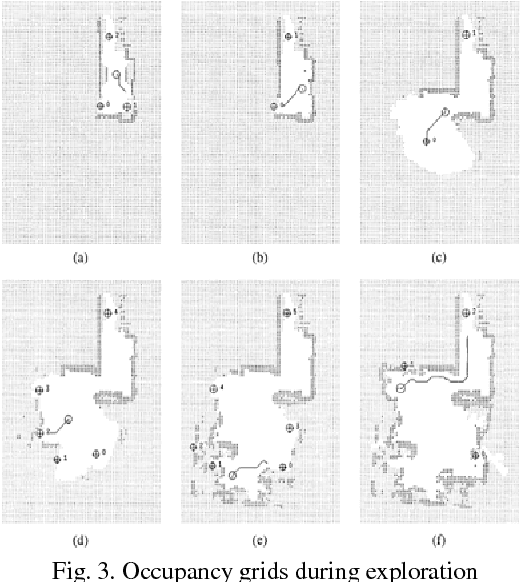

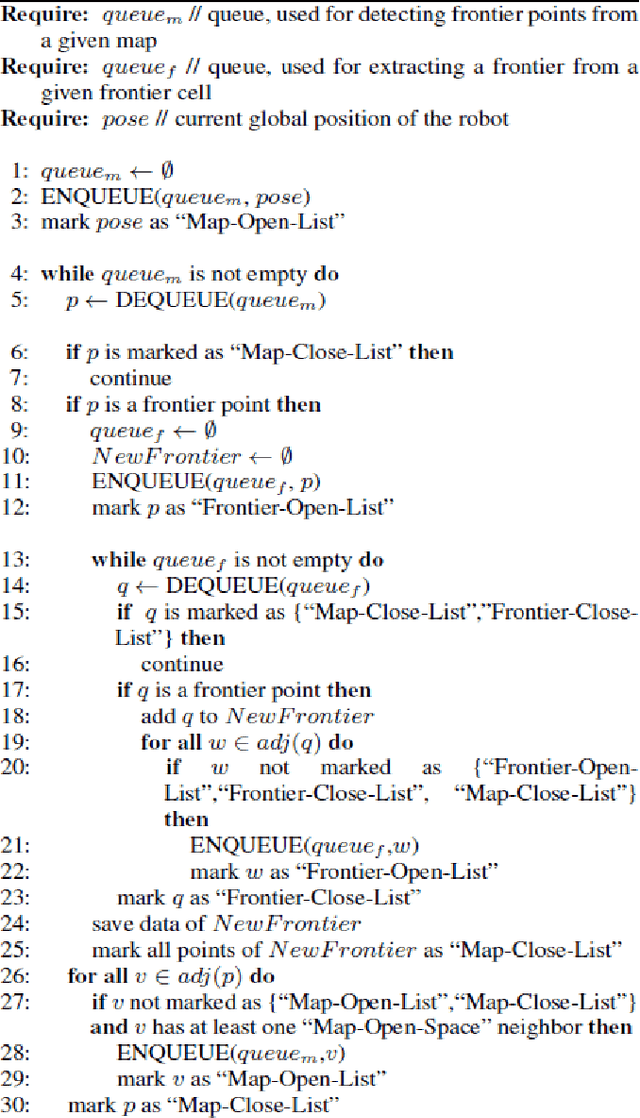

Abstract: Exploration is the process of selecting target points that yield the biggest contribution to a specific gain function in an initially unknown environment. Frontier-based exploration is the most common approach to exploration, wherein frontiers are regions on the boundary between open space and unexplored space. By moving to a new frontier, the robot keeps extending the map of the environment until there are no new frontiers left to detect. In this paper, an autonomous frontier-based exploration strategy, namely the Wavefront Frontier Detector (WFD), is described and implemented in the Gazebo simulation environment as well as on a hardware platform, a Kobuki TurtleBot, using the Robot Operating System (ROS). The advantage of this algorithm is that the robot can explore large open spaces as well as small cluttered spaces. Further, the map generated with this technique is compared against and validated with the map generated using the turtlebot_teleop ROS package.
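The frontier definition in this abstract can be illustrated with a minimal sketch. It is not the paper's WFD implementation: it performs only a single breadth-first search over free cells reachable from the robot and omits WFD's second pass that groups frontier cells into regions. The cell values (-1 unknown, 0 free, 100 occupied) follow the usual ROS occupancy-grid convention, and the names `find_frontiers` and `is_frontier` are hypothetical, chosen for illustration.

```python
from collections import deque

# Assumed cell encoding (ROS occupancy-grid convention).
FREE, OCCUPIED, UNKNOWN = 0, 100, -1

# 4-connected neighbourhood offsets (row, col).
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def is_frontier(grid, r, c):
    """A frontier cell is a free cell with at least one unknown neighbour."""
    if grid[r][c] != FREE:
        return False
    rows, cols = len(grid), len(grid[0])
    for dr, dc in NEIGHBOURS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
            return True
    return False


def find_frontiers(grid, start):
    """Breadth-first search over free cells reachable from `start`,
    returning every frontier cell encountered."""
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    frontiers = []
    while queue:
        r, c = queue.popleft()
        if is_frontier(grid, r, c):
            frontiers.append((r, c))
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in seen and grid[nr][nc] == FREE):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return frontiers


# Toy 3x3 map: the right-hand column is partly unexplored.
grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
frontier_cells = find_frontiers(grid, (0, 0))
```

In an exploration loop, the robot would pick one of the returned cells as its next goal, update the map, and repeat; exploration ends when the returned list is empty.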
