Pinaki Mitra

A PDD-Inspired Channel Estimation Scheme in NOMA Network

Nov 29, 2024

Abstract:In 5G networks, non-orthogonal multiple access (NOMA) offers several benefits by allocating unequal power levels to multiple users simultaneously. However, effective power allocation, successful successive interference cancellation (SIC), and user fairness all depend on precise channel state information (CSI). CSI prediction in NOMA is difficult because of dynamic channels, imperfect models, and feedback overhead. We propose a CSI prediction technique based on a machine learning (ML) model that accounts for partially decoded data (PDD), a byproduct of the SIC process. The proposed technique has been shown to be efficient in handover failure (HOF) prediction and in reducing pilot overhead, which is particularly important in 5G. We also show how ML models may be used to forecast CSI during NOMA handover.
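To make the SIC byproduct concrete, here is a minimal Python sketch of a two-user power-domain NOMA downlink with BPSK, decoded at the near user by SIC. The decoded symbols are then reused as pseudo-pilots in a classical decision-directed least-squares re-estimate of the channel gain. This is an illustration under stated assumptions (a single real flat-fading gain `h`, an initial estimate `h_est` assumed to come from pilots, noiseless by default, hypothetical function names), not the ML-based predictor described in the abstract.

```python
import math


def bpsk(bits):
    """Map bits {0, 1} to real BPSK symbols {+1, -1}."""
    return [1.0 if b == 0 else -1.0 for b in bits]


def hard_decision(samples):
    """Hard BPSK decision: non-negative sample -> bit 0, negative -> bit 1."""
    return [0 if v >= 0 else 1 for v in samples]


def superpose(bits_far, bits_near, p_far, p_near, h, noise):
    """Received signal y = h * (sqrt(p_far) x_far + sqrt(p_near) x_near) + n,
    assuming a single real, flat channel gain h (a simplification)."""
    return [h * (math.sqrt(p_far) * a + math.sqrt(p_near) * b) + w
            for a, b, w in zip(bpsk(bits_far), bpsk(bits_near), noise)]


def sic_decode(y, h_est, p_far):
    """SIC at the near user, given a (possibly imperfect) channel estimate h_est > 0."""
    # Stage 1: decode the high-power far-user stream, treating the near-user stream as noise.
    far_bits = hard_decision([v / h_est for v in y])
    # Stage 2: re-modulate the decoded far-user symbols and cancel them from y.
    x_far_hat = bpsk(far_bits)
    residual = [v - h_est * math.sqrt(p_far) * s for v, s in zip(y, x_far_hat)]
    near_bits = hard_decision([v / h_est for v in residual])
    return far_bits, near_bits


def decision_directed_ls(y, far_bits, near_bits, p_far, p_near):
    """Least-squares re-estimate of h that treats the SIC outputs
    (the partially decoded data) as if they were known pilot symbols."""
    s = [math.sqrt(p_far) * a + math.sqrt(p_near) * b
         for a, b in zip(bpsk(far_bits), bpsk(near_bits))]
    return sum(yk * sk for yk, sk in zip(y, s)) / sum(sk * sk for sk in s)


if __name__ == "__main__":
    far_bits = [0, 1, 1, 0, 1, 0, 0, 1]
    near_bits = [1, 1, 0, 0, 1, 0, 1, 0]
    y = superpose(far_bits, near_bits, 0.8, 0.2, 0.9, [0.0] * 8)
    far_hat, near_hat = sic_decode(y, h_est=0.99, p_far=0.8)
    print(far_hat == far_bits, near_hat == near_bits)  # True True
    print(round(decision_directed_ls(y, far_hat, near_hat, 0.8, 0.2), 6))  # 0.9
```

In this noiseless example the initial estimate is 10% off, yet both streams decode correctly and the least-squares step recovers the true gain. With noise, decoding errors corrupt the pseudo-pilots, so the re-estimate is only as reliable as the SIC decisions feeding it.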

Information Security and Privacy in the Digital World: Some Selected Topics

Mar 30, 2024

Abstract:In the era of generative artificial intelligence and the Internet of Things, the volume of data and the associated need for processing, analysis, and storage are growing explosively, and several new challenges arise in identifying spurious and fake information and in protecting the privacy of sensitive data. This has led to an increasing demand for more robust and resilient schemes for authentication, integrity protection, encryption, non-repudiation, and privacy preservation of data. The chapters in this book present some of the state-of-the-art research in the field of cryptography and security in computing and communications.

Multi-Contextual Design of Convolutional Neural Network for Steganalysis

Jun 19, 2021

Abstract:In recent times, deep learning-based steganalysis classifiers have become popular due to their state-of-the-art performance. Most deep steganalysis classifiers extract noise residuals using high-pass filters as a preprocessing step and feed them to their deep model for classification. However, recent steganographic schemes do not always restrict their embedding to the high-frequency zone; instead, they distribute it according to their embedding policy. Therefore, besides extracting the noise residual, learning the embedding zone is another challenging task. In this work, unlike the conventional approaches, the proposed model first extracts the noise residual using learned denoising kernels to boost the signal-to-noise ratio. After preprocessing, the sparse noise residuals are fed to a novel Multi-Contextual Convolutional Neural Network (M-CNET) that uses heterogeneous context sizes to learn the sparse, low-amplitude representation of noise residuals. The model's performance is further improved by incorporating a Self-Attention module that focuses on the areas prone to steganographic embedding. A comprehensive set of experiments shows the efficacy of the proposed scheme over prior art. In addition, an ablation study justifies the contribution of the various modules of the proposed architecture.
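The abstract contrasts M-CNET with the conventional preprocessing step, in which a fixed high-pass filter extracts a noise residual before classification. As a minimal sketch of that conventional baseline only (not the learned denoising kernels or M-CNET itself), the Python below applies the 5×5 "KV" high-pass kernel often used in the spatial-rich-model steganalysis literature to a grayscale image given as nested lists. The kernel choice and the function name `noise_residual` are assumptions for illustration; the filters used in the paper's comparisons may differ.

```python
# 5x5 "KV" high-pass kernel (to be scaled by 1/12); every row and column sums to zero.
KV = [[-1, 2, -2, 2, -1],
      [2, -6, 8, -6, 2],
      [-2, 8, -12, 8, -2],
      [2, -6, 8, -6, 2],
      [-1, 2, -2, 2, -1]]


def noise_residual(image, kernel=KV, scale=12.0):
    """'Valid' 2-D filtering of a grayscale image (list of rows) with a high-pass kernel.

    The KV kernel is symmetric under 180-degree rotation, so correlation and
    convolution give the same result here.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += kernel[u][v] * image[i + u][j + v]
            row.append(acc / scale)
        out.append(row)
    return out
```

Because the kernel's rows and columns each sum to zero, flat regions and linear intensity ramps map to a zero residual, leaving only the low-amplitude, high-frequency content for the classifier. A learned front end, as in the proposed model, replaces these fixed weights with trainable ones.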

System and Methods for Converting Speech to SQL

Aug 14, 2013

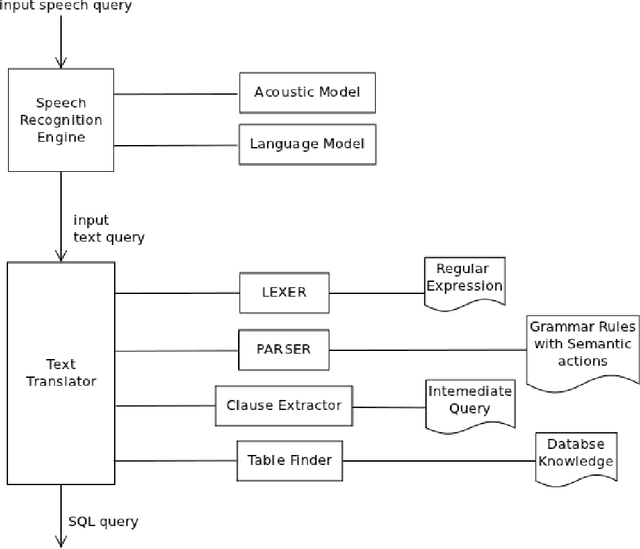

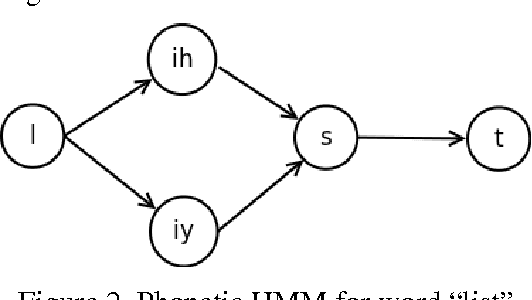

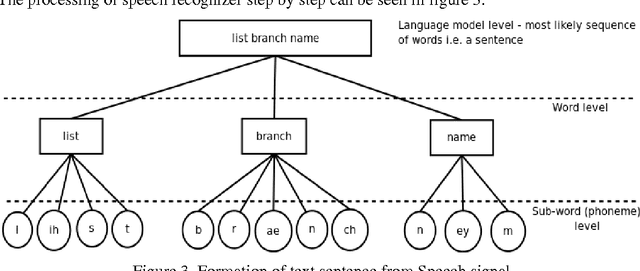

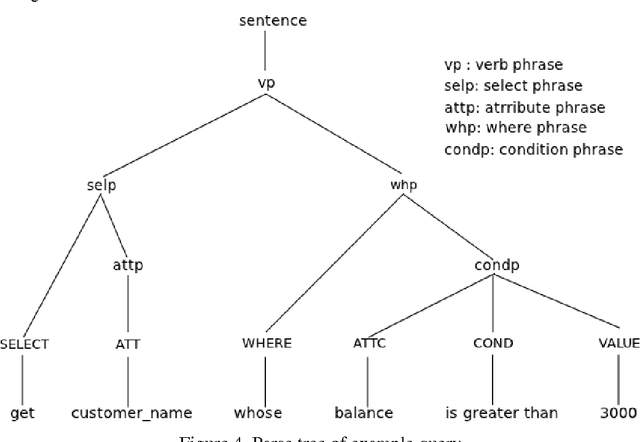

Abstract:This paper is concerned with the conversion of a spoken English-language query into SQL for retrieving data from an RDBMS. A user submits a query as a speech signal through the user interface and receives the result of the query in text format. We have developed acoustic and language models with which a speech utterance can be converted into an English text query; natural language processing techniques can then be applied to this text query to generate an equivalent SQL query. The HTK and Julius tools have been used to convert speech into English text, and to convert the English text query into an SQL query we have implemented a system that uses rule-based translation. The translation uses a lexical analyzer, a parser, and syntax-directed translation techniques, as in compilers. The JFlex and BYACC tools have been used to build the lexical analyzer and the parser, respectively. The system is domain independent, i.e., it can run on different databases because it generates lex files from the underlying database.
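The text-to-SQL stage (lexical analysis, parsing, and syntax-directed translation, with lexer vocabulary derived from the database) can be illustrated with a minimal hand-written Python sketch. It is not the paper's JFlex/BYACC implementation: the grammar, keyword set, and function names (`build_lexicon`, `tokenize`, `translate`) are hypothetical, only single-table `SELECT` queries with at most one equality condition are covered, and the input is assumed to be the text already produced by the speech recognizer.

```python
import re

# Hypothetical mini-grammar (not the paper's rules):
#   query := ("show" | "list") cols ("of" | "from") TABLE ["where" COLUMN "is" VALUE]
#   cols  := "all" | COLUMN ("and" COLUMN)*
KEYWORDS = {"show", "list", "all", "and", "of", "from", "where", "is"}
FILLERS = {"the", "me", "please"}


def build_lexicon(schema):
    """Derive token classes from the schema (table -> column list), so the
    vocabulary comes from the database instead of being hard-coded."""
    lexicon = {}
    for table, columns in schema.items():
        for col in columns:
            lexicon.setdefault(col.lower(), ("COLUMN", col))
        lexicon[table.lower()] = ("TABLE", table)
    return lexicon


def tokenize(text, lexicon):
    """Lexical analysis: classify each word as keyword, schema name, or literal value."""
    tokens = []
    for word in re.findall(r"[A-Za-z_]+|\d+", text):
        low = word.lower()
        if low in FILLERS:
            continue
        if low in KEYWORDS:
            tokens.append(("KW", low))
        elif low in lexicon:
            tokens.append(lexicon[low])
        else:
            tokens.append(("VALUE", word))  # keep the literal's original case
    return tokens


def translate(text, schema):
    """Syntax-directed translation of an English text query into a SELECT statement."""
    toks = tokenize(text, build_lexicon(schema))
    pos = 0

    def peek():
        return toks[pos] if pos < len(toks) else ("EOF", None)

    def expect(kind, word=None):
        nonlocal pos
        tok = peek()
        if tok[0] != kind or (word is not None and tok[1] != word):
            raise ValueError(f"expected {word or kind}, got {tok[1]!r}")
        pos += 1
        return tok[1]

    if peek() not in (("KW", "show"), ("KW", "list")):
        raise ValueError("query must start with 'show' or 'list'")
    pos += 1

    if peek() == ("KW", "all"):
        pos += 1
        cols = ["*"]
    else:
        cols = [expect("COLUMN")]
        while peek() == ("KW", "and"):
            pos += 1
            cols.append(expect("COLUMN"))

    if peek() not in (("KW", "of"), ("KW", "from")):
        raise ValueError("expected 'of' or 'from' before the table name")
    pos += 1
    table = expect("TABLE")

    where = ""
    if peek() == ("KW", "where"):
        pos += 1
        col = expect("COLUMN")
        expect("KW", "is")
        val = expect("VALUE")
        # Tokens contain only letters, digits, and underscores, so quoting cannot be broken.
        where = f" WHERE {col} = {val if val.isdigit() else repr(val)}"

    if peek()[0] != "EOF":
        raise ValueError(f"unexpected trailing token {peek()[1]!r}")
    return f"SELECT {', '.join(cols)} FROM {table}{where};"
```

For example, with the schema `{"student": ["name", "age", "city"]}`, the text "show name and age of student where city is Delhi" translates to `SELECT name, age FROM student WHERE city = 'Delhi';`. Because the lexicon is built from the schema rather than hard-coded, pointing the sketch at a different database changes only the `schema` argument, mirroring the abstract's point that lex files are generated from the underlying database.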
