Pierre-Edouard Honnet

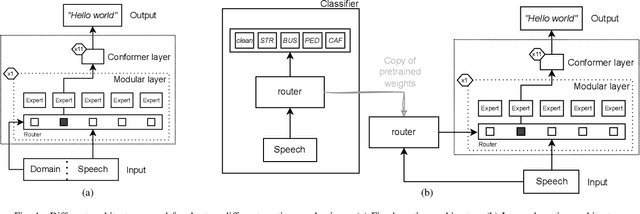

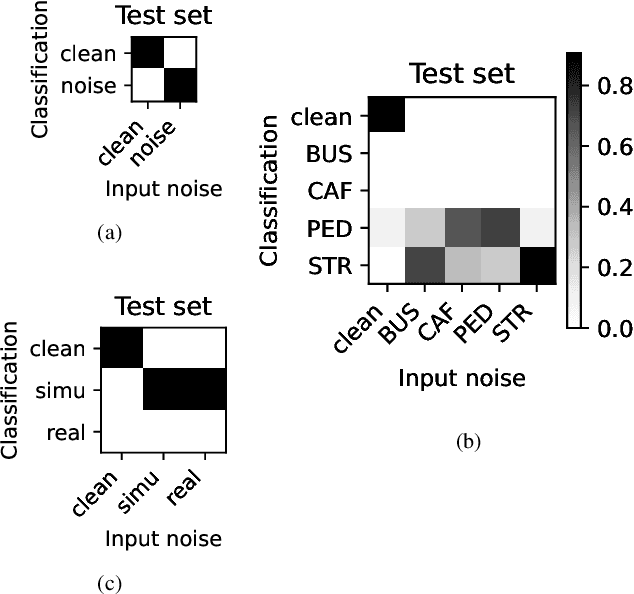

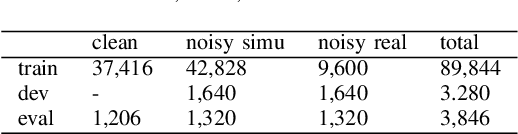

An investigation of modularity for noise robustness in conformer-based ASR

Sep 09, 2024

Abstract: Whilst state-of-the-art automatic speech recognition (ASR) can perform well, it still degrades when exposed to acoustic environments that differ from those used when training the model. Unfamiliar environments for a given model may well be known a priori, but yield comparatively small amounts of adaptation data. In this experimental study, we investigate to what extent recent formalisations of modularity can aid adaptation of ASR to new acoustic environments. Using a conformer-based model and fixed routing, we confirm that environment awareness can indeed lead to improved performance in known environments. However, at least on the (CHIME) datasets in the study, it is difficult for a classifier module to distinguish between different noisy environments; a simpler distinction between noisy and clean speech is the optimal configuration. The results have clear implications for deploying large models in particular environments, with or without a priori knowledge of the environmental noise.

Machine Translation of Low-Resource Spoken Dialects: Strategies for Normalizing Swiss German

Feb 06, 2018

Abstract: The goal of this work is to design a machine translation (MT) system for a low-resource family of dialects, collectively known as Swiss German, which are widely spoken in Switzerland but seldom written. We collected a significant number of parallel written resources to start with, up to a total of about 60k words. Moreover, we identified several other promising data sources for Swiss German. Then, we designed and compared three strategies for normalizing Swiss German input in order to address the regional diversity. We found that character-based neural MT was the best solution for text normalization. In combination with phrase-based statistical MT, our solution reached a BLEU score of 36% when translating from the Bernese dialect. This value, however, decreases as the test data becomes more remote from the training data, both geographically and topically. These resources and normalization techniques are a first step towards full MT of Swiss German dialects.
