Pierre Bagnaninchi

Multi-frequency Electrical Impedance Tomography Reconstruction with Multi-Branch Attention Image Prior

Sep 17, 2024

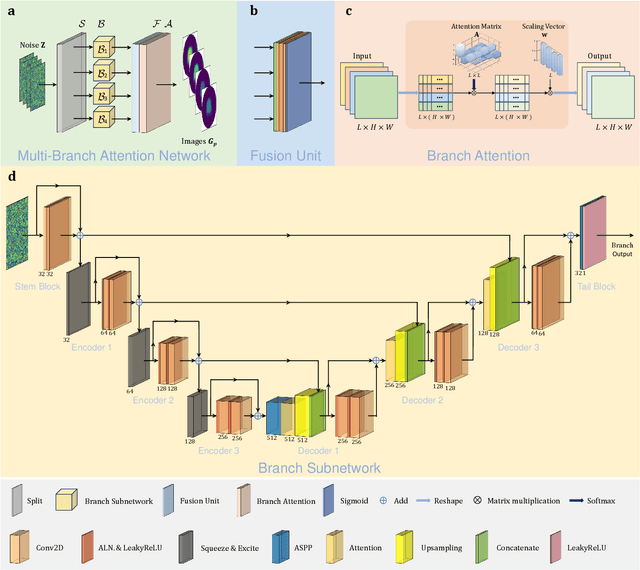

Abstract: Multi-frequency Electrical Impedance Tomography (mfEIT) is a promising biomedical imaging technique that estimates tissue conductivities across different frequencies. Current state-of-the-art (SOTA) algorithms, which rely on supervised learning and Multiple Measurement Vectors (MMV), require extensive training data, making them time-consuming, costly, and less practical for widespread applications. Moreover, the dependency on training data in supervised MMV methods can introduce erroneous conductivity contrasts across frequencies, posing significant concerns in biomedical applications. To address these challenges, we propose a novel unsupervised learning approach based on Multi-Branch Attention Image Prior (MAIP) for mfEIT reconstruction. Our method employs a carefully designed Multi-Branch Attention Network (MBA-Net) to represent multiple frequency-dependent conductivity images and simultaneously reconstructs mfEIT images by iteratively updating its parameters. By leveraging the implicit regularization capability of the MBA-Net, our algorithm can capture significant inter- and intra-frequency correlations, enabling robust mfEIT reconstruction without the need for training data. Through simulation and real-world experiments, our approach demonstrates performance comparable to, or better than, SOTA algorithms while exhibiting superior generalization capability. These results suggest that the MAIP-based method can be used to improve the reliability and applicability of mfEIT in various settings.
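The core idea of an untrained image prior (represent all frequency images with one network, then fit the network's weights to the measurements instead of training on a dataset) can be illustrated with a toy sketch. This is not MAIP or MBA-Net: it assumes a *linearized* forward model with a single sensitivity matrix `A` shared by all frequencies, and it replaces the convolutional attention branches with a tiny fully connected generator (a shared ReLU trunk plus one linear output branch per frequency). The function name, layer sizes, and learning rate are hypothetical choices for illustration only.

```python
import numpy as np


def fit_multibranch_prior(A, Y, hidden=32, lr=2e-3, n_iter=3000, seed=0):
    """Fit an untrained multi-branch generator so that A @ X matches Y.

    Toy illustration only (hypothetical helper, not MBA-Net).
    A: (M, N) sensitivity matrix of an assumed *linearized* forward model.
    Y: (M, F) measurement vectors, one column per frequency.
    Returns the images X (N, F) produced by the fitted generator and the loss history.
    """
    rng = np.random.default_rng(seed)
    N, F = A.shape[1], Y.shape[1]
    z = rng.standard_normal(hidden)                       # fixed random input, never updated
    W1 = rng.standard_normal((hidden, hidden)) / np.sqrt(hidden)
    b1 = np.zeros(hidden)
    W2 = 0.01 * rng.standard_normal((F, N, hidden))       # one output branch per frequency

    def forward():
        pre = W1 @ z + b1
        h = np.maximum(pre, 0.0)                          # trunk shared by all branches
        X = np.einsum("fnh,h->nf", W2, h)                 # column f = image at frequency f
        return pre, h, X

    losses = []
    for _ in range(n_iter):
        pre, h, X = forward()
        R = A @ X - Y                                     # residuals at all frequencies
        losses.append(0.5 * float(np.sum(R ** 2)))
        G_X = A.T @ R                                     # dL/dX
        G_W2 = np.einsum("nf,h->fnh", G_X, h)             # dL/dW2
        G_pre = np.einsum("fnh,nf->h", W2, G_X) * (pre > 0)  # backprop through the ReLU
        # Plain gradient-descent step on the network weights (the "reconstruction").
        W2 -= lr * G_W2
        W1 -= lr * np.outer(G_pre, z)
        b1 -= lr * G_pre
    return forward()[2], losses
```

Because every branch reads the same trunk features, updating the trunk to fit one frequency also changes the others; this is the kind of cross-frequency coupling a shared-parameter prior provides. In deep-image-prior methods generally, the network architecture and the number of iterations act as the regularizer. A small MLP like this one does not reproduce the implicit regularization of a convolutional attention network.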

Impedance-optical Dual-modal Cell Culture Imaging with Learning-based Information Fusion

Jun 15, 2021

Abstract: While Electrical Impedance Tomography (EIT) has found many biomedical applications, higher resolution is needed to provide quantitative analysis for tissue engineering and regenerative medicine. This paper proposes an impedance-optical dual-modal imaging framework, which is aimed mainly at high-quality 3D cell culture imaging and can be extended to other tissue engineering applications. The framework comprises three components: an impedance-optical dual-modal sensor, a guidance image processing algorithm, and a deep learning model named multi-scale feature cross fusion network (MSFCF-Net) for information fusion. The MSFCF-Net has two inputs: the EIT measurement and a binary mask image that the guidance image processing algorithm generates from an RGB microscopic image. The network then fuses the information from the two imaging modalities and generates the final conductivity image. We assess the performance of the proposed dual-modal framework through numerical simulation and MCF-7 cell imaging experiments. The results show that the proposed method could significantly improve image quality, indicating that impedance-optical joint imaging has the potential to reveal the structural and functional information of tissue-level targets simultaneously.

MMV-Net: A Multiple Measurement Vector Network for Multi-frequency Electrical Impedance Tomography

May 26, 2021

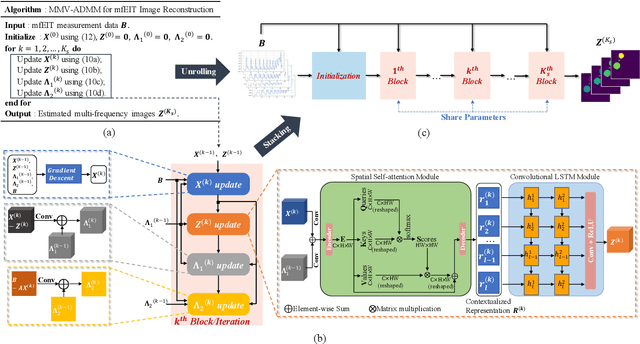

Abstract: Multi-frequency Electrical Impedance Tomography (mfEIT) is an emerging biomedical imaging modality that reveals frequency-dependent conductivity distributions in biomedical applications. Conventional model-based image reconstruction methods suffer from low spatial resolution, unconstrained frequency correlation and high computational cost. Deep learning has been extensively applied to solving the EIT inverse problem in biomedical and industrial process imaging. However, most existing learning-based approaches deal with the single-frequency setup, which is inefficient and ineffective when extended to the multi-frequency setup. In this paper, we present a Multiple Measurement Vector (MMV) model-based learning algorithm named MMV-Net to solve the mfEIT image reconstruction problem. MMV-Net takes into account the correlations between mfEIT images and unfolds the update steps of the Alternating Direction Method of Multipliers (ADMM) for the MMV problem. The non-linear shrinkage operator associated with the weighted ℓ2,1 regularization term is generalized with a cascade of a Spatial Self-Attention module and a Convolutional Long Short-Term Memory (ConvLSTM) module to capture intra- and inter-frequency dependencies. The proposed MMV-Net was validated on our Edinburgh mfEIT Dataset and in a series of comprehensive experiments. All reconstructed results show superior image quality, convergence performance and noise robustness compared with the state of the art.
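For reference, the classical algorithm that MMV-Net unfolds can be sketched as plain ADMM for the weighted ℓ2,1 MMV problem, min_X ½‖AX − Y‖_F² + λ Σ_i w_i ‖X_i‖₂. The sketch assumes a linearized forward model with one sensitivity matrix `A` shared by all frequencies, and it stores the images as X of shape (pixels × frequencies), so each row X_i is one pixel across all frequencies. The function names and default parameters are hypothetical. The `group_soft_threshold` step is the fixed shrinkage operator that, according to the abstract, MMV-Net replaces with a learned Spatial Self-Attention + ConvLSTM cascade.

```python
import numpy as np


def group_soft_threshold(V, thresh):
    """Proximal operator of sum_i thresh_i * ||V[i, :]||_2 (row-wise shrinkage).

    Row i holds one pixel's values at all F frequencies, so the whole row is
    either scaled towards zero or set exactly to zero (joint sparsity).
    """
    norms = np.linalg.norm(V, axis=1, keepdims=True)
    scale = np.maximum(0.0, 1.0 - thresh / np.maximum(norms, 1e-12))  # avoid 0/0
    return scale * V


def admm_mmv(A, Y, lam, w=None, rho=1.0, n_iter=200):
    """ADMM for min_X 0.5*||A X - Y||_F^2 + lam * sum_i w_i * ||X[i, :]||_2.

    A: (M, N) sensitivity matrix, assumed linearized and shared by all frequencies.
    Y: (M, F) measurement vectors, one column per frequency.
    w: optional (N,) nonnegative row weights (all ones if omitted).
    """
    N, F = A.shape[1], Y.shape[1]
    w = np.ones(N) if w is None else np.asarray(w, dtype=float)
    Z = np.zeros((N, F))
    U = np.zeros((N, F))                       # scaled dual variable
    # The X-update solves the same SPD system every iteration: factor it once.
    L = np.linalg.cholesky(A.T @ A + rho * np.eye(N))
    AtY = A.T @ Y
    thresh = (lam / rho) * w[:, None]          # per-row shrinkage thresholds
    for _ in range(n_iter):
        rhs = AtY + rho * (Z - U)
        X = np.linalg.solve(L.T, np.linalg.solve(L, rhs))   # (A^T A + rho I) X = rhs
        Z = group_soft_threshold(X + U, thresh)              # weighted l2,1 prox
        U = U + X - Z                                        # dual update
    return Z
```

For example, `group_soft_threshold(np.array([[3.0, 4.0], [0.3, 0.4]]), 1.0)` scales the first row (norm 5) by 0.8 to `[2.4, 3.2]` and zeroes the second row (norm 0.5). Unfolding means fixing a small number of these iterations as network stages and learning the parameters inside each stage, rather than running the loop to convergence.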
