Philippe Robert

IntrinSeqNet: Learning to Estimate the Reflectance from Varying Illumination

Jun 13, 2019

Abstract: Intrinsic image decomposition describes an image in terms of its reflectance and shading components. In this paper we tackle the problem of estimating the diffuse reflectance from a sequence of images captured from a fixed viewpoint under varying illumination. To this end we propose a deep learning approach that avoids heuristics and strong assumptions on the reflectance prior. We compare two network architectures: a classic U-shaped Convolutional Neural Network (CNN) and a Recurrent Neural Network (RNN) composed of Convolutional Gated Recurrent Units (CGRU). We train our networks on a new dataset specifically designed for the task of intrinsic decomposition from sequences. We test our networks on the MIT and BigTime datasets and outperform state-of-the-art algorithms both qualitatively and quantitatively.
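The decomposition mentioned in this abstract is commonly written as a per-pixel product, image = reflectance × shading. The sketch below is only an illustration of that model on synthetic data, not the networks from the paper: it assumes a grayscale, purely diffuse scene, and the array names and sizes are hypothetical. It shows why a fixed-viewpoint sequence is informative: the reflectance is shared by all frames, so it cancels in frame ratios and becomes an additive constant in the log domain.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy scene: one reflectance map shared by every frame,
# and a different shading map for each of 5 illumination conditions.
reflectance = rng.uniform(0.2, 0.9, size=(8, 8))
shadings = rng.uniform(0.1, 1.0, size=(5, 8, 8))

# Multiplicative intrinsic model: I_t = R * S_t for each frame t.
images = reflectance[None] * shadings

# The ratio of two frames depends only on shading,
# because the shared reflectance cancels out.
ratio = images[0] / images[1]
shading_ratio = shadings[0] / shadings[1]

# In the log domain the model is additive: log I_t = log R + log S_t.
log_residual = np.log(images) - np.log(reflectance)[None]
```

Recovering the reflectance itself from `images` alone is the hard part, since any global scale can be traded between reflectance and shading; that ambiguity is what priors or learned models are meant to resolve.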

Monotonic Gaussian Process for Spatio-Temporal Trajectory Separation in Brain Imaging Data

Feb 28, 2019

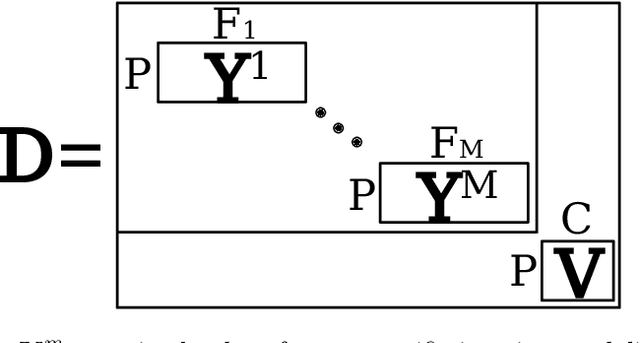

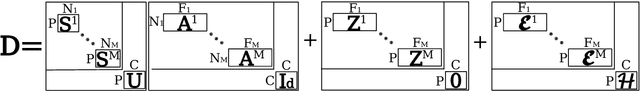

Abstract: We introduce a probabilistic generative model for disentangling spatio-temporal disease trajectories from series of high-dimensional brain images. The model is based on spatio-temporal matrix factorization, where inference on the sources is constrained by anatomically plausible statistical priors. To model realistic trajectories, the temporal sources are defined as monotonic and time-reparametrized Gaussian Processes. To account for the non-stationarity of brain images, we model the spatial sources as sparse codes convolved at multiple scales. The method was tested on synthetic data, where it compared favourably with standard blind source separation approaches. Applied to large-scale imaging data from a clinical study, it disentangles differential temporal progression patterns that map to brain regions key to neurodegeneration, while revealing a disease-specific time scale associated with the clinical diagnosis.

Robust Point Light Source Estimation Using Differentiable Rendering

Dec 12, 2018

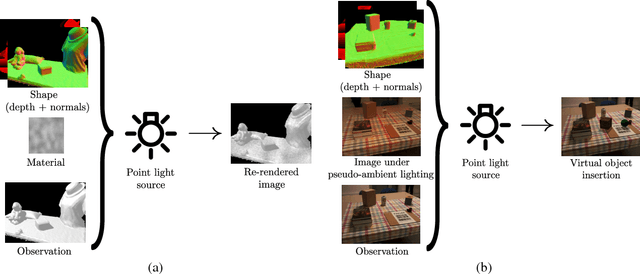

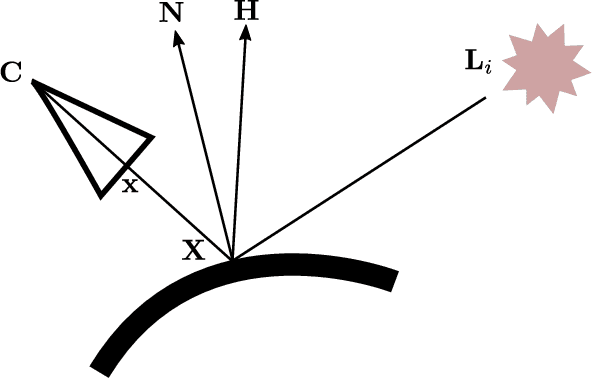

Abstract: Illumination estimation is often used in mixed reality to re-render a scene from another point of view, to change the color or texture of an object, or to insert a consistently lit virtual object into a real video or photograph. Specifically, the estimation of a point light source is required for the shadows cast by the inserted object to be consistent with the real scene. Given an RGBD image of the scene, we treat illumination retrieval as an inverse problem: we aim to find the illumination that minimizes the photometric error between the rendered image and the observation. In particular, we propose a novel differentiable renderer based on the Blinn-Phong model with cast shadows. We compare our differentiable renderer to state-of-the-art methods and demonstrate its robustness to an incorrect reflectance estimation.
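To make the inverse-problem formulation concrete, here is a minimal sketch of Blinn-Phong shading under a point light, together with a mean-squared photometric error. It is not the renderer proposed in the paper: it omits cast shadows and distance falloff, is not differentiable as written, and the function names, the flat test scene, and the parameter values (`k_s`, `shininess`) are all assumptions made for illustration.

```python
import numpy as np

def normalize(v):
    """Scale vectors along the last axis to unit length."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def blinn_phong(points, normals, albedo, light_pos, cam_pos,
                k_s=0.3, shininess=32.0):
    """Shade 3D points with a diffuse plus Blinn-Phong specular term."""
    l = normalize(light_pos - points)   # direction from surface to light
    v = normalize(cam_pos - points)     # direction from surface to camera
    h = normalize(l + v)                # half vector between l and v
    n_dot_l = np.clip(np.sum(normals * l, axis=-1), 0.0, None)
    n_dot_h = np.clip(np.sum(normals * h, axis=-1), 0.0, None)
    diffuse = albedo * n_dot_l[..., None]
    # Specular highlight only where the surface faces the light.
    specular = k_s * (n_dot_h ** shininess)[..., None] * (n_dot_l > 0)[..., None]
    return diffuse + specular

def photometric_error(rendered, observed):
    """Mean squared difference between a rendering and the observation."""
    return float(np.mean((rendered - observed) ** 2))

# Assumed toy scene: a flat gray plane at z = 0 seen from above.
xs = np.linspace(-1.0, 1.0, 16)
X, Y = np.meshgrid(xs, xs)
points = np.stack([X, Y, np.zeros_like(X)], axis=-1)
normals = np.zeros_like(points)
normals[..., 2] = 1.0
albedo = np.full(points.shape, 0.5)
cam_pos = np.array([0.0, 0.0, 2.0])

true_light = np.array([0.5, 0.2, 1.0])
observed = blinn_phong(points, normals, albedo, true_light, cam_pos)

# The error vanishes at the true light position and is positive elsewhere.
err_true = photometric_error(
    blinn_phong(points, normals, albedo, true_light, cam_pos), observed)
err_wrong = photometric_error(
    blinn_phong(points, normals, albedo, np.array([-0.5, 0.3, 1.5]), cam_pos),
    observed)
```

An optimizer would search over the light position to drive this error down; a differentiable renderer makes that search possible with gradient-based methods.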
