Philippe Lemey

Deep reinforcement learning for large-scale epidemic control

Mar 30, 2020

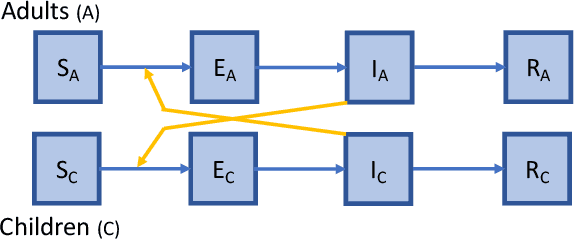

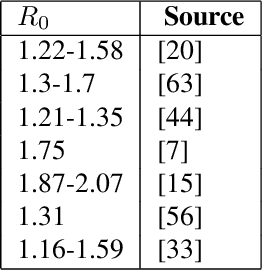

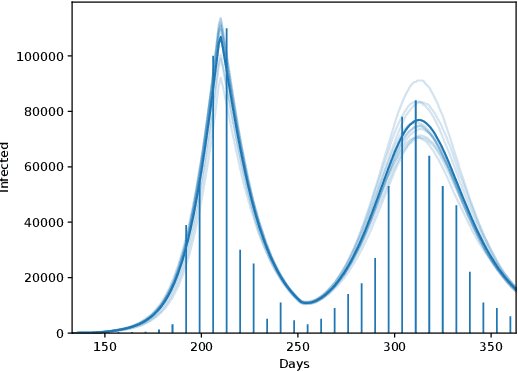

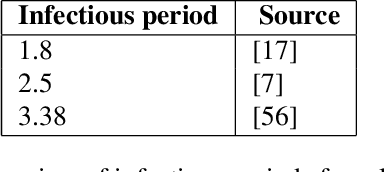

Abstract: Epidemics of infectious diseases are an important threat to public health and global economies. Yet, the development of prevention strategies remains a challenging process, as epidemics are non-linear and complex processes. For this reason, we investigate a deep reinforcement learning approach to automatically learn prevention strategies in the context of pandemic influenza. Firstly, we construct a new epidemiological meta-population model, with 379 patches (one for each administrative district in Great Britain), that adequately captures the infection process of pandemic influenza. Our model balances complexity and computational efficiency such that the use of reinforcement learning techniques becomes attainable. Secondly, we set up a ground truth such that we can evaluate the performance of the 'Proximal Policy Optimization' algorithm to learn in a single district of this epidemiological model. Finally, we consider a large-scale problem, by conducting an experiment where we aim to learn a joint policy to control the districts in a community of 11 tightly coupled districts, for which no ground truth can be established. This experiment shows that deep reinforcement learning can be used to learn mitigation policies in complex epidemiological models with a large state space. Moreover, through this experiment, we demonstrate that there can be an advantage to considering collaboration between districts when designing prevention strategies.
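To make the idea of a meta-population model concrete, the following is a minimal sketch of one update step for a generic, deterministic SIR model over coupled patches. It is not the model from the paper: the compartment structure, the `coupling` matrix, the parameters `beta` and `gamma`, and the function name `step_metapop_sir` are all illustrative assumptions, and the sketch assumes every patch has a positive population.

```python
def step_metapop_sir(S, I, R, coupling, beta, gamma, dt=1.0):
    """One Euler step of a generic SIR meta-population model (illustrative only).

    S, I, R  -- lists with the susceptible/infected/recovered count per patch
    coupling -- coupling[i][j] is the assumed weight of patch j's prevalence
                in the force of infection felt by patch i
    beta     -- assumed transmission rate; gamma -- assumed recovery rate
    """
    n = len(S)
    N = [S[i] + I[i] + R[i] for i in range(n)]  # patch population sizes
    new_S, new_I, new_R = [], [], []
    for i in range(n):
        # Force of infection: coupling-weighted prevalence over all patches.
        force = beta * sum(coupling[i][j] * I[j] / N[j] for j in range(n))
        # Cap flows so that no compartment can become negative.
        infections = min(S[i], force * S[i] * dt)
        recoveries = min(I[i], gamma * I[i] * dt)
        new_S.append(S[i] - infections)
        new_I.append(I[i] + infections - recoveries)
        new_R.append(R[i] + recoveries)
    return new_S, new_I, new_R


# Hypothetical two-patch example: the outbreak starts in patch 0 only.
S, I, R = step_metapop_sir(
    S=[990.0, 1000.0], I=[10.0, 0.0], R=[0.0, 0.0],
    coupling=[[0.9, 0.1], [0.1, 0.9]], beta=0.5, gamma=0.2,
)
```

In a reinforcement learning setting, a step function of this kind would play the role of the environment transition, with an intervention in a district modeled, for example, as a temporary change to that district's coupling weights or transmission rate.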

Bayesian Best-Arm Identification for Selecting Influenza Mitigation Strategies

Jun 15, 2018

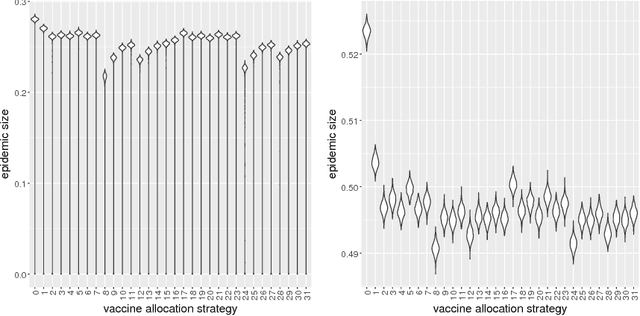

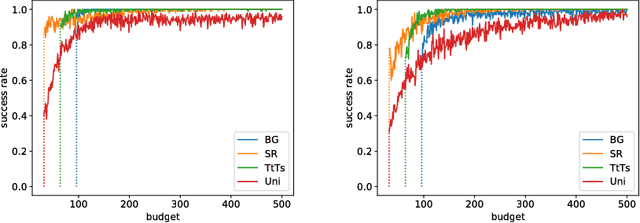

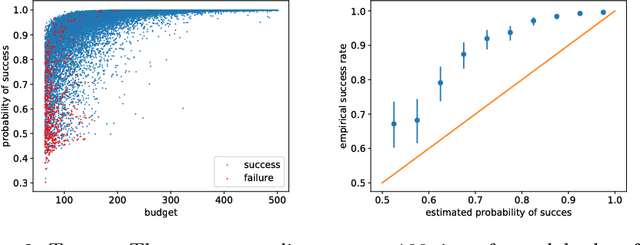

Abstract: Pandemic influenza has the epidemic potential to kill millions of people. While various preventive measures exist (i.a., vaccination and school closures), deciding on strategies that lead to their most effective and efficient use remains challenging. To this end, individual-based epidemiological models are essential to assist decision makers in determining the best strategy to curb epidemic spread. However, individual-based models are computationally intensive and it is therefore pivotal to identify the optimal strategy using a minimal number of model evaluations. Additionally, as epidemiological modeling experiments need to be planned, a computational budget needs to be specified a priori. Consequently, we present a new sampling technique to optimize the evaluation of preventive strategies using fixed budget best-arm identification algorithms. We use epidemiological modeling theory to derive knowledge about the reward distribution which we exploit using Bayesian best-arm identification algorithms (i.e., Top-two Thompson sampling and BayesGap). We evaluate these algorithms in a realistic experimental setting and demonstrate that it is possible to identify the optimal strategy using only a limited number of model evaluations, i.e., 2-to-3 times faster compared to the uniform sampling method, the predominant technique used for epidemiological decision making in the literature. Finally, we contribute and evaluate a statistic for Top-two Thompson sampling to inform the decision makers about the confidence of an arm recommendation.
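As an illustration of fixed budget best-arm identification, here is a minimal sketch of Top-two Thompson sampling in which each arm stands for one preventive strategy and each pull for one model evaluation. Several choices here are assumptions and do not come from the paper: rewards are treated as Gaussian with a known noise level `noise_sd` and a flat prior, `beta=0.5` is used as the top-two mixing probability, the resampling loop is capped at `max_resamples`, and the `simulate` function is a hypothetical stand-in for an epidemiological model.

```python
import random


def top_two_thompson(pull, n_arms, budget, beta=0.5, noise_sd=1.0,
                     seed=0, max_resamples=100):
    """Fixed budget Top-two Thompson sampling with Gaussian posteriors (sketch).

    pull(arm) returns one noisy reward; budget must be at least n_arms.
    Returns the recommended arm and the number of pulls per arm.
    """
    rng = random.Random(seed)
    counts = [0] * n_arms
    sums = [0.0] * n_arms

    def play(arm):
        sums[arm] += pull(arm)
        counts[arm] += 1

    def sampled_best():
        # Draw one mean per arm from its posterior N(mean, noise_sd^2 / n)
        # and return the arm with the largest draw.
        draws = [rng.gauss(sums[a] / counts[a], noise_sd / counts[a] ** 0.5)
                 for a in range(n_arms)]
        return max(range(n_arms), key=draws.__getitem__)

    for arm in range(n_arms):  # initialise: evaluate every arm once
        play(arm)

    for _step in range(budget - n_arms):
        first = sampled_best()
        choice = first
        if rng.random() >= beta:
            # With probability 1 - beta, resample until a different arm wins
            # and play that challenger instead (capped, as an assumption).
            for _try in range(max_resamples):
                challenger = sampled_best()
                if challenger != first:
                    choice = challenger
                    break
        play(choice)

    means = [sums[a] / counts[a] for a in range(n_arms)]
    return max(range(n_arms), key=means.__getitem__), counts


# Hypothetical simulator: three strategies with made-up mean rewards.
true_means = [0.0, 0.2, 1.0]
sim_rng = random.Random(1)


def simulate(arm):
    return sim_rng.gauss(true_means[arm], 0.1)


best, counts = top_two_thompson(simulate, n_arms=3, budget=200, noise_sd=0.1)
```

Compared with uniform sampling, which would spend the budget equally on all arms, this scheme concentrates evaluations on the arms that are still plausible candidates for the best strategy.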