Peter Bult

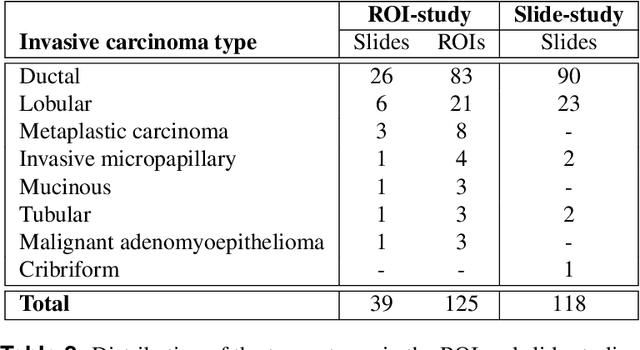

A Multicentric Dataset for Training and Benchmarking Breast Cancer Segmentation in H&E Slides

Oct 02, 2025

Abstract: Automated semantic segmentation of whole-slide images (WSIs) stained with hematoxylin and eosin (H&E) is essential for large-scale artificial intelligence-based biomarker analysis in breast cancer. However, existing public datasets for breast cancer segmentation lack the morphological diversity needed to support model generalizability and robust biomarker validation across heterogeneous patient cohorts. We introduce BrEast cancEr hisTopathoLogy sEgmentation (BEETLE), a dataset for multiclass semantic segmentation of H&E-stained breast cancer WSIs. It consists of 587 biopsies and resections from three collaborating clinical centers and two public datasets, digitized using seven scanners, and covers all molecular subtypes and histological grades. Using diverse annotation strategies, we collected annotations across four classes - invasive epithelium, non-invasive epithelium, necrosis, and other - with particular focus on morphologies underrepresented in existing datasets, such as ductal carcinoma in situ and dispersed lobular tumor cells. The dataset's diversity and relevance to the rapidly growing field of automated biomarker quantification in breast cancer ensure its high potential for reuse. Finally, we provide a well-curated, multicentric external evaluation set to enable standardized benchmarking of breast cancer segmentation models.

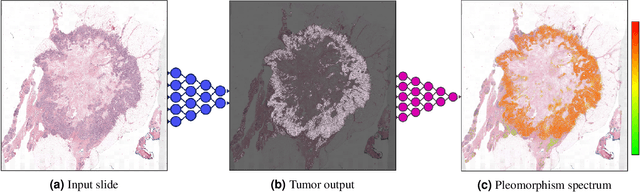

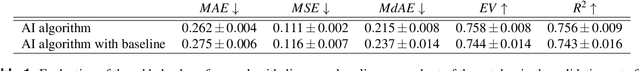

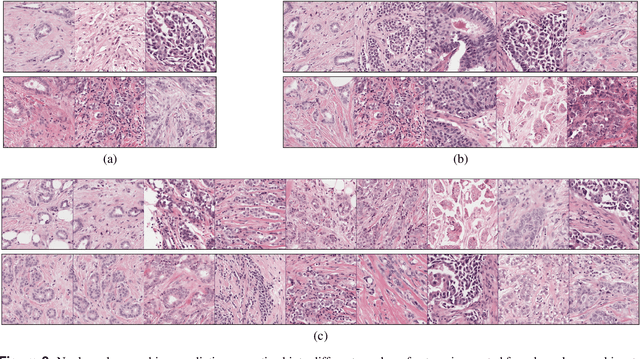

Automated Scoring of Nuclear Pleomorphism Spectrum with Pathologist-level Performance in Breast Cancer

Dec 24, 2020

Abstract: Nuclear pleomorphism, defined herein as the extent of abnormalities in the overall appearance of tumor nuclei, is one of the components of the three-tiered breast cancer grading. Given that nuclear pleomorphism reflects a continuous spectrum of variation, we trained a deep neural network on a large variety of tumor regions from the collective knowledge of several pathologists, without constraining the network to the traditional three-category classification. We also motivate an additional approach in which we discuss the benefit of using normal epithelium as a baseline, following routine clinical practice in which pathologists are trained to score nuclear pleomorphism in tumor with the normal breast epithelium for comparison. In multiple experiments, our fully automated approach achieved top pathologist-level performance both in selected regions of interest and on whole-slide images, compared to ten and four pathologists, respectively.

Whole-Slide Mitosis Detection in H&E Breast Histology Using PHH3 as a Reference to Train Distilled Stain-Invariant Convolutional Networks

Aug 17, 2018

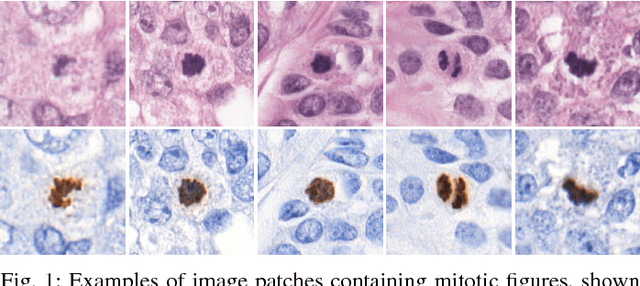

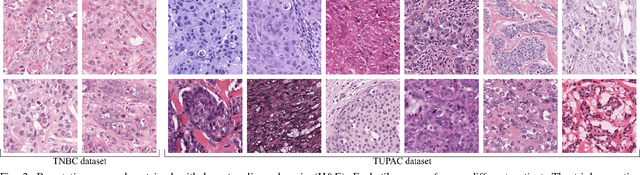

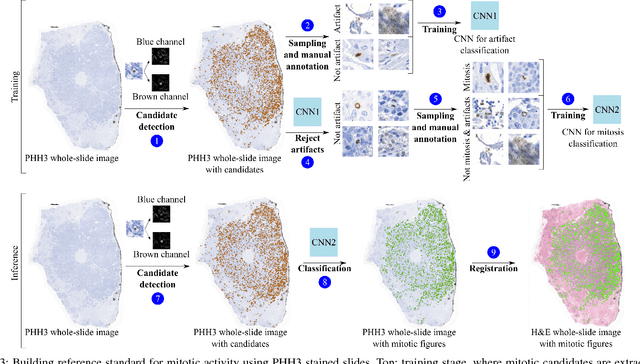

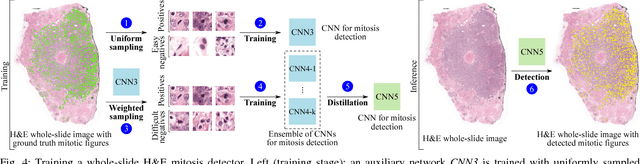

Abstract: Manual counting of mitotic tumor cells in tissue sections constitutes one of the strongest prognostic markers for breast cancer. This procedure, however, is time-consuming and error-prone. We developed a method to automatically detect mitotic figures in breast cancer tissue sections based on convolutional neural networks (CNNs). Application of CNNs to hematoxylin and eosin (H&E) stained histological tissue sections is hampered by: (1) noisy and expensive reference standards established by pathologists, (2) lack of generalization due to staining variation across laboratories, and (3) high computational requirements needed to process gigapixel whole-slide images (WSIs). In this paper, we present a method to train and evaluate CNNs to specifically solve these issues in the context of mitosis detection in breast cancer WSIs. First, by combining image analysis of mitotic activity in phosphohistone-H3 (PHH3) restained slides and registration, we built a reference standard for mitosis detection in entire H&E WSIs requiring minimal manual annotation effort. Second, we designed a data augmentation strategy that creates diverse and realistic H&E stain variations by modifying the hematoxylin and eosin color channels directly. Using it during training, combined with network ensembling, resulted in a stain-invariant mitosis detector. Third, we applied knowledge distillation to reduce the computational requirements of the mitosis detection ensemble with a negligible loss of performance. The system was trained in a single-center cohort and evaluated in an independent multicenter cohort from The Cancer Genome Atlas on the three tasks of the Tumor Proliferation Assessment Challenge (TUPAC). We obtained a performance within the top 3 methods for most of the tasks of the challenge.
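The stain augmentation idea in this abstract (perturbing the hematoxylin and eosin channels directly) can be illustrated with a minimal sketch. It assumes the widely used Ruifrok-Johnston color deconvolution vectors; the function names, the `sigma` parameter, and the uniform sampling ranges are hypothetical choices for illustration, not the authors' published settings. It works on plain lists of 8-bit RGB pixels for clarity rather than speed.

```python
import math
import random

# Assumed stain vectors (rows: hematoxylin, eosin, residual channel) from
# Ruifrok-Johnston color deconvolution; these are not taken from the paper.
RGB_FROM_HED = [
    [0.65, 0.70, 0.29],
    [0.07, 0.99, 0.11],
    [0.27, 0.57, 0.78],
]


def invert_3x3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("stain matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


HED_FROM_RGB = invert_3x3(RGB_FROM_HED)


def _vec_mat(v, m):
    """Multiply a length-3 row vector by a 3x3 matrix."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]


def augment_pixel(rgb, h_scale=1.0, h_shift=0.0, e_scale=1.0, e_shift=0.0):
    """Scale and shift the H and E concentrations of one 8-bit RGB pixel."""
    # RGB -> optical density (Beer-Lambert law); the +1 avoids log(0).
    od = [-math.log10((c + 1) / 256.0) for c in rgb]
    # Unmix optical density into per-stain concentrations.
    h, e, residual = _vec_mat(od, HED_FROM_RGB)
    h = h * h_scale + h_shift
    e = e * e_scale + e_shift
    # Remix the perturbed concentrations and convert back to 8-bit RGB.
    od_aug = _vec_mat([h, e, residual], RGB_FROM_HED)
    out = []
    for density in od_aug:
        value = round(256.0 * 10.0 ** (-density) - 1.0)
        out.append(min(255, max(0, value)))
    return tuple(out)


def sample_he_factors(rng, sigma=0.05):
    """Draw one set of perturbation factors (hypothetical ranges)."""
    return {
        "h_scale": rng.uniform(1 - sigma, 1 + sigma),
        "h_shift": rng.uniform(-sigma, sigma),
        "e_scale": rng.uniform(1 - sigma, 1 + sigma),
        "e_shift": rng.uniform(-sigma, sigma),
    }


def augment_patch(pixels, factors):
    """Apply the same stain perturbation to every pixel of a patch."""
    return [augment_pixel(p, **factors) for p in pixels]
```

Drawing the factors once per patch with `sample_he_factors(random.Random(seed))` and passing them to `augment_patch` keeps the stain shift consistent across the patch; a real pipeline would likely vectorize these steps with an array library.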

Context-aware stacked convolutional neural networks for classification of breast carcinomas in whole-slide histopathology images

May 10, 2017

Abstract: Automated classification of histopathological whole-slide images (WSI) of breast tissue requires analysis at very high resolutions with a large contextual area. In this paper, we present context-aware stacked convolutional neural networks (CNN) for classification of breast WSIs into normal/benign, ductal carcinoma in situ (DCIS), and invasive ductal carcinoma (IDC). We first train a CNN using high pixel resolution patches to capture cellular level information. The feature responses generated by this model are then fed as input to a second CNN, stacked on top of the first. Training of this stacked architecture with large input patches enables learning of fine-grained (cellular) details and global interdependence of tissue structures. Our system is trained and evaluated on a dataset containing 221 WSIs of H&E stained breast tissue specimens. The system achieves an AUC of 0.962 for the binary classification of non-malignant and malignant slides and obtains a three-class accuracy of 81.3% for classification of WSIs into normal/benign, DCIS, and IDC, demonstrating its potential for routine diagnostics.
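The two figures reported in this abstract are standard slide-level metrics. As a rough sketch of how values of this kind are computed, the block below implements a pairwise (Mann-Whitney) AUC for a binary malignant versus non-malignant score and a plain multiclass accuracy. The function names and the toy labels are hypothetical, and this is not the authors' evaluation code.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 for malignant slides, 0 for non-malignant slides.
    scores: predicted malignancy score per slide; ties count as half.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative slide")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def accuracy(true_classes, predicted_classes):
    """Fraction of slides whose predicted class matches the reference."""
    if len(true_classes) != len(predicted_classes) or not true_classes:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_classes, predicted_classes))
    return correct / len(true_classes)


# Toy example with made-up slides (not data from the paper).
binary_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
three_class_acc = accuracy(
    ["normal/benign", "DCIS", "IDC", "IDC"],
    ["normal/benign", "IDC", "IDC", "IDC"],
)
```

The pairwise form is quadratic in the number of slides, which is acceptable for a few hundred WSIs; a rank-based implementation would be the usual choice for larger sets.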
