Peijie Huang

Do Large Language Model Understand Multi-Intent Spoken Language ?

Mar 08, 2024

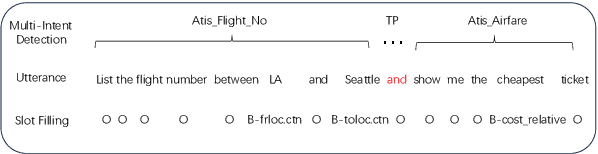

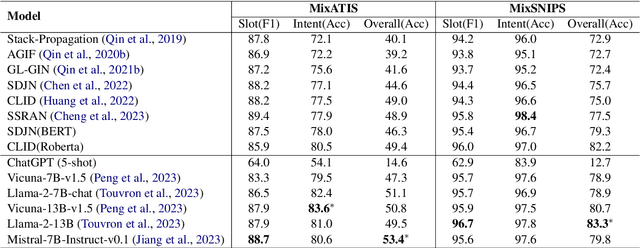

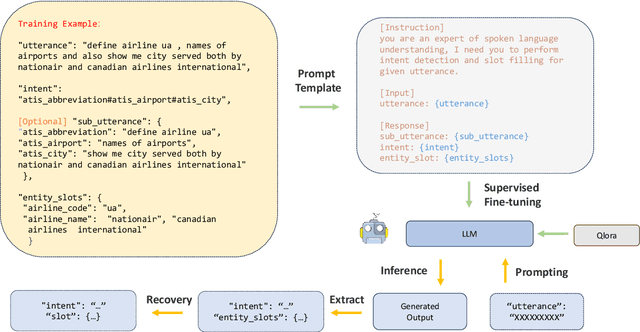

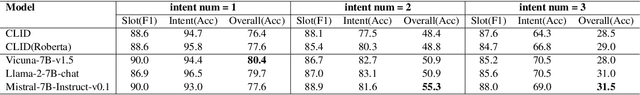

Abstract: This study marks a significant advancement by harnessing Large Language Models (LLMs) for multi-intent spoken language understanding (SLU), proposing a unique methodology that capitalizes on the generative power of LLMs within an SLU context. Our innovative technique reconfigures entity slots specifically for LLM application in multi-intent SLU environments and introduces the concept of Sub-Intent Instruction (SII), enhancing the dissection and interpretation of intricate, multi-intent communication within varied domains. The resultant datasets, dubbed LM-MixATIS and LM-MixSNIPS, are crafted from pre-existing benchmarks. Our research illustrates that LLMs can match and potentially excel beyond the capabilities of current state-of-the-art multi-intent SLU models. It further explores LLM efficacy across various intent configurations and dataset proportions. Moreover, we introduce two pioneering metrics, Entity Slot Accuracy (ESA) and Combined Semantic Accuracy (CSA), to provide an in-depth analysis of LLM proficiency in this complex field.

Sequential Recommendation with Auxiliary Item Relationships via Multi-Relational Transformer

Oct 28, 2022

Abstract: Sequential Recommendation (SR) models user dynamics and predicts the next preferred items based on the user history. Existing SR methods model the 'was interacted before' item-item transitions observed in sequences, which can be viewed as an item relationship. However, there are multiple auxiliary item relationships, e.g., items from similar brands and with similar contents in real-world scenarios. Auxiliary item relationships describe item-item affinities in multiple different semantics and alleviate the long-lasting cold start problem in the recommendation. However, it remains a significant challenge to model auxiliary item relationships in SR. To simultaneously model high-order item-item transitions in sequences and auxiliary item relationships, we propose a Multi-relational Transformer capable of modeling auxiliary item relationships for SR (MT4SR). Specifically, we propose a novel self-attention module, which incorporates arbitrary item relationships and weights item relationships accordingly. Second, we regularize intra-sequence item relationships with a novel regularization module to supervise attention computations. Third, for inter-sequence item relationship pairs, we introduce a novel inter-sequence related items modeling module. Finally, we conduct experiments on four benchmark datasets and demonstrate the effectiveness of MT4SR over state-of-the-art methods and the improvements on the cold start problem. The code is available at https://github.com/zfan20/MT4SR.
