Peibo Li

Large Language Models for Next Point-of-Interest Recommendation

Apr 19, 2024

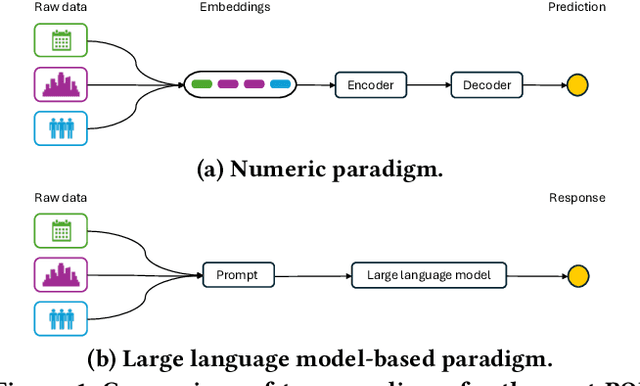

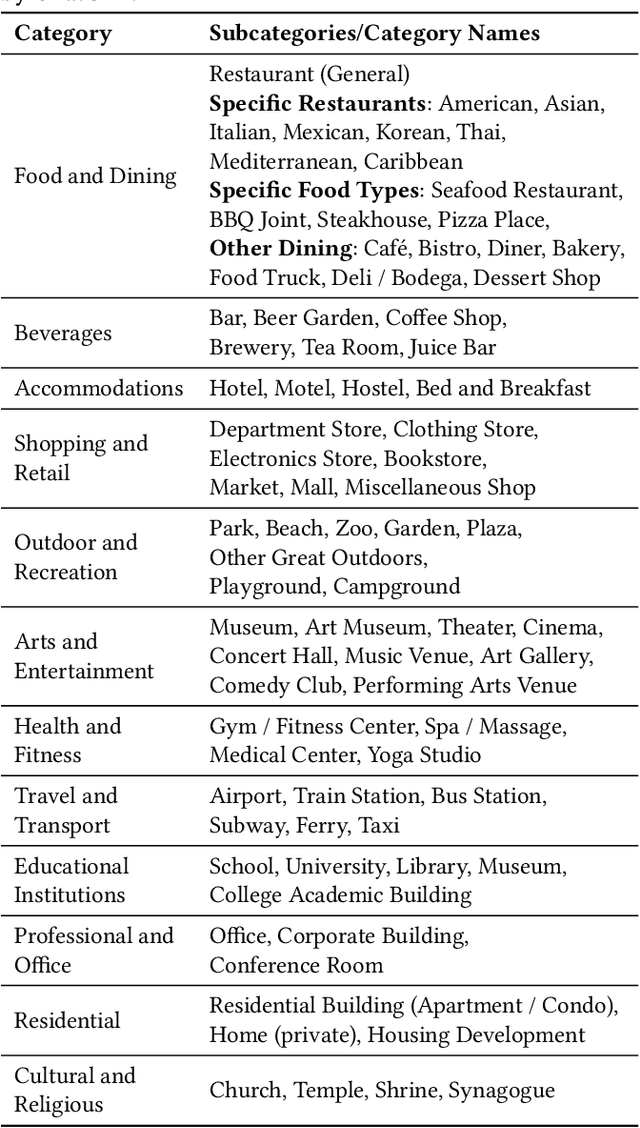

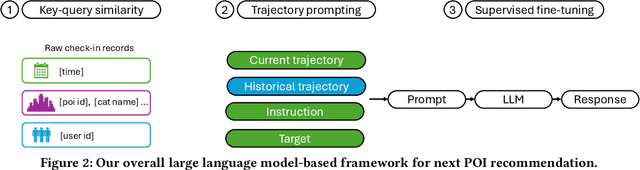

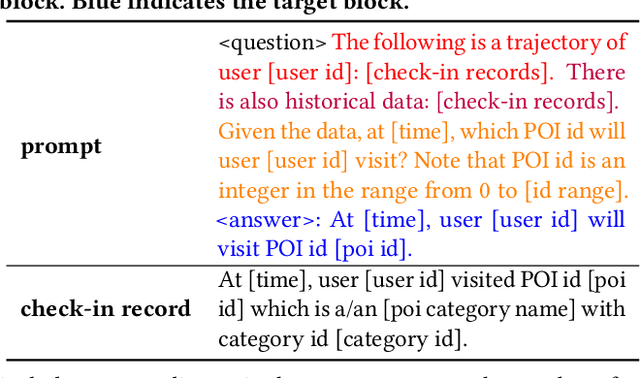

Abstract: The next Point of Interest (POI) recommendation task is to predict users' immediate next POI visit given their historical data. Location-Based Social Network (LBSN) data, which is often used for the next POI recommendation task, comes with challenges. One frequently disregarded challenge is how to effectively use the abundant contextual information present in LBSN data. Previous methods are limited by their numerical nature and fail to address this challenge. In this paper, we propose a framework that uses pretrained Large Language Models (LLMs) to tackle this challenge. Our framework allows us to preserve heterogeneous LBSN data in its original format, hence avoiding the loss of contextual information. Furthermore, our framework is capable of comprehending the inherent meaning of contextual information due to the inclusion of commonsense knowledge. In experiments, we test our framework on three real-world LBSN datasets. Our results show that the proposed framework outperforms the state-of-the-art models on all three datasets. Our analysis demonstrates the effectiveness of the proposed framework in using contextual information as well as in alleviating the commonly encountered cold-start and short-trajectory problems.

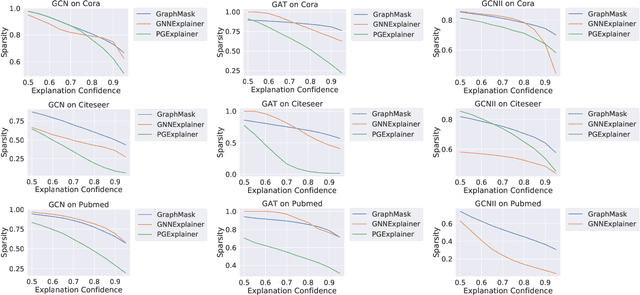

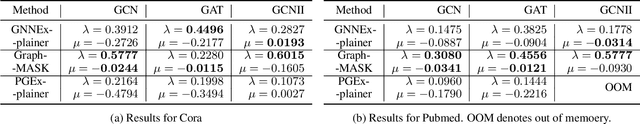

Explainability in Graph Neural Networks: An Experimental Survey

Mar 17, 2022

Abstract: Graph neural networks (GNNs) have been extensively developed for graph representation learning in various application domains. However, like all other neural network models, GNNs suffer from the black-box problem, as people cannot understand the mechanism underlying them. To solve this problem, several GNN explainability methods have been proposed to explain the decisions made by GNNs. In this survey, we give an overview of the state-of-the-art GNN explainability methods and how they are evaluated. Furthermore, we propose a new evaluation metric and conduct thorough experiments to compare GNN explainability methods on real-world datasets. We also suggest future directions for GNN explainability.
