Pedro M. de Sant Ana

Timely Communication from Sensors for Wireless Networked Control in Cloud-Based Digital Twins

Aug 05, 2024

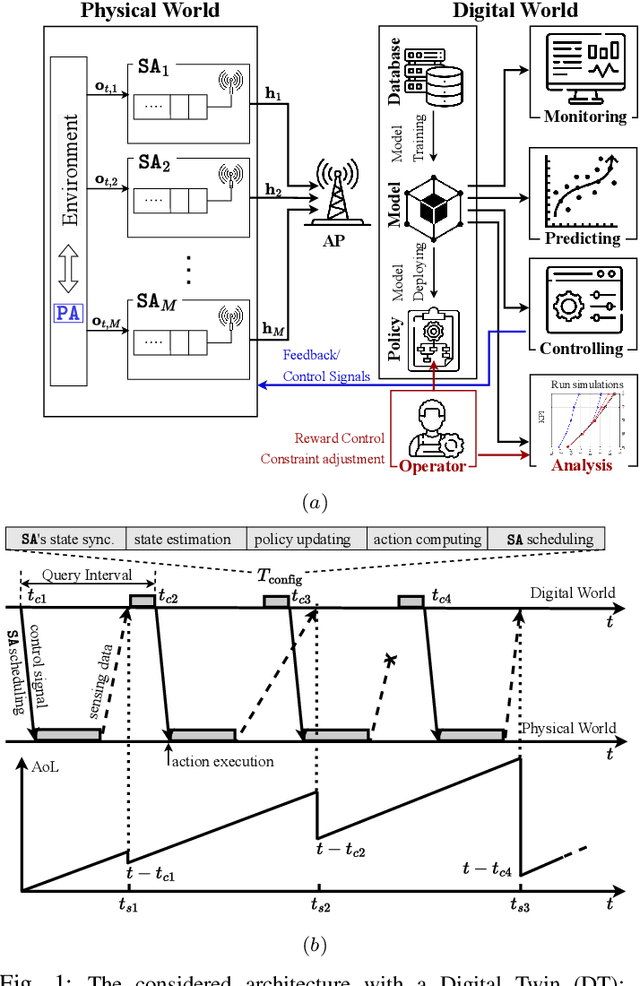

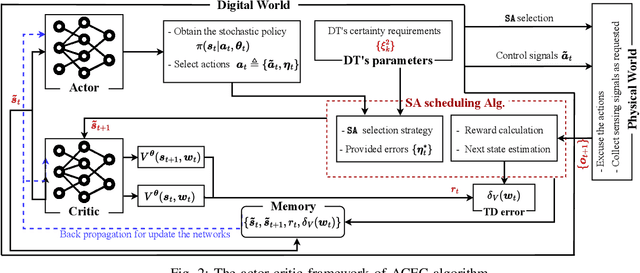

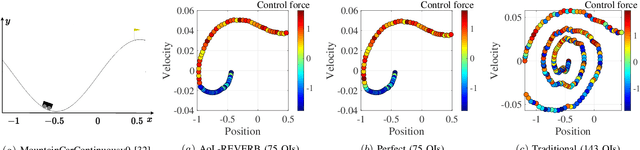

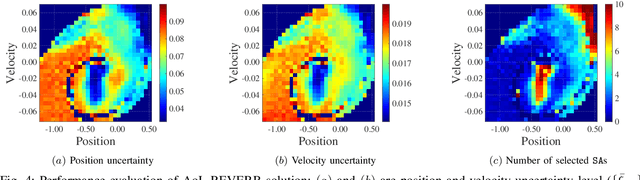

Abstract: We consider a Wireless Networked Control System (WNCS) in which sensors provide observations to build a digital twin (DT) model of the underlying system dynamics. The focus is on control, scheduling, and resource allocation for sensory observation to ensure timely delivery to the DT model deployed in the cloud. Timely and relevant information, as characterized by an optimized data acquisition policy and low latency, is instrumental in ensuring that the DT model can accurately estimate and predict system states. However, optimizing closed-loop control with the DT and acquiring data for efficient state estimation and control computing pose a non-trivial problem given the limited network resources, partial state vector information, and measurement errors encountered at distributed sensing agents. To address this, we propose the Age-of-Loop REinforcement learning and Variational Extended Kalman filter with Robust Belief (AoL-REVERB), which leverages an uncertainty-control reinforcement learning solution combined with an algorithm based on Value of Information (VoI) for performing optimal control and selecting the most informative sensors to satisfy the prediction accuracy of the DT. Numerical results demonstrate that the DT platform can offer satisfactory performance while halving the communication overhead.

Digital Twin of Industrial Networked Control System based on Value of Information

Apr 23, 2024

Abstract: The paper examines a scenario in which sensors are deployed within an Industrial Networked Control System to construct a digital twin (DT) model for a remotely operated Autonomous Guided Vehicle (AGV). The DT model, situated on a cloud platform, estimates and predicts the system's state and then formulates the optimal scheduling strategy for execution in the physical world. However, acquiring the data needed for efficient state estimation and control computation poses a significant challenge, primarily due to limited network resources, partial observation, and the need to maintain a certain confidence level for DT estimation. We propose an algorithm based on Value of Information (VoI), integrated with the Extended Kalman Filter, that delivers a polynomial-time solution for selecting the most informative subset of sensing agents for data acquisition. Additionally, we put forth an alternative solution that leverages a Graph Neural Network to determine the AGV's position with an accuracy of up to 5 cm. Our experimental validation in an industrial robotic laboratory environment yields promising results, underscoring the potential of high-accuracy DT models in practice.
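
As a rough illustration of how VoI-driven sensor selection can run in polynomial time, the sketch below greedily picks scalar sensors by how much each one shrinks the trace of a Kalman posterior covariance. This is not the authors' algorithm: the linear measurement model, the trace-reduction proxy for VoI, and the names `kalman_update_cov`, `greedy_voi_selection`, `trace_target`, and `max_sensors` are all assumptions made for the example.

```python
import numpy as np

def kalman_update_cov(P, h, r):
    """Posterior covariance after one scalar measurement z = h @ x + v, Var(v) = r."""
    Ph = P @ h
    return P - np.outer(Ph, Ph) / (h @ Ph + r)

def greedy_voi_selection(P, H, R, trace_target, max_sensors):
    """Greedily pick sensors until trace(P) <= trace_target or the budget is spent.

    VoI is approximated here (an assumption) by the reduction in trace(P)
    that a sensor's measurement would produce.
    """
    selected = []
    remaining = list(range(H.shape[0]))
    while remaining and len(selected) < max_sensors and np.trace(P) > trace_target:
        gains = [np.trace(P) - np.trace(kalman_update_cov(P, H[i], R[i]))
                 for i in remaining]
        best = remaining[int(np.argmax(gains))]
        P = kalman_update_cov(P, H[best], R[best])
        selected.append(best)
        remaining.remove(best)
    return selected, P

# Hypothetical 2-state system with three candidate sensors.
P0 = np.diag([4.0, 1.0])                 # prior state covariance
H = np.array([[1.0, 0.0],                # sensor 0 observes state 0
              [0.0, 1.0],                # sensor 1 observes state 1
              [1.0, 1.0]])               # sensor 2 observes their sum
R = np.array([0.5, 0.5, 0.5])            # measurement noise variances

selected, P_post = greedy_voi_selection(P0, H, R, trace_target=1.0, max_sensors=2)
print(selected, np.trace(P_post))
```

Each greedy round evaluates every remaining sensor once, so the cost grows polynomially with the number of sensors and the state dimension. In an EKF setting, each row of `H` would presumably come from the measurement Jacobian linearized at the current estimate.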

Value-Based Reinforcement Learning for Digital Twins in Cloud Computing

Nov 27, 2023

Abstract: The setup considered in the paper consists of sensors in a Networked Control System that are used to build a digital twin (DT) model of the system dynamics. The focus is on control, scheduling, and resource allocation for sensory observation to ensure timely delivery to the DT model deployed in the cloud. Low latency and communication timeliness are instrumental in ensuring that the DT model can accurately estimate and predict system states. However, acquiring data for efficient state estimation and control computing poses a non-trivial problem given the limited network resources, partial state vector information, and measurement errors encountered at distributed sensors. We propose the REinforcement learning and Variational Extended Kalman filter with Robust Belief (REVERB), which leverages a reinforcement learning solution combined with a Value of Information-based algorithm for performing optimal control and selecting the most informative sensors to satisfy the prediction accuracy of the DT. Numerical results demonstrate that the DT platform can offer satisfactory performance while reducing the communication overhead by up to five times.
