Pedro Abreu

Interleaved Sequence RNNs for Fraud Detection

Feb 14, 2020

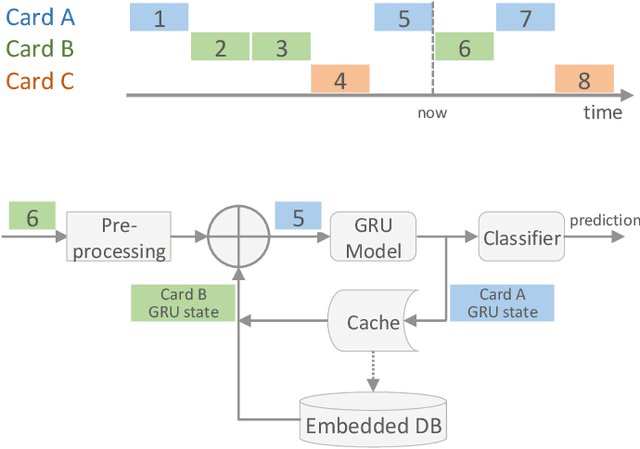

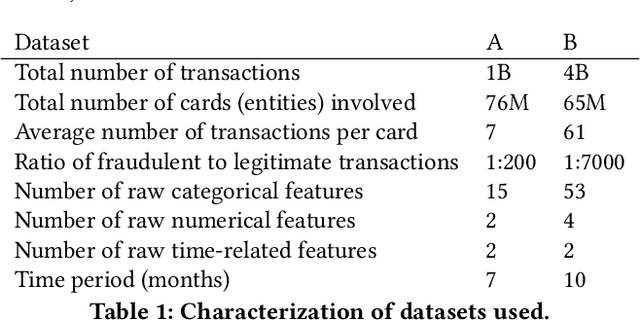

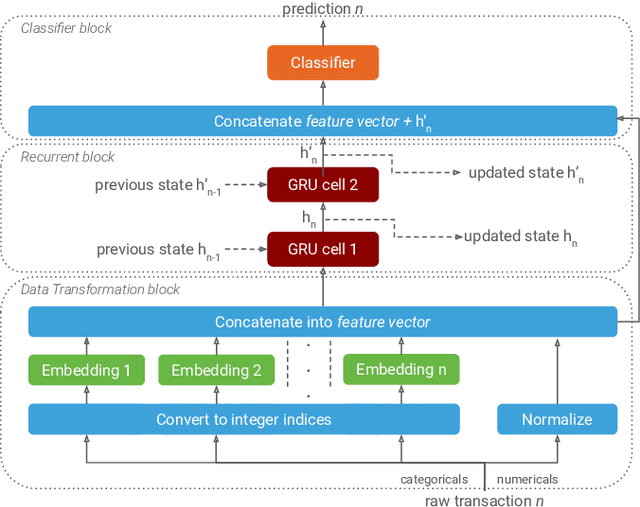

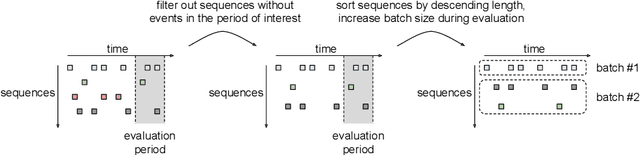

Abstract: Payment card fraud causes multibillion-dollar losses for banks and merchants worldwide, often fueling complex criminal activities. To address this, many real-time fraud detection systems use tree-based models, which demand complex feature engineering systems to efficiently enrich transactions with historical data while complying with millisecond-level latencies. In this work, we avoid those expensive features by using recurrent neural networks and treating payments as an interleaved sequence, where the history of each card is an unbounded, irregular sub-sequence. We present a complete RNN framework to detect fraud in real time, proposing an efficient ML pipeline from preprocessing to deployment. We show that these feature-free, multi-sequence RNNs outperform state-of-the-art models, saving millions of dollars in fraud detection and using fewer computational resources.
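To make the interleaved-sequence idea concrete, here is a minimal sketch in pure Python. It is not the authors' architecture: the toy recurrent update, the weights `w_in` and `w_rec`, the `HIDDEN` size, and the names `rnn_step` and `score_stream` are all assumptions made for illustration. It only shows how one hidden state per card lets each card's sub-sequence be processed in order while events from other cards arrive in between.

```python
import math
from collections import defaultdict

HIDDEN = 2  # hypothetical hidden-state size, chosen only for the example


def rnn_step(state, features, w_in=0.5, w_rec=0.9):
    """Toy recurrent update: h' = tanh(w_rec * h + w_in * sum(features)).

    The fixed scalar weights are placeholders, not trained parameters.
    """
    drive = w_in * sum(features)
    return [math.tanh(w_rec * h + drive) for h in state]


def score_stream(events):
    """Score an interleaved stream of (card_id, features) events.

    Each card keeps its own hidden state, so a card's history is carried
    forward across events belonging to other cards.
    """
    states = defaultdict(lambda: [0.0] * HIDDEN)
    scores = []
    for card_id, features in events:
        new_state = rnn_step(states[card_id], features)
        states[card_id] = new_state
        # Toy read-out: squash the mean hidden activation into (0, 1).
        mean_h = sum(new_state) / len(new_state)
        scores.append((card_id, 1.0 / (1.0 + math.exp(-mean_h))))
    return scores, dict(states)


# Two cards whose transactions are interleaved in arrival order.
events = [
    ("card_a", [0.1, 0.2]),
    ("card_b", [1.0, 0.5]),
    ("card_a", [0.3, 0.1]),
]
scores, states = score_stream(events)
```

Because the state is keyed by card, the scores for `card_a` are the same whether its events are scored alone or interleaved with `card_b`. A production system would presumably also need to persist and evict these states, which this sketch does not attempt.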

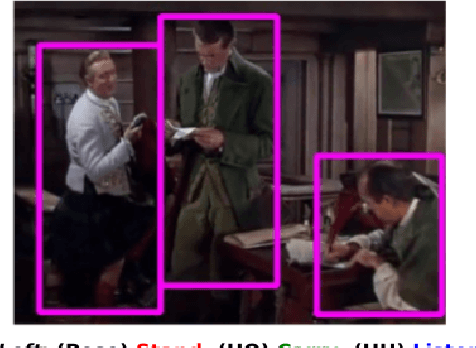

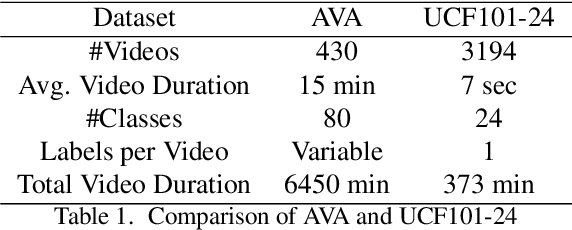

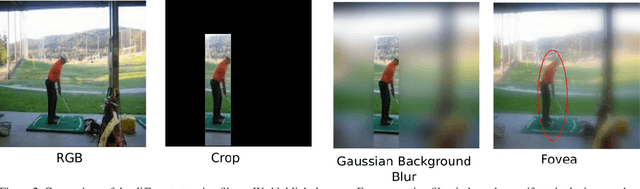

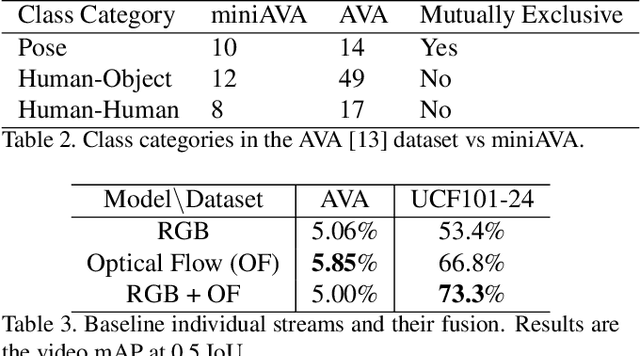

Attention Filtering for Multi-person Spatiotemporal Action Detection on Deep Two-Stream CNN Architectures

Jul 21, 2019

Abstract: Action detection and recognition tasks have been the target of much focus in the computer vision community due to their many applications, namely security, robotics, and recommendation systems. Recently, datasets like AVA have provided multi-person, multi-label, spatiotemporal action detection and recognition challenges. Two-stream CNN approaches cannot discern which portions of the input to use for classification, which becomes a limitation when the vision task involves several people with several labels. We address this limitation and improve the state-of-the-art performance of two-stream CNNs. In this paper we present four contributions: our fovea attention filtering, which highlights targets for classification without discarding background; a generalized binary loss function designed for the AVA dataset; miniAVA, a partition of AVA that maintains temporal continuity and class distribution with only one tenth of the dataset size; and ablation studies on alternative attention filters. Our method, using fovea attention filtering and our generalized binary loss, achieves a relative video mAP improvement of 20% over the two-stream baseline on AVA, and is competitive with the state of the art on UCF101-24. We also show a relative video mAP improvement of 12.6% when using our generalized binary loss over the standard sum-of-sigmoids.
