Pedro A. Tsividis

Growing knowledge culturally across generations to solve novel, complex tasks

Jul 28, 2021

Abstract: Knowledge built culturally across generations allows humans to learn far more than an individual could glean from their own experience in a lifetime. Cultural knowledge in turn rests on language: language is the richest record of what previous generations believed, valued, and practiced. The power and mechanisms of language as a means of cultural learning, however, are not well understood. We take a first step towards reverse-engineering cultural learning through language. We developed a suite of complex high-stakes tasks in the form of minimalist-style video games, which we deployed in an iterated learning paradigm. Game participants were limited to only two attempts (two lives) to beat each game and were allowed to write a message to a future participant who read the message before playing. Knowledge accumulated gradually across generations, allowing later generations to advance further in the games and perform more efficient actions. Multigenerational learning followed a strikingly similar trajectory to individuals learning alone with an unlimited number of lives. These results suggest that language provides a sufficient medium to express and accumulate the knowledge people acquire in these diverse tasks: the dynamics of the environment, valuable goals, dangerous risks, and strategies for success. The video game paradigm we pioneer here is thus a rich test bed for theories of cultural transmission and learning from language.

Human-Level Reinforcement Learning through Theory-Based Modeling, Exploration, and Planning

Jul 27, 2021

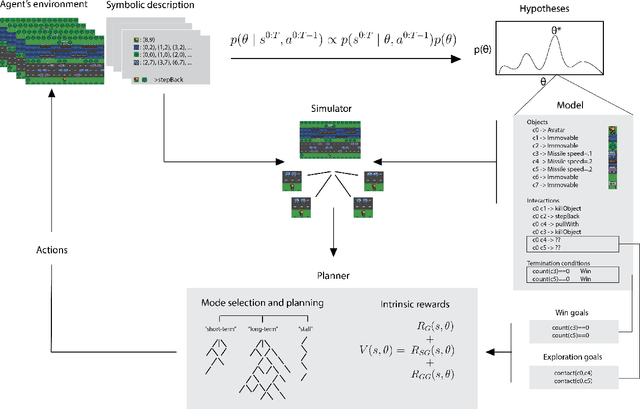

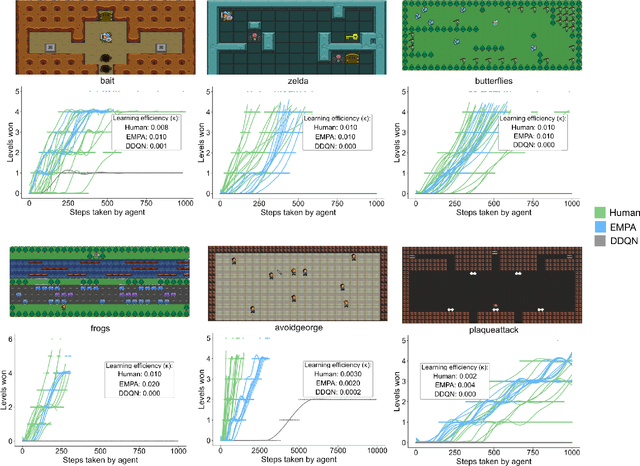

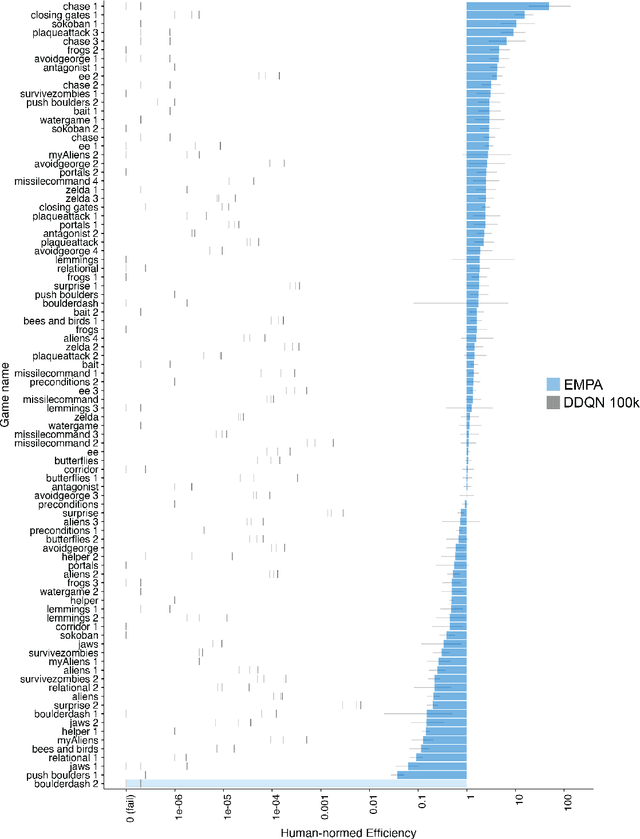

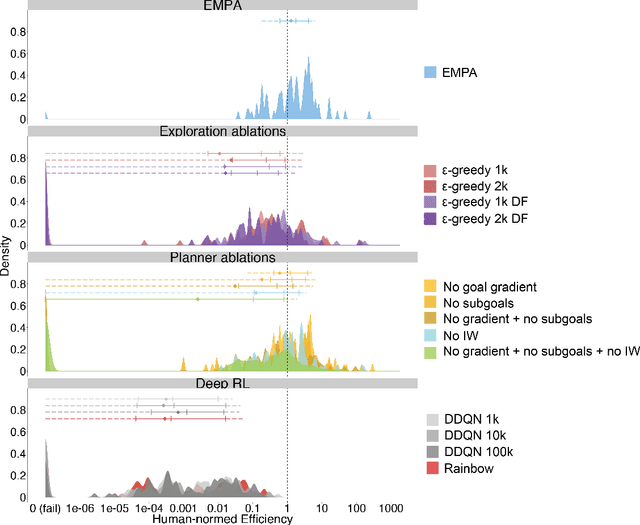

Abstract: Reinforcement learning (RL) studies how an agent comes to achieve reward in an environment through interactions over time. Recent advances in machine RL have surpassed human expertise at the world's oldest board games and many classic video games, but they require vast quantities of experience to learn successfully -- none of today's algorithms account for the human ability to learn so many different tasks, so quickly. Here we propose a new approach to this challenge based on a particularly strong form of model-based RL which we call Theory-Based Reinforcement Learning, because it uses human-like intuitive theories -- rich, abstract, causal models of physical objects, intentional agents, and their interactions -- to explore and model an environment, and plan effectively to achieve task goals. We instantiate the approach in a video game playing agent called EMPA (the Exploring, Modeling, and Planning Agent), which performs Bayesian inference to learn probabilistic generative models expressed as programs for a game-engine simulator, and runs internal simulations over these models to support efficient object-based, relational exploration and heuristic planning. EMPA closely matches human learning efficiency on a suite of 90 challenging Atari-style video games, learning new games in just minutes of game play and generalizing robustly to new game situations and new levels. The model also captures fine-grained structure in people's exploration trajectories and learning dynamics. Its design and behavior suggest a way forward for building more general human-like AI systems.
