Paweł Wachel

Kernel-based learning with guarantees for multi-agent applications

Apr 15, 2024

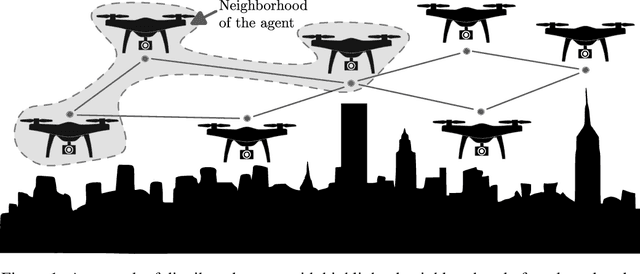

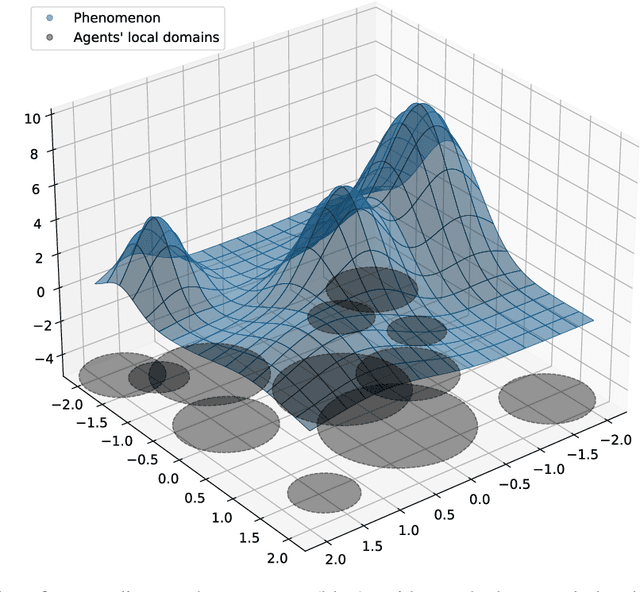

Abstract: This paper addresses a kernel-based learning problem for a network of agents locally observing a latent multidimensional, nonlinear phenomenon in a noisy environment. We propose a learning algorithm that requires only mild a priori knowledge about the phenomenon under investigation and delivers a model with corresponding non-asymptotic high probability error bounds. Both non-asymptotic analysis of the method and numerical simulation results are presented and discussed in the paper.

A computationally lightweight safe learning algorithm

Sep 07, 2023

Abstract: Safety is an essential asset when learning control policies for physical systems, as violating safety constraints during training can lead to expensive hardware damage. In response to this need, the field of safe learning has emerged with algorithms that can provide probabilistic safety guarantees without knowledge of the underlying system dynamics. Those algorithms often rely on Gaussian process inference. Unfortunately, Gaussian process inference scales cubically with the number of data points, limiting applicability to high-dimensional and embedded systems. In this paper, we propose a safe learning algorithm that provides probabilistic safety guarantees but leverages the Nadaraya-Watson estimator instead of Gaussian processes. For the Nadaraya-Watson estimator, we can reach logarithmic scaling with the number of data points. We provide theoretical guarantees for the estimates, embed them into a safe learning algorithm, and show numerical experiments on a simulated seven-degrees-of-freedom robot manipulator.
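For readers unfamiliar with the Nadaraya-Watson estimator named in this abstract, the following is a minimal one-dimensional sketch of the textbook estimator, a kernel-weighted average of observed outputs. It is not the paper's algorithm: the Gaussian kernel, the function names, and the `bandwidth` parameter are assumptions made for illustration, and this naive version costs linear time per query, so it reproduces neither the logarithmic scaling nor the safety guarantees described above.

```python
import numpy as np


def gaussian_kernel(u):
    """Unnormalized Gaussian kernel (an assumed choice, not from the paper)."""
    return np.exp(-0.5 * u ** 2)


def nadaraya_watson(x_query, x_train, y_train, bandwidth):
    """Textbook Nadaraya-Watson estimate at each query point (1-D inputs).

    The estimate is sum_i K((x - x_i) / h) * y_i / sum_i K((x - x_i) / h).
    """
    x_query = np.asarray(x_query, dtype=float).reshape(-1, 1)   # shape (q, 1)
    x_train = np.asarray(x_train, dtype=float).reshape(1, -1)   # shape (1, n)
    y_train = np.asarray(y_train, dtype=float)                  # shape (n,)

    # Kernel weight of every training point for every query point: shape (q, n).
    weights = gaussian_kernel((x_query - x_train) / bandwidth)

    # Guard against division by zero when all weights underflow.
    denom = np.maximum(weights.sum(axis=1), 1e-12)
    return (weights @ y_train) / denom


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(-3.0, 3.0, size=200)
    y = np.sin(x) + 0.1 * rng.standard_normal(200)   # noisy observations
    print(nadaraya_watson([0.0, 1.0], x, y, bandwidth=0.3))
```

A smaller `bandwidth` tracks the data more closely at the cost of higher variance, while a larger one smooths more aggressively.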

Frequency-Supported Neural Networks for Nonlinear Dynamical System Identification

May 10, 2023

Abstract: Neural networks are a very general type of model capable of learning various relationships between multiple variables. One example of such relationships, particularly interesting in practice, is the input-output relation of nonlinear systems, which has a multitude of applications. Studying models capable of estimating such relations is a broad discipline with numerous theoretical and practical results. Neural networks are very general, but multiple special cases exist, including convolutional neural networks and recurrent neural networks, which are tailored to specific applications: image and sequence processing, respectively. We formulate a hypothesis that adjusting the general network structure by incorporating frequency information into it should result in a network especially well suited to nonlinear system identification. Moreover, we show that it is possible to add this frequency information without loss of generality from a theoretical perspective. We call this new structure Frequency-Supported Neural Network (FSNN) and empirically investigate its properties.

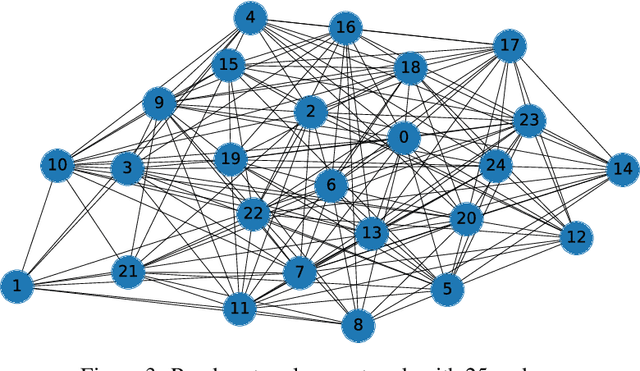

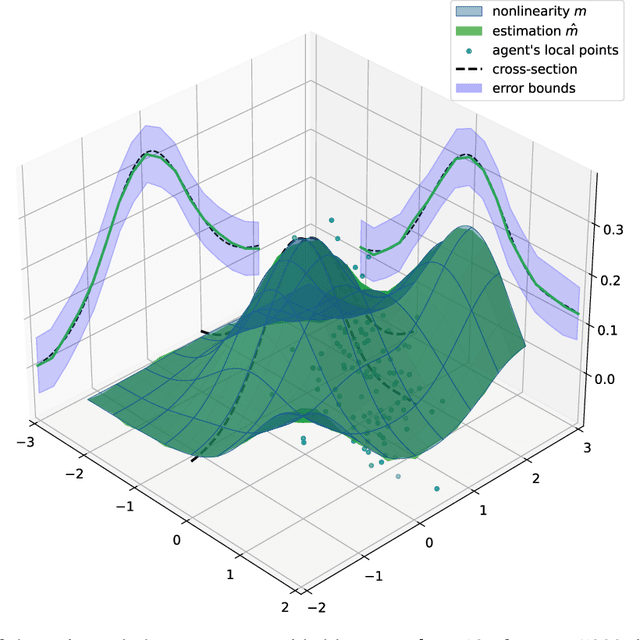

Decentralized diffusion-based learning under non-parametric limited prior knowledge

May 05, 2023

Abstract: We study the problem of diffusion-based network learning of a nonlinear phenomenon, $m$, from local agents' measurements collected in a noisy environment. For a decentralized network in which information spreads only between directly neighboring nodes, we propose a non-parametric learning algorithm that avoids raw data exchange and requires only mild a priori knowledge about $m$. Non-asymptotic estimation error bounds are derived for the proposed method. Its potential applications are illustrated through simulation experiments.
