Pavel Vlasanek

Unproportional mosaicing

Mar 06, 2023

Abstract: Data shift is the gap between the data distribution used for training and the data distribution encountered in the real world. Data augmentations help narrow this gap by generating new data samples, increasing data variability and data-space coverage. We present a new data augmentation: Unproportional mosaicing (Unprop). Our augmentation randomly splits an image into blocks of various sizes and swaps their contents (pixels) while maintaining the block sizes. Our method achieves a lower error rate when combined with other state-of-the-art augmentations.
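The block-swapping idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not say how many blocks are used or how content is fitted into a block of a different size, so the single random split point (four blocks), the nearest-neighbour resizing, and the names `unprop_sketch` and `resize_nearest` are all assumptions made for illustration. The image is assumed to be a NumPy array at least 4 pixels on each side.

```python
import numpy as np

def resize_nearest(block, out_h, out_w):
    """Nearest-neighbour resize of an (h, w, ...) array to (out_h, out_w, ...)."""
    h, w = block.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return block[rows][:, cols]

def unprop_sketch(image, rng):
    """Hypothetical Unprop-style augmentation: split, shuffle, refit."""
    h, w = image.shape[:2]
    # Assumption: one random split point, kept away from the borders
    # so that all four blocks are non-empty.
    y = int(rng.integers(h // 4, 3 * h // 4 + 1))
    x = int(rng.integers(w // 4, 3 * w // 4 + 1))
    # Four blocks of generally different sizes: (row slice, column slice).
    slots = [
        (slice(0, y), slice(0, x)), (slice(0, y), slice(x, w)),
        (slice(y, h), slice(0, x)), (slice(y, h), slice(x, w)),
    ]
    order = rng.permutation(len(slots))
    out = np.empty_like(image)
    for dst, src in zip(slots, order):
        content = image[slots[src]]
        dst_h = dst[0].stop - dst[0].start
        dst_w = dst[1].stop - dst[1].start
        # The destination block keeps its size; the incoming content is
        # resized to fit it (an assumption, see the lead-in).
        out[dst] = resize_nearest(content, dst_h, dst_w)
    return out
```

Calling `unprop_sketch(image, np.random.default_rng(0))` returns an array with the same shape and dtype as `image`. Nearest-neighbour resizing is used here only because it needs no extra dependencies and introduces no new pixel values.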

Poly-YOLO: higher speed, more precise detection and instance segmentation for YOLOv3

May 29, 2020

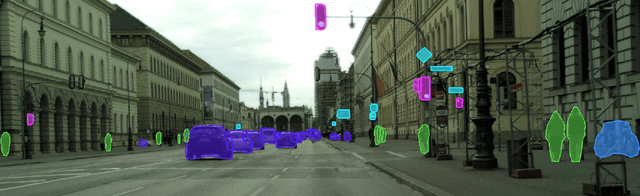

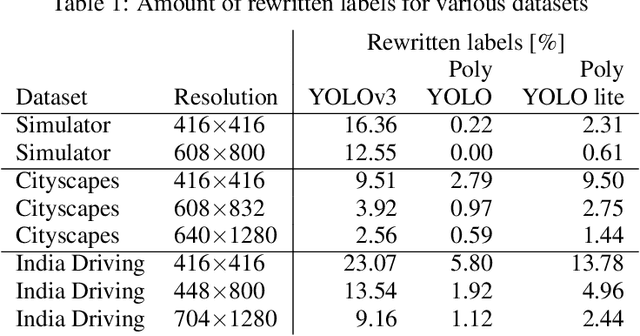

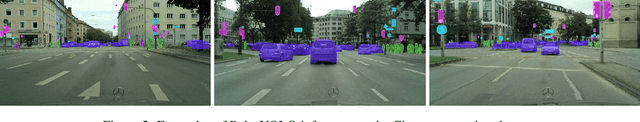

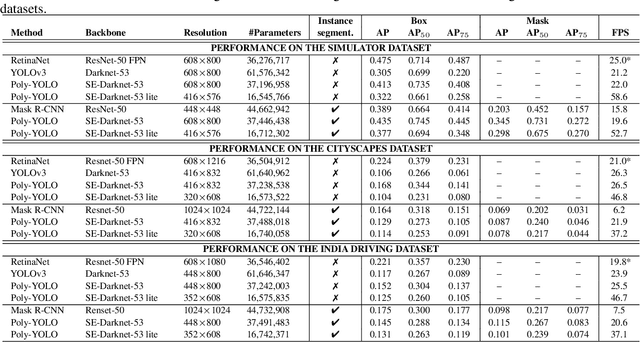

Abstract: We present Poly-YOLO, a new version of YOLO with better performance and extended with instance segmentation. Poly-YOLO builds on the original ideas of YOLOv3 and removes two of its weaknesses: a large number of rewritten labels and an inefficient distribution of anchors. Poly-YOLO reduces these issues by aggregating features from a light SE-Darknet-53 backbone with a hypercolumn technique, using stairstep upsampling, and producing a single-scale output with high resolution. In comparison with YOLOv3, Poly-YOLO has only 60% of its trainable parameters but improves mAP by a relative 40%. We also present Poly-YOLO lite, with fewer parameters and a lower output resolution. It has the same precision as YOLOv3, but it is three times smaller and twice as fast, and is thus suitable for embedded devices. Finally, Poly-YOLO performs instance segmentation using bounding polygons. The network is trained to detect size-independent polygons defined on a polar grid. Each polygon vertex is predicted together with its confidence, and therefore Poly-YOLO produces polygons with a varying number of vertices.
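To make the polar-grid polygon idea concrete, here is a minimal sketch of how per-sector predictions could be turned into a polygon with a varying number of vertices. It is an illustration under stated assumptions, not Poly-YOLO's actual decoding: the function name `decode_polygon`, the `(distance, confidence)` input layout, the `scale` factor standing in for size normalisation, the 0.5 threshold, and the placement of each vertex at the start angle of its sector are all hypothetical.

```python
import math

def decode_polygon(center, sectors, scale=1.0, conf_threshold=0.5):
    """Convert polar predictions to Cartesian polygon vertices.

    center:  (cx, cy) of the polar grid (assumed, e.g. the box centre).
    sectors: list of (distance, confidence), one per equal angular sector.
    scale:   multiplies the size-independent distance back to pixels
             (assumed normalisation, not taken from the abstract).
    """
    cx, cy = center
    step = 2 * math.pi / len(sectors)
    vertices = []
    for i, (dist, conf) in enumerate(sectors):
        # Sectors with low confidence contribute no vertex, which is why
        # the resulting polygon can have a varying number of vertices.
        if conf < conf_threshold:
            continue
        angle = i * step  # simplification: vertex at the sector's start angle
        vertices.append((cx + scale * dist * math.cos(angle),
                         cy + scale * dist * math.sin(angle)))
    return vertices
```

For example, with four sectors of which one falls below the threshold, `decode_polygon((0.0, 0.0), [(1.0, 0.9), (1.0, 0.2), (1.0, 0.9), (1.0, 0.9)])` yields a three-vertex polygon.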
