Pavel Rytíř

ExMAG: Learning of Maximally Ancestral Graphs

Mar 11, 2025

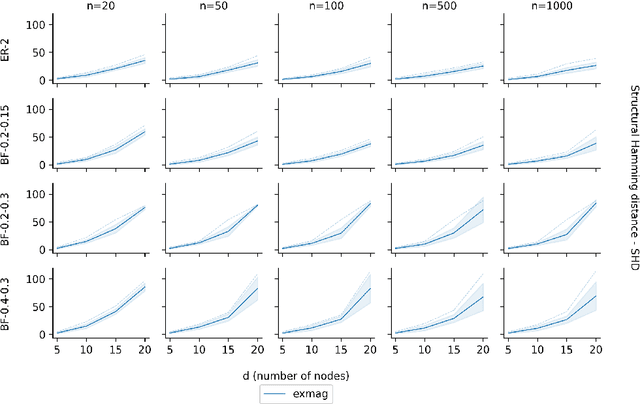

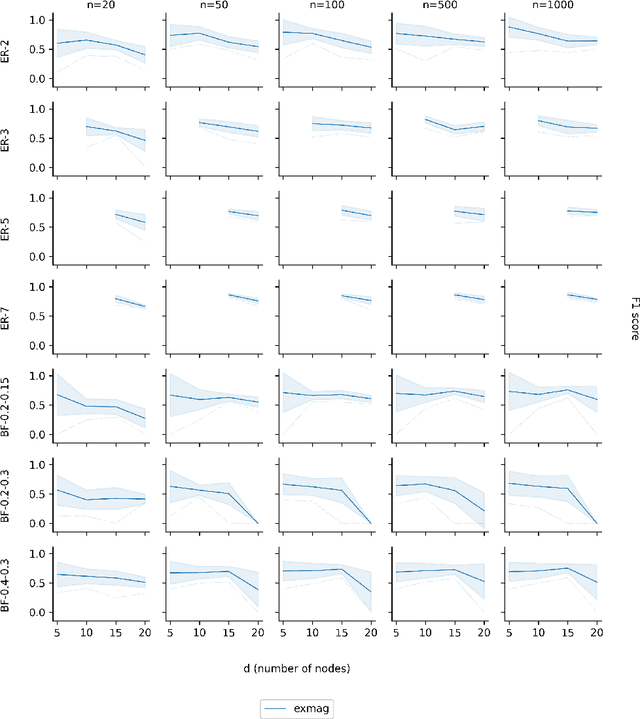

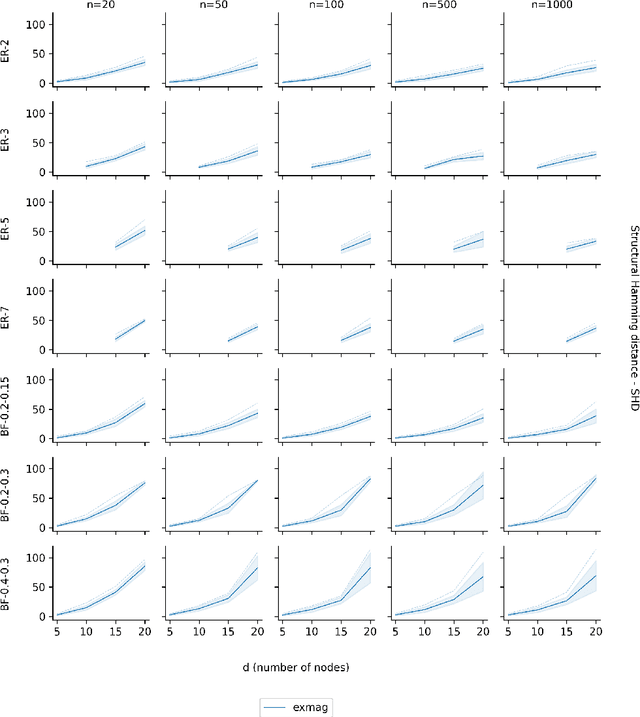

Abstract: As one transitions from statistical to causal learning, one is seeking the most appropriate causal model. Dynamic Bayesian networks are a popular model, where a weighted directed acyclic graph represents the causal relationships. Stochastic processes are represented by its vertices, and weighted oriented edges suggest the strength of the causal relationships. When there are confounders, one would like to utilize both oriented edges (when the direction of causality is clear) and edges that are not oriented (when there is a confounder), yielding mixed graphs. A little-studied extension of acyclicity to this mixed-graph setting is known as maximally ancestral graphs. We propose a score-based learning algorithm for learning maximally ancestral graphs. A mixed-integer quadratic program is formulated, and an algorithmic approach is proposed, in which the pre-generation of exponentially many constraints is avoided by generating only violated constraints in the so-called branch-and-cut ("lazy constraint") method. Comparing the novel approach to the state of the art, we show that the proposed approach turns out to produce more accurate results when applied to small and medium-sized synthetic instances containing up to 25 variables.

ExDBN: Exact learning of Dynamic Bayesian Networks

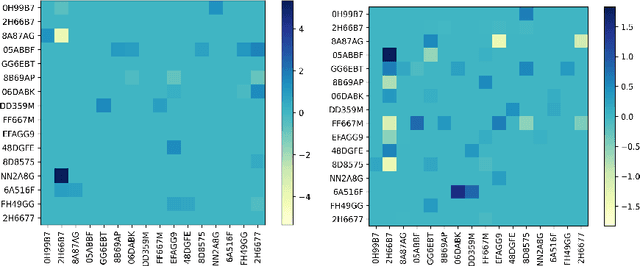

Oct 21, 2024

Abstract: Causal learning from data has received much attention in recent years. One way of capturing causal relationships is by utilizing Bayesian networks. There, one recovers a weighted directed acyclic graph, in which random variables are represented by vertices, and the weights associated with each edge represent the strengths of the causal relationships between them. This concept is extended to capture dynamic effects by introducing a dependency on past data, which may be captured by the structural equation model, which is utilized in the present contribution to formulate a score-based learning approach. A mixed-integer quadratic program is formulated and an algorithmic solution proposed, in which the pre-generation of exponentially many acyclicity constraints is avoided by utilizing the so-called branch-and-cut ("lazy constraint") method. Comparing the novel approach to the state of the art, we show that the proposed approach turns out to produce excellent results when applied to small and medium-sized synthetic instances of up to 25 time-series. Lastly, two interesting applications in bio-science and finance, to which the method is directly applied, further stress the opportunities in developing highly accurate, globally convergent solvers that can handle modest instances.

ExDAG: Exact learning of DAGs

Jun 21, 2024

Abstract: There has been a growing interest in causal learning in recent years. Commonly used representations of causal structures, including Bayesian networks and structural equation models (SEM), take the form of directed acyclic graphs (DAGs). We provide a novel mixed-integer quadratic programming formulation and associated algorithm that identifies DAGs on up to 50 vertices, where these are identifiable. We call this method ExDAG, which stands for Exact learning of DAGs. Although there is a superexponential number of constraints that prevent the formation of cycles, the algorithm adds constraints violated by solutions found, rather than imposing all constraints in each continuous-valued relaxation. Our empirical results show that ExDAG outperforms local state-of-the-art solvers in terms of precision and outperforms state-of-the-art global solvers with respect to scaling, when considering Gaussian noise. We also provide validation with respect to other noise distributions.
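
The idea of adding only violated cycle constraints can be illustrated with a small separation routine. The sketch below is not taken from the papers and makes several assumptions: the candidate solution is given as a binary adjacency matrix, the names `find_directed_cycle` and `cycle_cut` are hypothetical, and the cut has the common form "the edge indicators on a cycle sum to at most the cycle length minus one". A solver callback would call such a routine on each candidate solution and add the returned cut lazily.

```python
from typing import List, Optional, Tuple

WHITE, GRAY, BLACK = 0, 1, 2  # DFS states: unvisited, on the stack, finished


def find_directed_cycle(adj: List[List[int]]) -> Optional[List[int]]:
    """Return the vertices of one directed cycle in order, or None if acyclic.

    adj[u][v] == 1 means the candidate solution contains the edge u -> v.
    (Hypothetical helper; uses recursion, so it suits small graphs.)
    """
    n = len(adj)
    color = [WHITE] * n
    parent = [-1] * n

    def dfs(u: int) -> Optional[List[int]]:
        color[u] = GRAY
        for v in range(n):
            if not adj[u][v]:
                continue
            if color[v] == GRAY:
                # Back edge u -> v closes the cycle v -> ... -> u -> v.
                cycle = [u]
                while cycle[-1] != v:
                    cycle.append(parent[cycle[-1]])
                cycle.reverse()
                return cycle
            if color[v] == WHITE:
                parent[v] = u
                found = dfs(v)
                if found is not None:
                    return found
        color[u] = BLACK
        return None

    for s in range(n):
        if color[s] == WHITE:
            found = dfs(s)
            if found is not None:
                return found
    return None


def cycle_cut(cycle: List[int]) -> Tuple[List[Tuple[int, int]], int]:
    """Return (edges, rhs) for the cut: sum of edge indicators <= rhs."""
    k = len(cycle)
    edges = [(cycle[i], cycle[(i + 1) % k]) for i in range(k)]
    return edges, k - 1


# A candidate solution containing the cycle 0 -> 1 -> 2 -> 0.
candidate = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
cycle = find_directed_cycle(candidate)
if cycle is not None:
    edges, rhs = cycle_cut(cycle)
    print(f"violated cut: sum over {edges} <= {rhs}")
```

If the routine returns `None`, the candidate is already acyclic and no cut is needed; otherwise only the single violated constraint is added, so the exponentially large constraint family is never enumerated up front.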
