Paul Sanzenbacher

Learning Neural Light Transport

Jun 05, 2020

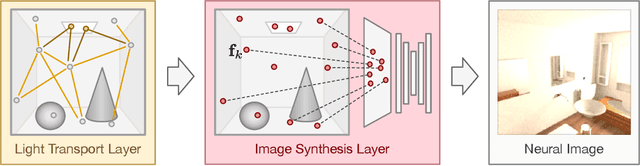

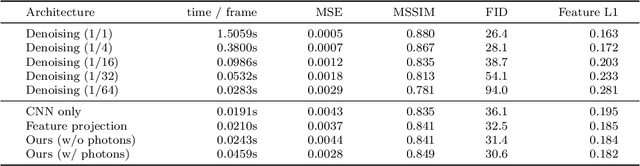

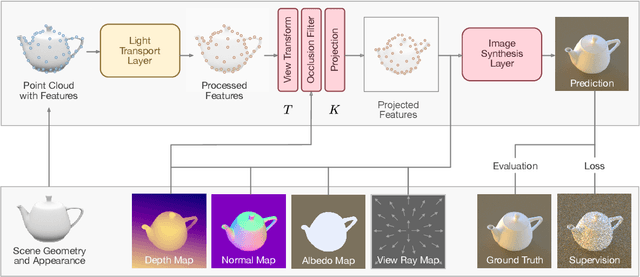

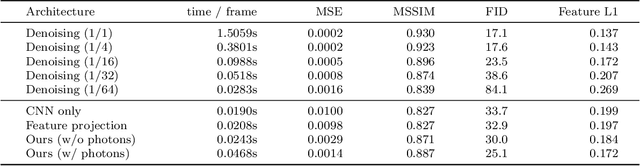

Abstract: In recent years, deep generative models have gained significance due to their ability to synthesize natural-looking images with applications ranging from virtual reality to data augmentation for training computer vision models. While existing models are able to faithfully learn the image distribution of the training set, they often lack controllability as they operate in 2D pixel space and do not model the physical image formation process. In this work, we investigate the importance of 3D reasoning for photorealistic rendering. We present an approach for learning light transport in static and dynamic 3D scenes using a neural network with the goal of predicting photorealistic images. In contrast to existing approaches that operate in the 2D image domain, our approach reasons in both 3D and 2D space, thus enabling global illumination effects and manipulation of 3D scene geometry. Experimentally, we find that our model is able to produce photorealistic renderings of static and dynamic scenes. Moreover, it compares favorably to baselines which combine path tracing and image denoising at the same computational budget.

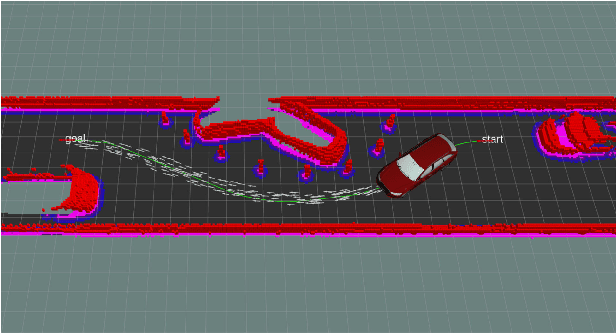

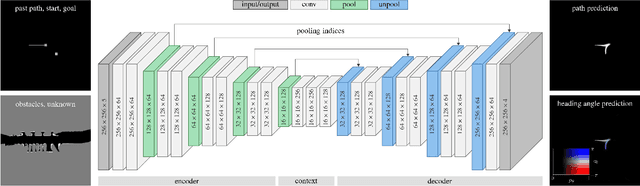

Learning to Predict Ego-Vehicle Poses for Sampling-Based Nonholonomic Motion Planning

Feb 01, 2019

Abstract: Sampling-based motion planning is an effective tool to compute safe trajectories for automated vehicles in complex environments. However, fast convergence to the optimal solution can only be ensured with the use of problem-specific sampling distributions. Due to the large variety of driving situations within the context of automated driving, it is very challenging to manually design such distributions. This paper therefore introduces a data-driven approach utilizing a deep convolutional neural network (CNN): given the current driving situation, future ego-vehicle poses can be generated directly from the output of the CNN, which allows the motion planner to be guided efficiently towards the optimal solution. A benchmark highlights that the CNN predicts future vehicle poses with higher accuracy than uniform sampling and a state-of-the-art A*-based approach. Combining this CNN-guided sampling with the motion planner Bidirectional RRT* reduces the computation time by up to an order of magnitude and yields faster convergence to a lower cost as well as a success rate of 100% in the tested scenarios.
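As a rough illustration of the idea in the abstract above, the following sketch shows one way a predicted heatmap over grid cells could bias the samples drawn by a planner while a uniform component keeps every cell reachable. The heatmap layout, the function name `sample_cells`, and the mixing parameter `uniform_ratio` are assumptions made for this example; they are not taken from the paper, which may generate poses differently.

```python
import random


def sample_cells(heatmap, n_samples, uniform_ratio=0.1, seed=None):
    """Draw (row, col) grid cells, biased towards high heatmap values.

    heatmap: 2D list of non-negative scores (hypothetical network output).
    uniform_ratio: share of probability mass spread uniformly over all cells
                   (an assumed safeguard, not a detail from the paper).
    """
    rng = random.Random(seed)
    n_cols = len(heatmap[0])

    # Flatten the grid and normalise the scores into a distribution.
    flat = [float(v) for row in heatmap for v in row]
    total = sum(flat)
    if total <= 0:
        raise ValueError("heatmap must contain positive mass")
    n_cells = len(flat)

    # Mix the learned distribution with a uniform one.
    weights = [
        (1.0 - uniform_ratio) * v / total + uniform_ratio / n_cells
        for v in flat
    ]

    # Sample flat indices and convert them back to (row, col) pairs.
    picks = rng.choices(range(n_cells), weights=weights, k=n_samples)
    return [divmod(i, n_cols) for i in picks]


heat = [[0, 0, 0],
        [0, 0, 5],
        [0, 0, 0]]
cells = sample_cells(heat, 20, uniform_ratio=0.0, seed=0)
```

With `uniform_ratio=0.0` and a single non-zero cell, every sample falls on that cell; a larger ratio trades guidance for coverage of the rest of the grid.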

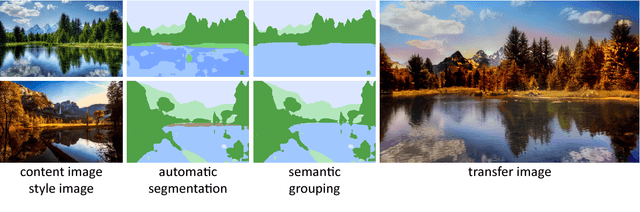

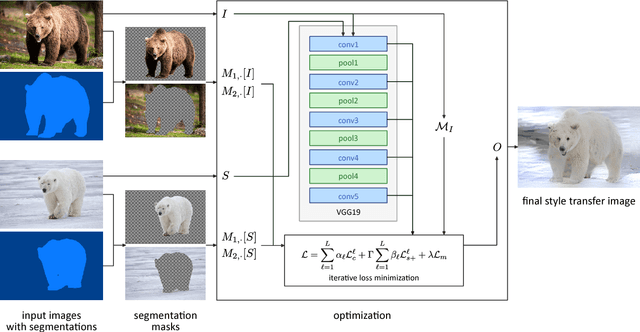

Automated Deep Photo Style Transfer

Jan 12, 2019

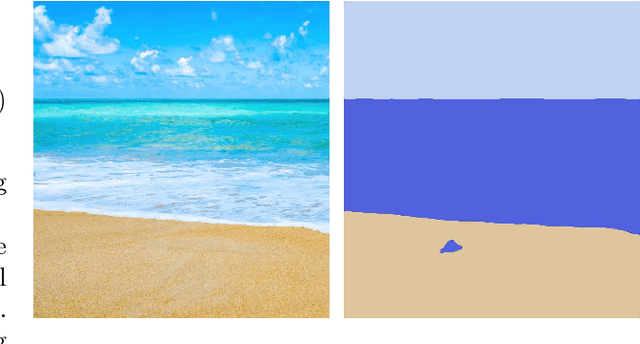

Abstract: Photorealism is a complex concept that cannot easily be formulated mathematically. Deep Photo Style Transfer is an attempt to transfer the style of a reference image to a content image while preserving its photorealism. This is achieved by introducing a constraint that prevents distortions in the content image and by applying the style transfer independently for semantically different parts of the images. In addition, an automated segmentation process is presented that consists of a neural-network-based segmentation method followed by a semantic grouping step. To further improve the results, a measure for image aesthetics is used and elaborated. If the content and the style image are sufficiently similar, the resulting images look very realistic. With the automation of the image segmentation, the pipeline becomes completely independent of any user interaction, which allows for new applications.
