Paul Gajewski

Context-Aware Command Understanding for Tabletop Scenarios

Oct 10, 2024

Abstract: This paper presents a novel hybrid algorithm designed to interpret natural human commands in tabletop scenarios. By integrating multiple sources of information, including speech, gestures, and scene context, the system extracts actionable instructions for a robot, identifying relevant objects and actions. The system operates in a zero-shot fashion, without reliance on predefined object models, enabling flexible and adaptive use in various environments. We assess the integration of multiple deep learning models, evaluating their suitability for deployment in real-world robotic setups. Our algorithm performs robustly across different tasks, combining language processing with visual grounding. In addition, we release a small dataset of video recordings used to evaluate the system. This dataset captures real-world interactions in which a human provides instructions in natural language to a robot, a contribution to future research on human-robot interaction. We discuss the strengths and limitations of the system, with particular focus on how it handles multimodal command interpretation, and its ability to be integrated into symbolic robotic frameworks for safe and explainable decision-making.

Solving Multi-Goal Robotic Tasks with Decision Transformer

Oct 08, 2024

Abstract: Artificial intelligence plays a crucial role in robotics, with reinforcement learning (RL) emerging as one of the most promising approaches for robot control. However, several key challenges hinder its broader application. First, many RL methods rely on online learning, which requires either real-world hardware or advanced simulation environments, both of which can be costly, time-consuming, and impractical. Offline reinforcement learning offers a solution, enabling models to be trained without ongoing access to physical robots or simulations. A second challenge is learning multi-goal tasks, where robots must achieve multiple objectives simultaneously. This adds complexity to the training process, as the model must generalize across different goals. At the same time, transformer architectures have gained significant popularity across various domains, including reinforcement learning. Yet, no existing methods effectively combine offline training, multi-goal learning, and transformer-based architectures. In this paper, we address these challenges by introducing a novel adaptation of the decision transformer architecture for offline multi-goal reinforcement learning in robotics. Our approach integrates goal-specific information into the decision transformer, allowing it to handle complex tasks in an offline setting. To validate our method, we developed a new offline reinforcement learning dataset using the Panda robotic platform in simulation. Our extensive experiments demonstrate that the decision transformer can outperform state-of-the-art online reinforcement learning methods.

How language of interaction affects the user perception of a robot

Oct 23, 2023

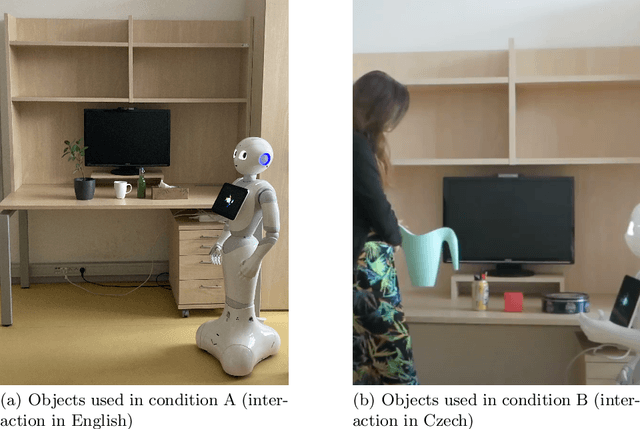

Abstract: Spoken language is the most natural way for a human to communicate with a robot. It may seem intuitive that a robot should communicate with users in their native language. However, it is not clear if a user's perception of a robot is affected by the language of interaction. We investigated this question by conducting a study with twenty-three native Czech participants who were also fluent in English. The participants were tasked with instructing the Pepper robot on where to place objects on a shelf. The robot was controlled remotely using the Wizard-of-Oz technique. We collected data through questionnaires, video recordings, and a post-experiment feedback session. The results of our experiment show that people perceive an English-speaking robot as more intelligent than a Czech-speaking robot (z = 18.00, p-value = 0.02). This finding highlights the influence of language on human-robot interaction. Furthermore, we discuss the feedback obtained from the participants via the post-experiment sessions and its implications for HRI design.