Pau Gallés

Head-tail Loss: A simple function for Oriented Object Detection and Anchor-free models

Apr 10, 2023

Abstract: This paper presents a new loss function for the prediction of oriented bounding boxes, named head-tail-loss. The loss function minimizes the distance between the prediction and the annotation of two key points that represent the object. The first point is the center point and the second is the head of the object. For the second point, however, the minimum of the distances from the prediction to the head and to the tail of the ground truth is used. In this way, either prediction is valid (with the head pointing to the tail or the tail pointing to the head). Ultimately, what matters is detecting the direction of the object, not its heading. The new loss function has been evaluated on the DOTA and HRSC2016 datasets and has shown potential for elongated objects such as ships, as well as for other types of objects with different shapes.
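The idea described in this abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function name `head_tail_loss`, the use of Euclidean distance, the unweighted sum of the two terms, and the assumption that the tail is the reflection of the head through the center are all choices made here for illustration only.

```python
import math


def head_tail_loss(pred_center, pred_head, gt_center, gt_head):
    """Illustrative head-tail loss for one object (2D points as (x, y) tuples).

    Assumptions (not taken from the paper): Euclidean distance, equal
    weighting of both terms, and a tail defined as the mirror image of
    the head through the ground-truth center.
    """
    # Hypothetical tail: reflect the ground-truth head through the center.
    gt_tail = (2 * gt_center[0] - gt_head[0], 2 * gt_center[1] - gt_head[1])

    # Term 1: distance between predicted and annotated center points.
    center_term = math.dist(pred_center, gt_center)

    # Term 2: distance from the predicted head to whichever of the
    # ground-truth head or tail is closer, so both headings are accepted.
    head_term = min(math.dist(pred_head, gt_head), math.dist(pred_head, gt_tail))

    return center_term + head_term


# A prediction pointing the opposite way costs the same as a matching one.
same_heading = head_tail_loss((0, 0), (2, 0), (0, 0), (2, 0))
flipped_heading = head_tail_loss((0, 0), (-2, 0), (0, 0), (2, 0))
```

Under these assumptions, `same_heading` and `flipped_heading` are both zero, which reflects the abstract's point that only the direction of the object is penalized, not which end is labeled as the head.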

Object Detection performance variation on compressed satellite image datasets with iquaflow

Jan 18, 2023

Abstract: A lot of work has been done to reach the best possible performance of predictive models on images. There are fewer studies about the resilience of these models when they are trained on image datasets whose original quality has been altered. Yet this is a common problem in industry. A good example is Earth observation satellites, which capture many images. The energy and connection time available to an orbiting satellite for communicating with the ground are limited and must be used carefully. One way to mitigate this is to compress the images on board before downloading them. The compression can be regulated depending on the intended usage of the image and the requirements of the application. We present a new software tool named iquaflow that is designed to study how image quality and model performance vary when an image dataset is altered. Furthermore, we present a showcase study of oriented object detection models on the public DOTA image dataset (Xia et al., CVPR 2018) at different compression levels. The optimal compression point is found and the usefulness of iquaflow becomes evident.

QMRNet: Quality Metric Regression for EO Image Quality Assessment and Super-Resolution

Oct 14, 2022

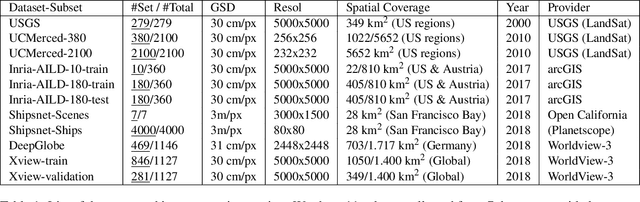

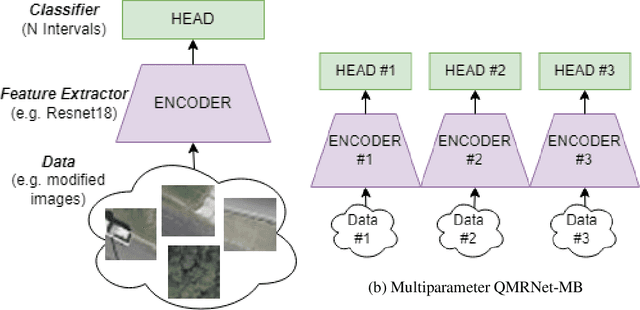

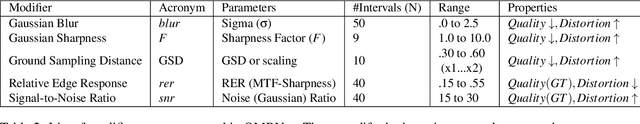

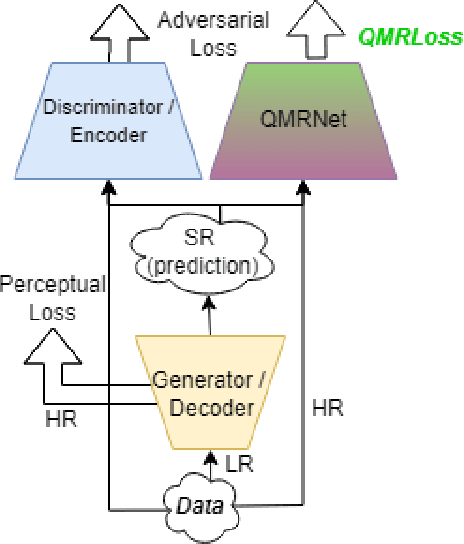

Abstract: The latest advances in Super-Resolution (SR) have been tested with general-purpose images such as faces, landscapes and objects, but have mostly not been applied to the task of super-resolving Earth Observation (EO) images. In this research paper, we benchmark state-of-the-art SR algorithms on distinct EO datasets using both Full-Reference and No-Reference Image Quality Assessment (IQA) metrics. We also propose a novel Quality Metric Regression Network (QMRNet) that is able to predict quality (as a No-Reference metric) by training on any property of the image (e.g. its resolution or its distortions) and is also able to optimize SR algorithms for a specific metric objective. This work is part of the implementation of the IQUAFLOW framework, which has been developed for evaluating image quality, detection and classification of objects, and image compression in EO use cases. We integrated our experimentation and tested our QMRNet algorithm on predicting features such as blur, sharpness, SNR, RER and ground sampling distance (GSD), obtaining validation medRs below 1.0 (out of N=50) and recall rates above 95%. The overall benchmark shows promising results for LIIF, CAR and MSRN, as well as the potential use of QMRNet as a loss for optimizing SR predictions. Due to its simplicity, QMRNet could also be used for other use cases and image domains, as its architecture and data processing are fully scalable.
