Patrick Baker

A Framework for Evaluating Faithfulness in Explainable AI for Machine Anomalous Sound Detection Using Frequency-Band Perturbation

Jan 26, 2026

Abstract: Explainable AI (XAI) is commonly applied to anomalous sound detection (ASD) models to identify which time-frequency regions of an audio signal contribute to an anomaly decision. However, most audio explanations rely on qualitative inspection of saliency maps, leaving open the question of whether these attributions accurately reflect the spectral cues the model uses. In this work, we introduce a new quantitative framework for evaluating XAI faithfulness in machine-sound analysis by directly linking attribution relevance to model behaviour through systematic frequency-band removal. This approach provides an objective measure of whether an XAI method for machine ASD correctly identifies frequency regions that influence an ASD model's predictions. Using four widely adopted methods, namely Integrated Gradients, Occlusion, Grad-CAM and SmoothGrad, we show that XAI techniques differ in reliability, with Occlusion demonstrating the strongest alignment with true model sensitivity and gradient-based methods often failing to accurately capture spectral dependencies. The proposed framework offers a reproducible way to benchmark audio explanations and enables more trustworthy interpretation of spectrogram-based ASD systems.
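The idea of linking attribution relevance to model behaviour through frequency-band removal can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, since the abstract does not give the details: the function name `band_faithfulness`, the equal-width band split, the constant fill value used to "remove" a band, and the use of Pearson correlation as the agreement score are all hypothetical choices, not the paper's actual procedure.

```python
import numpy as np

def band_faithfulness(spec, attribution, score_fn, n_bands=8, fill=0.0):
    """Correlate per-band attribution mass with per-band model sensitivity.

    spec, attribution: arrays of shape (n_freq, n_time).
    score_fn: hypothetical callable mapping a spectrogram to an anomaly score.
    """
    # Assumption: equal-width frequency bands along axis 0.
    edges = np.linspace(0, spec.shape[0], n_bands + 1).astype(int)
    base = score_fn(spec)
    relevance, sensitivity = [], []
    for lo, hi in zip(edges[:-1], edges[1:]):
        perturbed = spec.copy()
        perturbed[lo:hi, :] = fill  # "remove" the band (assumed: constant fill)
        # How much the model's score moves when this band is removed.
        sensitivity.append(abs(base - score_fn(perturbed)))
        # How much relevance the explanation assigns to this band.
        relevance.append(np.abs(attribution[lo:hi, :]).sum())
    # Assumed agreement score: Pearson correlation across bands.
    return np.corrcoef(relevance, sensitivity)[0, 1]
```

Under these assumptions, an explanation that concentrates relevance on the bands the model actually depends on scores close to 1, while one that highlights irrelevant bands scores lower. The correlation is undefined if either per-band vector is constant.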

Aggregation in the Mirror Space: Fast, Accurate Distributed Machine Learning in Military Settings

Oct 28, 2022

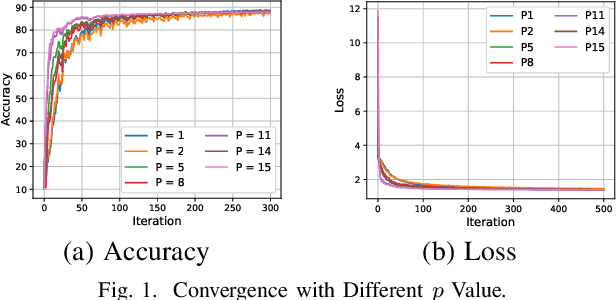

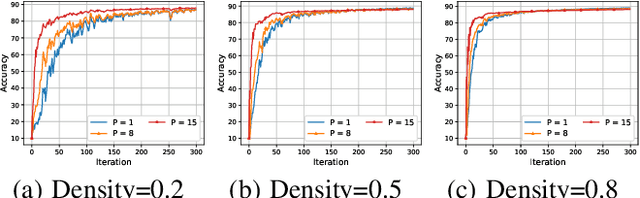

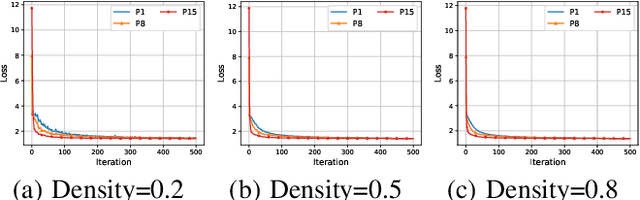

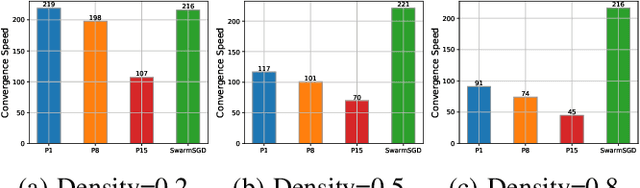

Abstract: Distributed machine learning (DML) can be an important capability for modern militaries, allowing them to take advantage of data and devices distributed across multiple vantage points to adapt and learn. Existing DML frameworks, however, cannot realize the full benefits of DML because they all rely on simple linear aggregation, which cannot handle the *divergence challenges* arising in military settings: the learning data at different devices can be heterogeneous (i.e., non-IID data), leading to model divergence, while the ability of devices to communicate is substantially limited (i.e., weak connectivity due to sparse and dynamic communications), reducing their ability to reconcile that divergence. In this paper, we introduce a novel DML framework called aggregation in the mirror space (AIMS) that allows a DML system to introduce a general mirror function to map a model into a mirror space, where aggregation and gradient descent are conducted. By adapting the convexity of the mirror function to the divergence force, AIMS allows automatic optimization of DML. We conduct both rigorous analysis and extensive experimental evaluations to demonstrate the benefits of AIMS. For example, we prove that AIMS achieves a loss of $O\left(\left(\frac{m^{r+1}}{T}\right)^{1/r}\right)$ after $T$ network-wide updates, where $m$ is the number of devices and $r$ is the convexity of the mirror function, with existing linear aggregation frameworks being a special case with $r=2$. Our experimental evaluations using EMANE (Extendable Mobile Ad-hoc Network Emulator) for military communications settings show similar results: AIMS can improve the DML convergence rate by up to 57% and scales well to more devices with weak connectivity, all with little additional computation overhead compared to traditional linear aggregation.
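A minimal sketch of aggregating models in a mirror space, as described in the abstract above, might look like the following. The specific mirror function is an assumption chosen for illustration: $\phi(w) = \frac{1}{r}\sum_i |w_i|^r$, whose gradient maps a model into the mirror space. The abstract only says that a general mirror function with convexity $r$ is used, so the function names and this particular choice are hypothetical. With $r=2$ the map is the identity and the sketch reduces to plain linear averaging, consistent with the special case the abstract mentions.

```python
import numpy as np

def to_mirror(w, r):
    """Gradient of the assumed mirror function phi(w) = sum(|w_i|**r) / r."""
    return np.sign(w) * np.abs(w) ** (r - 1)

def from_mirror(z, r):
    """Inverse of to_mirror; valid for r > 1."""
    return np.sign(z) * np.abs(z) ** (1.0 / (r - 1))

def aggregate_in_mirror_space(models, r, weights=None):
    """Average device models in the mirror space, then map the result back.

    models: array-like of shape (n_devices, n_params).
    weights: optional per-device weights summing to 1 (uniform if omitted).
    """
    models = np.asarray(models, dtype=float)
    if weights is None:
        weights = np.full(len(models), 1.0 / len(models))
    weights = np.asarray(weights, dtype=float)
    z = to_mirror(models, r)                   # map each model into the mirror space
    z_avg = np.tensordot(weights, z, axes=1)   # weighted average over devices
    return from_mirror(z_avg, r)               # map the aggregate back
```

For example, `aggregate_in_mirror_space([[1.0, 2.0], [3.0, 4.0]], r=2)` is the ordinary mean of the two models, while larger `r` gives an average that leans toward the larger-magnitude parameters.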
