Paolo Pareti

Evaluation and Comparison Semantics for ODRL

Sep 05, 2025

Abstract: We consider the problem of evaluating and comparing computational policies in the Open Digital Rights Language (ODRL), which has become the de facto standard for governing the access and usage of digital resources. Although preliminary progress has been made on the formal specification of the language's features, a comprehensive formal semantics of ODRL is still missing. In this paper, we provide a simple and intuitive formal semantics for ODRL that is based on query answering. Our semantics refines previous formalisations, and is aligned with the latest published specification of the language (2.2). Building on our evaluation semantics, and motivated by data sharing scenarios, we also define and study the problem of comparing two policies, detecting equivalent, more restrictive or more permissive policies.

SHACL Validation under Graph Updates (Extended Paper)

Jul 31, 2025

Abstract: SHACL (Shapes Constraint Language) is a W3C standardized constraint language for RDF graphs. In this paper, we study SHACL validation in RDF graphs under updates. We present a SHACL-based update language that can capture intuitive and realistic modifications on RDF graphs and study the problem of static validation under such updates. This problem asks to verify whether every graph that validates a SHACL specification will still do so after applying a given update sequence. More importantly, it provides a basis for further services for reasoning about evolving RDF graphs. Using a regression technique that embeds the update actions into SHACL constraints, we show that static validation under updates can be reduced to (un)satisfiability of constraints in (a minor extension of) SHACL. We analyze the computational complexity of the static validation problem for SHACL and some key fragments. Finally, we present a prototype implementation that performs static validation and other static analysis tasks on SHACL constraints and demonstrate its behavior through preliminary experiments.

SHACL2FOL: An FOL Toolkit for SHACL Decision Problems

Jun 12, 2024

Abstract: Recent studies on the Shapes Constraint Language (SHACL), a W3C specification for validating RDF graphs, rely on translating the language into first-order logic in order to provide formally-grounded solutions to the validation, containment and satisfiability decision problems. Continuing on this line of research, we introduce SHACL2FOL, the first automatic tool that (i) translates SHACL documents into FOL sentences and (ii) computes the answer to the two static analysis problems of satisfiability and containment; it also allows testing the validity of a graph with respect to a set of constraints. By integrating with existing theorem provers, such as E and Vampire, the tool computes the answer to the aforementioned decision problems and outputs the corresponding first-order logic theories in the standard TPTP format. We believe this tool can contribute to further theoretical studies of SHACL, by providing an automatic first-order logic interpretation of its semantics, while also benefiting SHACL practitioners, by supplying static analysis capabilities to help the creation and management of SHACL constraints.

A Review of SHACL: From Data Validation to Schema Reasoning for RDF Graphs

Dec 02, 2021

Abstract: We present an introduction and a review of the Shapes Constraint Language (SHACL), the W3C recommendation language for validating RDF data. A SHACL document describes a set of constraints on RDF nodes, and a graph is valid with respect to the document if its nodes satisfy these constraints. We revisit the basic concepts of the language, its constructs and components and their interaction. We review the different formal frameworks used to study this language and the different semantics proposed. We examine a number of related problems, from containment and satisfiability to the interaction of SHACL with inference rules, and exhibit how different modellings of the language are useful for different problems. We also cover practical aspects of SHACL, discussing its implementations and state of adoption, to present a holistic review useful to practitioners and theoreticians alike.

Satisfiability and Containment of Recursive SHACL

Aug 30, 2021

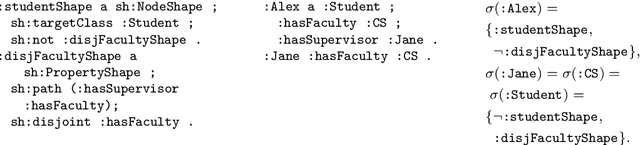

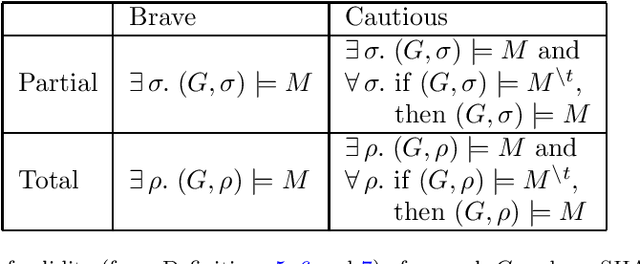

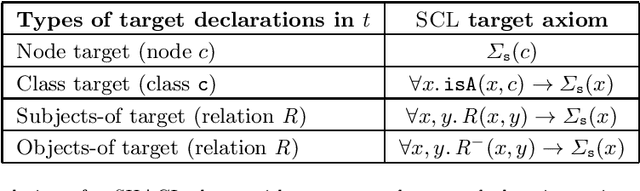

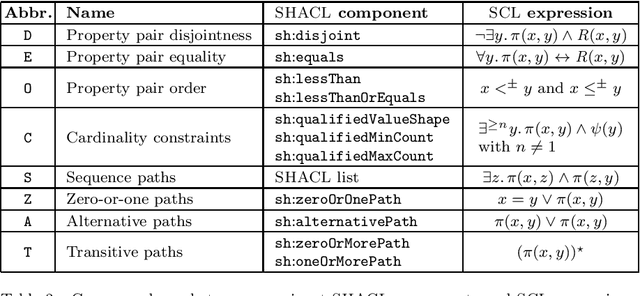

Abstract: The Shapes Constraint Language (SHACL) is the recent W3C recommendation language for validating RDF data, by verifying certain shapes on graphs. Previous work has largely focused on the validation problem, whereas the standard decision problems of satisfiability and containment, crucial for design and optimisation purposes, have only been investigated for simplified versions of SHACL. Moreover, the SHACL specification does not define the semantics of recursively-defined constraints, which led to several alternative recursive semantics being proposed in the literature. The interaction between these different semantics and important decision problems has not been investigated yet. In this article we provide a comprehensive study of the different features of SHACL, by providing a translation to a new first-order language, called SCL, that precisely captures the semantics of SHACL. We also present MSCL, a second-order extension of SCL, which allows us to define, in a single formal logic framework, the main recursive semantics of SHACL. Within this language we also provide an effective treatment of filter constraints, which are often neglected in the related literature. Using this logic we provide a detailed map of (un)decidability and complexity results for the satisfiability and containment decision problems for different SHACL fragments. Notably, we prove that both problems are undecidable for the full language, but we present decidable combinations of interesting features, even in the face of recursion.

SHACL Satisfiability and Containment (Extended Paper)

Aug 31, 2020

Abstract: The Shapes Constraint Language (SHACL) is a recent W3C recommendation language for validating RDF data. Specifically, SHACL documents are collections of constraints that enforce particular shapes on an RDF graph. Previous work on the topic has provided theoretical and practical results for the validation problem, but did not consider the standard decision problems of satisfiability and containment, which are crucial for verifying the feasibility of the constraints and important for design and optimization purposes. In this paper, we undertake a thorough study of different features of non-recursive SHACL by providing a translation to a new first-order language, called SCL, that precisely captures the semantics of SHACL w.r.t. satisfiability and containment. We study the interaction of SHACL features in this logic and provide a detailed map of decidability and complexity results of the aforementioned decision problems for different SHACL sublanguages. Notably, we prove that both problems are undecidable for the full language, but we present decidable combinations of interesting features.

A Policy Editor for Semantic Sensor Networks

Nov 15, 2019

Abstract: An important use of sensor and actuator networks is to comply with health and safety policies in hazardous environments. In order to deal with increasingly large and dynamic environments, and to quickly react to emergencies, tools are needed to simplify the process of translating high-level policies into executable queries and rules. We present a framework to produce such tools, which uses rules to aggregate low-level sensor data, described using the Semantic Sensor Network Ontology, into more useful and actionable abstractions. Using the schema of the underlying data sources as an input, we automatically generate abstractions which are relevant to the use case at hand. In this demonstration we present a policy editor tool and a simulation on which policies can be tested.

SHACL Constraints with Inference Rules

Nov 01, 2019

Abstract: The Shapes Constraint Language (SHACL) has been recently introduced as a W3C recommendation to define constraints that can be validated against RDF graphs. The interaction of SHACL with other Semantic Web technologies, such as ontologies or reasoners, is a matter of ongoing research. In this paper we study the interaction of a subset of SHACL with inference rules expressed in Datalog. On the one hand, SHACL constraints can be used to define a "schema" for graph datasets. On the other hand, inference rules can lead to the discovery of new facts that do not match the original schema. Given a set of SHACL constraints and a set of Datalog rules, we present a method to detect which constraints could be violated by the application of the inference rules on some graph instance of the schema, and update the original schema, i.e., the set of SHACL constraints, in order to capture the new facts that can be inferred. We provide theoretical and experimental results of the various components of our approach.

Rule Applicability on RDF Triplestore Schemas

Jul 02, 2019

Abstract: Rule-based systems play a critical role in health and safety, where policies created by experts are usually formalised as rules. When dealing with increasingly large and dynamic sources of data, as in the case of Internet of Things (IoT) applications, it becomes important not only to efficiently apply rules, but also to reason about their applicability on datasets confined by a certain schema. In this paper we define the notion of a triplestore schema which models a set of RDF graphs. Given a set of rules and such a schema as input we propose a method to determine rule applicability and produce output schemas. Output schemas model the graphs that would be obtained by running the rules on the graph models of the input schema. We present two approaches: one based on computing a canonical (critical) instance of the schema, and a novel approach based on query rewriting. We provide theoretical, complexity and evaluation results that show the superior efficiency of our rewriting approach.

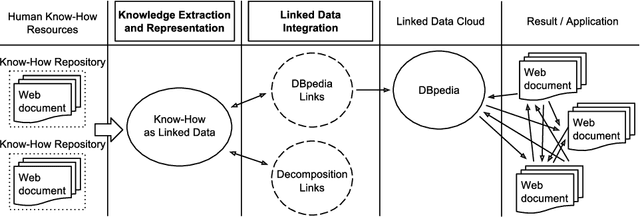

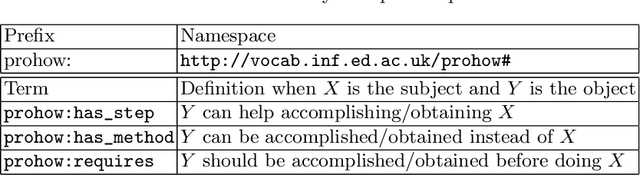

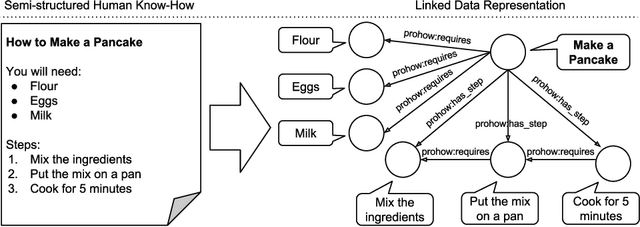

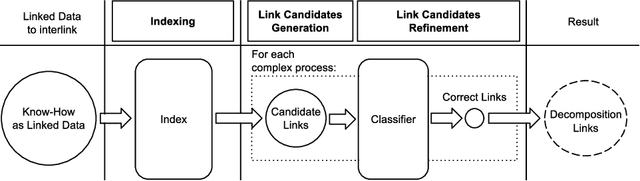

Integrating Know-How into the Linked Data Cloud

Apr 15, 2016

Abstract: This paper presents the first framework for integrating procedural knowledge, or "know-how", into the Linked Data Cloud. Know-how available on the Web, such as step-by-step instructions, is largely unstructured and isolated from other sources of online knowledge. To overcome these limitations, we propose extending to procedural knowledge the benefits that Linked Data has already brought to representing, retrieving and reusing declarative knowledge. We describe a framework for representing generic know-how as Linked Data and for automatically acquiring this representation from existing resources on the Web. This system also allows the automatic generation of links between different know-how resources, and between those resources and other online knowledge bases, such as DBpedia. We discuss the results of applying this framework to a real-world scenario and we show how it outperforms existing manual community-driven integration efforts.

* The 19th International Conference on Knowledge Engineering and Knowledge Management (EKAW 2014), 24-28 November 2014, Linköping, Sweden
