Paolo D'Alberto

Weight Block Sparsity: Training, Compilation, and AI Engine Accelerators

Jul 12, 2024

Abstract: Nowadays, increasingly larger Deep Neural Networks (DNNs) are being developed, trained, and utilized. These networks require significant computational resources, putting a strain on both advanced and limited devices. Our solution is to implement weight block sparsity, which is a structured sparsity that is friendly to hardware. By zeroing certain sections of the convolution and fully connected layer parameters of pre-trained DNN models, we can efficiently speed up the DNN's inference process. This results in a smaller memory footprint, faster communication, and fewer operations. Our work presents a vertical system that allows for the training of convolution and matrix multiplication weights to exploit 8x8 block sparsity on a single GPU within a reasonable amount of time. Compilers recognize this sparsity and use it for both data compaction and computation splitting into threads. Blocks like these take full advantage of both spatial and temporal locality, paving the way for fast vector operations and memory reuse. By using this system on a Resnet50 model, we were able to reduce the weights by half with minimal accuracy loss, resulting in a two-times faster inference speed. We will present performance estimates using accurate and complete code generation for AIE2 configuration sets (AMD Versal FPGAs) with Resnet50, Inception V3, and VGG16 to demonstrate the necessary synergy between hardware overlay designs and software stacks for compiling and executing machine learning applications.
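To make the idea of 8x8 block sparsity concrete, here is a minimal sketch in plain Python. It is not the training procedure from the paper, which the abstract does not detail. It assumes a simple magnitude criterion (tiles ranked by their sum of squared entries), and the names `block_sparsify` and `keep_ratio` are hypothetical.

```python
def block_sparsify(weights, block=8, keep_ratio=0.5):
    """Zero the lowest-energy block x block tiles of a 2-D weight matrix.

    Assumption: tiles are ranked by their sum of squared entries. The
    abstract does not state which selection criterion the authors use.
    """
    rows, cols = len(weights), len(weights[0])
    if rows % block or cols % block:
        raise ValueError("matrix dimensions must be multiples of the block size")

    # Score every tile by its squared L2 norm.
    scores = {}
    for bi in range(0, rows, block):
        for bj in range(0, cols, block):
            scores[(bi, bj)] = sum(
                weights[i][j] ** 2
                for i in range(bi, bi + block)
                for j in range(bj, bj + block)
            )

    # Keep the highest-scoring tiles; every other tile is zeroed as a whole.
    n_keep = round(len(scores) * keep_ratio)
    ranked = sorted(scores, key=scores.get, reverse=True)
    kept = set(ranked[:n_keep])

    pruned = [[0.0] * cols for _ in range(rows)]
    for bi, bj in kept:
        for i in range(bi, bi + block):
            for j in range(bj, bj + block):
                pruned[i][j] = weights[i][j]

    # The sorted tile coordinates are what a compiler could use to store
    # and schedule only the non-zero blocks.
    return pruned, sorted(kept)
```

Because whole tiles are either kept or dropped, the list of surviving tile coordinates is enough to compact the weights and to split the remaining work, which is the property the abstract attributes to block sparsity.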

DPUV3INT8: A Compiler View to programmable FPGA Inference Engines

Oct 08, 2021

Abstract: We have an FPGA design that we have made fast, efficient, and tested for a few important examples. Now we must infer a general solution to deploy in the data center. Here, we describe the FPGA DPUV3INT8 design and our compiler effort. The hand-tuned SW-HW solution for Resnet50_v1 has (close to) 2 times better images per second (throughput) than our best FPGA implementation. The compiler generalizes the hand-written techniques, achieving about 1.5 times better performance for the same example; it extends the optimizations to a model zoo of networks and achieves 80+% HW efficiency.

Quantizing Convolutional Neural Networks for Low-Power High-Throughput Inference Engines

May 21, 2018

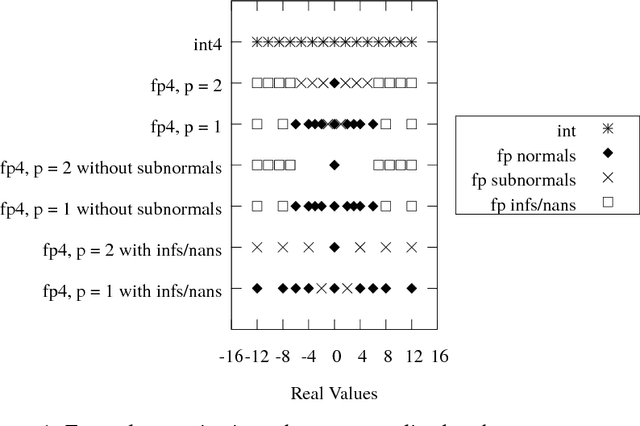

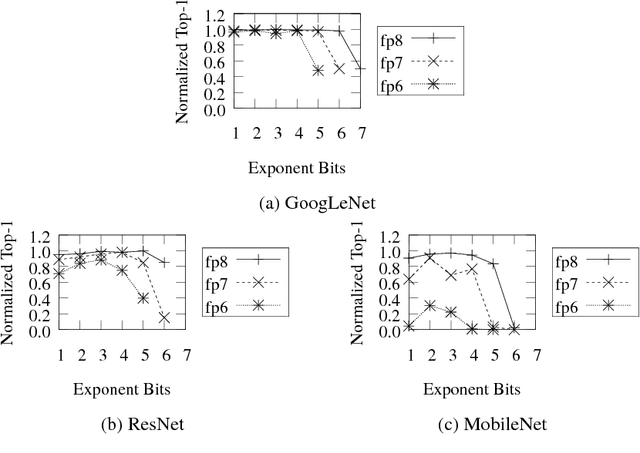

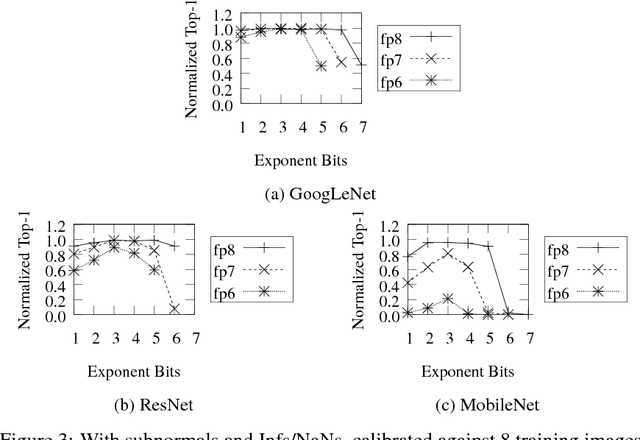

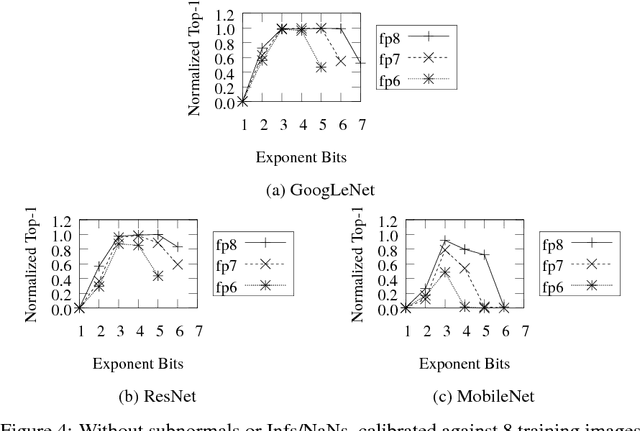

Abstract: Deep learning as a means to inferencing has proliferated thanks to its versatility and ability to approach or exceed human-level accuracy. These computational models have seemingly insatiable appetites for computational resources not only while training, but also when deployed at scales ranging from data centers all the way down to embedded devices. As such, increasing attention is being paid to maximizing computational efficiency given limited hardware and energy resources and, as a result, inferencing with reduced precision has emerged as a viable alternative to the IEEE 754 Standard for Floating-Point Arithmetic. We propose a quantization scheme that allows inferencing to be carried out using arithmetic that is fundamentally more efficient when compared to even half-precision floating-point. Our quantization procedure is significant in that we determine our quantization scheme parameters by calibrating against its reference floating-point model using a single inference batch rather than (re)training, and we achieve end-to-end post-quantization accuracies comparable to the reference model.
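The calibration idea, choosing quantization parameters from one batch of reference floating-point values instead of retraining, can be illustrated with a small sketch. This is not the scheme proposed in the paper, whose parameters the abstract does not specify. It assumes a plain symmetric 8-bit integer mapping with a max-absolute-value scale, and the function names are hypothetical.

```python
def calibrate_scale(batch, num_bits=8):
    """Derive a symmetric scale from one batch of floating-point values.

    Assumption: the largest magnitude seen in the batch maps to the edge
    of the signed integer range (max-abs calibration).
    """
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(x) for x in batch)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round values to integers and clamp them to the signed range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(quantized, scale):
    """Map integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


# Calibrate once on a single batch, then reuse the scale at inference time.
calibration_batch = [-1.27, 0.0, 0.5, 1.27]
scale = calibrate_scale(calibration_batch)
codes = quantize(calibration_batch, scale)
restored = dequantize(codes, scale)
```

With this mapping, values inside the calibrated range are recovered to within half a quantization step, while values outside it are clamped, which is why the choice of calibration batch matters.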
