Pamul Yadav

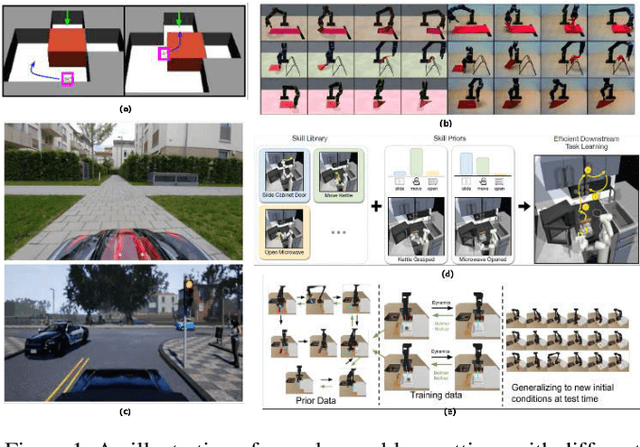

Rationale-aware Autonomous Driving Policy utilizing Safety Force Field implemented on CARLA Simulator

Nov 18, 2022

Abstract: Despite the rapid improvement of autonomous driving technology in recent years, automotive manufacturers must resolve liability issues before they can commercialize autonomous passenger cars of SAE J3016 Level 3 or higher. To cope with product liability law, manufacturers develop autonomous driving systems in compliance with international safety standards such as ISO 26262 and ISO 21448. Under the safety of the intended functionality (SOTIF) requirement in ISO 21448, it is recommended that the driving policy provide an explicit rational basis for maneuver decisions. In this case, mathematical models such as Safety Force Field (SFF) and Responsibility-Sensitive Safety (RSS), which make decisions interpretable, may be suitable. In this work, we implement SFF from scratch as a substitute for NVIDIA's undisclosed source code and integrate it with the open-source CARLA simulator. Using SFF and CARLA, we present a predictor for the claimed sets of vehicles and, based on the predictor, propose an integrated driving policy that operates consistently regardless of the safety conditions it encounters while passing through dynamic traffic. The policy does not have a separate plan for each condition; instead, using the safety potential, it aims for human-like driving that blends in with the traffic flow.

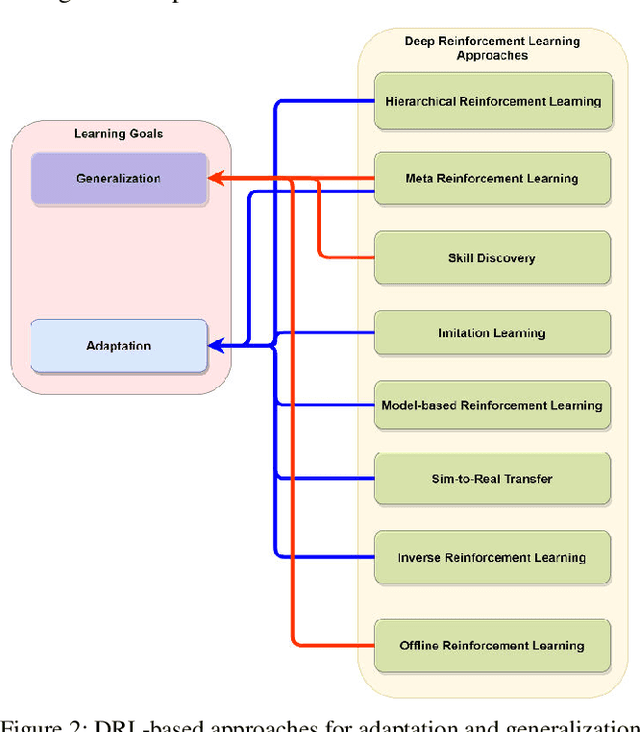

A Survey on Deep Reinforcement Learning-based Approaches for Adaptation and Generalization

Feb 17, 2022

Abstract: Deep Reinforcement Learning (DRL) aims to create intelligent agents that can learn to solve complex problems efficiently in real-world environments. Typically, two learning goals, adaptation and generalization, are used to baseline the performance of DRL algorithms across different tasks and domains. This paper presents a survey of recent developments in DRL-based approaches for adaptation and generalization. We begin by formulating these goals in the context of task and domain. We then review recent works under those approaches and discuss future research directions through which the adaptability and generalizability of DRL algorithms can be enhanced, potentially making them applicable to a broad range of real-world problems.
