Omid Shoghli

Real-Time Roadway Obstacle Detection for Electric Scooters Using Deep Learning and Multi-Sensor Fusion

Apr 04, 2025

Abstract: The increasing adoption of electric scooters (e-scooters) in urban areas has coincided with a rise in traffic accidents and injuries, largely due to their small wheels, lack of suspension, and sensitivity to uneven surfaces. While deep learning-based object detection has been widely used to improve automobile safety, its application to e-scooter obstacle detection remains unexplored. This study introduces a novel ground obstacle detection system for e-scooters that integrates an RGB camera and a depth camera to enhance real-time road hazard detection. Additionally, an Inertial Measurement Unit (IMU) measures linear vertical acceleration to identify surface vibrations, guiding the selection of six obstacle categories: tree branches, manhole covers, potholes, pine cones, non-directional cracks, and truncated domes. All sensors (the RGB camera, depth camera, and IMU) are integrated within the Intel RealSense Camera D435i. A YOLO-based deep learning model detects road hazards and uses depth data to estimate obstacle proximity. Evaluated on a dataset of seven hours of naturalistic riding, the system achieves a high mean average precision (mAP) of 0.827 and demonstrates excellent real-time performance. This approach provides an effective solution for enhancing e-scooter safety through advanced computer vision and data fusion. The dataset is accessible at https://zenodo.org/records/14583718, and the project code is hosted at https://github.com/Zeyang-Zheng/Real-Time-Roadway-Obstacle-Detection-for-Electric-Scooters.
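The abstract does not say how depth data is turned into a proximity estimate. One common approach, shown here as a minimal sketch and not as the authors' method, is to take the median of the valid depth readings inside each detection box. The function name `estimate_distance_m`, the validity range, and the row-major list-of-lists depth map are all assumptions made for illustration.

```python
from statistics import median

def estimate_distance_m(depth_m, box, min_valid=0.1, max_valid=10.0):
    """Return the median depth in metres inside a detection box, or None.

    depth_m: 2-D row-major grid (list of rows) of depth values in metres,
             assumed to be aligned with the RGB frame the box came from.
    box:     (x1, y1, x2, y2) pixel coordinates, x2 and y2 exclusive.
    min_valid / max_valid: hypothetical plausibility range; readings
             outside it (for example 0 for "no return") are ignored.
    """
    height = len(depth_m)
    width = len(depth_m[0]) if height else 0
    x1, y1, x2, y2 = box
    # Clip the box to the image so partially visible obstacles still work.
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    if x2 <= x1 or y2 <= y1:
        return None
    valid = [
        d
        for row in depth_m[y1:y2]
        for d in row[x1:x2]
        if min_valid <= d <= max_valid
    ]
    # The median is less sensitive than the mean to background pixels
    # that fall inside the box but belong to the road behind the obstacle.
    return float(median(valid)) if valid else None
```

With array-based depth frames the same logic is usually written with slicing and a boolean mask; the plain-Python form is used here only to keep the sketch dependency-free.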

Performance Evaluation of Real-Time Object Detection for Electric Scooters

May 05, 2024

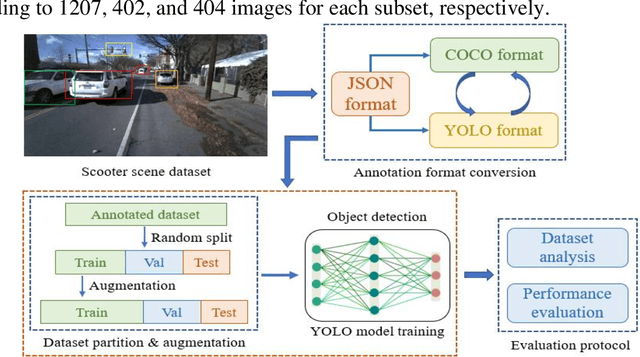

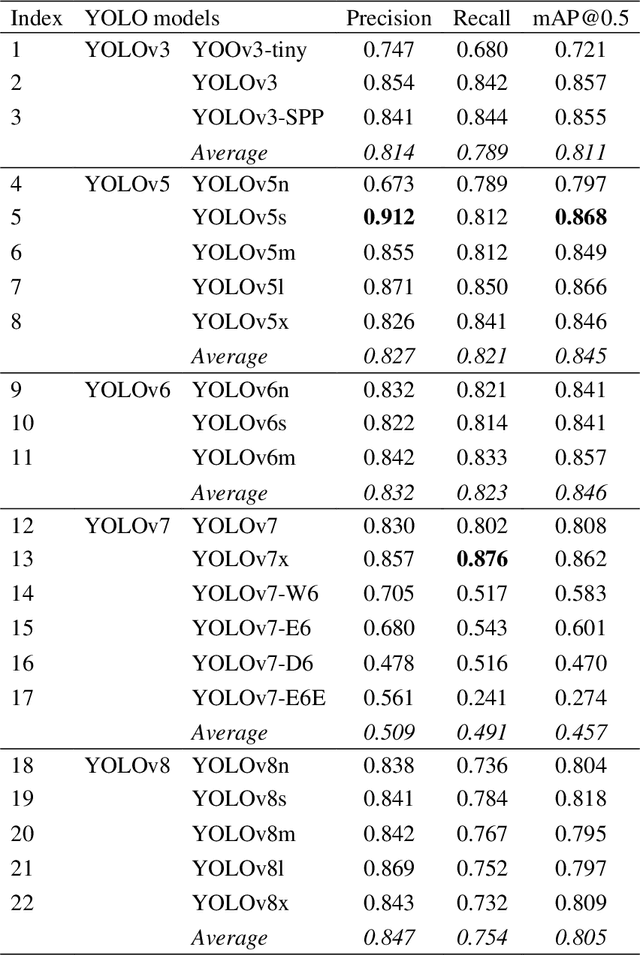

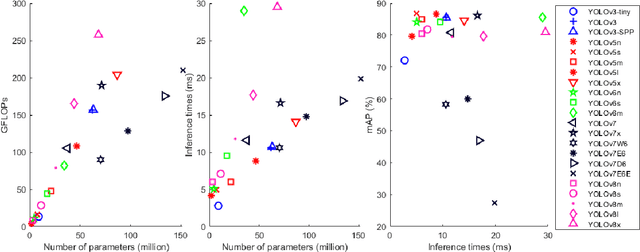

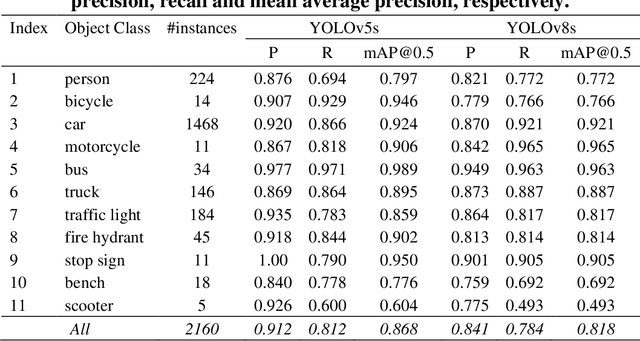

Abstract: Electric scooters (e-scooters) have rapidly emerged as a popular mode of transportation in urban areas, yet they pose significant safety challenges. In the United States, the rise of e-scooters has been accompanied by a concerning increase in related injuries and fatalities. Although deep-learning object detection is central to collision avoidance in autonomous vehicles, its application to e-scooters remains relatively unexplored. This paper addresses this gap by assessing the effectiveness and efficiency of cutting-edge object detectors for e-scooters. To this end, the first comprehensive benchmark of 22 state-of-the-art YOLO object detectors, spanning five versions (YOLOv3, YOLOv5, YOLOv6, YOLOv7, and YOLOv8), has been established for real-time traffic object detection using a self-collected dataset featuring e-scooters. Detection accuracy, measured as mAP@0.5, ranges from 27.4% (YOLOv7-E6E) to 86.8% (YOLOv5s). All YOLO models, particularly YOLOv3-tiny, show promising potential for real-time object detection on e-scooters. Both the traffic scene dataset (https://zenodo.org/records/10578641) and the code used for model benchmarking (https://github.com/DongChen06/ScooterDet) are publicly available. These resources are intended to improve e-scooter safety through advanced object detection and to lay the groundwork for tailored solutions, supporting a safer and more sustainable urban micromobility landscape.
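For readers unfamiliar with the metric, the "@0.5" in mAP@0.5 refers to an intersection-over-union (IoU) threshold: a predicted box is conventionally counted as a correct detection when its IoU with a ground-truth box of the same class is at least 0.5. The sketch below illustrates only that matching criterion; the helper names are hypothetical and it is not taken from the benchmark code. A full mAP computation additionally ranks detections by confidence and averages precision over recall levels and classes.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, gt_box, threshold=0.5):
    """True when the prediction overlaps the ground truth enough to count."""
    return iou(pred_box, gt_box) >= threshold
```

For example, two 10x10 boxes offset horizontally by 5 pixels overlap in a 5x10 region, giving an IoU of 50 / 150, which falls below the 0.5 threshold.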
