Octavian Donca

Non-rigid Relative Placement through 3D Dense Diffusion

Oct 29, 2024

Abstract: The task of "relative placement" is to predict the placement of one object in relation to another, e.g. placing a mug onto a mug rack. Through explicit object-centric geometric reasoning, recent methods for relative placement have made tremendous progress towards data-efficient learning for robot manipulation while generalizing to unseen task variations. However, they have yet to represent deformable transformations, despite the ubiquity of non-rigid bodies in real-world settings. As a first step towards bridging this gap, we propose "cross-displacement" - an extension of the principles of relative placement to geometric relationships between deformable objects - and present a novel vision-based method to learn cross-displacement through dense diffusion. To this end, we demonstrate our method's ability to generalize to unseen object instances, out-of-distribution scene configurations, and multimodal goals on multiple highly deformable tasks (both in simulation and in the real world) beyond the scope of prior works. Supplementary information and videos can be found at https://sites.google.com/view/tax3d-corl-2024 .

Learning Distributional Demonstration Spaces for Task-Specific Cross-Pose Estimation

May 07, 2024

Abstract: Relative placement tasks are an important category of tasks in which one object needs to be placed in a desired pose relative to another object. Previous work has shown success in learning relative placement tasks from just a small number of demonstrations when using relational reasoning networks with geometric inductive biases. However, such methods cannot flexibly represent multimodal tasks, like a mug hanging on any of n racks. We propose a method that incorporates additional properties that enable learning multimodal relative placement solutions, while retaining the provably translation-invariant and relational properties of prior work. We show that our method is able to learn precise relative placement tasks with only 10-20 multimodal demonstrations with no human annotations across a diverse set of objects within a category.

Safe Path Planning for Polynomial Shape Obstacles via Control Barrier Functions and Logistic Regression

Oct 07, 2022

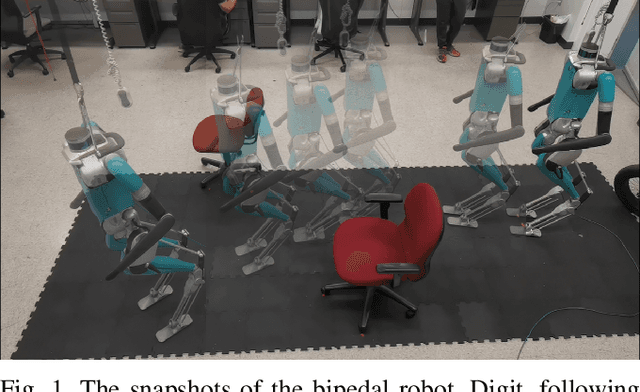

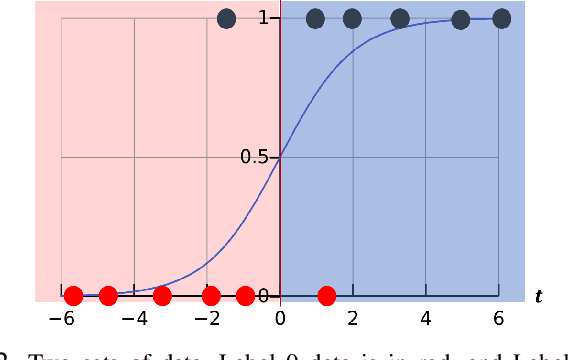

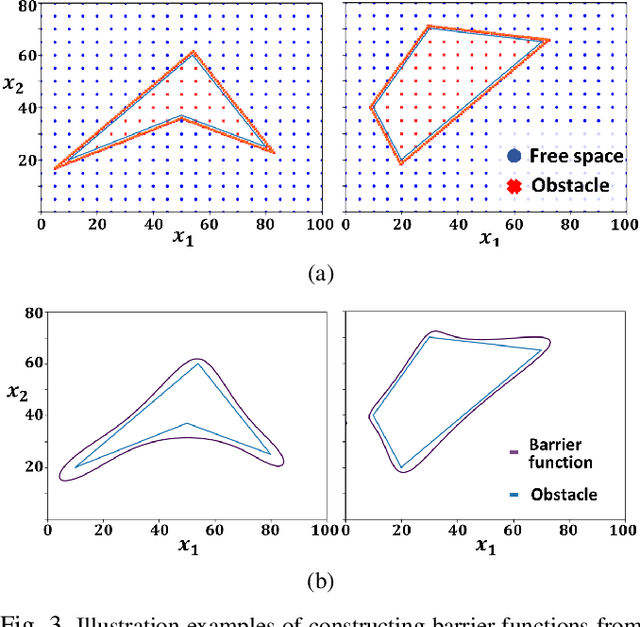

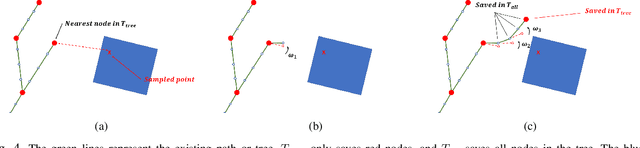

Abstract: Safe path planning is critical for bipedal robots to operate in safety-critical environments. Common path planning algorithms, such as RRT or RRT*, typically use geometric or kinematic collision-checking algorithms to ensure collision-free paths toward the target position. However, such approaches may generate non-smooth paths that do not comply with the dynamics constraints of walking robots. It has been shown that the control barrier function (CBF) can be integrated with RRT/RRT* to synthesize dynamically feasible collision-free paths. Yet, existing work has been limited to simple circular or elliptical obstacles due to the challenging nature of constructing appropriate barrier functions to represent irregularly shaped obstacles. In this paper, we present a CBF-based RRT* algorithm for bipedal robots to generate a collision-free path through complex space with polynomial-shaped obstacles. In particular, we used logistic regression to construct polynomial barrier functions from a grid map of the environment to represent arbitrarily shaped obstacles. Moreover, we developed a multi-step CBF steering controller to ensure the efficiency of free space exploration. The proposed approach was first validated in simulation for a differential drive model, and then experimentally evaluated with a 3D humanoid robot, Digit, in a lab setting with randomly placed obstacles.
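
The idea of obtaining a polynomial barrier function from a grid map by logistic regression can be illustrated with a minimal sketch. This is not the paper's implementation: the function names (`poly_features`, `fit_barrier`, `barrier`), the toy disk-shaped obstacle, the polynomial degree, the plain gradient-descent fit, and the sign convention (barrier positive in free space) are all assumptions made for illustration.

```python
import numpy as np

def poly_features(xy, degree=2):
    """Monomials x^i * y^j with i + j <= degree (constant term included)."""
    x, y = xy[:, 0], xy[:, 1]
    cols = [x**i * y**j for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.stack(cols, axis=1)

def fit_barrier(xy, occupied, degree=2, lr=0.5, steps=5000):
    """Fit logistic-regression weights with plain gradient descent (assumed solver)."""
    phi = poly_features(xy, degree)
    w = np.zeros(phi.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-phi @ w))           # predicted P(occupied)
        w -= lr * phi.T @ (p - occupied) / len(occupied)
    return w

def barrier(w, xy, degree=2):
    """h(x) = -w . phi(x): positive in predicted free space, negative in obstacles."""
    return -poly_features(np.atleast_2d(xy), degree) @ w

# Toy grid map (hypothetical): a 21 x 21 grid with one disk-shaped obstacle.
g = np.linspace(-1.0, 1.0, 21)
xx, yy = np.meshgrid(g, g)
cells = np.stack([xx.ravel(), yy.ravel()], axis=1)
occupied = (np.hypot(cells[:, 0], cells[:, 1]) < 0.55).astype(float)

w = fit_barrier(cells, occupied)
# Fraction of grid cells whose barrier sign agrees with the occupancy label.
accuracy = np.mean((barrier(w, cells) < 0) == (occupied == 1))
```

The zero level set of the fitted polynomial approximates the obstacle boundary, so `barrier(w, point)` can be queried at any continuous position rather than only at grid cells. A higher `degree` would be needed for less regular obstacle shapes.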
