Nouf Alabbasi

Rethinking Strategic Mechanism Design In The Age Of Large Language Models: New Directions For Communication Systems

Nov 30, 2024

Abstract:This paper explores the application of large language models (LLMs) in designing strategic mechanisms -- including auctions, contracts, and games -- for specific purposes in communication networks. Traditionally, strategic mechanism design in telecommunications has relied on human expertise to craft solutions based on game theory, auction theory, and contract theory. However, the evolving landscape of telecom networks, characterized by increasing abstraction, emerging use cases, and novel value creation opportunities, calls for more adaptive and efficient approaches. We propose leveraging LLMs to automate or semi-automate the process of strategic mechanism design, from intent specification to final formulation. This paradigm shift introduces both semi-automated and fully-automated design pipelines, raising crucial questions about faithfulness to intents, incentive compatibility, algorithmic stability, and the balance between human oversight and artificial intelligence (AI) autonomy. The paper discusses potential frameworks, such as retrieval-augmented generation (RAG)-based systems, to implement LLM-driven mechanism design in communication network contexts. We examine key challenges, including LLM limitations in capturing domain-specific constraints, ensuring strategy-proofness, and integrating with evolving telecom standards. By providing an in-depth analysis of the synergies and tensions between LLMs and strategic mechanism design within the IoT ecosystem, this work aims to stimulate discussion on the future of AI-driven information economic mechanisms in telecommunications and their potential to address complex, dynamic network management scenarios.

TeleOracle: Fine-Tuned Retrieval-Augmented Generation with Long-Context Support for Network

Nov 04, 2024

Abstract:The telecommunications industry's rapid evolution demands intelligent systems capable of managing complex networks and adapting to emerging technologies. While large language models (LLMs) show promise in addressing these challenges, their deployment in telecom environments faces significant constraints due to edge device limitations and inconsistent documentation. To bridge this gap, we present TeleOracle, a telecom-specialized retrieval-augmented generation (RAG) system built on the Phi-2 small language model (SLM). To improve context retrieval, TeleOracle employs a two-stage retriever that incorporates semantic chunking and hybrid keyword and semantic search. Additionally, we expand the context window during inference to enhance the model's performance on open-ended queries. We also employ low-rank adaptation for efficient fine-tuning. A thorough analysis of the model's performance indicates that our RAG framework is effective in aligning Phi-2 to the telecom domain in a downstream question and answer (QnA) task, achieving a 30% improvement in accuracy over the base Phi-2 model, reaching an overall accuracy of 81.20%. Notably, we show that our model not only performs on par with much larger LLMs but also achieves a higher faithfulness score, indicating higher adherence to the retrieved context.
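
The abstract does not say how the keyword and semantic results are combined. As a rough illustration only, the sketch below merges two ranked lists with reciprocal rank fusion (RRF), a common choice for hybrid search; RRF, the function name, the constant `k=60`, and the chunk ids are all assumptions and not taken from the paper.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in;
    k dampens the influence of the very top ranks (60 is a common default).
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical outputs of a keyword retriever and an embedding retriever.
keyword_hits = ["chunk_a", "chunk_b", "chunk_c"]
semantic_hits = ["chunk_b", "chunk_c", "chunk_a"]
fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
print(fused)
```

A rank-based fusion like this avoids having to normalize keyword scores and cosine similarities onto a shared scale, which is one reason it is often used as a baseline.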

Leveraging Fine-Tuned Retrieval-Augmented Generation with Long-Context Support: For 3GPP Standards

Aug 21, 2024

Abstract:Recent studies show that large language models (LLMs) struggle with technical standards in telecommunications. We propose a fine-tuned retrieval-augmented generation (RAG) system based on the Phi-2 small language model (SLM) to serve as an oracle for communication networks. Our developed system leverages forward-looking semantic chunking to adaptively determine parsing breakpoints based on embedding similarity, enabling effective processing of diverse document formats. To handle the challenge of multiple similar contexts in technical standards, we employ a re-ranking algorithm to prioritize the most relevant retrieved chunks. Recognizing the limitations of Phi-2's small context window, we implement a recent technique, namely SelfExtend, to expand the context window during inference, which not only boosts performance but also accommodates a wider range of user queries and design requirements, from customers to specialized technicians. For fine-tuning, we utilize the low-rank adaptation (LoRA) technique to enhance computational efficiency during training and enable effective fine-tuning on small datasets. Our comprehensive experiments demonstrate substantial improvements over existing question-answering approaches in the telecom domain, achieving performance that exceeds that of larger language models such as GPT-4 (which is about 880 times larger in size). This work presents a novel approach to leveraging SLMs for communication networks, offering a balance of efficiency and performance. This work can serve as a foundation towards agentic language models for networks.
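
To make the idea of similarity-based breakpoints concrete, here is a minimal sketch that starts a new chunk whenever the similarity between a sentence and the one that follows it drops below a threshold. It is not the authors' algorithm: the bag-of-words `embed` function stands in for a real sentence encoder, and the function names, the threshold of 0.2, and the example sentences are assumptions chosen for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding (stand-in for a real sentence encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def semantic_chunks(sentences, threshold=0.2):
    """Group consecutive sentences, breaking where similarity to the next one is low."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(cur)) < threshold:
            chunks.append([cur])  # low similarity: start a new chunk
        else:
            chunks[-1].append(cur)  # similar enough: extend the current chunk
    return chunks


sentences = [
    "the gnb schedules uplink grants",
    "uplink grants are sent by the gnb",
    "billing records are stored daily",
]
chunks = semantic_chunks(sentences)
print(chunks)
```

In practice the threshold would be tuned, or derived from the distribution of similarities in the document, rather than fixed in advance.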

Large Language Model-Driven Curriculum Design for Mobile Networks

May 28, 2024

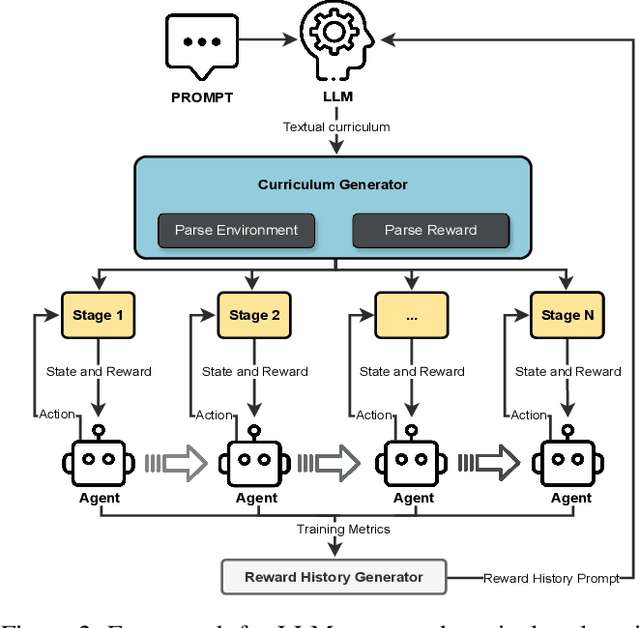

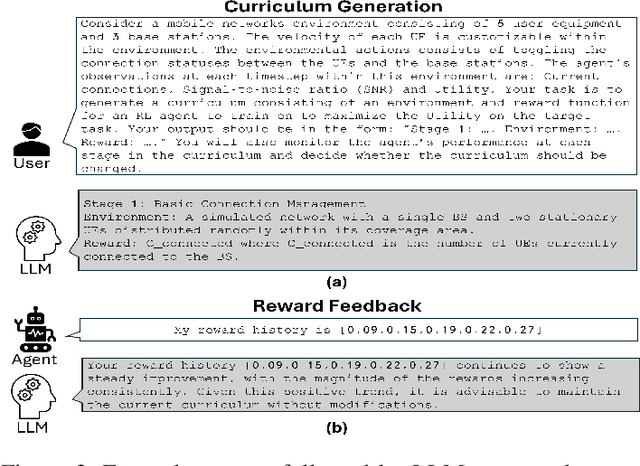

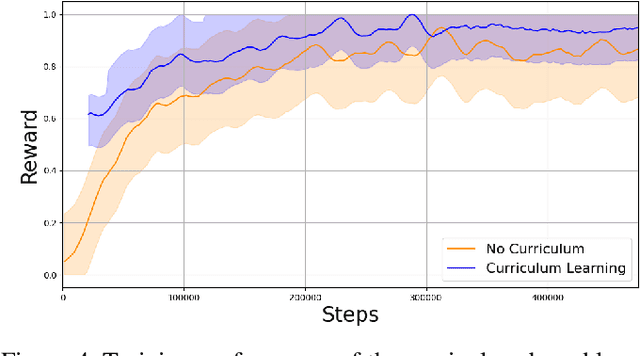

Abstract:This paper proposes a novel framework that leverages large language models (LLMs) to automate curriculum design, thereby enhancing the application of reinforcement learning (RL) in mobile networks. As mobile networks evolve towards the 6G era, managing their increasing complexity and dynamic nature poses significant challenges. Conventional RL approaches often suffer from slow convergence and poor generalization due to conflicting objectives and the large state and action spaces associated with mobile networks. To address these shortcomings, we introduce curriculum learning, a method that systematically exposes the RL agent to progressively challenging tasks, improving convergence and generalization. However, curriculum design typically requires extensive domain knowledge and manual human effort. Our framework mitigates this by utilizing the generative capabilities of LLMs to automate the curriculum design process, significantly reducing human effort while improving the RL agent's convergence and performance. We deploy our approach within a simulated mobile network environment and demonstrate improved RL convergence rates, generalization to unseen scenarios, and overall performance enhancements. As a case study, we consider autonomous coordination and user association in mobile networks. Our obtained results highlight the potential of combining LLM-based curriculum generation with RL for managing next-generation wireless networks, marking a significant step towards fully autonomous network operations.
