Nora Etxezarreta

Hierarchical Learning for Modular Robots

Feb 12, 2018

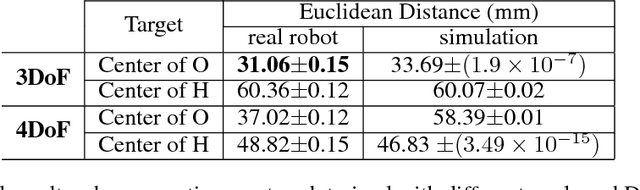

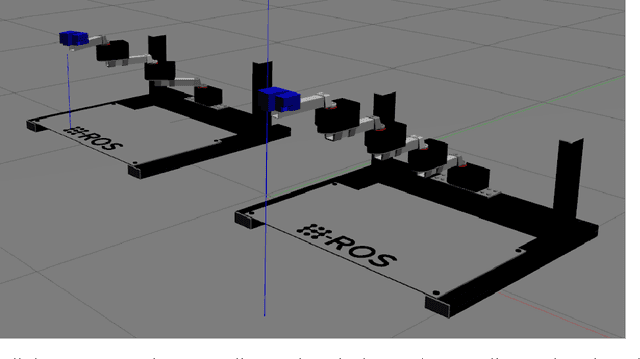

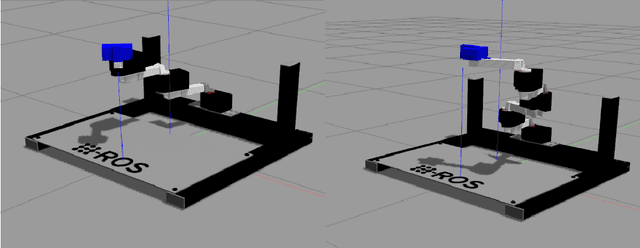

Abstract: We argue that hierarchical methods can be the key to achieving reconfigurability in modular robots. We present a hierarchical approach for modular robots that allows a robot to learn multiple tasks simultaneously. Our evaluation uses an environment composed of two different modular robot configurations, namely 3 degrees of freedom (DoF) and 4DoF, with two corresponding targets. During training, we switch between configurations and targets to evaluate whether a neural network can be trained to select the appropriate motor primitives and robot configuration to reach the target. The trained neural network is then transferred to and executed on a real robot in the 3DoF and 4DoF configurations. We demonstrate how this technique generalizes to robots with different configurations and tasks.
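The abstract gives no implementation details, so the following is only a minimal sketch, under simplifying assumptions, of the idea of a high-level policy that picks a motor primitive while training alternates between configurations and targets. All names (`PrimitiveSelector`, `reach_3dof`, `target_A`, the toy reward) are hypothetical, and a tabular epsilon-greedy value estimate stands in for the neural network described in the abstract. Because each primitive here is configuration-specific, choosing a primitive implicitly chooses a configuration.

```python
import random
from collections import defaultdict

# Hypothetical setup: two configurations, each paired with its own target.
CONFIGS = ["3DoF", "4DoF"]
TARGETS = {"3DoF": "target_A", "4DoF": "target_B"}

# Hypothetical low-level motor primitives, one per configuration.
PRIMITIVES = ["reach_3dof", "reach_4dof"]


def episode_reward(config, target, primitive):
    """Toy reward (assumption): 1.0 only if the primitive fits the active configuration."""
    return 1.0 if primitive == f"reach_{config.lower()}" else 0.0


class PrimitiveSelector:
    """High-level policy mapping (configuration, target) to a primitive.

    A lookup table of value estimates replaces the neural network here.
    """

    def __init__(self, primitives, epsilon=0.3, lr=0.5, seed=0):
        self.primitives = primitives
        self.epsilon = epsilon  # exploration probability
        self.lr = lr            # step size for the value update
        self.rng = random.Random(seed)
        self.values = defaultdict(lambda: {p: 0.0 for p in primitives})

    def select(self, config, target, explore=True):
        q = self.values[(config, target)]
        if explore and self.rng.random() < self.epsilon:
            return self.rng.choice(self.primitives)  # explore
        return max(q, key=q.get)                      # exploit (ties: first listed)

    def update(self, config, target, primitive, reward):
        q = self.values[(config, target)]
        q[primitive] += self.lr * (reward - q[primitive])


def train(episodes=400, seed=0):
    selector = PrimitiveSelector(PRIMITIVES, seed=seed)
    for ep in range(episodes):
        # Switch configuration (and its target) every episode.
        config = CONFIGS[ep % len(CONFIGS)]
        target = TARGETS[config]
        primitive = selector.select(config, target)
        reward = episode_reward(config, target, primitive)
        selector.update(config, target, primitive, reward)
    return selector


if __name__ == "__main__":
    sel = train()
    for cfg in CONFIGS:
        print(cfg, sel.select(cfg, TARGETS[cfg], explore=False))
```

After training, greedy selection (`explore=False`) returns the primitive that matches each configuration. A function approximator such as a neural network becomes necessary once the state is continuous (joint readings, target coordinates) rather than a small set of discrete labels.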

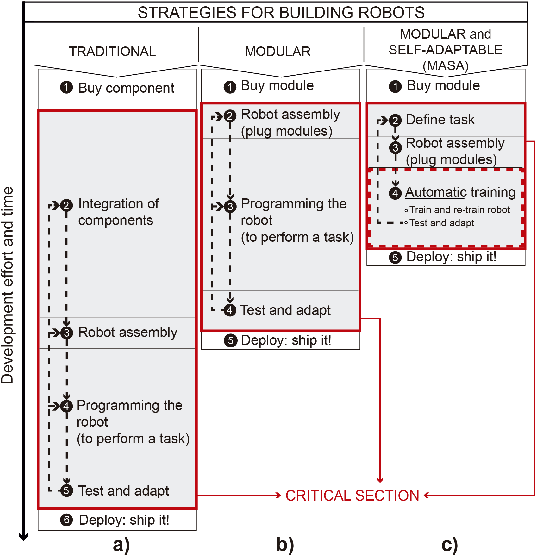

Towards self-adaptable robots: from programming to training machines

Feb 12, 2018

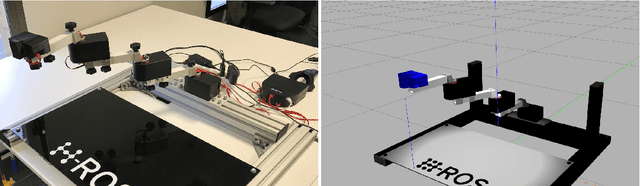

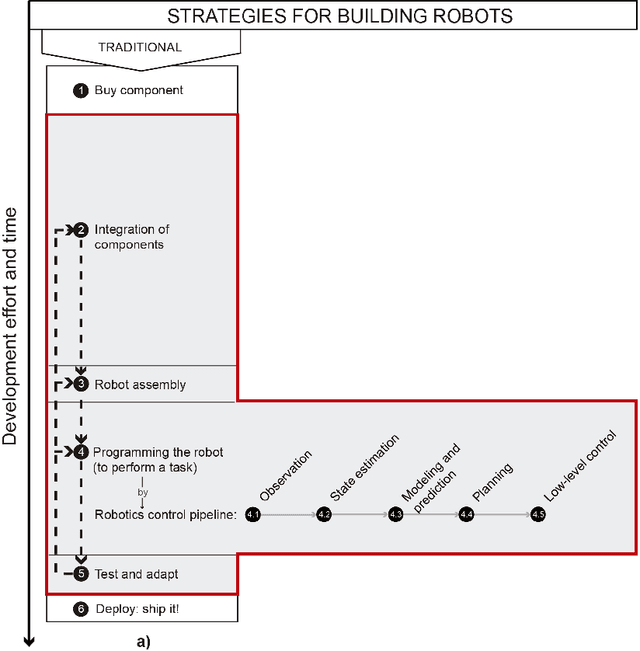

Abstract: We argue that hardware modularity plays a key role in the convergence of Robotics and Artificial Intelligence (AI). We introduce a new approach for building robots that leads to more adaptable and capable machines. We present the concept of a self-adaptable robot that uses hardware modularity and AI techniques to reduce the effort and time required to build it. We demonstrate, in simulation and with a real robot, how training rather than programming produces robot behaviors that generalize quickly and remain robust in the presence of noise. In particular, we advocate for mammals.

Evaluation of Deep Reinforcement Learning Methods for Modular Robots

Feb 07, 2018

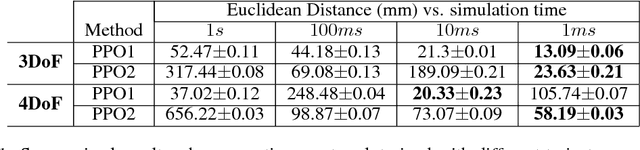

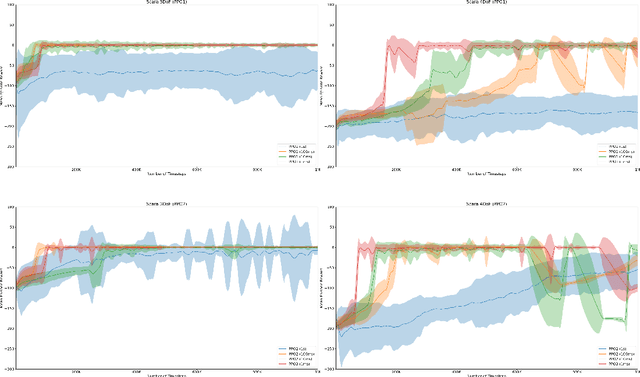

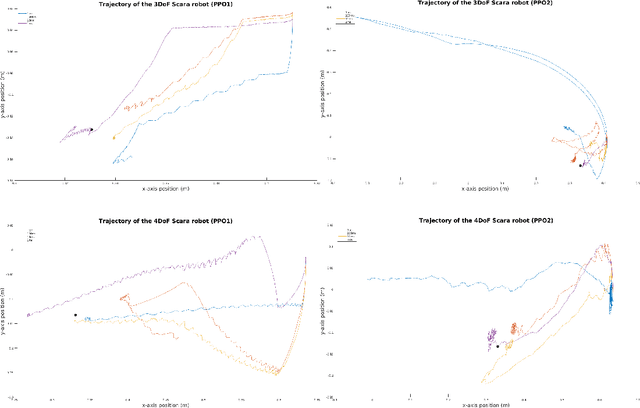

Abstract: We propose a novel framework for Deep Reinforcement Learning (DRL) in modular robotics that uses traditional robotic tools to extend state-of-the-art DRL implementations and provides an end-to-end approach that trains a robot directly from joint states. Moreover, we present a novel technique to transfer these DRL methods to the real robot, aiming to close the simulation-reality gap. We demonstrate the robust performance of state-of-the-art DRL methods for continuous action spaces in modular robots through an empirical study, both in simulation and on the real robot, in which we also evaluate how accelerating the simulation time affects the robot's performance. Our results show that extending the modular robot from 3 degrees of freedom (DoF) to 4DoF does not affect the robot's learning. This paves the way towards training modular robots using DRL techniques.
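To make "training directly from joint states" concrete, here is a minimal sketch of the kind of interface an end-to-end learner would consume, assuming a toy planar arm rather than the simulator used in the paper. `PlanarArmEnv`, the link length, target, control period and velocity limit are all hypothetical. The observation is the raw joint positions plus the vector from the end effector to the target, so going from 3DoF to 4DoF only changes `n_joints` and, with it, the observation and action sizes.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PlanarArmEnv:
    """Toy N-DoF planar arm (hypothetical stand-in, not the paper's environment).

    Observation: joint positions followed by the end-effector-to-target vector.
    Action: one continuous joint velocity per module.
    """

    n_joints: int = 3
    link_length: float = 0.1              # metres per module (assumed)
    target: Tuple[float, float] = (0.15, 0.1)
    dt: float = 0.05                      # control period in seconds (assumed)
    max_velocity: float = 1.0             # rad/s velocity clip (assumed)
    q: List[float] = field(default_factory=list)

    def reset(self) -> List[float]:
        self.q = [0.0] * self.n_joints    # start fully extended along +x
        return self._observation()

    def end_effector(self) -> Tuple[float, float]:
        # Forward kinematics: each joint angle is relative to the previous link.
        x = y = angle = 0.0
        for qi in self.q:
            angle += qi
            x += self.link_length * math.cos(angle)
            y += self.link_length * math.sin(angle)
        return x, y

    def _observation(self) -> List[float]:
        x, y = self.end_effector()
        return self.q + [self.target[0] - x, self.target[1] - y]

    def step(self, action: List[float]):
        # Integrate clipped joint velocities over one control period.
        for i, v in enumerate(action):
            v = max(-self.max_velocity, min(self.max_velocity, v))
            self.q[i] += v * self.dt
        x, y = self.end_effector()
        dist = math.hypot(self.target[0] - x, self.target[1] - y)
        reward = -dist                    # dense reward: negative distance to target
        done = dist < 0.01                # success threshold (assumed)
        return self._observation(), reward, done


if __name__ == "__main__":
    for n in (3, 4):
        env = PlanarArmEnv(n_joints=n)
        obs = env.reset()
        obs, reward, done = env.step([0.5] * n)
        print(n, len(obs), round(reward, 4), done)
```

Any continuous-action DRL algorithm could be trained against `reset()` and `step()`; the sketch deliberately contains no learner, and it runs as fast as Python allows, so it does not model the simulation speed-up studied in the paper.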
