Noëlie Cherrier

Context-Aware Automated Passenger Counting Data Denoising

Feb 06, 2024

Abstract: Reliable and accurate knowledge of ridership in public transportation networks is crucial for public transport operators and public authorities to understand how their network is used and to optimize the transport offering. Several techniques to estimate ridership exist nowadays, some of them automated. Among them, Automatic Passenger Counting (APC) systems detect passengers entering and leaving the vehicle at each station of its course. However, data resulting from these systems are often noisy or even biased, resulting in under- or overestimation of onboard occupancy. In this work, we propose a denoising algorithm for APC data to improve their robustness and ease their analysis. The proposed approach consists of a constrained integer linear optimization, taking advantage of ticketing data and historical ridership data to further constrain and guide the optimization. Performance is assessed and compared to other denoising methods on several public transportation networks in France, to manual counts available on one of these networks, and on simulated data.
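As a rough illustration of what a constrained integer correction of APC counts can look like, here is a minimal Python sketch. It is not the paper's algorithm: it ignores ticketing and historical data, and it replaces a real integer linear solver with a brute-force search that is only practical for a handful of stops. The function name `denoise_counts`, the `max_shift` parameter, and the two constraints (onboard load never negative, vehicle empty at the end of the course) are assumptions made for the example.

```python
from itertools import product


def denoise_counts(boardings, alightings, max_shift=1):
    """Return ((boardings, alightings), cost) with the smallest total
    integer correction such that the onboard load never goes negative
    and the vehicle is empty after the last stop.

    Hypothetical toy model: each count may move by at most `max_shift`.
    Returns (None, None) if no feasible correction exists in that range.
    """
    n = len(boardings)
    shifts = range(-max_shift, max_shift + 1)
    best, best_cost = None, None

    # Enumerate every combination of integer corrections (3^(2n) for max_shift=1).
    for delta in product(shifts, repeat=2 * n):
        b = [boardings[i] + delta[i] for i in range(n)]
        a = [alightings[i] + delta[n + i] for i in range(n)]
        if min(b) < 0 or min(a) < 0:
            continue  # counts themselves cannot be negative

        load, feasible = 0, True
        for b_i, a_i in zip(b, a):
            load += b_i - a_i
            if load < 0:  # more people left than were on board
                feasible = False
                break
        if not feasible or load != 0:
            continue

        cost = sum(abs(d) for d in delta)  # L1 distance to the raw counts
        if best_cost is None or cost < best_cost:
            best, best_cost = (b, a), cost

    return best, best_cost


# Raw counts where 8 passengers board but 9 alight over the course.
(corrected_b, corrected_a), cost = denoise_counts([5, 3, 0], [0, 4, 5])
```

Several corrections can share the same minimal cost in this toy setting. Additional data sources, such as the ticketing and historical ridership data the abstract mentions, are the kind of information that could break such ties.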

Sim-to-Real Domain Adaptation For High Energy Physics

Dec 17, 2019

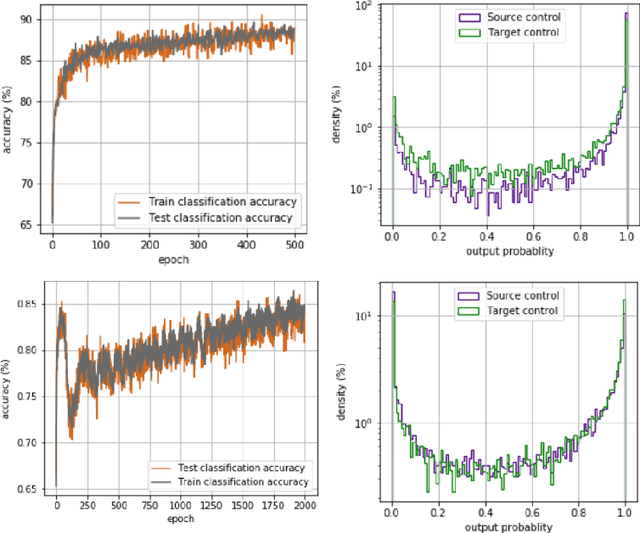

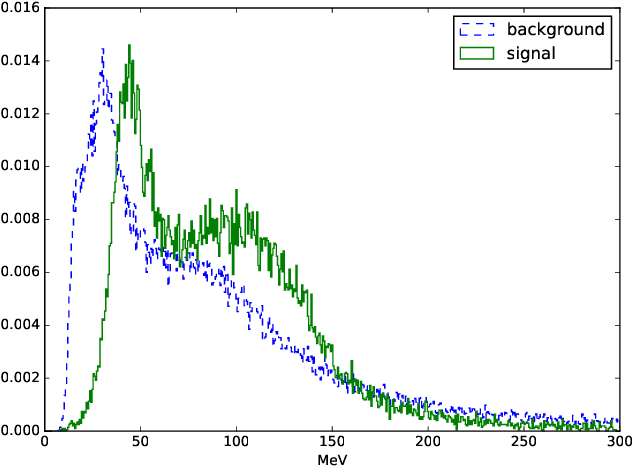

Abstract: Particle physics, or High Energy Physics (HEP), studies the elementary constituents of matter and their interactions with each other. Machine Learning (ML) has played an important role in HEP analysis and has proven extremely successful in this area. Usually, ML algorithms are trained on numerical simulations of the experimental setup and then applied to the real experimental data. However, any discrepancy between the simulation and real data can severely degrade the performance of the algorithm on real data. In this paper, we present an application of domain adaptation using a Domain Adversarial Neural Network trained on public HEP data. We demonstrate the success of this approach in achieving sim-to-real transfer and ensuring consistent performance of the ML algorithms on real and simulated HEP datasets.

Embedded Constrained Feature Construction for High-Energy Physics Data Classification

Dec 17, 2019

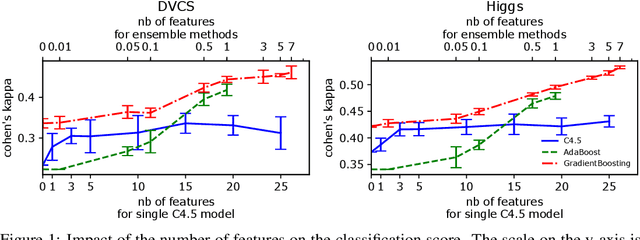

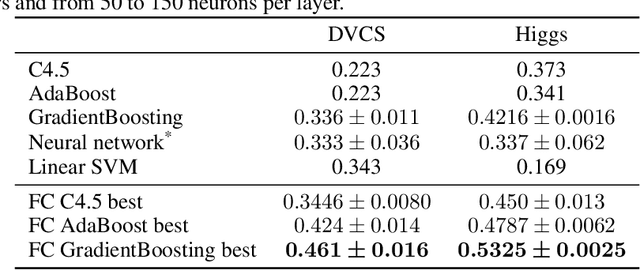

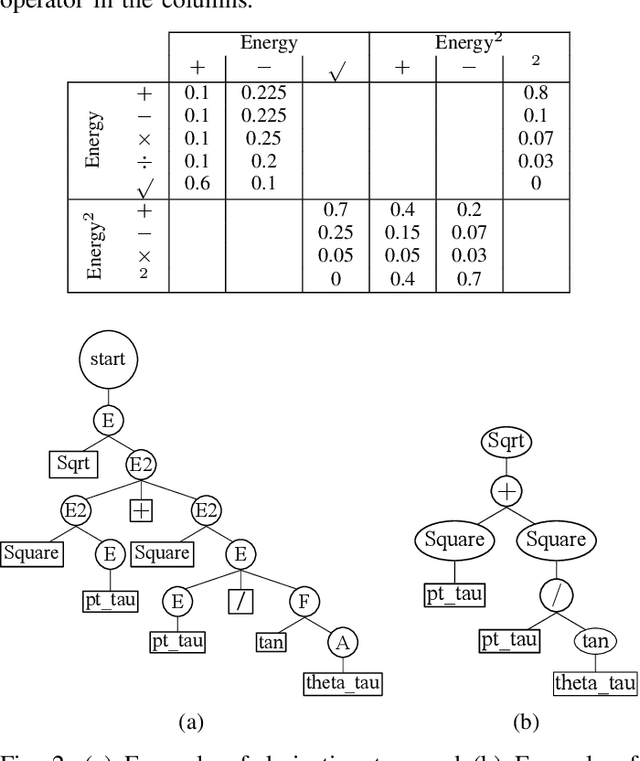

Abstract: Before any publication, data analysis of high-energy physics experiments must be validated. This validation is granted only if a perfect understanding of the data and the analysis process is demonstrated. Therefore, physicists prefer using transparent machine learning algorithms, whose performance relies heavily on the suitability of the provided input features. To transform the feature space, feature construction aims at automatically generating new relevant features. Whereas most previous work in this area performs feature construction prior to model training, we propose here a general framework to embed a feature construction technique adapted to the constraints of high-energy physics in the induction of tree-based models. Experiments on two high-energy physics datasets confirm that a significant gain is obtained on the classification scores, while limiting the number of built features. Since the features are built to be interpretable, the whole model is transparent and readable.

Consistent Feature Construction with Constrained Genetic Programming for Experimental Physics

Aug 17, 2019

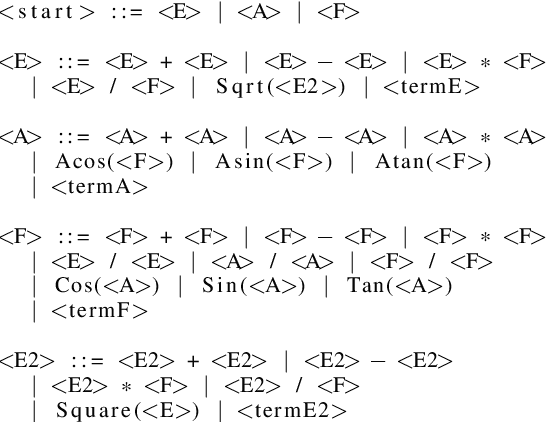

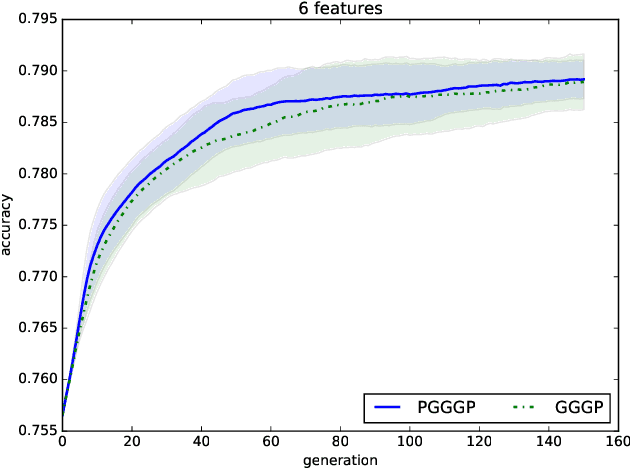

Abstract: A good feature representation is a determining factor in achieving high classification performance for many machine learning algorithms. This is especially true for techniques that do not build complex internal representations of data (e.g. decision trees, in contrast to deep neural networks). To transform the feature space, feature construction techniques build new high-level features from the original ones. Among these techniques, Genetic Programming is a good candidate to provide the interpretable features required for data analysis in high energy physics. Classically, original features or higher-level features based on physics first principles are used as inputs for training. However, physicists would benefit from an automatic and interpretable feature construction for the classification of particle collision events. Our main contribution consists of combining different aspects of Genetic Programming and applying them to feature construction for experimental physics. In particular, to be applicable to physics, dimensional consistency is enforced using grammars. Results of experiments on three physics datasets show that the constructed features can bring a significant gain to the classification accuracy. To the best of our knowledge, it is the first time a method is proposed for interpretable feature construction with units of measurement, and that experts in high-energy physics validate the overall approach as well as the interpretability of the built features.

* Accepted in this version to CEC 2019
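To make the notion of dimensional consistency in the abstract above concrete, here is a minimal Python sketch. The paper enforces consistency through grammars during Genetic Programming; this example instead checks a candidate feature after the fact, which is a simpler stand-in and not the authors' method. The `Dim` class, the `dim_of` function, the tuple-based expression format, and the variable names `E1`, `E2`, and `d` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Dim:
    """Exponents of the base dimensions carried by a quantity."""
    energy: int = 0
    length: int = 0


# Hypothetical input features and their dimensions.
VAR_DIMS = {
    "E1": Dim(energy=1),
    "E2": Dim(energy=1),
    "d": Dim(length=1),
}


def dim_of(expr, var_dims) -> Optional[Dim]:
    """Return the dimension of `expr`, or None if it is inconsistent.

    `expr` is either a variable name or a tuple (op, left, right)
    with op in {"add", "sub", "mul", "div"}.
    """
    if isinstance(expr, str):
        return var_dims[expr]

    op, left, right = expr
    d_left = dim_of(left, var_dims)
    d_right = dim_of(right, var_dims)
    if d_left is None or d_right is None:
        return None  # an inconsistent sub-expression taints the whole tree

    if op in ("add", "sub"):
        # Sums and differences require identical dimensions.
        return d_left if d_left == d_right else None
    if op == "mul":
        return Dim(d_left.energy + d_right.energy,
                   d_left.length + d_right.length)
    if op == "div":
        return Dim(d_left.energy - d_right.energy,
                   d_left.length - d_right.length)
    raise ValueError(f"unknown operator: {op}")


# An energy ratio is dimensionless; adding an energy to a length is rejected.
ratio_dim = dim_of(("div", "E1", "E2"), VAR_DIMS)
invalid = dim_of(("add", "E1", "d"), VAR_DIMS)
```

A grammar-based approach, as named in the abstract, would prevent expressions like `("add", "E1", "d")` from being generated at all, rather than filtering them afterwards.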

Ionospheric activity prediction using convolutional recurrent neural networks

Nov 06, 2018

Abstract: The electromagnetic activity of the ionosphere is a major factor in the quality of satellite telecommunications, Global Navigation Satellite Systems (GNSS) and other vital space applications. Being able to forecast the Total Electron Content (TEC) globally would enable better anticipation of potential performance degradations. A few studies have proposed models that predict the TEC locally, but most do not do so worldwide. Thanks to a large, publicly available record of past TEC maps, we propose a method based on Deep Neural Networks (DNN) to forecast a sequence of global TEC maps following an input sequence of TEC maps, without introducing any prior knowledge other than Earth rotation periodicity. By combining several state-of-the-art architectures, the proposed approach is competitive with previous works on TEC forecasting while predicting the TEC globally.
