Nishchal K. Verma

On Designing Features for Condition Monitoring of Rotating Machines

Feb 15, 2024

Abstract:Various methods for designing input features have been proposed for fault recognition in rotating machines using one-dimensional raw sensor data. The available methods are complex, rely on empirical approaches, and may differ depending on the condition monitoring data used. Therefore, this article proposes a novel algorithm to design input features that unifies the feature extraction process for different time-series sensor data. This new insight for designing/extracting input features is obtained through the lens of histogram theory. The proposed algorithm extracts discriminative input features, which are suitable for classifiers ranging from simple ones to deep neural network-based ones. The designed input features are given as input to the classifier with end-to-end training in a single framework for machine condition recognition. The proposed scheme has been validated on three real-time datasets: a) an acoustic dataset, b) the CWRU vibration dataset, and c) the IMS vibration dataset. The real-time results and comparative study show the effectiveness of the proposed scheme for the prediction of the machine's health states.
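To make the histogram idea concrete, the following is a minimal sketch of turning a one-dimensional signal into a fixed-length feature vector of normalized bin counts. It is not the algorithm proposed in the paper; the function name `histogram_features`, the bin count, and the amplitude range `[lo, hi]` are all assumptions chosen for illustration.

```python
def histogram_features(signal, n_bins=8, lo=-1.0, hi=1.0):
    """Return normalized bin counts of `signal` over the range [lo, hi].

    Hypothetical helper for illustration only; the bin count and range
    are assumptions, not values taken from the paper.
    """
    if n_bins <= 0 or hi <= lo:
        raise ValueError("need n_bins > 0 and hi > lo")
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for x in signal:
        idx = int((x - lo) / width)
        # Clamp out-of-range samples (and x == hi) into the edge bins.
        idx = min(max(idx, 0), n_bins - 1)
        counts[idx] += 1
    total = len(signal)
    if total == 0:
        return [0.0] * n_bins
    return [c / total for c in counts]


if __name__ == "__main__":
    frame = [-1.0, -0.9, 0.0, 0.9, 1.0]
    print(histogram_features(frame, n_bins=2))
```

Because the output length depends only on `n_bins`, frames of different lengths or from different sensors map to vectors of the same size, which is what allows a single classifier input layer to be reused.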

A Novel Vision Transformer with Residual in Self-attention for Biomedical Image Classification

Jun 05, 2023

Abstract:Biomedical image classification requires capturing bio-informatics based on specific feature distributions. In most such applications, the main challenges are the limited availability of samples for diseased cases and the imbalanced nature of the dataset. This article presents a novel framework of multi-head self-attention for the vision transformer (ViT), which makes it capable of capturing the specific image features for classification and analysis. The proposed method uses the concept of residual connection for accumulating the best attention output in each block of multi-head attention. The proposed framework has been evaluated on two small datasets: (i) a blood cell classification dataset and (ii) brain tumor detection using brain MRI images. The results show a significant improvement over the traditional ViT and other convolution-based state-of-the-art classification models.

Guided Sampling-based Evolutionary Deep Neural Network for Intelligent Fault Diagnosis

Nov 12, 2021

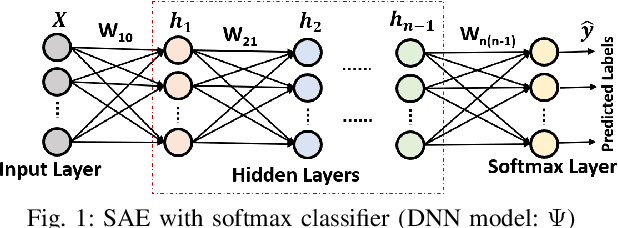

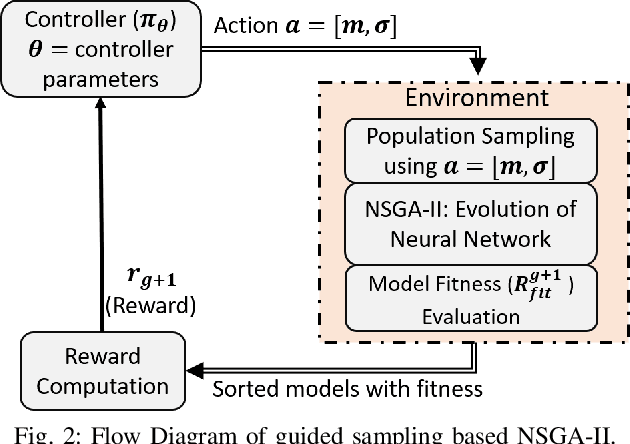

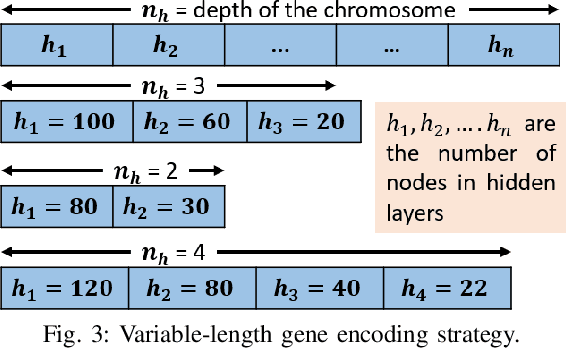

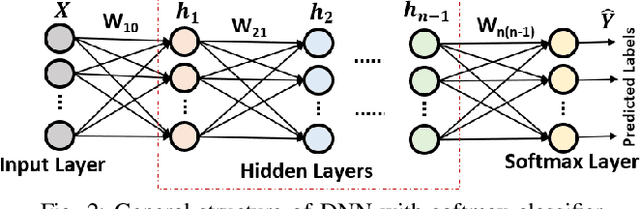

Abstract:The diagnostic performance of most deep learning models is greatly affected by the selection of the model architecture and its hyperparameters. The main challenges in model selection methodologies are the design of the architecture optimizer and the model evaluation strategy. In this paper, we propose a novel framework of evolutionary deep neural network which uses policy gradient to guide the evolution of the DNN architecture towards maximum diagnostic accuracy. We have formulated a policy gradient-based controller which generates an action to sample the new model architecture at every generation. The best fitness obtained is used as a reward to update the policy parameters. Also, the best model obtained is transferred to the next generation for quick model evaluation in the NSGA-II evolutionary framework. Thus, the algorithm gets the benefits of fast non-dominated sorting as well as quick model evaluation. The effectiveness of the proposed framework has been validated on three datasets: the Air Compressor dataset, the Case Western Reserve University dataset, and the Paderborn University dataset.
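As a rough illustration of the non-dominated sorting step that NSGA-II relies on, the sketch below ranks candidate solutions into Pareto fronts. It assumes every objective is minimized, and the example objectives (validation error, model size) are hypothetical; it does not reproduce the paper's controller or fitness evaluation.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` everywhere and better somewhere
    (all objectives are assumed to be minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def non_dominated_sort(points):
    """Return a list of fronts, each a list of indices into `points`."""
    n = len(points)
    dominated = [[] for _ in range(n)]  # indices that point i dominates
    counts = [0] * n                    # how many points dominate point i
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated[i].append(j)
            elif dominates(points[j], points[i]):
                counts[i] += 1

    fronts = []
    current = [i for i in range(n) if counts[i] == 0]
    while current:
        fronts.append(current)
        nxt = []
        for i in current:
            for j in dominated[i]:
                counts[j] -= 1
                if counts[j] == 0:  # only dominated by earlier fronts
                    nxt.append(j)
        current = nxt
    return fronts


if __name__ == "__main__":
    # Hypothetical (validation error, model size) pairs for five candidates.
    candidates = [(1, 5), (2, 2), (5, 1), (3, 3), (6, 6)]
    print(non_dominated_sort(candidates))
```

The first front holds the candidates that no other candidate beats on every objective; later fronts are what remains after removing the earlier ones.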

Transfer Learning based Evolutionary Deep Neural Network for Intelligent Fault Diagnosis

Sep 28, 2021

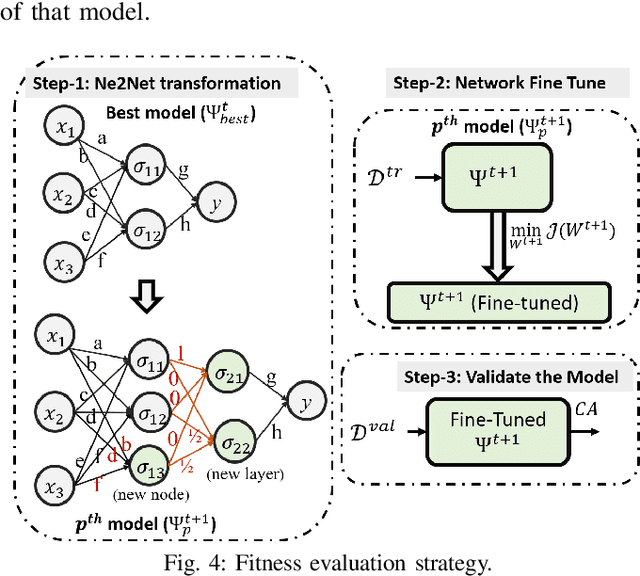

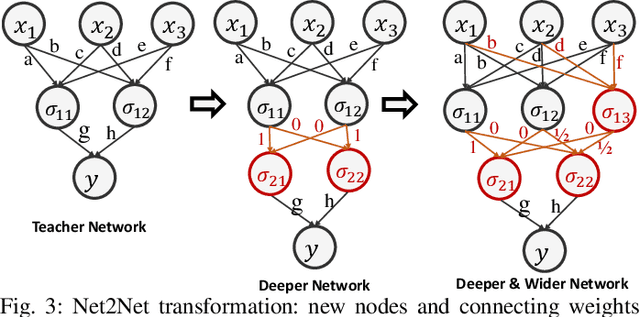

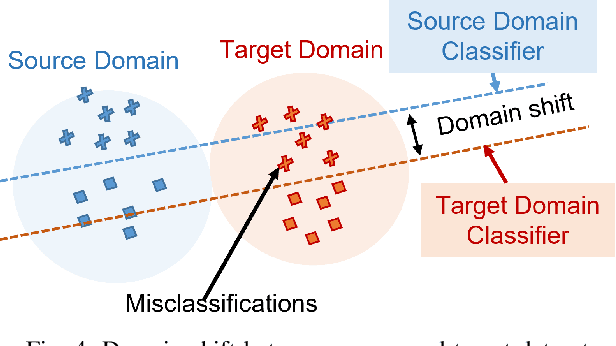

Abstract:The performance of a deep neural network (DNN) for fault diagnosis is very much dependent on the network architecture. Also, the diagnostic performance is reduced if the model trained on a laboratory case machine is used on a test dataset from an industrial machine running under variable operating conditions. Thus there are two challenges for the intelligent fault diagnosis of industrial machines: (i) selection of a suitable DNN architecture and (ii) domain adaptation for the change in operating conditions. Therefore, we propose an evolutionary Net2Net transformation (EvoNet2Net) that finds the most suitable DNN architecture for the given dataset. Nondominated sorting genetic algorithm II has been used to optimize the depth and width of the DNN architecture. We have formulated a transfer learning-based fitness evaluation scheme for faster evolution. It uses the concept of domain adaptation for quick learning of the data pattern in the target domain. Also, we have introduced a hybrid crossover technique for optimization of the depth and width of the deep neural network encoded in a chromosome. We have used the Case Western Reserve University dataset and the Paderborn University dataset to demonstrate the effectiveness of the proposed framework for the selection of the most suitable architecture capable of excellent diagnostic performance, with classification accuracy of almost 100%.

Quick Learning Mechanism with Cross-Domain Adaptation for Intelligent Fault Diagnosis

Mar 16, 2021

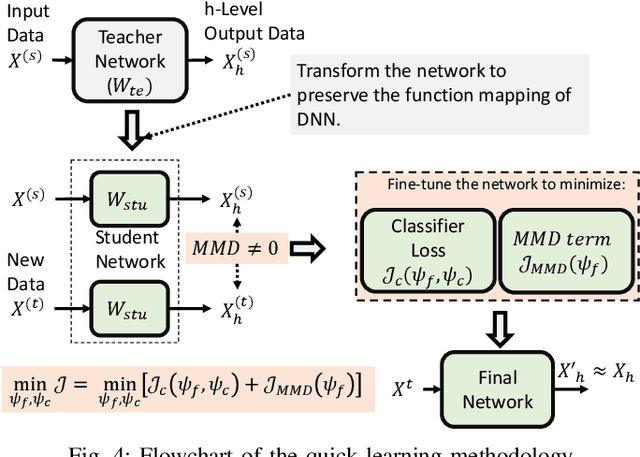

Abstract:This paper presents a quick learning mechanism for intelligent fault diagnosis of rotating machines operating under changeable working conditions. Since real case machines in industries run under different operating conditions, the deep learning model trained for a laboratory case machine fails to perform well for fault diagnosis using recorded data from real case machines. This creates the need to train a new diagnostic model for the fault diagnosis of the real case machine under every new working condition. Therefore, there is a need for a mechanism that can quickly transform the existing diagnostic model for machines operating under different conditions. We propose a quick learning method with Net2Net transformation followed by a fine-tuning method to cancel/minimize the maximum mean discrepancy of the new data to the previous one. This transformation enables us to create a new network with any architecture almost ready to be used for the new dataset. The effectiveness of the proposed fault diagnosis method has been demonstrated on the CWRU dataset, the IMS bearing dataset, and the Paderborn University dataset. We have shown that the diagnostic model trained for CWRU data at zero load can be used to quickly train another diagnostic model for the CWRU data at different loads and also for the IMS dataset. Using the dataset provided by Paderborn University, it has been validated that the diagnostic model trained on the artificially damaged fault dataset can be used for quickly training another model for the real damage dataset.
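For readers unfamiliar with the maximum mean discrepancy (MMD) mentioned above, here is a minimal sketch of the standard biased estimate of squared MMD between two sample sets. The RBF kernel and the `gamma` value are assumptions made for illustration; the abstract does not state which kernel or estimator the paper uses.

```python
import math


def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel between two equal-length vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)


def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD between samples `xs` and `ys`.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 E[k(x, y)]
    The kernel choice and `gamma` are illustrative assumptions.
    """
    def mean_kernel(us, vs):
        total = sum(rbf_kernel(u, v, gamma) for u in us for v in vs)
        return total / (len(us) * len(vs))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


if __name__ == "__main__":
    source = [[0.0], [1.0]]   # e.g. features from one working condition
    target = [[5.0], [6.0]]   # e.g. features from another condition
    print(mmd_squared(source, source))  # identical samples
    print(mmd_squared(source, target))  # shifted samples
```

A value near zero indicates that the two feature distributions are hard to tell apart under the chosen kernel, which is why a fine-tuning objective can use MMD as a penalty to pull source and target features together.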

Improved Adaptive Type-2 Fuzzy Filter with Exclusively Two Fuzzy Membership Function for Filtering Salt and Pepper Noise

Aug 10, 2020

Abstract:Image denoising is one of the preliminary steps in image processing, since the presence of noise can deteriorate image quality. To overcome this limitation, this paper proposes an improved two-stage fuzzy filter for removing salt and pepper noise from images. In the first stage, the pixels in the image are categorized as good or noisy based on adaptive thresholding using type-2 fuzzy logic with exclusively two different membership functions in the filter window. In the second stage, the noisy pixels are denoised using modified ordinary fuzzy logic in the respective filter window. The proposed filter is validated on standard images with various noise levels. The proposed filter removes the noise and preserves useful image characteristics, i.e., edges and corners, at higher noise levels. The performance of the proposed filter is compared with various state-of-the-art methods in terms of peak signal-to-noise ratio and computation time. To show the effectiveness of the filter, statistical tests, i.e., the Friedman test and the Bonferroni-Dunn (BD) test, are also carried out, which clearly ascertain that the proposed filter outperforms various filtering approaches.
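The two-stage structure (detect noisy pixels, then repair only those) can be sketched with a much simpler stand-in: a plain switching median filter. This is not the type-2 fuzzy filter described in the abstract. The detection rule (a pixel is noisy if it equals the extreme values 0 or 255) and the 3x3 window are assumptions made for illustration.

```python
from statistics import median_low


def switching_median_filter(img, low=0, high=255):
    """Two-stage salt-and-pepper filter on a 2-D list of grey levels.

    Stage 1 flags pixels equal to `low` or `high` as noisy (a crude
    stand-in for fuzzy thresholding). Stage 2 replaces each flagged
    pixel with the median of the unflagged pixels in its 3x3 window.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(h):
        for c in range(w):
            if img[r][c] not in (low, high):
                continue  # judged good, left unchanged
            good = [img[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, h))
                    for cc in range(max(c - 1, 0), min(c + 2, w))
                    if img[rr][cc] not in (low, high)]
            if good:  # keep the pixel if no clean neighbour exists
                out[r][c] = median_low(good)
    return out


if __name__ == "__main__":
    noisy = [[100, 100, 100],
             [100, 255, 100],
             [100,   0, 100]]
    for row in switching_median_filter(noisy):
        print(row)
```

Leaving good pixels untouched is what lets this family of filters preserve edges better than a median applied to every pixel.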

mRMR-DNN with Transfer Learning for Intelligent Fault Diagnosis of Rotating Machines

Dec 25, 2019

Abstract:In recent years, intelligent condition-based monitoring of rotary machinery systems has become a major research focus of machine fault diagnosis. In condition-based monitoring, it is challenging to form a large-scale well-annotated dataset due to the expense of data acquisition and costly annotation. Along with that, the generated data have a large number of redundant features, which degrade the performance of the machine learning models. To overcome this, we have utilized the advantages of minimum redundancy maximum relevance (mRMR) and transfer learning with a deep learning model. In this work, mRMR is combined with deep learning and deep transfer learning frameworks to improve the fault diagnostic performance in terms of accuracy and computational complexity. The mRMR reduces the redundant information in the data and increases the deep learning performance, whereas transfer learning reduces the dependency on a large amount of data for training the model. In the proposed work, two frameworks, i.e., mRMR with deep learning and mRMR with deep transfer learning, have been explored and validated on the CWRU and IMS rolling element bearing datasets. The analysis shows that the proposed frameworks are able to obtain better diagnostic accuracy in comparison with existing methods and are also able to handle data with a large number of features more quickly.

An Entropy-based Variable Feature Weighted Fuzzy k-Means Algorithm for High Dimensional Data

Dec 24, 2019

Abstract:This paper presents a new fuzzy k-means algorithm for the clustering of high dimensional data in various subspaces. In the case of high dimensional data, some features might be irrelevant, and the relevant ones may have different significance in the clustering. For a better clustering, it is crucial to incorporate the contribution of these features in the clustering process. To combine these features, in this paper, we have proposed a new fuzzy k-means clustering algorithm in which the objective function of the fuzzy k-means is modified using two different entropy terms. The first entropy term helps to minimize the within-cluster dispersion and maximize the negative entropy to determine clusters to contribute to the association of data points. The second entropy term helps to control the weight of the features because different features have different contributing weights in the clustering process for obtaining a better partition of the data. The efficacy of the proposed method is presented in terms of various clustering measures on multiple datasets and compared with various state-of-the-art methods.
