Niloofar Ranjbar

Lotus at SemEval-2025 Task 11: RoBERTa with Llama-3 Generated Explanations for Multi-Label Emotion Classification

Feb 28, 2025

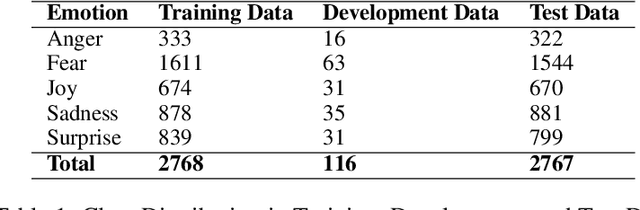

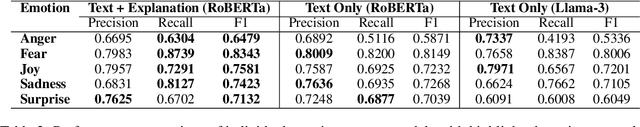

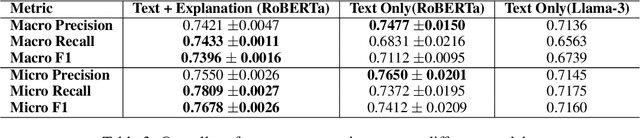

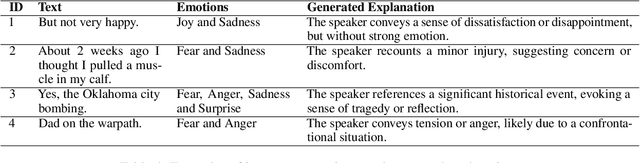

Abstract: This paper presents a novel approach for multi-label emotion detection, where Llama-3 is used to generate explanatory content that clarifies ambiguous emotional expressions, thereby enhancing RoBERTa's emotion classification performance. By incorporating explanatory context, our method improves F1-scores, particularly for emotions like fear, joy, and sadness, and outperforms text-only models. The addition of explanatory content helps resolve ambiguity, addresses challenges like overlapping emotional cues, and enhances multi-label classification, marking a significant advancement in emotion detection tasks.

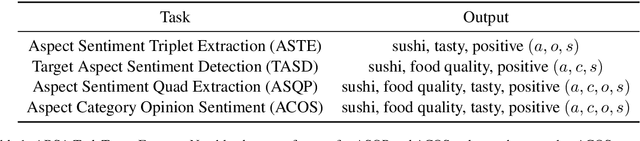

E2TP: Element to Tuple Prompting Improves Aspect Sentiment Tuple Prediction

May 10, 2024

Abstract: Generative approaches have significantly influenced Aspect-Based Sentiment Analysis (ABSA), garnering considerable attention. However, existing studies often predict target text components monolithically, neglecting the benefits of utilizing single elements for tuple prediction. In this paper, we introduce Element to Tuple Prompting (E2TP), employing a two-step architecture. The first step focuses on predicting single elements, while the second step completes the process by mapping these predicted elements to their corresponding tuples. E2TP is inspired by human problem-solving: it breaks a task down into manageable parts and uses the first step's output as a guide in the second step. Within this strategy, three types of paradigms, namely E2TP($diet$), E2TP($f_1$), and E2TP($f_2$), are designed to facilitate the training process. Beyond in-domain task-specific experiments, our paper addresses cross-domain scenarios, demonstrating the effectiveness and generalizability of the approach. By conducting a comprehensive analysis on various benchmarks, we show that E2TP achieves new state-of-the-art results in nearly all cases.

Explaining Recommendation System Using Counterfactual Textual Explanations

Mar 14, 2023

Abstract: A significant amount of current research in artificial intelligence aims to improve the explainability and interpretability of deep learning models. End-users find it easier to trust a system when they understand why it produced a given output. Recommender systems are one example where considerable effort has gone into making the output more explainable. One method for producing a more explainable output is counterfactual reasoning, which alters a minimal set of features to generate a counterfactual item that changes the output of the system. This process identifies the input features that have a significant impact on the desired output, leading to effective explanations. In this paper, we present a method for generating counterfactual explanations for both tabular and textual features. We evaluated the performance of our proposed method on three real-world datasets and demonstrated a 5% improvement in finding effective features (based on model-based measures) compared to the baseline method.
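The counterfactual idea described in this abstract, changing as few features as possible until the system's output changes, can be illustrated with a small sketch. This is not the paper's method: the scoring function, feature names, weights, and threshold below are all hypothetical, features are assumed to be binary, and the search is a plain brute force over feature subsets of increasing size.

```python
from itertools import combinations

# Hypothetical integer weights for a toy recommender (assumption, not from the paper).
WEIGHTS = {"genre_match": 5, "price_low": 2, "review_positive": 4}


def score(features):
    """Toy stand-in for a recommender: weighted sum of binary features."""
    return sum(WEIGHTS[name] * value for name, value in features.items())


def recommended(features, threshold=7):
    """The item is recommended when its score reaches the threshold."""
    return score(features) >= threshold


def minimal_counterfactual(features, threshold=7):
    """Find the smallest set of feature flips that changes the output.

    Subsets are tried in order of increasing size, so the first hit
    uses the fewest possible changes.
    """
    original = recommended(features, threshold)
    names = sorted(features)
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            candidate = dict(features)
            for name in subset:
                candidate[name] = 1 - candidate[name]  # flip a binary feature
            if recommended(candidate, threshold) != original:
                return subset, candidate
    return None


item = {"genre_match": 1, "price_low": 1, "review_positive": 1}
changed, counterfactual = minimal_counterfactual(item)
print("Flipped features:", changed)
print("Counterfactual item:", counterfactual)
```

In this toy setting, the flipped features play the role of the explanation: they are the inputs whose change is enough to alter the recommendation.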

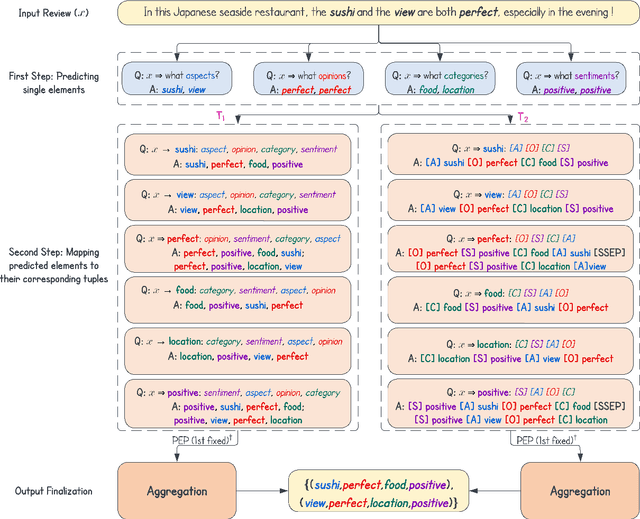

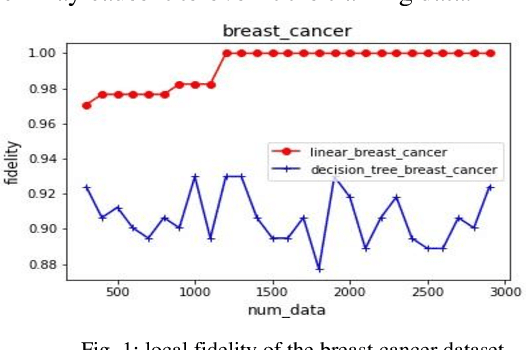

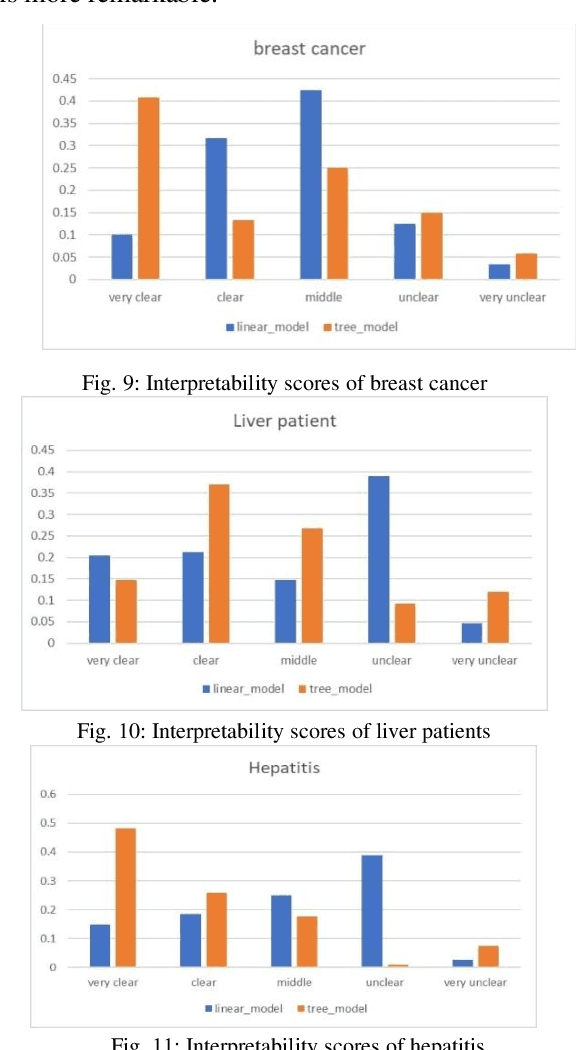

Using Decision Tree as Local Interpretable Model in Autoencoder-based LIME

Apr 07, 2022

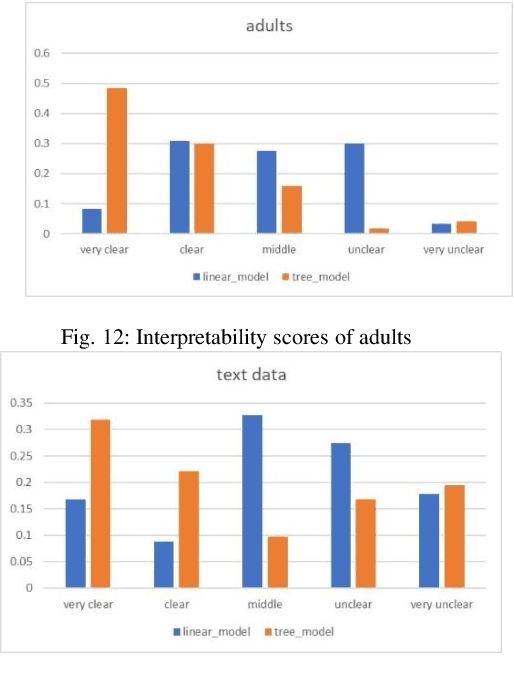

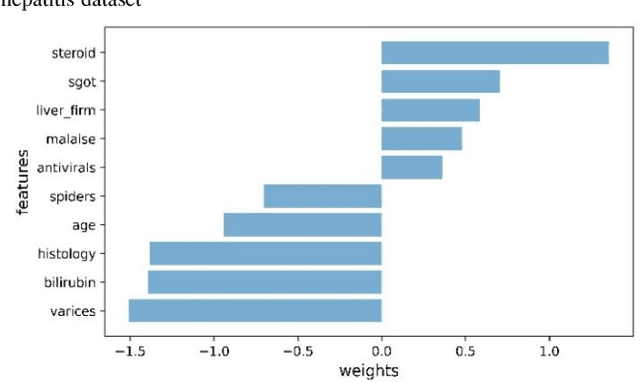

Abstract: Deep neural networks are now used in many domains because of their high accuracy. However, they are considered "black boxes", meaning that they are not explainable to humans. In domains such as medicine, economics, and self-driving cars, users want the model to be interpretable so they can decide whether to trust its results. In this work, we present a modified version of an autoencoder-based approach for local interpretability called ALIME. ALIME itself is inspired by a well-known method called Local Interpretable Model-agnostic Explanations (LIME). LIME produces an explanation for a single instance by generating new data around the instance and training a local linear interpretable model. ALIME uses an autoencoder to weigh the new data around the sample. Nevertheless, ALIME uses a linear model as the locally trained interpretable model, just like LIME. This work proposes a new approach that uses a decision tree instead of the linear model as the interpretable model. We evaluate the proposed model in terms of stability, local fidelity, and interpretability on different datasets. Compared to ALIME, the experiments show significant results on stability and local fidelity and improved results on interpretability.
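The local-surrogate procedure outlined in this abstract (sample around an instance, weight the samples by proximity, fit an interpretable model) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the paper's implementation: `black_box` is a hypothetical model, the autoencoder-based weighting of ALIME is replaced by a plain Gaussian kernel on raw features, and the decision tree is reduced to a depth-1 regression stump.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: a step function of the first feature."""
    return 1.0 if x[0] > 0.5 else 0.0


def perturb(instance, n_samples, scale, rng):
    """Generate new data points around the instance with Gaussian noise."""
    return [[v + rng.gauss(0.0, scale) for v in instance] for _ in range(n_samples)]


def kernel_weight(sample, instance, width):
    """Gaussian proximity weight (stand-in for ALIME's autoencoder weighting)."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(sample, instance))
    return math.exp(-dist_sq / (width ** 2))


def weighted_mean(values, weights):
    total = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total if total > 0 else 0.0


def fit_stump(samples, targets, weights):
    """Fit a depth-1 regression tree by minimizing weighted squared error."""
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            left = [i for i, s in enumerate(samples) if s[feature] <= threshold]
            right = [i for i, s in enumerate(samples) if s[feature] > threshold]
            if not left or not right:
                continue
            error, leaves = 0.0, []
            for side in (left, right):
                mean = weighted_mean([targets[i] for i in side],
                                     [weights[i] for i in side])
                leaves.append(mean)
                error += sum(weights[i] * (targets[i] - mean) ** 2 for i in side)
            if best is None or error < best[0]:
                best = (error, feature, threshold, leaves[0], leaves[1])
    return best[1:]


rng = random.Random(0)
instance = [0.4, 0.3]
samples = perturb(instance, n_samples=200, scale=0.3, rng=rng)
targets = [black_box(s) for s in samples]
weights = [kernel_weight(s, instance, width=0.75) for s in samples]

feature, threshold, left_value, right_value = fit_stump(samples, targets, weights)
print(f"if x[{feature}] <= {threshold:.3f}: predict {left_value:.2f}")
print(f"else: predict {right_value:.2f}")
```

The fitted rule is itself the explanation: unlike a linear surrogate, a tree can express a threshold effect such as the step in this toy model directly.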
