Nikola Janjušević

Self-Supervised Noise Adaptive MRI Denoising via Repetition to Repetition (Rep2Rep) Learning

Apr 24, 2025

Abstract:Purpose: This work proposes a novel self-supervised noise-adaptive image denoising framework, called Repetition to Repetition (Rep2Rep) learning, for low-field (<1T) MRI applications. Methods: Rep2Rep learning extends the Noise2Noise framework by training a neural network on two repeated MRI acquisitions, using one repetition as input and another as target, without requiring ground-truth data. It incorporates noise-adaptive training, enabling denoising generalization across varying noise levels and flexible inference with any number of repetitions. Performance was evaluated on both synthetic noisy brain MRI and 0.55T prostate MRI data, and compared against supervised learning and Monte Carlo Stein's Unbiased Risk Estimator (MC-SURE). Results: Rep2Rep learning outperforms MC-SURE on both synthetic and 0.55T MRI datasets. On synthetic brain data, it achieved denoising quality comparable to supervised learning and surpassed MC-SURE, particularly in preserving structural details and reducing residual noise. On the 0.55T prostate MRI dataset, a reader study showed radiologists preferred Rep2Rep-denoised 2-average images over 8-average noisy images. Rep2Rep demonstrated robustness to noise-level discrepancies between training and inference, supporting its practical implementation. Conclusion: Rep2Rep learning offers effective self-supervised denoising for low-field MRI by leveraging routinely acquired multi-repetition data. Its noise-adaptivity enables generalization to different SNR regimes without clean reference images. This makes Rep2Rep learning a promising tool for improving image quality and scan efficiency in low-field MRI.
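The Noise2Noise idea that Rep2Rep extends can be illustrated with a toy example. The sketch below is not the authors' implementation: it assumes a one-parameter linear "denoiser" `a * y`, a zero-mean Gaussian signal, and independent Gaussian noise in each repetition, and every name in it is hypothetical. It suggests that fitting against a second noisy repetition yields nearly the same parameter as fitting against the clean signal.

```python
import numpy as np

def fit_scale(inputs, targets):
    """Least-squares scalar a minimizing ||a * inputs - targets||^2."""
    return float(np.dot(inputs, targets) / np.dot(inputs, inputs))

rng = np.random.default_rng(0)
n, sigma = 200_000, 0.5                    # assumed sample count and noise level
x = rng.standard_normal(n)                 # clean signal (unavailable in practice)
y1 = x + sigma * rng.standard_normal(n)    # repetition 1: network input
y2 = x + sigma * rng.standard_normal(n)    # repetition 2: independent noise, used as target

a_supervised = fit_scale(y1, x)   # trained against the clean target
a_rep2rep = fit_scale(y1, y2)     # trained against the other repetition
print(a_supervised, a_rep2rep)
```

Because the noise in the target repetition is zero-mean and independent of the input, it only adds a constant to the expected squared error, so both fits approach the same minimizer (here 1 / (1 + sigma^2) = 0.8).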

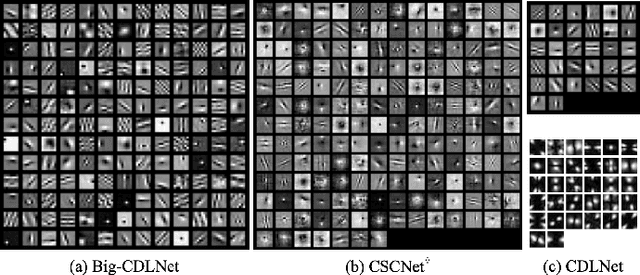

Fast and Interpretable Nonlocal Neural Networks for Image Denoising via Group-Sparse Convolutional Dictionary Learning

Jun 02, 2023

Abstract:Nonlocal self-similarity within natural images has become an increasingly popular prior in deep-learning models. Despite their successful image restoration performance, such models remain largely uninterpretable due to their black-box construction. Our previous studies have shown that interpretable construction of a fully convolutional denoiser (CDLNet), with performance on par with state-of-the-art black-box counterparts, is achievable by unrolling a dictionary learning algorithm. In this manuscript, we seek an interpretable construction of a convolutional network with a nonlocal self-similarity prior that performs on par with black-box nonlocal models. We show that such an architecture can be effectively achieved by upgrading the $\ell_1$ sparsity prior of CDLNet to a weighted group-sparsity prior. From this formulation, we propose a novel sliding-window nonlocal operation, enabled by sparse array arithmetic. In addition to competitive performance with black-box nonlocal DNNs, we demonstrate the proposed sliding-window sparse attention enables inference speeds greater than an order of magnitude faster than its competitors.
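To make the step from an $\ell_1$ prior to a weighted group-sparsity prior concrete, here is a minimal sketch of a group soft-thresholding operator. It is an illustration under assumptions, not the paper's operator: the dense `weights` similarity matrix, the function name, and the `eps` safeguard are all hypothetical, and the paper's sliding-window sparse-array implementation is not reproduced.

```python
import numpy as np

def group_soft_threshold(z, weights, tau, eps=1e-12):
    """Shrink coefficients using a weighted energy pooled over similar pixels.

    z:       (channels, n) coefficient array over n pixels
    weights: (n, n) nonnegative similarity matrix (assumed; rows weight neighbors)
    tau:     threshold level
    """
    # energy[c, i] = sqrt(sum_j weights[i, j] * z[c, j]**2)
    energy = np.sqrt((z ** 2) @ weights.T)
    # Shrink each coefficient by a factor set by its group's energy.
    scale = np.maximum(0.0, 1.0 - tau / (energy + eps))
    return z * scale

z = np.array([[3.0, -0.5, 0.0, 2.0]])
# With identity weights each pixel is its own group, which reduces to
# ordinary elementwise soft-thresholding.
out_identity = group_soft_threshold(z, np.eye(4), tau=1.0)
```

With non-identity weights, a small coefficient surrounded by similar pixels with large coefficients survives thresholding, which is the intuition behind using self-similarity as a prior.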

Gabor is Enough: Interpretable Deep Denoising with a Gabor Synthesis Dictionary Prior

Apr 23, 2022

Abstract:Image processing neural networks, natural and artificial, have a long history with orientation-selectivity, often described mathematically as Gabor filters. Gabor-like filters have been observed in the early layers of CNN classifiers and even throughout low-level image processing networks. In this work, we take this observation to the extreme and explicitly constrain the filters of a natural-image denoising CNN to be learned 2D real Gabor filters. Surprisingly, we find that the proposed network (GDLNet) can achieve near state-of-the-art denoising performance amongst popular fully convolutional neural networks, with only a fraction of the learned parameters. We further verify that this parameterization maintains the noise-level generalization (training vs. inference mismatch) characteristics of the base network, and investigate the contribution of individual Gabor filter parameters to the performance of the denoiser. We present positive findings for the interpretation of dictionary learning networks as performing accelerated sparse-coding via the importance of untied learned scale parameters between network layers. Our network's success suggests that representations used by low-level image processing CNNs can be as simple and interpretable as Gabor filterbanks.
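As a rough illustration of what constraining filters to 2D real Gabor functions means, the sketch below builds one such kernel as a Gaussian envelope multiplied by a cosine carrier. The parameter names (`a` for per-axis scale, `omega` for frequency, `psi` for phase, `alpha` for amplitude) and the exact functional form are assumptions for illustration and may differ from the parameterization used in GDLNet.

```python
import numpy as np

def gabor_kernel(size, a, omega, psi, alpha=1.0):
    """Real 2D Gabor filter on an odd-sized square grid centered at zero.

    g(y, x) = alpha * exp(-((a[0]*y)**2 + (a[1]*x)**2)) * cos(omega[0]*y + omega[1]*x + psi)
    """
    coords = np.arange(size) - size // 2
    yy, xx = np.meshgrid(coords, coords, indexing="ij")
    envelope = np.exp(-((a[0] * yy) ** 2 + (a[1] * xx) ** 2))  # Gaussian window
    carrier = np.cos(omega[0] * yy + omega[1] * xx + psi)      # oriented sinusoid
    return alpha * envelope * carrier

# A 7x7 kernel oscillating along the horizontal axis: 6 parameters instead of 49 free weights.
kernel = gabor_kernel(7, a=(0.4, 0.4), omega=(0.0, 1.5), psi=0.0)
```

In this form each filter is described by a handful of scalars regardless of kernel size, which is one way to see how a Gabor-constrained network can use only a fraction of the learned parameters.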

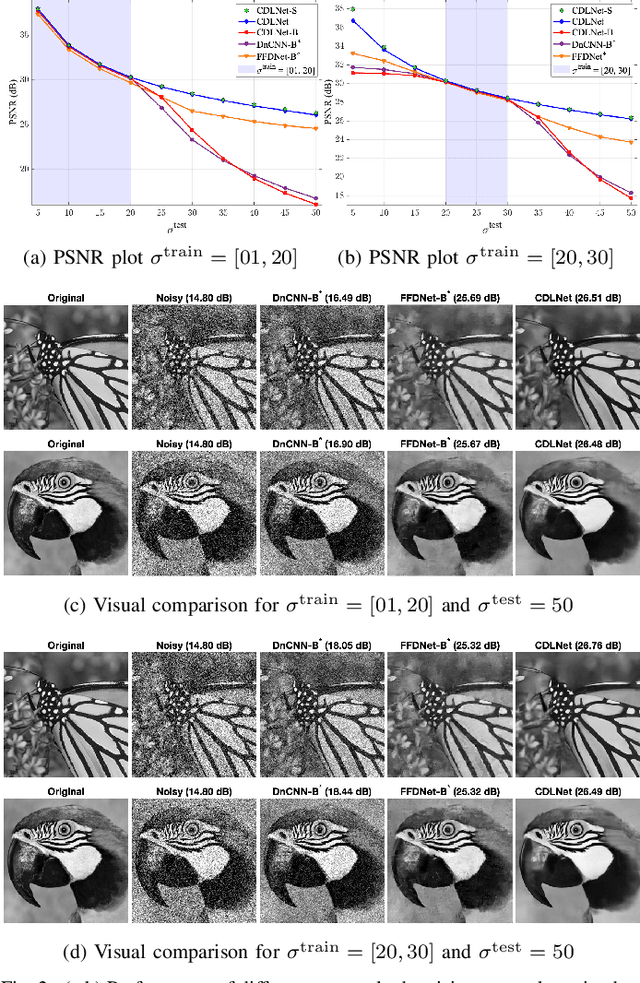

CDLNet: Noise-Adaptive Convolutional Dictionary Learning Network for Blind Denoising and Demosaicing

Dec 08, 2021

Abstract:Deep learning based methods hold state-of-the-art results in low-level image processing tasks, but remain difficult to interpret due to their black-box construction. Unrolled optimization networks present an interpretable alternative to constructing deep neural networks by deriving their architecture from classical iterative optimization methods without use of tricks from the standard deep learning tool-box. So far, such methods have demonstrated performance close to that of state-of-the-art models while using their interpretable construction to achieve a comparably low learned parameter count. In this work, we propose an unrolled convolutional dictionary learning network (CDLNet) and demonstrate its competitive denoising and joint denoising and demosaicing (JDD) performance both in low and high parameter count regimes. Specifically, we show that the proposed model outperforms state-of-the-art fully convolutional denoising and JDD models when scaled to a similar parameter count. In addition, we leverage the model's interpretable construction to propose a noise-adaptive parameterization of thresholds in the network that enables state-of-the-art blind denoising performance, and near perfect generalization on noise-levels unseen during training. Furthermore, we show that such performance extends to the JDD task and unsupervised learning.
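One simple way to picture a noise-adaptive parameterization of thresholds is to make each soft-threshold an affine function of the noise level. The sketch below assumes the form `tau = tau0 + tau1 * sigma` with hypothetical scalar values; in a trained network these would be learned, possibly per layer and per channel, and the exact form used in CDLNet may differ.

```python
import numpy as np

def soft_threshold(z, tau):
    """Elementwise soft-thresholding: shrink magnitudes by tau, zeroing small entries."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def adaptive_threshold(tau0, tau1, sigma):
    """Assumed affine noise-adaptive threshold; tau0 and tau1 stand in for learned values."""
    return tau0 + tau1 * sigma

z = np.array([-2.0, -0.3, 0.1, 1.5])
low_noise = soft_threshold(z, adaptive_threshold(0.1, 1.0, sigma=0.1))   # tau = 0.2
high_noise = soft_threshold(z, adaptive_threshold(0.1, 1.0, sigma=0.9))  # tau = 1.0
```

The same weights then shrink coefficients more aggressively as the estimated noise level rises, which is the mechanism that lets a single model operate across noise levels.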

CDLNet: Robust and Interpretable Denoising Through Deep Convolutional Dictionary Learning

Mar 05, 2021

Abstract:Deep learning based methods hold state-of-the-art results in image denoising, but remain difficult to interpret due to their construction from poorly understood building blocks such as batch-normalization, residual learning, and feature domain processing. Unrolled optimization networks propose an interpretable alternative to constructing deep neural networks by deriving their architecture from classical iterative optimization methods, without use of tricks from the standard deep learning tool-box. So far, such methods have demonstrated performance close to that of state-of-the-art models while using their interpretable construction to achieve a comparably low learned parameter count. In this work, we propose an unrolled convolutional dictionary learning network (CDLNet) and demonstrate its competitive denoising performance in both low and high parameter count regimes. Specifically, we show that the proposed model outperforms the state-of-the-art denoising models when scaled to similar parameter count. In addition, we leverage the model's interpretable construction to propose an augmentation of the network's thresholds that enables state-of-the-art blind denoising performance and near-perfect generalization on noise-levels unseen during training.
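The idea of deriving an architecture from a classical iterative method can be sketched with plain ISTA for sparse coding, where each iteration plays the role of one network layer. This is a simplified stand-in, not CDLNet itself: it uses a fixed dense dictionary `D` and a single shared threshold `tau`, whereas an unrolled network would learn convolutional operators and thresholds per layer. All names and sizes are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def objective(y, D, z, tau):
    """Sparse-coding objective: 0.5 * ||y - D z||^2 + tau * ||z||_1."""
    return 0.5 * np.sum((y - D @ z) ** 2) + tau * np.sum(np.abs(z))

def unrolled_ista(y, D, tau, num_layers=50):
    """Run a fixed number of ISTA iterations ("layers") and return (estimate, code)."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2   # 1 / Lipschitz constant of the gradient
    z = np.zeros(D.shape[1])
    for _ in range(num_layers):
        # Gradient step on the data term, then shrinkage for the sparsity term.
        z = soft_threshold(z - step * D.T @ (D @ z - y), step * tau)
    return D @ z, z

rng = np.random.default_rng(0)
D = rng.standard_normal((20, 40)) / np.sqrt(20)   # toy dictionary
z_true = np.zeros(40)
z_true[[3, 17, 31]] = 2.0                         # sparse ground-truth code
y = D @ z_true + 0.05 * rng.standard_normal(20)   # noisy observation
x_hat, z_hat = unrolled_ista(y, D, tau=0.1)
```

Because every layer corresponds to a gradient step followed by a thresholding step, each learned quantity in an unrolled network keeps a recognizable role from the original algorithm.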
