Niels Joubert

Developments in Modern GNSS and Its Impact on Autonomous Vehicle Architectures

Feb 02, 2020

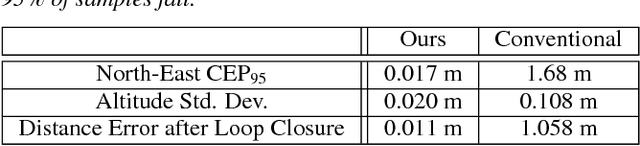

Abstract: This paper surveys a number of recent developments in modern Global Navigation Satellite Systems (GNSS) and investigates their possible impact on autonomous driving architectures. Modern GNSS now consist of four independent global satellite constellations delivering modernized signals at multiple civil frequencies. New ground monitoring infrastructure, mathematical models, and internet services correct for errors in the GNSS signals at continent scale. Mass-market automotive-grade receiver chipsets are available at low cost, size, weight, and power (CSWaP). The result is that GNSS in 2020 delivers better-than-lane-level localization accuracy with 99.99999% integrity guarantees at over 95% availability. In autonomous driving, SAE Level 2 partially autonomous vehicles are now available to consumers, capable of autonomously following lanes and performing basic maneuvers under human supervision. Furthermore, the first pilot programs of SAE Level 4 driverless vehicles are being demonstrated on public roads. However, autonomous driving is not a solved problem. GNSS can help. Specifically, incorporating high-integrity GNSS lane determination into vision-based architectures can unlock lane-level maneuvers and provide oversight to guarantee safety. Incorporating precision GNSS into LiDAR-based systems can unlock robustness and additional fallbacks for safety and utility. Lastly, GNSS provides interoperability through consistent timing and reference frames for future V2X scenarios.
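
The abstract pairs an integrity guarantee (99.99999%, i.e. an integrity risk of roughly 10^-7) with an availability figure. A common way to relate the two is the protection-level check: a fix is usable for a safety function only while its protection level (a bound on position error that holds at the target integrity risk) stays below the application's alert limit, and availability is the fraction of time that holds. The Python sketch below illustrates that check for lane determination under stated assumptions; the protection-level values, the 3.6 m lane width, and the half-lane-width alert limit are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Epoch:
    """One position fix with its horizontal protection level (HPL), in metres."""
    hpl_m: float


def lane_alert_limit(lane_width_m: float) -> float:
    # Hypothetical simplification: if the vehicle is near its lane centre, a lateral
    # error below half the lane width still places it in the correct lane.
    return lane_width_m / 2.0


def integrity_available(hpl_m: float, alert_limit_m: float) -> bool:
    # Usable only when the protection level (the error bound at the target
    # integrity risk) lies strictly below the alert limit.
    return hpl_m < alert_limit_m


def availability(epochs: Sequence[Epoch], alert_limit_m: float) -> float:
    """Fraction of epochs in which lane determination is available."""
    if not epochs:
        raise ValueError("need at least one epoch")
    usable = sum(integrity_available(e.hpl_m, alert_limit_m) for e in epochs)
    return usable / len(epochs)


if __name__ == "__main__":
    al = lane_alert_limit(3.6)  # 3.6 m lane width is an illustrative value
    fixes = [Epoch(0.4), Epoch(0.9), Epoch(2.5), Epoch(1.2)]
    print(f"alert limit {al:.1f} m, availability {availability(fixes, al):.0%}")
```

Run as a script, this prints `alert limit 1.8 m, availability 75%`: the epoch with a 2.5 m protection level exceeds the alert limit, so lane determination is flagged unavailable there rather than reported with an unbounded error.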

Towards a Drone Cinematographer: Guiding Quadrotor Cameras using Visual Composition Principles

Oct 05, 2016

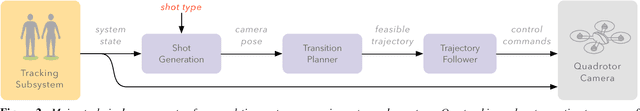

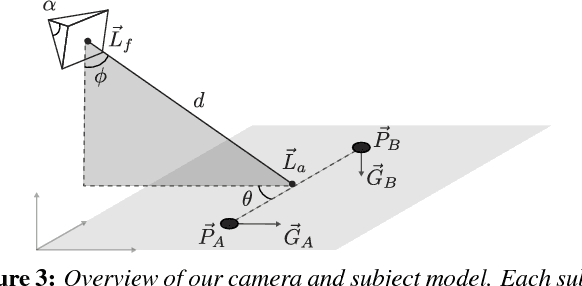

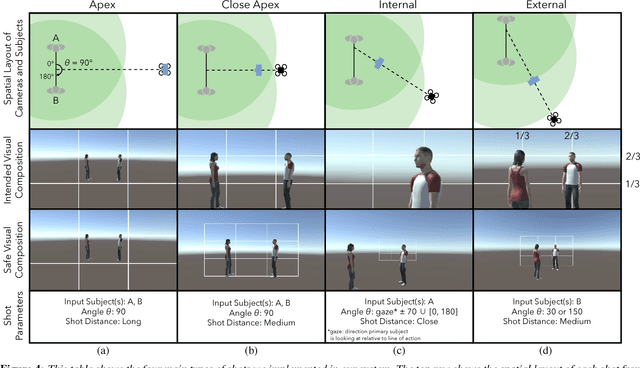

Abstract: We present a system to capture video footage of human subjects in the real world. Our system leverages a quadrotor camera to automatically capture well-composed video of two subjects. Subjects are tracked in a large-scale outdoor environment using RTK GPS and IMU sensors. Then, given the tracked state of our subjects, our system automatically computes static shots based on well-established visual composition principles and canonical shots from cinematography literature. To transition between these static shots, we calculate feasible, safe, and visually pleasing transitions using a novel real-time trajectory planning algorithm. We evaluate the performance of our tracking system and experimentally show that RTK GPS significantly outperforms conventional GPS in capturing a variety of canonical shots. Lastly, we demonstrate our system guiding a consumer quadrotor camera to autonomously capture footage of two subjects in a variety of use cases. This is the first end-to-end system that enables people to leverage the mobility of quadrotors, as well as the knowledge of expert filmmakers, to autonomously capture high-quality footage of people in the real world.
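
One well-established composition principle is the rule of thirds. The Python sketch below shows one way such a principle can be turned into a numeric cost for a candidate camera pose, assuming an ideal pinhole camera, subject positions already expressed in the camera frame (x right, y down, z forward), and targets placed on the two upper thirds intersections. The target placement and all function names are hypothetical; this is not the paper's shot-computation method.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def project(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def thirds_targets(width: float, height: float) -> Tuple[Tuple[float, float], ...]:
    # Hypothetical choice for two subjects: left and right vertical thirds lines,
    # both on the upper horizontal thirds line.
    return ((width / 3, height / 3), (2 * width / 3, height / 3))


def composition_error(subjects_cam: Sequence[Vec3], intr: Intrinsics,
                      width: float, height: float) -> float:
    """Sum of squared pixel distances between projected subjects and their targets."""
    err = 0.0
    for p, (tu, tv) in zip(subjects_cam, thirds_targets(width, height)):
        u, v = project(p, *intr)
        err += (u - tu) ** 2 + (v - tv) ** 2
    return err


if __name__ == "__main__":
    intr = (1000.0, 1000.0, 960.0, 540.0)  # illustrative 1920x1080 camera
    subjects = [(-3.2, -1.8, 10.0), (3.2, -1.8, 10.0)]
    print(composition_error(subjects, intr, 1920, 1080))
```

A shot planner could search over camera poses for one that drives this error toward zero while respecting other constraints; the paper's own static-shot and transition computation may weigh additional principles and canonical shot types.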
