Nicolas P. Rougier

INRIA Lorraine - Loria, University of Colorado, Boulder

Exploring the role of structure in a time constrained decision task

Jan 19, 2024

Abstract: The structure of the basal ganglia is remarkably similar across a number of species (often described in terms of direct, indirect and hyperdirect pathways) and is deeply involved in decision making and action selection. In this article, we explore the role of structure in solving a decision task without making any strong assumptions about the actual structure. To do so, we exploit the echo state network paradigm, which makes it possible to solve complex tasks with a random architecture. Considering a temporal decision task, the question is whether a specific structure allows for better performance and, if so, whether this structure shares some similarity with the basal ganglia. Our results highlight the advantage of having a slow (direct) and a fast (hyperdirect) pathway, which together make it possible to handle late information during a decision-making task.
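To make the "random architecture" idea concrete, here is a minimal sketch of the state update of a leaky echo state reservoir in plain Python. It is not the model from the paper: the function names, the leak rate, and the choice to scale the recurrent weights by their maximum absolute row sum (a conservative sufficient condition for fading memory, rather than the more usual spectral-radius scaling) are all assumptions made for illustration. No readout is trained here.

```python
import math
import random


def make_reservoir(n_units, n_inputs, norm=0.9, seed=0):
    """Build random recurrent (W) and input (W_in) weights.

    Assumption: W is rescaled so its maximum absolute row sum equals
    `norm` (< 1), which makes the tanh update below a contraction.
    """
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in range(n_units)] for _ in range(n_units)]
    max_row = max(sum(abs(w) for w in row) for row in W)
    W = [[w * norm / max_row for w in row] for row in W]
    W_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_units)]
    return W, W_in


def step(W, W_in, x, u, leak=0.5):
    """One leaky-integrator update: x <- (1 - leak) * x + leak * tanh(W x + W_in u)."""
    new_x = []
    for i in range(len(x)):
        pre = sum(W[i][j] * x[j] for j in range(len(x)))
        pre += sum(W_in[i][k] * u[k] for k in range(len(u)))
        new_x.append((1 - leak) * x[i] + leak * math.tanh(pre))
    return new_x


def run_reservoir(W, W_in, x0, inputs, leak=0.5):
    """Drive the reservoir with a sequence of input vectors; return the final state."""
    x = list(x0)
    for u in inputs:
        x = step(W, W_in, x, u, leak)
    return x


if __name__ == "__main__":
    W, W_in = make_reservoir(n_units=20, n_inputs=1)
    inputs = [[math.sin(0.3 * t)] for t in range(200)]
    # Two different initial states, same input: the states should converge.
    xa = run_reservoir(W, W_in, [1.0] * 20, inputs)
    xb = run_reservoir(W, W_in, [-1.0] * 20, inputs)
    print(max(abs(a - b) for a, b in zip(xa, xb)))
```

With this scaling, the reservoir state ends up depending on the input history rather than on the initial state, which is the property a trained linear readout would rely on.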

From implicit learning to explicit representations

Apr 05, 2022

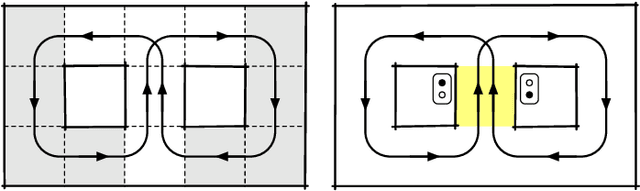

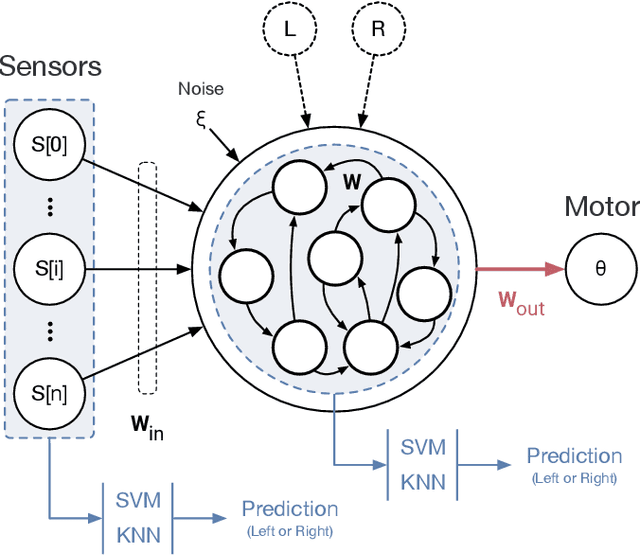

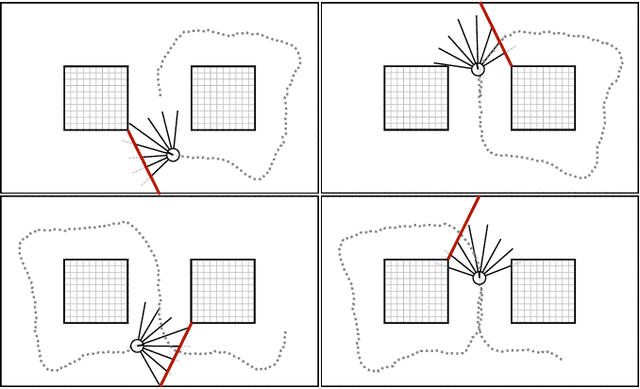

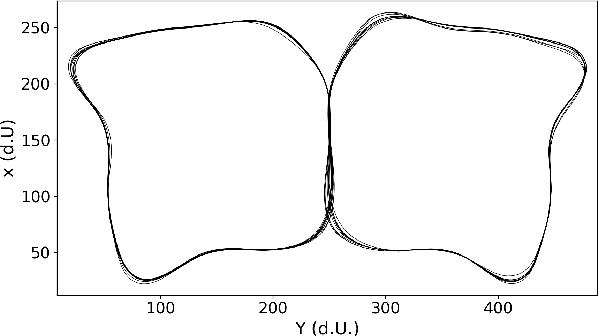

Abstract: Using the reservoir computing framework, we demonstrate how a simple model can solve an alternation task without an explicit working memory. To do so, a simple bot equipped with sensors navigates inside an 8-shaped maze and turns alternately right and left at the same intersection in the maze. The analysis of the model's internal activity reveals that the memory is actually encoded inside the dynamics of the network. However, such dynamic working memory cannot be accessed to bias the behavior toward one of the two attractors (left and right). To achieve this, external cues are fed to the bot so that it can follow arbitrary sequences, as instructed by the cue. This model highlights the idea that procedural learning and its internal representation can be dissociated. While the former is enough to produce behavior, it is not sufficient for explicit and fine-grained manipulation.

Randomized Self Organizing Map

Nov 18, 2020

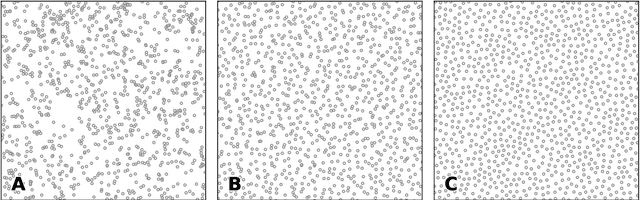

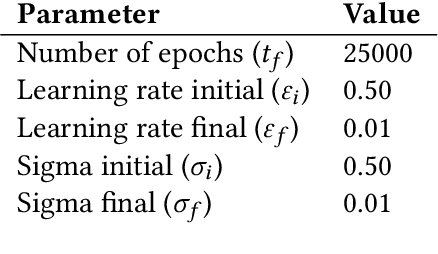

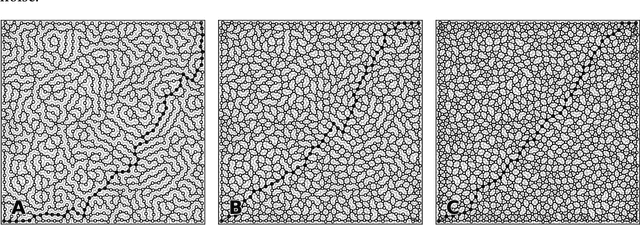

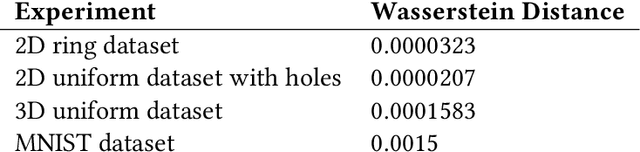

Abstract: We propose a variation of the self-organizing map algorithm by considering the random placement of neurons on a two-dimensional manifold, following a blue noise distribution from which various topologies can be derived. These topologies possess random (but controllable) discontinuities that allow for a more flexible self-organization, especially with high-dimensional data. The proposed algorithm is tested on one-, two- and three-dimensional tasks as well as on the MNIST handwritten digits dataset and validated using spectral analysis and topological data analysis tools. We also demonstrate the ability of the randomized self-organizing map to gracefully reorganize itself in case of neural lesion and/or neurogenesis.
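As an illustration of what a blue-noise-like placement of neurons can look like, the following sketch uses naive dart throwing: random candidates in the unit square are kept only if they are at least `min_dist` away from every point accepted so far. This is a simple stand-in chosen for brevity, not necessarily the sampler used in the paper, and the function name and parameters are hypothetical.

```python
import math
import random


def dart_throwing(n_points, min_dist, seed=0, max_tries=10000):
    """Place up to n_points in the unit square with a minimum pairwise distance.

    Candidates are drawn uniformly and rejected if they fall closer than
    min_dist to an already accepted point. Stops after max_tries candidates,
    so fewer than n_points may be returned if min_dist is too large.
    """
    rng = random.Random(seed)
    points = []
    tries = 0
    while len(points) < n_points and tries < max_tries:
        tries += 1
        p = (rng.random(), rng.random())
        if all(math.dist(p, q) >= min_dist for q in points):
            points.append(p)
    return points


if __name__ == "__main__":
    neurons = dart_throwing(n_points=50, min_dist=0.05)
    print(len(neurons), "neurons placed")
```

A map topology could then be derived from these positions, for example by connecting each neuron to its nearest neighbours; that step is left out here.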

Knowledge extraction from the learning of sequences in a long short term memory (LSTM) architecture

Dec 06, 2019

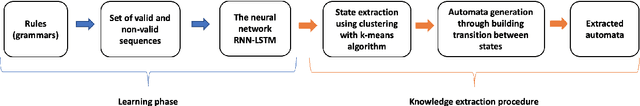

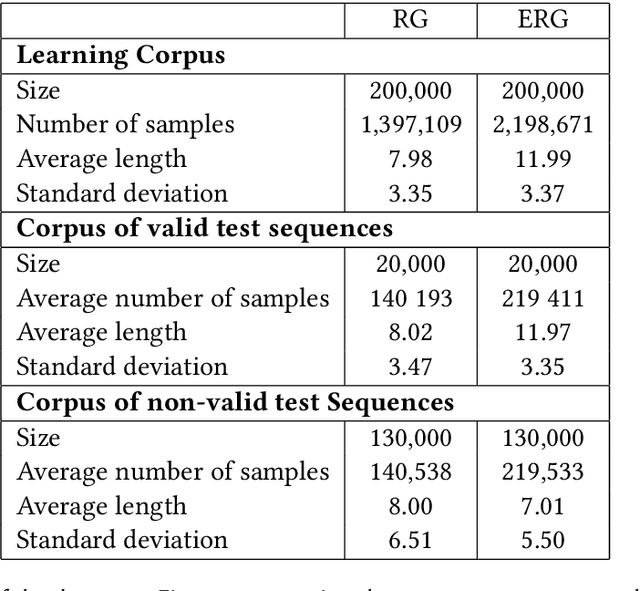

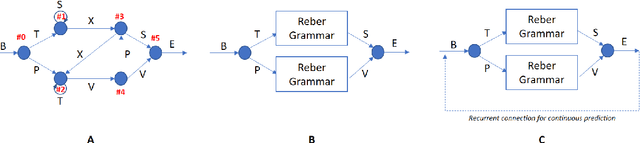

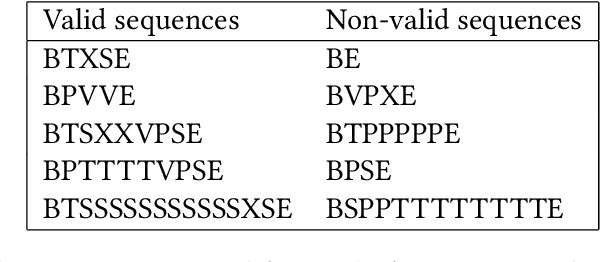

Abstract: We introduce a general method to extract knowledge from a recurrent neural network (Long Short Term Memory) that has learnt to detect whether a given input sequence is valid or not, according to an unknown generative automaton. Based on the clustering of the hidden states, we explain how to build and validate an automaton that corresponds to the underlying (unknown) automaton and makes it possible to predict whether a given sequence is valid or not. The method is illustrated on artificial grammars (Reber's grammar variations) as well as on a real use-case whose underlying grammar is unknown.

A graphical, scalable and intuitive method for the placement and the connection of biological cells

Oct 14, 2017

Abstract: We introduce a graphical method originating from the computer graphics domain that is used for the arbitrary and intuitive placement of cells over a two-dimensional manifold. Using a bitmap image as input, where the color indicates the identity of the different structures and the alpha channel indicates the local cell density, this method guarantees a discrete distribution of cell positions respecting the local density function. This method scales to any number of cells, allows several different structures with arbitrary shapes to be specified at once, and provides a scalable and versatile alternative to the more classical assumption of a uniform non-spatial distribution. Furthermore, several connection schemes can be derived from the paired distances between cells using either an automatic mapping or a user-defined local reference frame, providing new computational properties for the underlying model. The method is illustrated on a discrete homogeneous neural field, on the distribution of cones and rods in the retina and on a coronal view of the basal ganglia.
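The idea of placing cells according to a local density map can be sketched with plain rejection sampling. This is an assumption-laden illustration, not the method of the paper: the density is given as a small hypothetical grid of values in [0, 1] (standing in for an image alpha channel), and rejection sampling produces uncorrelated positions rather than the evenly spaced distribution a graphics-style technique would give.

```python
import random


def sample_cells(density, n_cells, seed=0):
    """Draw n_cells positions in the unit square following a density grid.

    density: 2D list (rows from top to bottom) of values in [0, 1];
    a hypothetical stand-in for the alpha channel of an input image.
    A uniform candidate (x, y) is accepted with probability equal to the
    density of the grid cell it falls into.
    """
    if max(max(row) for row in density) <= 0:
        raise ValueError("density must be positive somewhere")
    rng = random.Random(seed)
    rows, cols = len(density), len(density[0])
    cells = []
    while len(cells) < n_cells:
        x, y = rng.random(), rng.random()
        row = min(int(y * rows), rows - 1)
        col = min(int(x * cols), cols - 1)
        if rng.random() < density[row][col]:
            cells.append((x, y))
    return cells


if __name__ == "__main__":
    # Left half empty, right half fully dense.
    density = [[0.0, 1.0],
               [0.0, 1.0]]
    cells = sample_cells(density, n_cells=100)
    print(min(x for x, _ in cells))
```

Pairwise distances between the sampled positions could then feed a connection scheme, as the abstract describes.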

A computational approach to the covert and overt deployment of spatial attention

Sep 26, 2008

Abstract: Popular computational models of visual attention tend to neglect the influence of saccadic eye movements, even though it has been shown that primates perform on average three of them per second and that the neural substrates for the deployment of attention and the execution of an eye movement might overlap considerably. Here we propose a computational model in which the deployment of attention, with or without a subsequent eye movement, emerges from local, distributed and numerical computations.
