Frédéric Alexandre

INRIA Lorraine - Loria

From implicit learning to explicit representations

Apr 05, 2022

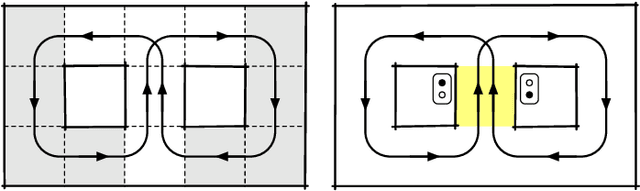

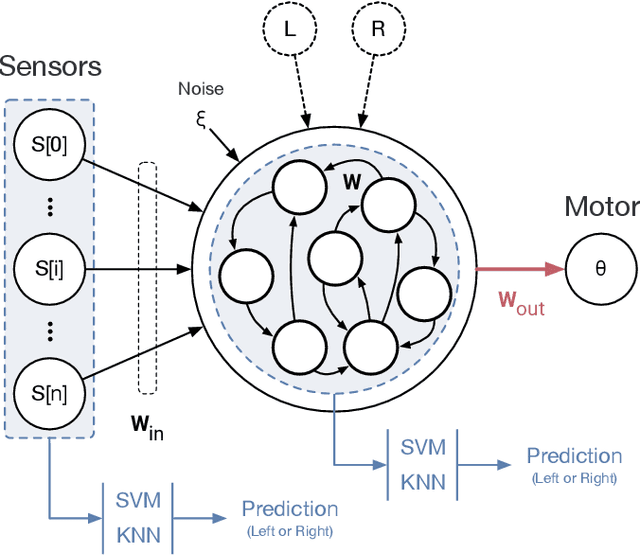

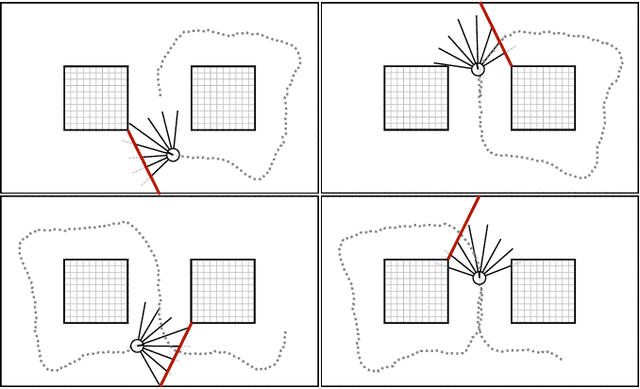

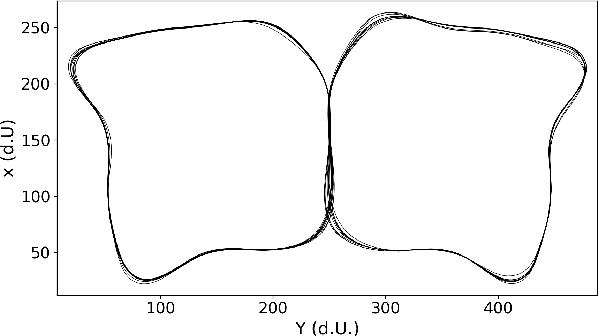

Abstract: Using the reservoir computing framework, we demonstrate how a simple model can solve an alternation task without an explicit working memory. To do so, a simple bot equipped with sensors navigates inside an 8-shaped maze and turns alternately right and left at the same intersection in the maze. The analysis of the model's internal activity reveals that the memory is actually encoded inside the dynamics of the network. However, this dynamic working memory cannot be accessed to bias the behavior toward one of the two attractors (left and right). To achieve this, external cues are fed to the bot so that it can follow arbitrary sequences, as instructed by the cue. This model highlights the idea that procedural learning and its internal representation can be dissociated. While the former is enough to produce behavior, it is not sufficient to allow for an explicit and fine-grained manipulation.
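The idea that memory can live in the network dynamics rather than in a dedicated store can be illustrated with a minimal leaky reservoir. This is only a sketch and not the model from the paper: the reservoir size, weight ranges, leak rate, input encoding and the names `make_reservoir`, `step` and `run` are all assumptions chosen for illustration. Two input histories that end with the same sensory input leave the reservoir in different states, which is the kind of trace a trained readout could use to alternate turns.

```python
import math
import random


def make_reservoir(n_units, seed=0, w_scale=0.03):
    """Draw fixed random input and recurrent weights (hypothetical scales)."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]
    w = [[rng.uniform(-w_scale, w_scale) for _ in range(n_units)]
         for _ in range(n_units)]
    return w_in, w


def step(state, u, w_in, w, leak=0.5):
    """One leaky-integrator update of the reservoir state for scalar input u."""
    new_state = []
    for i, x_i in enumerate(state):
        pre = w_in[i] * u + sum(w_ij * x_j for w_ij, x_j in zip(w[i], state))
        new_state.append((1.0 - leak) * x_i + leak * math.tanh(pre))
    return new_state


def run(inputs, w_in, w):
    """Drive the reservoir from rest with a sequence of scalar inputs."""
    state = [0.0] * len(w_in)
    for u in inputs:
        state = step(state, u, w_in, w)
    return state


w_in, w = make_reservoir(10)
# Same final inputs (0.0, 0.0), different earlier input (+1 vs -1).
after_left = run([1.0, 0.0, 0.0], w_in, w)
after_right = run([-1.0, 0.0, 0.0], w_in, w)
gap = max(abs(a - b) for a, b in zip(after_left, after_right))
print("largest state difference:", gap)
```

The recurrent weights are never trained here; in reservoir computing only a readout on top of the state would be fitted, which is omitted from this sketch.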

Knowledge extraction from the learning of sequences in a long short term memory (LSTM) architecture

Dec 06, 2019

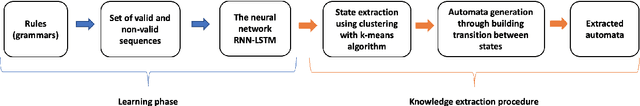

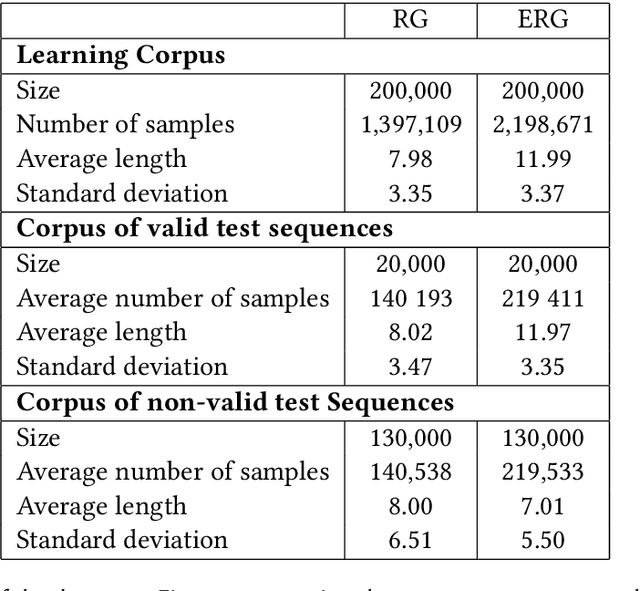

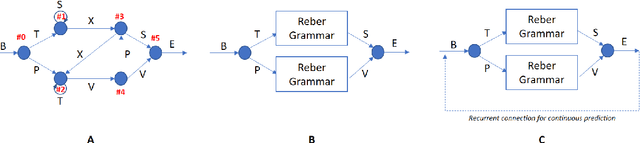

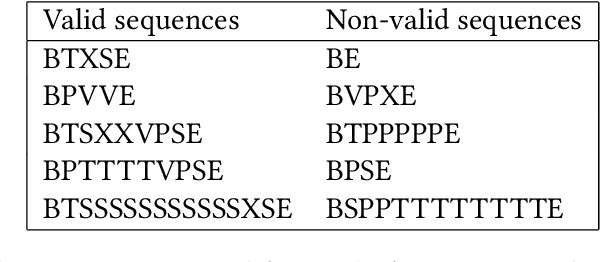

Abstract: We introduce a general method to extract knowledge from a recurrent neural network (Long Short-Term Memory) that has learned to detect whether a given input sequence is valid according to an unknown generative automaton. Based on the clustering of the hidden states, we explain how to build and validate an automaton that corresponds to the underlying (unknown) automaton and makes it possible to predict whether a given sequence is valid. The method is illustrated on artificial grammars (variations of Reber's grammar) as well as on a real use case whose underlying grammar is unknown.
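The step from clustered hidden states to an automaton might look roughly like the following. This is a hedged sketch, not the authors' procedure: it assumes the hidden states have already been clustered into integer labels, and the trace format, the toy two-symbol grammar and the names `build_automaton` and `accepts` are hypothetical. Each observed (cluster, symbol, next cluster) triple becomes a transition, and a sequence is judged valid if it can be followed from the start cluster to an accepting cluster.

```python
from collections import defaultdict


def build_automaton(traces):
    """Collect transitions from traces of (symbols, cluster_ids).

    cluster_ids holds one label before the first symbol and one after
    each symbol, so it is one element longer than symbols.
    """
    transitions = defaultdict(set)
    for symbols, states in traces:
        for i, sym in enumerate(symbols):
            transitions[(states[i], sym)].add(states[i + 1])
    return transitions


def accepts(transitions, start, finals, sequence):
    """Follow the (possibly non-deterministic) transitions over a sequence."""
    current = {start}
    for sym in sequence:
        current = set().union(
            *(transitions.get((s, sym), set()) for s in current)
        )
        if not current:
            return False  # no observed transition for this symbol
    return bool(current & finals)


# Toy traces for a grammar that accepts "ab", "abab", ...
# Cluster 0 is the start state and cluster 2 is treated as accepting.
traces = [
    (["a", "b"], [0, 1, 2]),
    (["a", "b", "a", "b"], [0, 1, 2, 1, 2]),
]
automaton = build_automaton(traces)

print(accepts(automaton, 0, {2}, "ab"))    # True
print(accepts(automaton, 0, {2}, "abab"))  # True
print(accepts(automaton, 0, {2}, "aa"))    # False
```

Keeping a set of target clusters per transition lets the sketch tolerate imperfect clustering, where the same (cluster, symbol) pair is observed leading to more than one cluster.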

A computational approach to the covert and overt deployment of spatial attention

Sep 26, 2008

Abstract: Popular computational models of visual attention tend to neglect the influence of saccadic eye movements, even though it has been shown that primates perform on average three of them per second and that the neural substrates for the deployment of attention and the execution of an eye movement might overlap considerably. Here we propose a computational model in which the deployment of attention, with or without a subsequent eye movement, emerges from local, distributed and numerical computations.
