Nengli Lim

Bayesian optimization for backpropagation in Monte-Carlo tree search

Jan 25, 2020

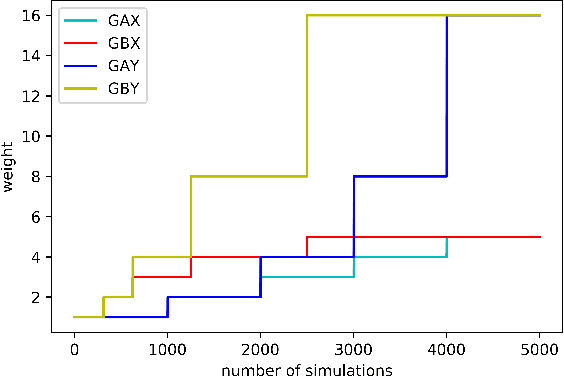

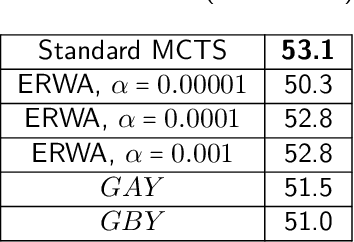

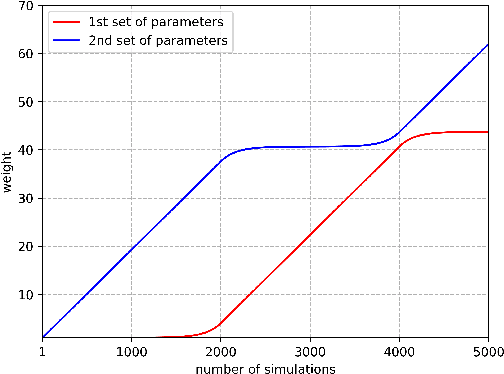

Abstract:In large domains, Monte-Carlo tree search (MCTS) is required to estimate the values of the states as efficiently and accurately as possible. However, the standard update rule in backpropagation assumes a stationary distribution for the returns, and particularly in min-max trees, convergence to the true value can be slow because of averaging. We present two methods, Softmax MCTS and Monotone MCTS, which generalize previous attempts to improve upon the backpropagation strategy. We demonstrate that both methods reduce to finding optimal monotone functions, which we do so by performing Bayesian optimization with a Gaussian process (GP) prior. We conduct experiments on computer Go, where the returns are given by a deep value neural network, and show that our proposed framework outperforms previous methods.

Disentangling Representations using Gaussian Processes in Variational Autoencoders for Video Prediction

Jan 08, 2020

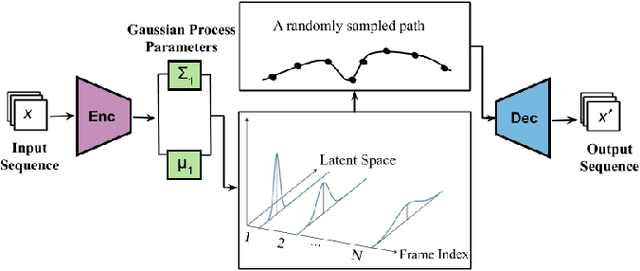

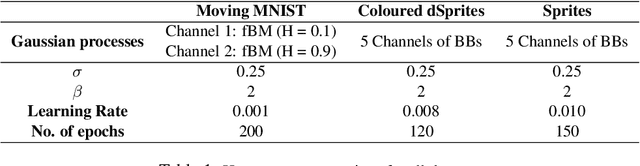

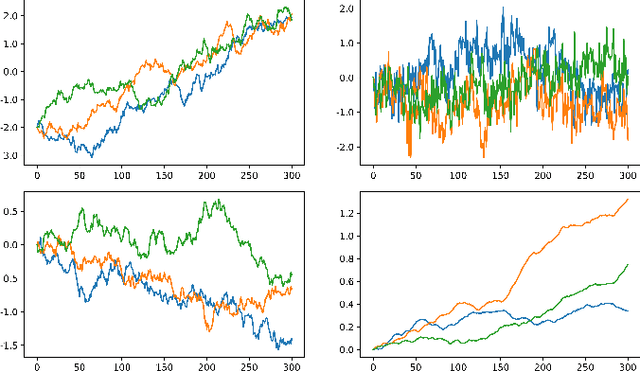

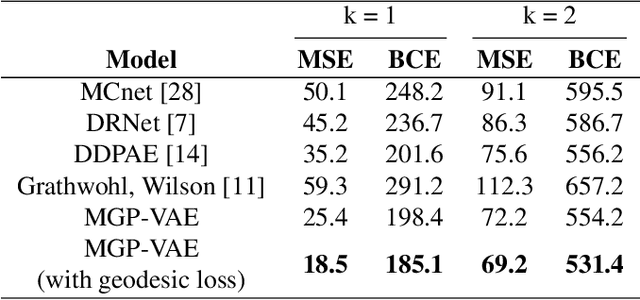

Abstract:We introduce MGP-VAE, a variational autoencoder which uses Gaussian processes (GP) to model the latent space distribution. We employ MGP-VAE for the unsupervised learning of video sequences to obtain disentangled representations. Previous work in this area has mainly been confined to separating dynamic information from static content. We improve on previous results by establishing a framework by which multiple features, static or dynamic, can be disentangled. Specifically we use fractional Brownian motions (fBM) and Brownian bridges (BB) to enforce an inter-frame correlation structure in each independent channel. We show that varying this correlation structure enables one to capture different aspects of variation in the data. We demonstrate the quality of our disentangled representations on numerous experiments on three publicly available datasets, and also perform quantitative tests on a video prediction task. In addition, we introduce a novel geodesic loss function which takes into account the curvature of the data manifold to improve learning in the prediction task. Our experiments show quantitatively that the combination of our improved disentangled representations with the novel loss function enable MGP-VAE to outperform the state-of-the-art in video prediction.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge