Natsue Yoshimura

Adaptive sparseness for correntropy-based robust regression via automatic relevance determination

Jan 31, 2023

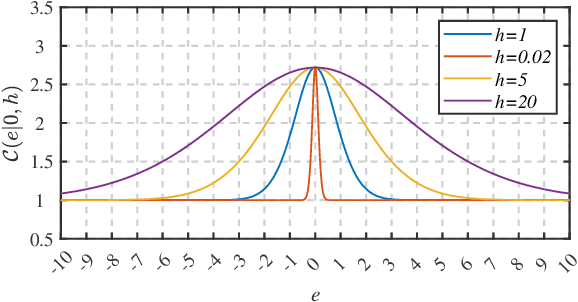

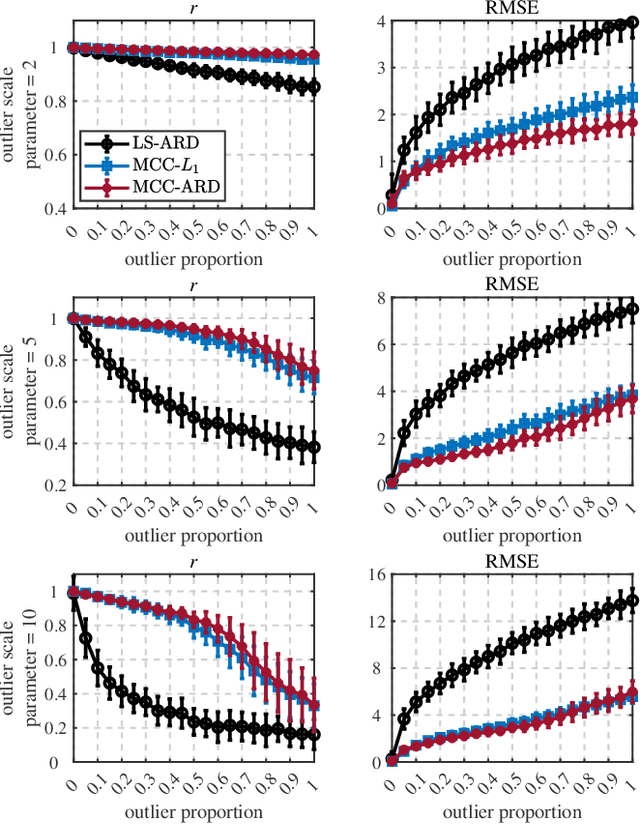

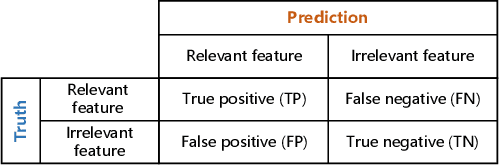

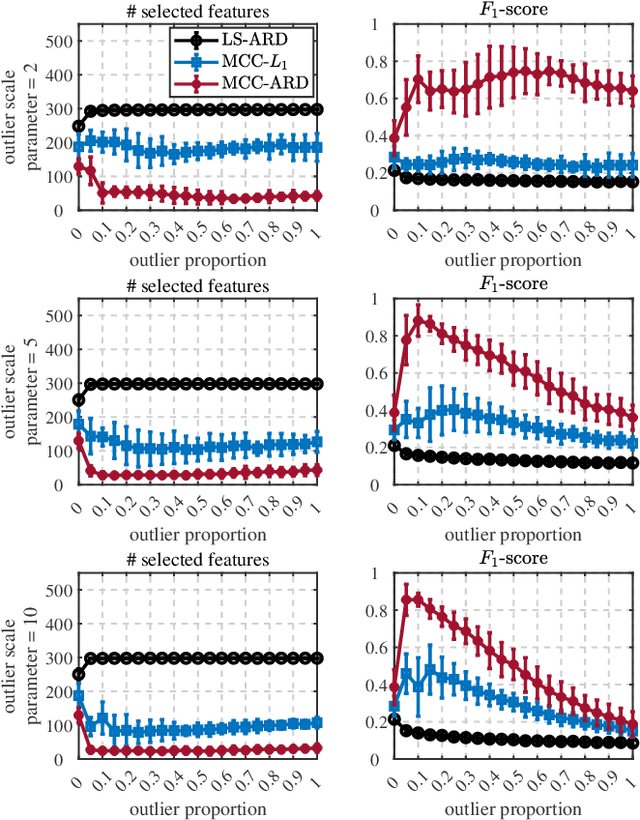

Abstract: Sparseness and robustness are two important properties in many machine learning scenarios. In the present study, we integrate the maximum correntropy criterion (MCC) based robust regression algorithm with the automatic relevance determination (ARD) technique in a Bayesian framework, so that MCC-based robust regression can be implemented with adaptive sparseness. Specifically, we use an inherent noise assumption from the MCC to derive an explicit likelihood function, and realize maximum a posteriori (MAP) estimation with the ARD prior by variational Bayesian inference. Compared with the existing robust and sparse L1-regularized MCC regression, the proposed MCC-ARD regression eliminates the troublesome tuning of the hyper-parameter that controls the regularization strength. Further, MCC-ARD achieves better prediction performance and feature selection capability than L1-regularized MCC, as demonstrated by a noisy and high-dimensional simulation study.
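
For orientation, the MCC objective mentioned in this abstract is commonly written with a Gaussian kernel as shown below. This is the standard textbook form, not a formula quoted from the paper; the symbols (kernel bandwidth $\sigma$, weights $\mathbf{w}$, residuals $e_i$) are notation assumed here.

$$
\hat{V}_\sigma(\mathbf{w}) = \frac{1}{N}\sum_{i=1}^{N} \exp\!\left(-\frac{e_i^2}{2\sigma^2}\right),
\qquad e_i = y_i - \mathbf{w}^\top \mathbf{x}_i,
\qquad \hat{\mathbf{w}}_{\mathrm{MCC}} = \arg\max_{\mathbf{w}} \hat{V}_\sigma(\mathbf{w})
$$

Because each term is bounded by 1, a sample with a very large residual contributes almost nothing to the objective, which is the usual explanation for the robustness of MCC to outliers.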

Correntropy-Based Logistic Regression with Automatic Relevance Determination for Robust Sparse Brain Activity Decoding

Jul 20, 2022

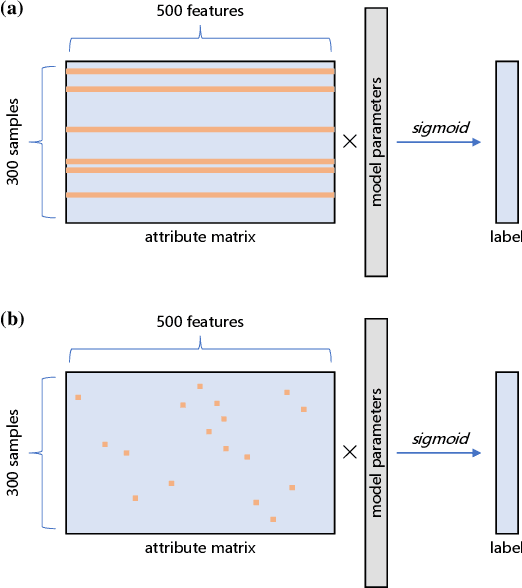

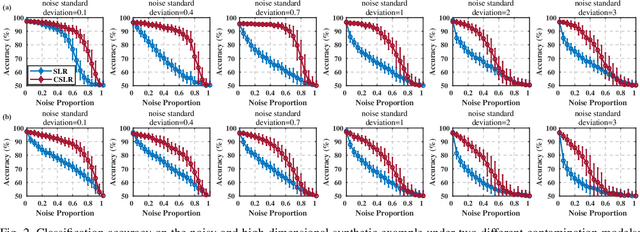

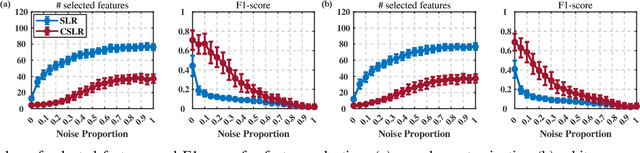

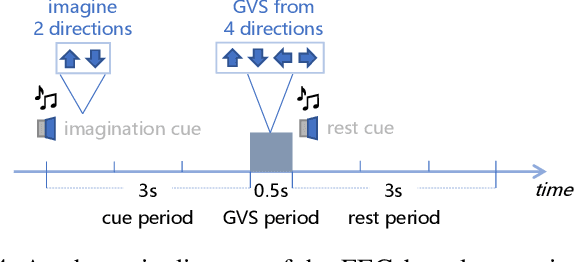

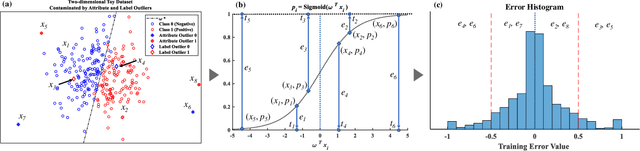

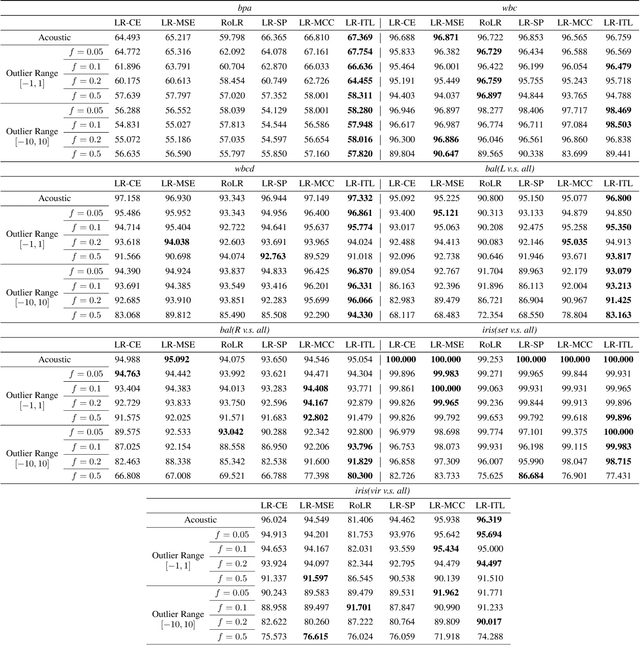

Abstract: Recent studies have used sparse classification to predict categorical variables from high-dimensional brain activity signals and thereby reveal human intentions and mental states, selecting the relevant features automatically during model training. However, existing sparse classification models are prone to performance degradation caused by the noise inherent in brain recordings. To address this issue, we propose a new robust and sparse classification algorithm. We introduce the correntropy learning framework into the automatic relevance determination based sparse classification model, yielding a new correntropy-based robust sparse logistic regression algorithm. To demonstrate the brain activity decoding performance of the proposed algorithm, we evaluate it on a synthetic dataset, an electroencephalogram (EEG) dataset, and a functional magnetic resonance imaging (fMRI) dataset. The experimental results confirm that the proposed method not only achieves higher classification accuracy in noisy and high-dimensional classification tasks, but also selects more informative features for the decoding scenarios. Integrating the correntropy learning approach with the automatic relevance determination technique significantly improves robustness to noise, leading to a more adequate robust sparse brain decoding algorithm. It provides a more powerful approach for real-world brain activity decoding and brain-computer interfaces.

Partial Maximum Correntropy Regression for Robust Trajectory Decoding from Noisy Epidural Electrocorticographic Signals

Jun 23, 2021

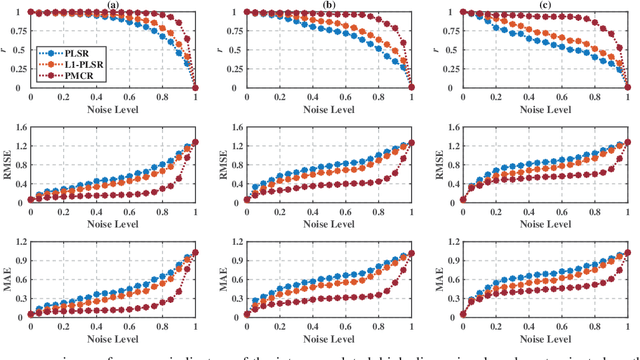

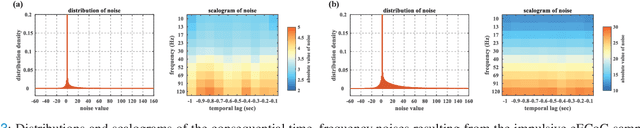

Abstract: The Partial Least Squares Regression (PLSR) algorithm is well suited to predicting continuous variables from inter-correlated brain recordings in brain-computer interfaces, and was recently used to predict three-dimensional continuous hand trajectories from epidural electrocorticography in macaques. Nevertheless, PLSR is formulated on the least squares criterion and is therefore not robust to complicated noise. The aim of the present study is to propose a robust version of PLSR. To this end, the maximum correntropy criterion is adopted to construct a new robust variant of PLSR, namely Partial Maximum Correntropy Regression (PMCR). A half-quadratic optimization technique is used to calculate the robust latent variables. We assess the proposed PMCR on a synthetic example and the public Neurotycho dataset. Compared with conventional PLSR and the state-of-the-art variant, PMCR achieved better prediction performance on three different performance indicators when the training set was contaminated. The proposed PMCR was shown to be an effective approach for robust decoding from noisy brain measurements, reducing the performance degradation caused by adverse noise and thus improving the decoding robustness of brain-computer interfaces.
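
The half-quadratic idea mentioned in this abstract can be illustrated on a much simpler problem. The sketch below applies it to plain linear regression under the maximum correntropy criterion, not to PMCR's latent-variable computation, which the abstract does not spell out. The function name `mcc_linear_regression` and the parameters `sigma`, `n_iter`, `tol`, and `ridge` are hypothetical names chosen for this example.

```python
import numpy as np

def mcc_linear_regression(X, y, sigma=1.0, n_iter=50, tol=1e-8, ridge=1e-8):
    """Illustrative MCC linear regression via half-quadratic optimization.

    Alternates between (1) computing Gaussian-kernel weights from the
    current residuals and (2) solving a weighted least squares problem.
    This is a sketch for plain linear regression, not the PMCR algorithm.
    """
    n_features = X.shape[1]
    # Initialize from the ordinary least squares solution.
    w = np.linalg.lstsq(X, y, rcond=None)[0]
    for _ in range(n_iter):
        residuals = y - X @ w
        # Auxiliary variables of the half-quadratic form: samples with
        # large residuals (likely outliers) receive weights near zero.
        weights = np.exp(-residuals**2 / (2.0 * sigma**2))
        Xw = X * weights[:, None]
        # Weighted least squares step; the small ridge term (an assumption
        # of this sketch) keeps the linear system well conditioned.
        w_new = np.linalg.solve(X.T @ Xw + ridge * np.eye(n_features), Xw.T @ y)
        if np.linalg.norm(w_new - w) < tol:
            w = w_new
            break
        w = w_new
    return w
```

The kernel bandwidth `sigma` controls how aggressively large residuals are down-weighted and usually has to be chosen for the noise scale of the data; the default here is arbitrary.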

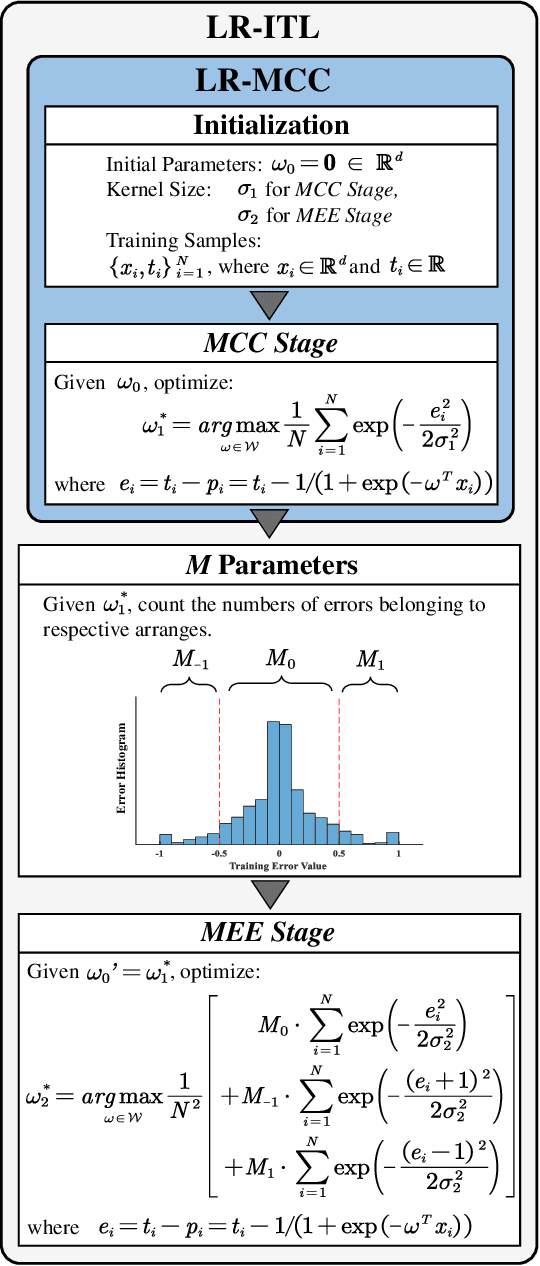

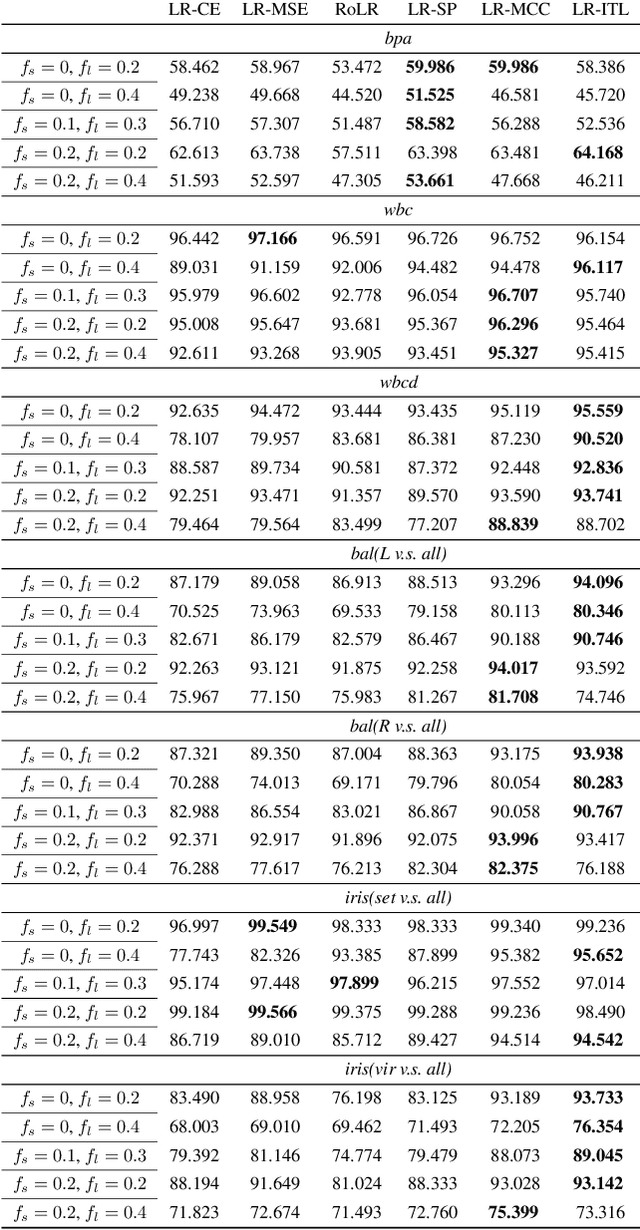

Robust Logistic Regression against Attribute and Label Outliers via Information Theoretic Learning

Sep 06, 2019

Abstract: The framework of information theoretic learning (ITL) has been shown to be a powerful approach for robust machine learning, significantly improving robustness in regression, feature extraction, dimensionality reduction, and so on. Nevertheless, few studies have used ITL for robust classification. In this study, we attempt to improve the robustness of logistic regression, a fundamental classification method, by analyzing how the model behaves when it is affected by outliers. We propose an ITL-based variant that learns from the error distribution. Its performance is evaluated experimentally on two toy examples and several public datasets, and compared with two traditional methods and two state-of-the-art methods. The results demonstrate that the proposed method clearly outperforms the state-of-the-art methods in some cases, and shows the potential to achieve better robustness in complex situations than existing methods.
