Robust Logistic Regression against Attribute and Label Outliers via Information Theoretic Learning

Sep 06, 2019

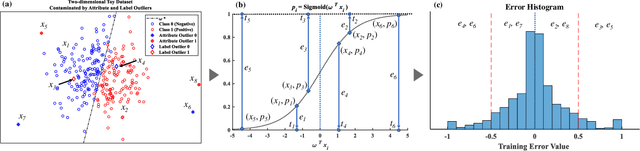

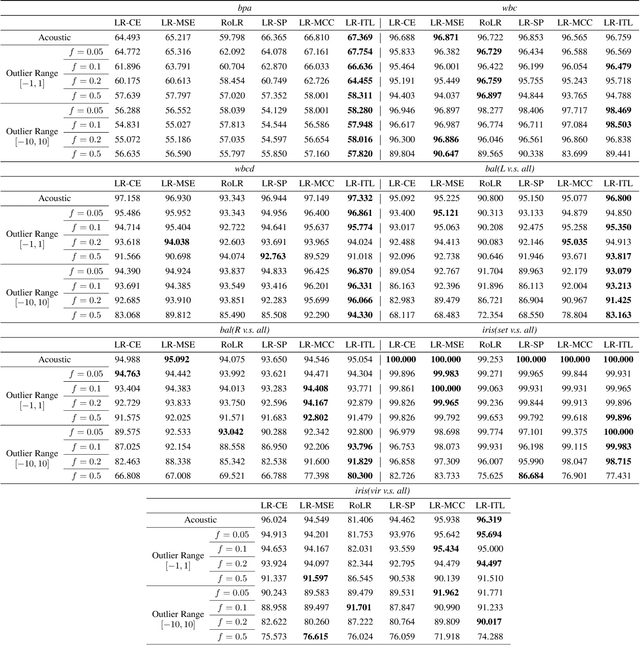

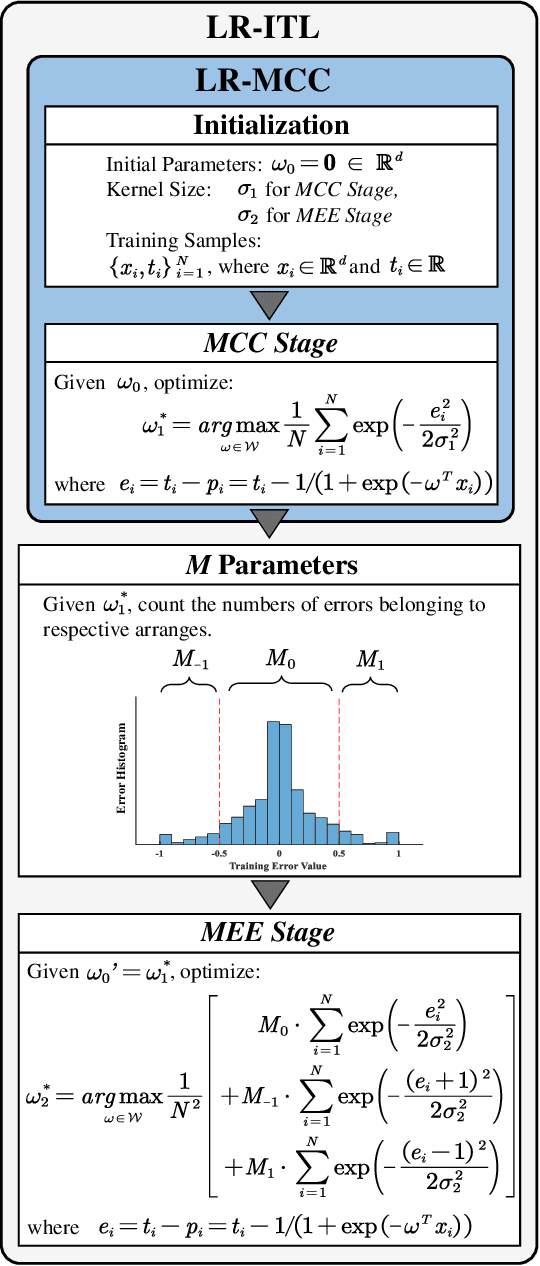

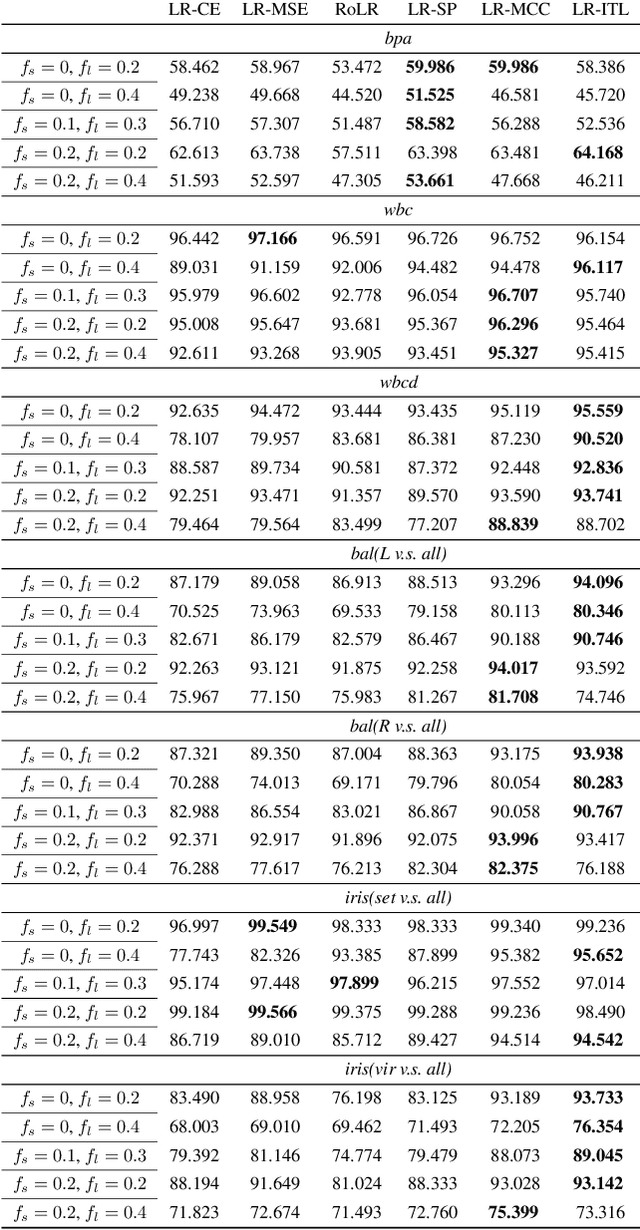

The framework of information theoretic learning (ITL) has been shown to be a powerful approach to robust machine learning, significantly improving robustness in regression, feature extraction, dimensionality reduction, and other tasks. Nevertheless, few studies have applied ITL to robust classification. In this study, we attempt to improve the robustness of logistic regression, a fundamental classification method, by analyzing how the model behaves when it is affected by outliers. We propose an ITL-based variant that learns from the error distribution. Its performance is evaluated experimentally on two toy examples and several public datasets and compared with two traditional methods and two state-of-the-art methods. The results demonstrate that the proposed method can clearly outperform the state-of-the-art methods in some cases, and that it shows promising potential to achieve better robustness than existing methods in complex situations.
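To illustrate the general idea of an ITL-based logistic regression, here is a minimal sketch that assumes the maximum correntropy criterion (MCC), a common ITL cost. It is not necessarily the formulation proposed in the paper. The sketch maximizes the mean Gaussian-kernel similarity between the labels y and the predicted probabilities p, using gradient ascent on the error e = y - p. The function names (`fit_mcc_logreg`, `kernel_weights`), the kernel width `sigma`, the learning rate, and the toy data with 10% flipped labels are all hypothetical choices made for this example.

```python
import numpy as np


def sigmoid(z):
    # Clip to avoid overflow warnings in np.exp for very large |z|.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def add_bias(X):
    # Append a column of ones so the last weight acts as the intercept.
    return np.hstack([X, np.ones((X.shape[0], 1))])


def kernel_weights(Xb, y, w, sigma):
    # Gaussian kernel of the error e = y - p; samples with large errors
    # (e.g. flipped labels) receive small weights.
    e = y - sigmoid(Xb @ w)
    return np.exp(-e**2 / (2.0 * sigma**2))


def fit_mcc_logreg(X, y, sigma=0.5, lr=0.5, n_iter=2000):
    """Hypothetical MCC logistic regression: maximize mean(k(y - p)) by
    gradient ascent, where p = sigmoid(Xb @ w) and y is in {0, 1}."""
    Xb = add_bias(X)
    n = Xb.shape[0]
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)
        e = y - p
        k = np.exp(-e**2 / (2.0 * sigma**2))
        # d/dw mean(k) = mean(k * e * p * (1 - p) * x) / sigma^2
        grad = Xb.T @ (k * e * p * (1.0 - p)) / (n * sigma**2)
        w += lr * grad  # ascent: increase correntropy
    return w


def predict(X, w):
    return (sigmoid(add_bias(X) @ w) >= 0.5).astype(int)


# Toy data (assumed): two Gaussian blobs, then 10% of the labels flipped.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 1.0, size=(100, 2)),
               rng.normal(2.0, 1.0, size=(100, 2))])
y_true = np.concatenate([np.zeros(100), np.ones(100)])
y_noisy = y_true.copy()
flipped = rng.choice(200, size=20, replace=False)
y_noisy[flipped] = 1.0 - y_noisy[flipped]

w = fit_mcc_logreg(X, y_noisy)
acc = np.mean(predict(X, w) == y_true)
k = kernel_weights(add_bias(X), y_noisy, w, sigma=0.5)
clean = np.setdiff1d(np.arange(200), flipped)
print(f"accuracy vs. clean labels: {acc:.3f}")
print(f"mean kernel weight, flipped: {k[flipped].mean():.3f}, "
      f"clean: {k[clean].mean():.3f}")
```

In the standard cross-entropy gradient (y - p)x, confidently misclassified points keep full influence. Here each sample's contribution is additionally scaled by its kernel weight k, so points with large errors are down-weighted. This bounded influence is the usual motivation for correntropy-style costs in robust learning.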