Naruya Kondo

Dance Generation by Sound Symbolic Words

Jun 06, 2023

Abstract: This study introduces a novel approach to generating dance motions using onomatopoeia as input, with the aim of enhancing creativity and diversity in dance generation. Unlike text and music, onomatopoeia conveys rhythm and meaning through abstract word expressions, without constraints on expression and without the need for specialized knowledge. We adapt the AI Choreographer framework and employ the Sakamoto system, a feature extraction method for onomatopoeia focusing on phonemes and syllables. Additionally, we present a new dataset of 40 onomatopoeia-dance motion pairs collected through a user survey. Our results demonstrate that the proposed method enables more intuitive dance generation and can create dance motions using sound-symbolic words from a variety of languages, including those without onomatopoeia. This highlights the potential for diverse dance creation across different languages and cultures, accessible to a wider audience. Qualitative samples from our model can be found at: https://sites.google.com/view/onomatopoeia-dance/home/.

VaxNeRF: Revisiting the Classic for Voxel-Accelerated Neural Radiance Field

Nov 25, 2021

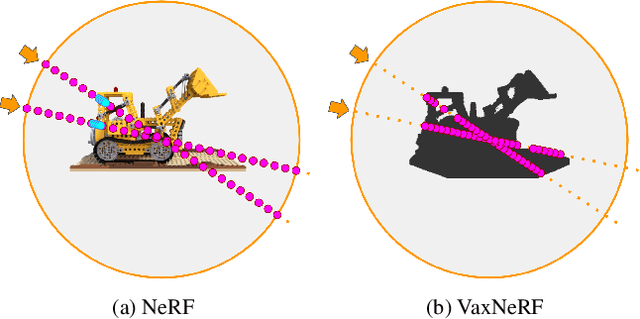

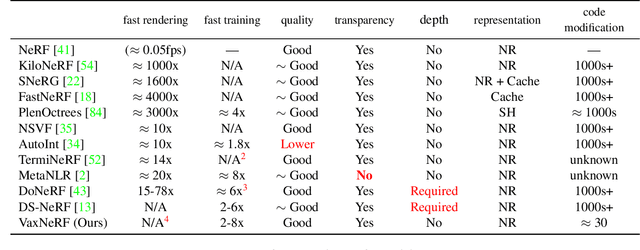

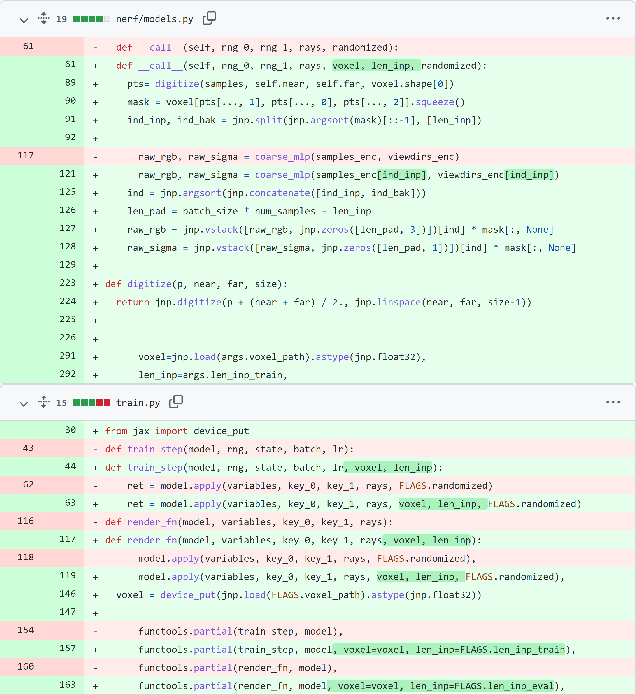

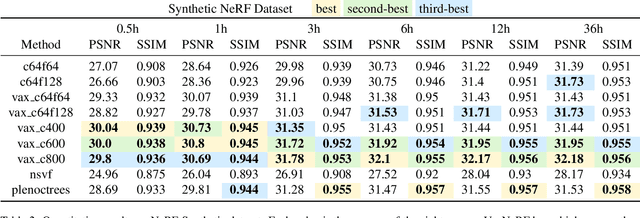

Abstract: Neural Radiance Field (NeRF) is a popular method in data-driven 3D reconstruction. Given its simplicity and high-quality rendering, many NeRF applications are being developed. However, NeRF's major limitation is its slow speed. Many attempts have been made to speed up NeRF training and inference, including intricate code-level optimization and caching, use of sophisticated data structures, and amortization through multi-task and meta learning. In this work, we revisit the basic building blocks of NeRF through the lens of classic techniques that predate NeRF. We propose Voxel-Accelerated NeRF (VaxNeRF), integrating NeRF with visual hull, a classic 3D reconstruction technique that requires only binary foreground-background pixel labels per image. Visual hull, which can be optimized in about 10 seconds, can provide coarse in-out field separation to omit substantial amounts of network evaluations in NeRF. We provide a clean, fully Pythonic, JAX-based implementation on the popular JaxNeRF codebase, consisting of only about 30 lines of code changes and a modular visual hull subroutine, and achieve about 2-8x faster learning on top of the highly performant JaxNeRF baseline with zero degradation in rendering quality. With sufficient compute, this effectively brings down full NeRF training from hours to 30 minutes. We hope VaxNeRF -- a careful combination of a classic technique with a deep method (that arguably replaced it) -- can empower and accelerate new NeRF extensions and applications, with its simplicity, portability, and reliable performance gains. Code is available at https://github.com/naruya/VaxNeRF .
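The visual hull idea in the abstract above can be illustrated with a toy sketch. This is not the VaxNeRF implementation: it assumes three hypothetical orthographic views along the coordinate axes instead of calibrated perspective cameras, and the names `carve_visual_hull` and `filter_samples` are made up for illustration. A voxel survives only if every binary silhouette marks its projection as foreground, and ray samples that fall outside the surviving voxels are dropped before any (here omitted) network evaluation.

```python
import math
from itertools import product

def carve_visual_hull(masks, n):
    """Keep voxel (x, y, z) only if every silhouette sees it as foreground.

    masks: dict mapping a dropped axis (0, 1, or 2) to an n x n binary mask,
    indexed by the two remaining coordinates in increasing axis order.
    Assumption: orthographic views along the axes (illustrative only).
    """
    hull = set()
    for voxel in product(range(n), repeat=3):
        inside = True
        for axis, mask in masks.items():
            # Orthographic projection: drop the coordinate along the view axis.
            u, v = [c for i, c in enumerate(voxel) if i != axis]
            if not mask[u][v]:
                inside = False
                break
        if inside:
            hull.add(voxel)
    return hull

def filter_samples(samples, hull):
    """Return only the sample points whose containing voxel is in the hull."""
    return [p for p in samples if tuple(math.floor(c) for c in p) in hull]

n = 8
# Hypothetical silhouettes: a centered 4x4 foreground square in each view.
square = [[1 if 2 <= u < 6 and 2 <= v < 6 else 0 for v in range(n)]
          for u in range(n)]
masks = {0: square, 1: square, 2: square}
hull = carve_visual_hull(masks, n)

# Samples along one ray through the grid, parallel to the x axis.
ray_samples = [(x + 0.5, 4.5, 4.5) for x in range(n)]
kept = filter_samples(ray_samples, hull)
print(len(hull), len(kept))
```

In this toy setup half of the samples on the ray lie outside the carved region and would never reach the network, which is the source of the savings the abstract describes.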
