Nami Ashizawa

Do Backdoors Assist Membership Inference Attacks?

Mar 22, 2023

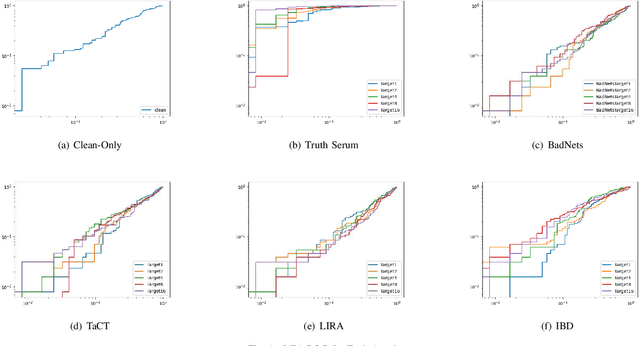

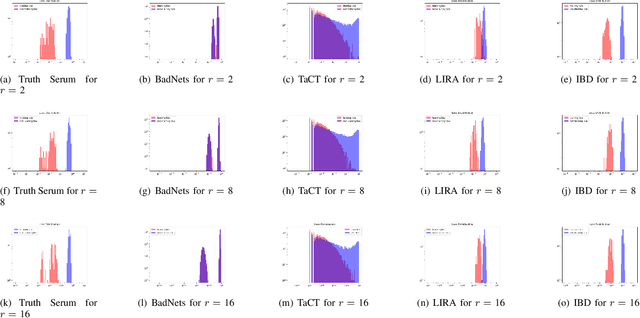

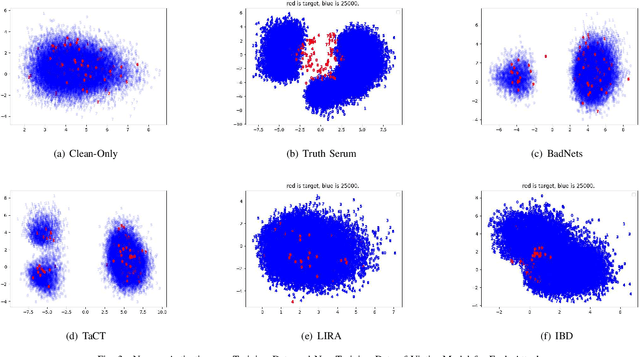

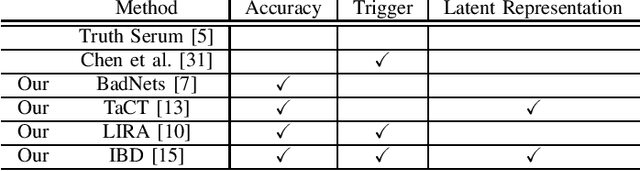

Abstract: When an adversary provides poison samples to a machine learning model, privacy leakage, such as membership inference attacks that infer whether a sample was included in the training of the model, becomes effective because the poisoning turns the sample into an outlier. However, the attacks can be detected because inference accuracy deteriorates due to the poison samples. In this paper, we discuss a *backdoor-assisted membership inference attack*, a novel membership inference attack based on backdoors that return the adversary's expected output for a triggered sample. We obtained three crucial insights through experiments with an academic benchmark dataset. First, we demonstrate that the backdoor-assisted membership inference attack is unsuccessful. Second, when we analyzed loss distributions to understand the reason for the unsuccessful results, we found that backdoors cannot separate the loss distributions of training and non-training samples. In other words, backdoors cannot affect the distribution of clean samples. Third, we show that poison and triggered samples activate neurons of different distributions. Specifically, backdoors make any clean sample an inlier, in contrast to poison samples. As a result, we confirm that backdoors cannot assist membership inference.

Eth2Vec: Learning Contract-Wide Code Representations for Vulnerability Detection on Ethereum Smart Contracts

Jan 08, 2021

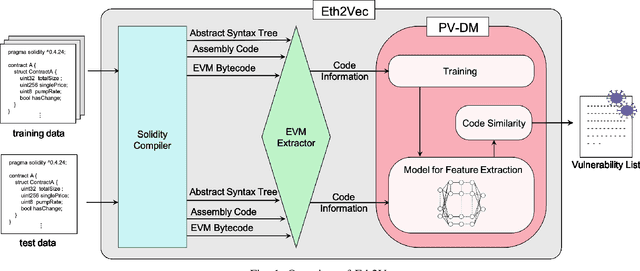

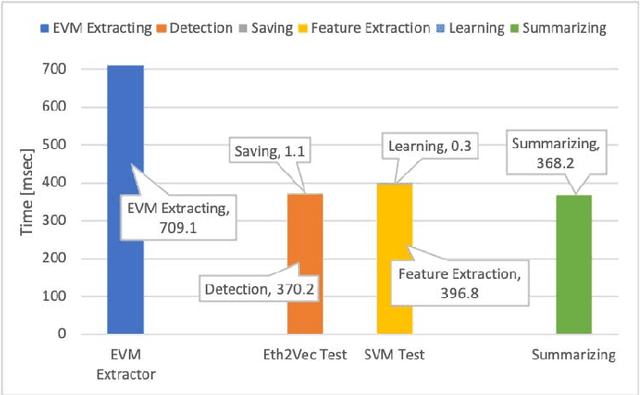

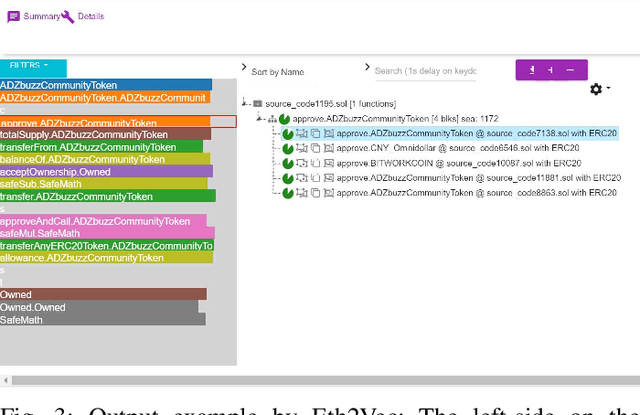

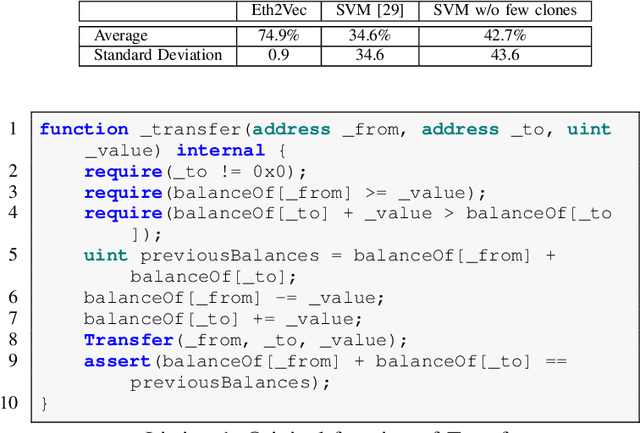

Abstract: Ethereum smart contracts are programs that run on the Ethereum blockchain, and many smart contract vulnerabilities have been discovered in the past decade. Many security analysis tools have been created to detect such vulnerabilities, but their performance decreases drastically when the code to be analyzed is rewritten. In this paper, we propose Eth2Vec, a machine-learning-based static analysis tool for vulnerability detection that is robust against code rewrites in smart contracts. Existing machine-learning-based static analysis tools for vulnerability detection need features, which analysts create manually, as inputs. In contrast, Eth2Vec automatically learns features of vulnerable Ethereum Virtual Machine (EVM) bytecode with tacit knowledge through a neural network for language processing. Therefore, Eth2Vec can detect vulnerabilities in smart contracts by comparing the code similarity between target EVM bytecode and the EVM bytecode it has already learned. We conducted experiments with existing open databases, such as Etherscan, and our results show that Eth2Vec outperforms the existing work in terms of well-known metrics, i.e., precision, recall, and F1-score. Moreover, Eth2Vec can detect vulnerabilities even in rewritten code.

Self-Organizing Map assisted Deep Autoencoding Gaussian Mixture Model for Intrusion Detection

Aug 28, 2020

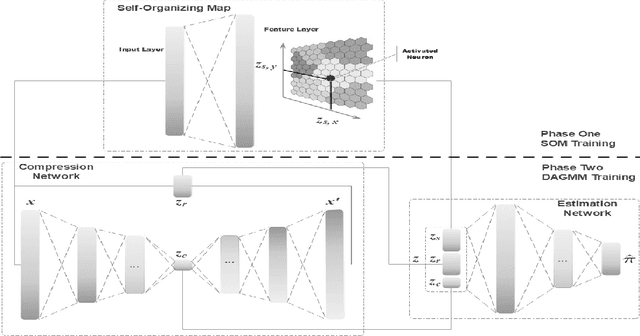

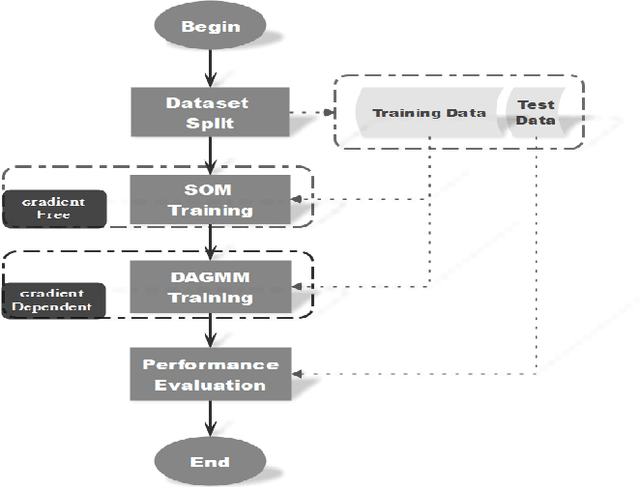

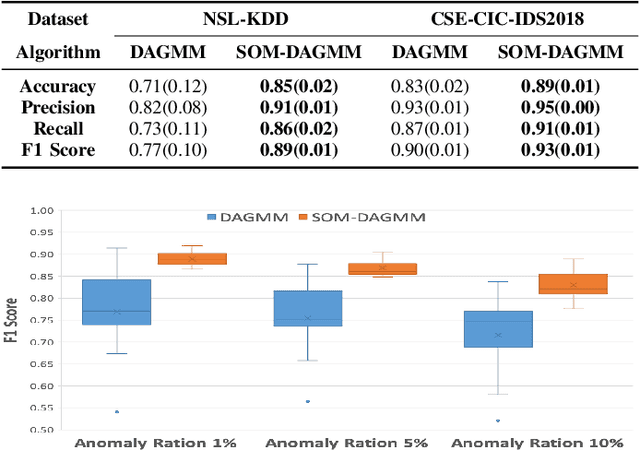

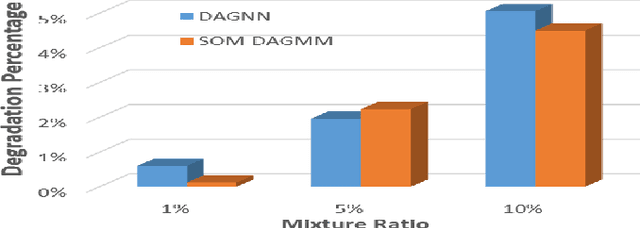

Abstract: In the information age, a secure and stable network environment is essential, and hence intrusion detection is critical for any network. In this paper, we propose a self-organizing map assisted deep autoencoding Gaussian mixture model (SOM-DAGMM) supplemented with well-preserved input space topology for more accurate network intrusion detection. The deep autoencoding Gaussian mixture model comprises a compression network and an estimation network, which can be trained jointly in an unsupervised manner. However, the code generated by the autoencoder is inept at preserving the topology of the input space, which is rooted in the bottleneck of the adopted deep structure. A self-organizing map is introduced to construct SOM-DAGMM to address this issue. The superiority of the proposed SOM-DAGMM is empirically demonstrated with extensive experiments conducted on two datasets. Experimental results show that SOM-DAGMM outperforms the state-of-the-art DAGMM on all tests and achieves up to 15.58% improvement in F1 score, along with better stability.
