Nabeel Nisar Bhat

Cross-layer Integrated Sensing and Communication: A Joint Industrial and Academic Perspective

May 16, 2025

Abstract: Integrated sensing and communication (ISAC) enables radio systems to simultaneously sense and communicate with their environment. This paper, developed within the Hexa-X-II project funded by the European Union, presents a comprehensive cross-layer vision for ISAC in 6G networks, integrating insights from physical-layer design, hardware architectures, AI-driven intelligence, and protocol-level innovations. We begin by revisiting the foundational principles of ISAC, highlighting synergies and trade-offs between sensing and communication across different integration levels. Enabling technologies, such as multiband operation, massive and distributed MIMO, non-terrestrial networks, reconfigurable intelligent surfaces, and machine learning, are analyzed in conjunction with hardware considerations including waveform design, synchronization, and full-duplex operation. To bridge implementation and system-level evaluation, we introduce a quantitative cross-layer framework linking design parameters to key performance and value indicators. By synthesizing perspectives from both academia and industry, this paper outlines how deeply integrated ISAC can transform 6G into a programmable and context-aware platform supporting applications from reliable wireless access to autonomous mobility and digital twinning.
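The abstract does not spell out the cross-layer framework itself. As a hedged illustration of the kind of parameter-to-KPI link it describes, the Python sketch below uses the standard monostatic radar relation ΔR = c / (2B) between signal bandwidth B (a physical-layer design parameter) and range resolution ΔR (a sensing KPI). The function name and the example bandwidths are assumptions chosen for illustration, not values from the paper.

```python
# Hypothetical illustration: one well-known mapping from a design parameter
# (signal bandwidth B) to a sensing KPI (range resolution). This is NOT the
# paper's cross-layer framework, only an example of the kind of
# parameter-to-KPI relation such a framework could contain.

SPEED_OF_LIGHT = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Monostatic radar range resolution in metres: delta_R = c / (2 * B)."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # Example bandwidths (assumed values); 2.16 GHz is one IEEE 802.11ad channel
    for label, bw in [("100 MHz", 100e6), ("400 MHz", 400e6), ("2.16 GHz", 2.16e9)]:
        print(f"{label:>9}: {range_resolution_m(bw) * 100:.1f} cm")
```

Wider bandwidth, such as that available in mmWave bands or through multiband operation, yields finer range resolution, which is one reason these bands are attractive for sensing.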

CSI4Free: GAN-Augmented mmWave CSI for Improved Pose Classification

Jun 26, 2024

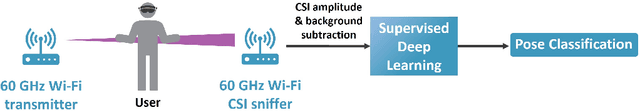

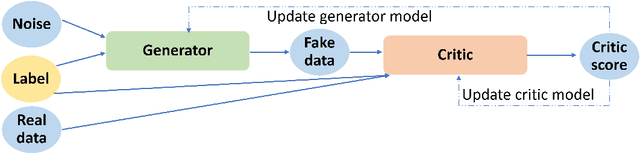

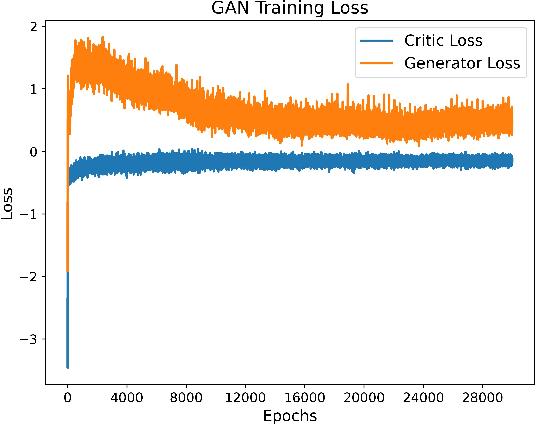

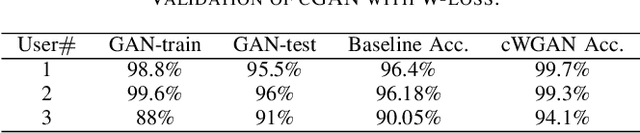

Abstract: In recent years, Joint Communication and Sensing (JC&S) has demonstrated significant success, particularly at sub-6 GHz frequencies with commercial off-the-shelf (COTS) Wi-Fi devices, for applications such as localization, gesture recognition, and pose classification. Deep learning and the availability of large public datasets have been pivotal to these results. However, at mmWave frequencies (30-300 GHz), which have shown potential for more accurate sensing, research on COTS Wi-Fi sensing remains scarce. Limited research hardware, the absence of large datasets, limited functionality in COTS hardware, and the complexity of data collection all hinder a comprehensive exploration of this field. In this work, we address these challenges by developing a method to generate synthetic mmWave channel state information (CSI) samples. Specifically, we train a generative adversarial network (GAN) on an existing dataset to generate 30,000 additional CSI samples. The augmented samples are highly consistent with the original data, as indicated by high GAN-train and GAN-test scores. Furthermore, we incorporate the augmented samples into the training of a pose classification model and observe that they complement the real data and improve the model's generalization.
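GAN-train and GAN-test scores are commonly defined as follows: GAN-train is the accuracy of a classifier trained on generated samples and evaluated on real samples, while GAN-test is the accuracy of a classifier trained on real samples and evaluated on generated samples. The minimal Python sketch below illustrates that evaluation protocol under stated assumptions: Gaussian clusters stand in for CSI feature vectors, a second random draw stands in for GAN output, and a simple nearest-centroid classifier replaces the deep classifier. All function names are hypothetical; this is not the paper's implementation.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], int]  # (feature vector, class label)


def make_toy_csi(n_per_class: int, n_classes: int = 3, dim: int = 8,
                 noise: float = 0.5, seed: int = 0) -> List[Sample]:
    """Hypothetical stand-in for CSI features: one Gaussian cluster per class."""
    rng = random.Random(seed)
    data: List[Sample] = []
    for label in range(n_classes):
        center = [2.0 * label] * dim
        for _ in range(n_per_class):
            data.append(([c + rng.gauss(0.0, noise) for c in center], label))
    return data


def fit_centroids(data: Sequence[Sample]) -> Dict[int, List[float]]:
    """Nearest-centroid 'training': average the feature vectors of each class."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, y in data:
        if y not in sums:
            sums[y], counts[y] = [0.0] * len(x), 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def accuracy(centroids: Dict[int, List[float]], data: Sequence[Sample]) -> float:
    """Fraction of samples whose nearest centroid carries the correct label."""
    correct = 0
    for x, y in data:
        pred = min(centroids, key=lambda c: math.dist(x, centroids[c]))
        correct += int(pred == y)
    return correct / len(data)


def gan_train_score(synthetic: Sequence[Sample], real_test: Sequence[Sample]) -> float:
    """GAN-train: train on synthetic samples, evaluate on real samples."""
    return accuracy(fit_centroids(synthetic), real_test)


def gan_test_score(real_train: Sequence[Sample], synthetic: Sequence[Sample]) -> float:
    """GAN-test: train on real samples, evaluate on synthetic samples."""
    return accuracy(fit_centroids(real_train), synthetic)


if __name__ == "__main__":
    real_train = make_toy_csi(100, seed=1)
    real_test = make_toy_csi(50, seed=2)
    synthetic = make_toy_csi(100, seed=3)  # placeholder for GAN output
    print(f"GAN-train: {gan_train_score(synthetic, real_test):.3f}")
    print(f"GAN-test:  {gan_test_score(real_train, synthetic):.3f}")
```

Relabeling the synthetic set, for example `[(x, (y + 1) % 3) for x, y in synthetic]`, drives GAN-train toward zero, showing that the score penalizes synthetic samples that do not match the class-conditional distribution of the real data.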

Gesture Recognition with mmWave Wi-Fi Access Points: Lessons Learned

Jun 29, 2023

Abstract: In recent years, channel state information (CSI) at sub-6 GHz has been widely exploited for Wi-Fi sensing, particularly for activity and gesture recognition. In this work, we instead explore mmWave (60 GHz) Wi-Fi signals for gesture recognition/pose estimation. We focus on mmWave Wi-Fi because the same signals can serve both high-data-rate communication and improved sensing, e.g., for extended reality (XR) applications. To this end, we extract spatial beam signal-to-noise ratios (SNRs) from the periodic beam training employed by IEEE 802.11ad devices. We consider a set of 10 gestures/poses motivated by XR applications and conduct experiments in two environments with three people. For comparison, we also collect CSI from IEEE 802.11ac devices. To extract features from the CSI and the beam SNRs, we leverage a deep neural network (DNN). On the beam SNR task, the DNN classifier achieves a state-of-the-art accuracy of 96.7% in a single environment, even with a limited dataset. We also compare the robustness of beam SNR and CSI features across different environments. Our experiments reveal that CSI features generalize across environments without additional re-training, whereas beam SNR features do not and therefore require re-training.
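The abstract does not describe the exact preprocessing. The Python sketch below assumes that each sample consists of T periodic sector sweeps, each reporting one SNR value (in dB) per transmit sector, and shows one plausible way to turn them into a feature vector, together with the leave-one-environment-out split used to test cross-environment generalization. The per-sample z-scoring, the function names, and the environment labels are hypothetical.

```python
from statistics import mean, pstdev
from typing import Iterator, List, Sequence, Tuple

# Hypothetical types for labeled samples
Labeled = Tuple[List[float], int]      # (feature vector, gesture label)
Tagged = Tuple[str, List[float], int]  # (environment, features, label)


def beam_snr_feature(sweeps: Sequence[Sequence[float]]) -> List[float]:
    """Flatten T sweeps x N sectors of beam SNR (dB) into one z-scored vector.

    Assumed preprocessing: subtracting the per-sample mean removes a common
    SNR offset, while the relative pattern across sectors is preserved.
    """
    flat = [float(snr) for sweep in sweeps for snr in sweep]
    mu, sigma = mean(flat), pstdev(flat)
    if sigma == 0:
        sigma = 1.0  # constant input: avoid division by zero
    return [(v - mu) / sigma for v in flat]


def leave_one_environment_out(
        samples: Sequence[Tagged]) -> Iterator[Tuple[str, List[Labeled], List[Labeled]]]:
    """Yield (held-out environment, train split, test split) per environment."""
    envs = sorted({env for env, _, _ in samples})
    for held_out in envs:
        train = [(x, y) for env, x, y in samples if env != held_out]
        test = [(x, y) for env, x, y in samples if env == held_out]
        yield held_out, train, test


if __name__ == "__main__":
    # Toy data: 2 sweeps x 4 sectors per sample; values and room names are made up
    data: List[Tagged] = [
        ("lab", beam_snr_feature([[10, 12, 8, 5], [11, 12, 7, 5]]), 0),
        ("office", beam_snr_feature([[3, 9, 14, 6], [4, 9, 13, 6]]), 1),
    ]
    for env, train, test in leave_one_environment_out(data):
        print(f"held out {env}: {len(train)} train / {len(test)} test")
```

With two environments, leave-one-environment-out reduces to training in one room and testing in the other, which is the setting in which the abstract reports that beam SNR features require re-training.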
