Myeongkyun Kang

Few Shot Part Segmentation Reveals Compositional Logic for Industrial Anomaly Detection

Dec 21, 2023

Abstract: Logical anomalies (LA) refer to data violating underlying logical constraints, e.g., the quantity, arrangement, or composition of components within an image. Accurately detecting such anomalies requires models to reason about various component types through segmentation. However, curating pixel-level annotations for semantic segmentation is both time-consuming and expensive. Although there are some prior few-shot or unsupervised co-part segmentation algorithms, they often fail on images of industrial objects. These images contain components with similar textures and shapes, which makes precise differentiation challenging. In this study, we introduce a novel component segmentation model for LA detection that leverages a few labeled samples and unlabeled images sharing logical constraints. To ensure consistent segmentation across unlabeled images, we employ a histogram matching loss in conjunction with an entropy loss. As segmentation predictions play a crucial role, we propose to enhance both local and global sample validity detection by capturing key aspects of visual semantics via three memory banks: class histograms, component composition embeddings, and patch-level representations. For effective LA detection, we propose an adaptive scaling strategy to standardize anomaly scores from the different memory banks at inference. Extensive experiments on the public benchmark MVTec LOCO AD show that our method achieves 98.1% AUROC in LA detection vs. 89.6% from competing methods.
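
The abstract does not spell out how the class-histogram memory bank or the score standardization is computed. As a rough illustration only, the sketch below assumes a nearest-neighbor L1 distance to a bank of histograms from normal images, and a z-score per memory bank fitted on scores of normal samples. The function names (`class_histogram`, `nn_distance`, `fit_scaler`, `combined_score`) are hypothetical and not taken from the paper.

```python
from statistics import mean, pstdev


def class_histogram(seg_map, num_classes):
    """Fraction of pixels assigned to each class in a 2D label map."""
    counts = [0] * num_classes
    total = 0
    for row in seg_map:
        for label in row:
            counts[label] += 1
            total += 1
    return [c / total for c in counts]


def nn_distance(query, bank):
    """L1 distance from the query vector to its nearest neighbor in the bank."""
    return min(sum(abs(q - b) for q, b in zip(query, vec)) for vec in bank)


def fit_scaler(normal_scores):
    """Mean and standard deviation of one bank's scores on normal samples.

    Assumption: z-score standardization; the paper's adaptive scaling may differ.
    """
    mu = mean(normal_scores)
    sigma = pstdev(normal_scores) or 1.0  # avoid division by zero
    return mu, sigma


def combined_score(raw_scores, scalers):
    """Sum of per-bank scores after standardizing each with its own scaler."""
    return sum((s - mu) / sigma for s, (mu, sigma) in zip(raw_scores, scalers))
```

Standardizing each bank separately keeps a bank with a naturally large score range (for example, patch-level distances) from dominating the sum.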

Data Generation using Texture Co-occurrence and Spatial Self-Similarity for Debiasing

Oct 15, 2021

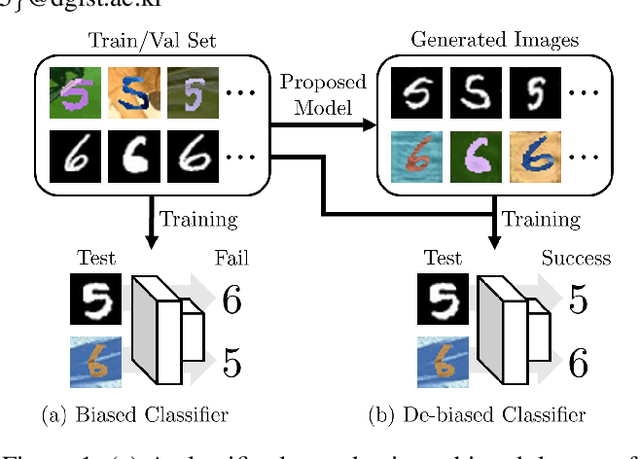

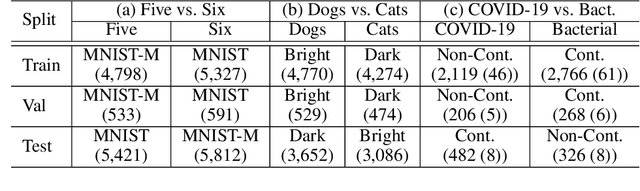

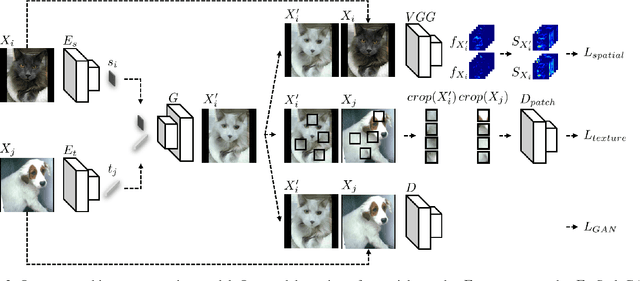

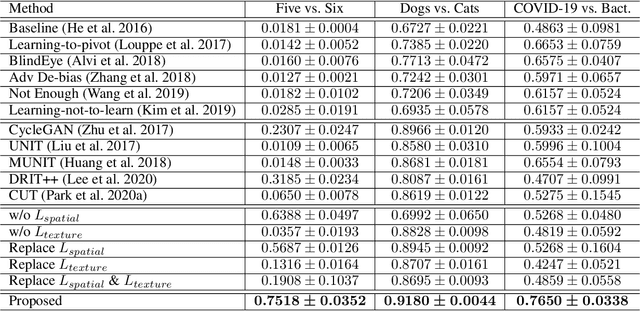

Abstract: Classification models trained on biased datasets usually perform poorly on out-of-distribution samples because biased representations are embedded into the model. Recently, adversarial learning methods have been proposed to disentangle biased representations, but it is challenging to discard only the biased features without altering other relevant information. In this paper, we propose a novel de-biasing approach that explicitly generates additional images using texture representations of oppositely labeled images to enlarge the training dataset and mitigate the effect of biases when training a classifier. Each newly generated image retains the spatial information of a source image while adopting the texture of a target image with the opposite label. Our model integrates a texture co-occurrence loss that determines whether a generated image's texture is similar to that of the target, and a spatial self-similarity loss that determines whether the spatial details between the generated and source images are well preserved. Both generated and original training images are then used to train a classifier that is able to avoid learning unknown bias representations. We employ three distinct artificially designed datasets with known biases to demonstrate the ability of our method to mitigate bias information, and report competitive performance over existing state-of-the-art methods.
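
The abstract does not give the exact form of the spatial self-similarity loss. One plausible reading, sketched here purely as an assumption, compares the pairwise cosine-similarity maps of per-location feature vectors from the source and generated images. The names `self_similarity` and `self_similarity_loss` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def self_similarity(features):
    """Pairwise cosine similarity between feature vectors at all locations."""
    return [[cosine(a, b) for b in features] for a in features]


def self_similarity_loss(source_feats, generated_feats):
    """Mean absolute difference between the two self-similarity maps."""
    src = self_similarity(source_feats)
    gen = self_similarity(generated_feats)
    n = len(src)
    total = sum(abs(src[i][j] - gen[i][j]) for i in range(n) for j in range(n))
    return total / (n * n)
```

Because the maps depend only on how locations relate to one another, rescaling every feature vector (a crude stand-in for a texture change) leaves the loss at zero, while collapsing distinct locations onto the same feature raises it.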

Mixing-AdaSIN: Constructing a de-biased dataset using Adaptive Structural Instance Normalization and texture Mixing

Mar 26, 2021

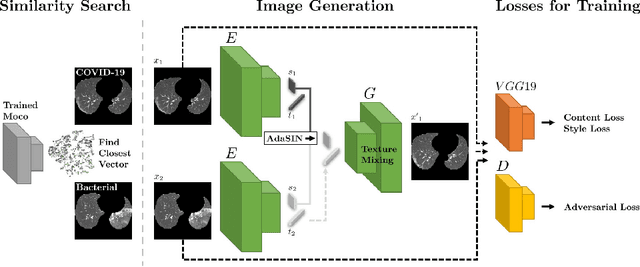

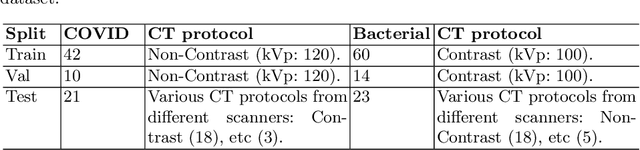

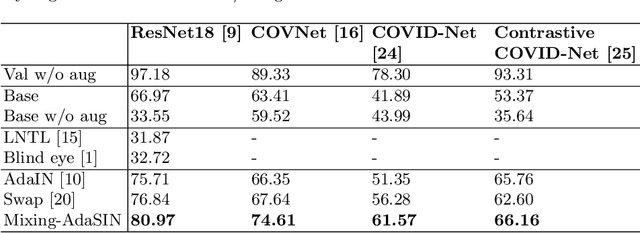

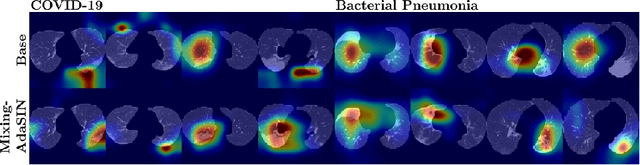

Abstract: Following the pandemic outbreak, several works have proposed to diagnose COVID-19 with deep learning in computed tomography (CT), reporting performance on par with experts. However, models trained and tested on the same in-distribution data may rely on inherent data biases for successful prediction, failing to generalize to out-of-distribution samples or CT acquired with different scanning protocols. Early attempts have partly addressed bias mitigation and generalization through augmentation or re-sampling, but are still limited by collection costs and the difficulty of quantifying bias in medical images. In this work, we propose Mixing-AdaSIN, a bias mitigation method that uses a generative model to generate de-biased images by mixing texture information between differently labeled CT scans with semantically similar features. Here, we use Adaptive Structural Instance Normalization (AdaSIN) to enhance de-biasing generation quality and guarantee structural consistency. A classifier subsequently trained with the generated images learns to correctly predict the label without bias and generalizes better. To demonstrate the efficacy of our method, we construct a biased COVID-19 vs. bacterial pneumonia dataset based on CT protocols and compare with existing state-of-the-art de-biasing methods. Our experiments show that classifiers trained with de-biased generated images report improved in-distribution performance and generalization on an external COVID-19 dataset.
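
The abstract does not define AdaSIN itself. For background only, the sketch below shows plain adaptive instance normalization (AdaIN) on a single flattened feature channel, the general family that the name suggests AdaSIN builds on. It is not the paper's AdaSIN layer, and `adain_channel` is a hypothetical helper name.

```python
from statistics import mean, pstdev


def adain_channel(content, style, eps=1e-5):
    """Plain AdaIN on one channel, NOT the paper's AdaSIN.

    Normalizes the content values to zero mean and unit variance, then
    rescales them to the mean and standard deviation of the style values.
    """
    mu_c, sd_c = mean(content), pstdev(content)
    mu_s, sd_s = mean(style), pstdev(style)
    return [sd_s * (x - mu_c) / (sd_c + eps) + mu_s for x in content]


# Usage: the output keeps the ordering of the content values (structure)
# but takes on the statistics of the style values (texture).
mixed = adain_channel([0.0, 2.0], [10.0, 14.0])
```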
