Muying Luo

P2PFormer: A Primitive-to-polygon Method for Regular Building Contour Extraction from Remote Sensing Images

Jun 05, 2024

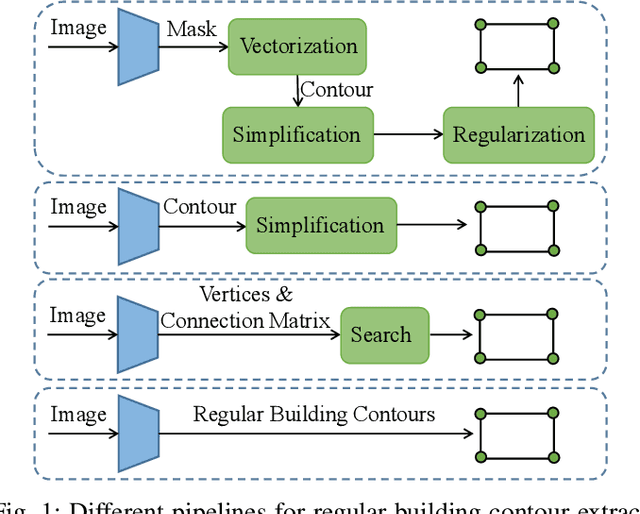

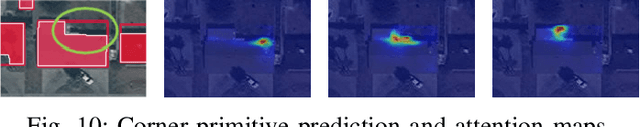

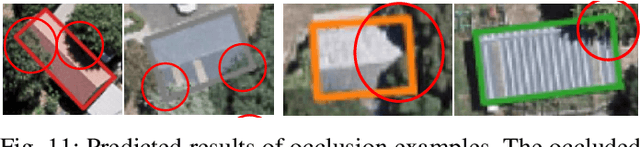

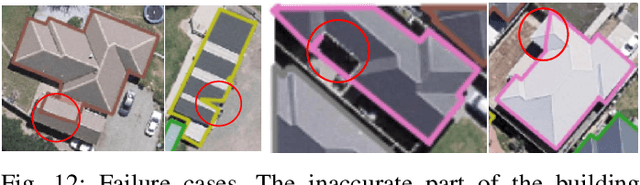

Abstract: Extracting building contours from remote sensing imagery is a significant challenge due to buildings' complex and diverse shapes, occlusions, and noise. Existing methods often struggle with irregular contours, rounded corners, and redundant points, necessitating extensive post-processing to produce regular polygonal building contours. To address these challenges, we introduce a novel, streamlined pipeline that generates regular building contours without post-processing. Our approach begins with the segmentation of generic geometric primitives (which can include vertices, lines, and corners), followed by the prediction of their sequence. This allows for the direct construction of regular building contours by sequentially connecting the segmented primitives. Building on this pipeline, we developed P2PFormer, which utilizes a transformer-based architecture to segment geometric primitives and predict their order. To enhance the segmentation of primitives, we introduce a unique representation called group queries. This representation comprises a set of queries and a single query position, which improve the focus on multiple midpoints of primitives and their efficient linkage. Furthermore, we propose an innovative implicit update strategy for the query position embedding, aimed at sharpening the focus of queries on the correct positions and, consequently, enhancing the quality of primitive segmentation. Our experiments demonstrate that P2PFormer achieves new state-of-the-art performance on the WHU, CrowdAI, and WHU-Mix datasets, surpassing the previous SOTA PolyWorld by a margin of 2.7 AP and 6.5 AP75 on the largest CrowdAI dataset. We intend to make the code and trained weights publicly available to promote their use and facilitate further research.
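The "segment primitives, predict their order, then connect them" idea can be illustrated with a minimal sketch. This is not the P2PFormer implementation: the function names `assemble_polygon` and `shoelace_area`, the use of vertices as the only primitive type, and the scalar per-primitive order score are all assumptions made here for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def assemble_polygon(vertices: List[Point], order_scores: List[float]) -> List[Point]:
    """Connect vertex primitives in ascending order of a predicted order score.

    Hypothetical helper: assumes each primitive is a single vertex and that
    the model emits one scalar score per primitive encoding its position
    along the contour.
    """
    if len(vertices) != len(order_scores):
        raise ValueError("each vertex needs exactly one order score")
    ranked = sorted(zip(order_scores, vertices), key=lambda pair: pair[0])
    return [vertex for _, vertex in ranked]


def shoelace_area(polygon: List[Point]) -> float:
    """Area of a closed polygon (last vertex implicitly joins the first)."""
    twice_area = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Four unordered corner detections of a 4 x 3 rectangle.
detected = [(0.0, 0.0), (4.0, 3.0), (4.0, 0.0), (0.0, 3.0)]
scores = [0.1, 0.6, 0.3, 0.9]  # illustrative values, not model output
contour = assemble_polygon(detected, scores)
area = shoelace_area(contour)
```

Connecting the detections in their raw order would produce a self-intersecting "bow-tie"; sorting by the predicted order yields the rectangle directly, which is why no post-processing step is needed in this toy setting.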

BuildMapper: A Fully Learnable Framework for Vectorized Building Contour Extraction

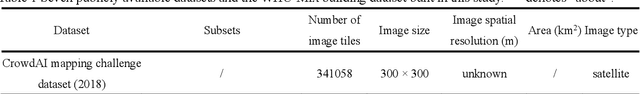

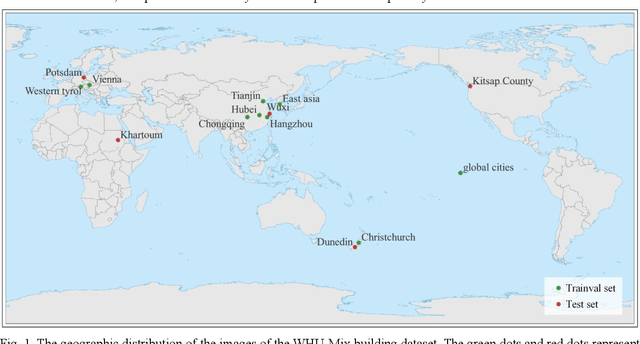

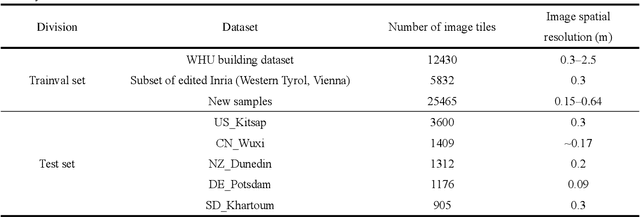

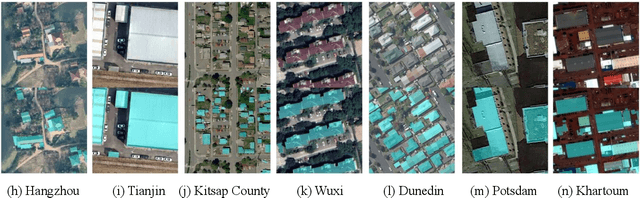

Nov 07, 2022

Abstract: Deep learning-based methods have significantly boosted the study of automatic building extraction from remote sensing images. However, delineating vectorized and regular building contours like a human does remains very challenging, due to the difficulty of the methodology, the diversity of building structures, and the imperfect imaging conditions. In this paper, we propose the first end-to-end learnable building contour extraction framework, named BuildMapper, which can directly and efficiently delineate building polygons just as a human does. BuildMapper consists of two main components: 1) a contour initialization module that generates initial building contours; and 2) a contour evolution module that performs both contour vertex deformation and reduction, which removes the need for the complex empirical post-processing used in existing methods. In both components, we provide new ideas, including a learnable contour initialization method to replace the empirical methods, dynamic pairing of predicted and ground-truth vertices to address the static vertex correspondence problem, and a lightweight encoder for vertex information extraction and aggregation, all of which benefit a general contour-based method; and a well-designed vertex classification head for building corner vertex detection, which sheds light on direct structured building contour extraction. We also built a suitable large-scale building dataset, the WHU-Mix (vector) building dataset, to benefit the study of contour-based building extraction methods. The extensive experiments conducted on the WHU-Mix (vector) dataset, the WHU dataset, and the CrowdAI dataset verified that BuildMapper can achieve state-of-the-art performance, with a higher mask average precision (AP) and boundary AP than both segmentation-based and contour-based methods.
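As a rough illustration of what "vertex deformation and reduction" could look like once a network has produced its outputs, the sketch below shifts each contour vertex by a predicted offset and then keeps only vertices classified as corners. It is not BuildMapper's code: the function name `evolve_contour`, the per-vertex offset and corner-probability inputs, and the 0.5 threshold are assumptions chosen for this example.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def evolve_contour(
    contour: List[Point],
    offsets: List[Point],
    corner_probs: List[float],
    threshold: float = 0.5,  # assumed cut-off, not taken from the paper
) -> List[Point]:
    """Deform every vertex, then drop vertices not classified as corners."""
    if not (len(contour) == len(offsets) == len(corner_probs)):
        raise ValueError("contour, offsets and corner_probs must align")
    # Deformation: move each vertex by its predicted (dx, dy) offset.
    moved = [(x + dx, y + dy) for (x, y), (dx, dy) in zip(contour, offsets)]
    # Reduction: keep only vertices whose corner probability passes the threshold.
    return [p for p, prob in zip(moved, corner_probs) if prob >= threshold]


# A square with one redundant vertex on its bottom edge (illustrative values).
initial = [(0.0, 0.0), (2.0, 0.5), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
offsets = [(0.0, 0.0), (0.0, -0.5), (0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
corner_probs = [0.9, 0.1, 0.8, 0.95, 0.7]
polygon = evolve_contour(initial, offsets, corner_probs)
```

The redundant mid-edge vertex is first pulled onto the edge and then discarded, leaving the four corners of the square.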

A diverse large-scale building dataset and a novel plug-and-play domain generalization method for building extraction

Aug 22, 2022

Abstract: In this paper, we introduce a new building dataset and propose a novel domain generalization method to facilitate the development of building extraction from high-resolution remote sensing images. The problem with current building datasets is that they lack diversity, their label quality is unsatisfactory, and they can hardly be used to train a building extraction model with good generalization ability or to properly evaluate the real performance of a model in practical scenes. To address these issues, we built a diverse, large-scale, and high-quality building dataset named the WHU-Mix building dataset, which is more practice-oriented. The WHU-Mix building dataset consists of a training/validation set containing 43,727 diverse images collected from all over the world, and a test set containing 8,402 images from five other cities on five continents. In addition, to further improve the generalization ability of a building extraction model, we propose a domain generalization method named batch style mixing (BSM), which can be embedded as an efficient plug-and-play module in the front-end of a building extraction model, providing the model with a progressively larger data distribution from which to learn data-invariant knowledge. The experiments conducted in this study confirmed the potential of the WHU-Mix building dataset to improve the performance of a building extraction model, resulting in a 6-36% improvement in mIoU compared to the other existing datasets. The inaccurate labels in the other datasets can cause a decrease of about 20% in IoU. The experiments also confirmed the high performance of the proposed BSM module in enhancing the generalization ability and robustness of a model, exceeding the baseline model without domain generalization by 13% and the recent domain generalization methods by 4-15% in mIoU.
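For readers unfamiliar with the reported metric, the following sketch computes IoU and mIoU on small label masks. It assumes mIoU is the mean of the building and background IoU values, which is a common convention but is not stated in the abstract; the function names and the toy masks are illustrative only.

```python
from typing import List

Mask = List[List[int]]  # 1 = building, 0 = background


def iou(pred: Mask, truth: Mask, cls: int) -> float:
    """Intersection over union for one class on two equally sized masks."""
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += int(p == cls and t == cls)
            union += int(p == cls or t == cls)
    # Convention chosen here: a class absent from both masks scores 0.0.
    return inter / union if union else 0.0


def mean_iou(pred: Mask, truth: Mask) -> float:
    """Mean IoU over the building and background classes (assumed definition)."""
    return (iou(pred, truth, 1) + iou(pred, truth, 0)) / 2.0


pred = [[1, 1, 0],
        [0, 0, 0]]
truth = [[1, 0, 0],
         [0, 0, 0]]
building_iou = iou(pred, truth, 1)    # 1 shared pixel / 2 pixels in the union
background_iou = iou(pred, truth, 0)  # 4 shared pixels / 5 pixels in the union
miou = mean_iou(pred, truth)
```

A single false-positive building pixel halves the building IoU in this toy case while barely moving the background IoU, which shows how label errors can depress IoU sharply on small objects.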
