Muxin Pu

Siformer: Feature-isolated Transformer for Efficient Skeleton-based Sign Language Recognition

Mar 26, 2025

Abstract:Sign language recognition (SLR) refers to interpreting sign language glosses from given videos automatically. This research area presents a complex challenge in computer vision because of the rapid and intricate movements inherent in sign languages, which encompass hand gestures, body postures, and even facial expressions. Recently, skeleton-based action recognition has attracted increasing attention due to its ability to handle variations in subjects and backgrounds independently. However, current skeleton-based SLR methods exhibit three limitations: 1) they often neglect the importance of realistic hand poses, with most studies training SLR models on non-realistic skeletal representations; 2) they tend to assume complete data availability in both the training and inference phases, and capture intricate relationships among different body parts collectively; 3) they treat all sign glosses uniformly, failing to account for differences in the complexity of their skeletal representations. To enhance the realism of hand skeletal representations, we present a kinematic hand pose rectification method for enforcing constraints. To mitigate the impact of missing data, we propose a feature-isolated mechanism that focuses on capturing local spatial-temporal context. This mechanism captures the context concurrently and independently from individual features, thus enhancing the robustness of the SLR model. Additionally, to adapt to the varying complexity levels of sign glosses, we develop an input-adaptive inference approach to optimise computational efficiency and accuracy. Experimental results demonstrate the effectiveness of our approach, which achieves new state-of-the-art (SOTA) performance on WLASL100 and LSA64. For WLASL100, we achieve a top-1 accuracy of 86.50%, marking a relative improvement of 2.39% over the previous SOTA. For LSA64, we achieve a top-1 accuracy of 99.84%.

Robustness Evaluation in Hand Pose Estimation Models using Metamorphic Testing

Mar 08, 2023

Abstract:Hand pose estimation (HPE) is a task that predicts and describes hand poses from images or video frames. When HPE models estimate hand poses captured in a laboratory or under controlled environments, they normally deliver good performance. However, the real-world environment is complex, and various uncertainties may arise that degrade the performance of HPE models. For example, the hands could be occluded, the visibility of hands could be reduced by imperfect exposure, and the contours of hands are prone to blurring during fast hand movements. In this work, we adopt metamorphic testing to evaluate the robustness of HPE models and provide suggestions on the choice of HPE models for different applications. The robustness evaluation was conducted on four state-of-the-art models, namely MediaPipe hands, OpenPose, BodyHands, and NSRM hand. We found that on average more than 80% of the hands could not be identified by BodyHands, and at least 50% of hands could not be identified by MediaPipe hands when diagonal motion blur is introduced, while an average of more than 50% of strongly underexposed hands could not be correctly estimated by NSRM hand. Similarly, applying occlusions to only four hand joints also largely degrades the performance of these models. The experimental results show that occlusions, illumination variations, and motion blur are the main obstacles to the performance of existing HPE models. These findings may pave the way for researchers to improve the performance and robustness of hand pose estimation models and their applications.
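The metamorphic-testing idea in this abstract can be illustrated with a minimal sketch. It assumes a relation of the form "a hand detected in the source image should still be detected after diagonal motion blur". The names `diagonal_motion_blur`, `toy_detector`, and `metamorphic_failure_rate`, the 5-tap kernel, and the 0.5 threshold are all hypothetical choices for illustration; `toy_detector` is only a stand-in and is not any of the four HPE models evaluated in the paper.

```python
def diagonal_motion_blur(image, size=5):
    """Average each pixel with its neighbours along the main diagonal.

    `image` is a list of rows of floats (grayscale). Out-of-range
    coordinates are clamped to the nearest edge pixel.
    """
    height, width = len(image), len(image[0])
    half = size // 2
    taps = 2 * half + 1  # number of samples actually averaged
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for k in range(-half, half + 1):
                yy = min(max(y + k, 0), height - 1)
                xx = min(max(x + k, 0), width - 1)
                total += image[yy][xx]
            row.append(total / taps)
        blurred.append(row)
    return blurred


def toy_detector(image, threshold=0.5):
    """Hypothetical stand-in for an HPE model: reports a 'hand' if any
    pixel is brighter than the threshold."""
    return max(max(row) for row in image) > threshold


def metamorphic_failure_rate(images, transform, detector):
    """Fraction of source-detected images that are no longer detected
    after the transform (a violation of the metamorphic relation)."""
    detected = [img for img in images if detector(img)]
    if not detected:
        return 0.0
    failures = sum(1 for img in detected if not detector(transform(img)))
    return failures / len(detected)


# Usage: a uniformly bright image survives the blur, while a single
# bright pixel is smeared below the detection threshold.
bright = [[1.0] * 9 for _ in range(9)]
single = [[0.0] * 9 for _ in range(9)]
single[4][4] = 1.0
rate = metamorphic_failure_rate([bright, single], diagonal_motion_blur, toy_detector)
```

The point of the design is that no ground-truth hand annotation is needed: the source output serves as the reference for the follow-up output, which is how metamorphic testing sidesteps the test oracle problem.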

Metamorphic Testing-based Adversarial Attack to Fool Deepfake Detectors

Apr 19, 2022

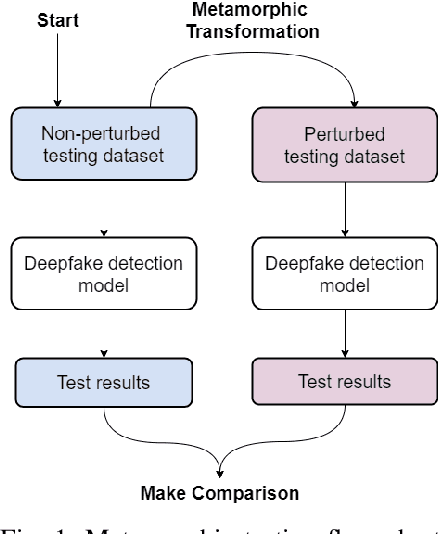

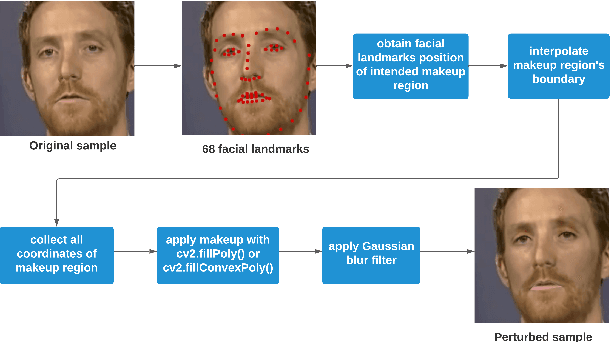

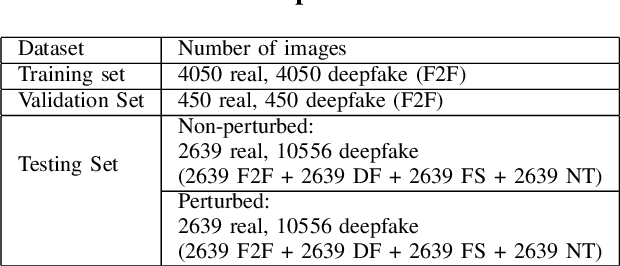

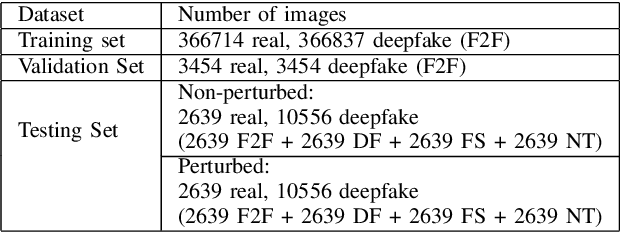

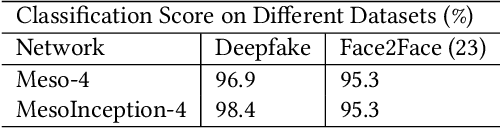

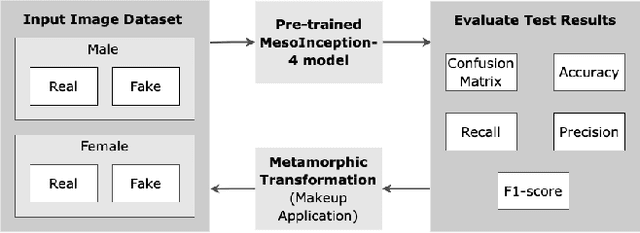

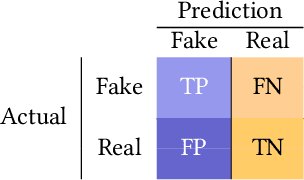

Abstract:Deepfakes utilise Artificial Intelligence (AI) techniques to create synthetic media where the likeness of one person is replaced with another. There are growing concerns that deepfakes can be maliciously used to create misleading and harmful digital content. As deepfakes become more common, there is a dire need for deepfake detection technology to help spot deepfake media. Present deepfake detection models are able to achieve outstanding accuracy (>90%). However, most of them are limited to the within-dataset scenario, where the same dataset is used for training and testing. Most models do not generalise well enough in the cross-dataset scenario, where models are tested on unseen datasets from another source. Furthermore, state-of-the-art deepfake detection models rely on neural network-based classification models that are known to be vulnerable to adversarial attacks. Motivated by the need for a robust deepfake detection model, this study adapts metamorphic testing (MT) principles to help identify potential factors that could influence the robustness of the examined model, while overcoming the test oracle problem in this domain. Metamorphic testing is specifically chosen as the testing technique because it suits the testing of learning-based systems with probabilistic outcomes from largely black-box components and potentially large input domains. We performed our evaluations on the MesoInception-4 and TwoStreamNet models, which are state-of-the-art deepfake detection models. This study identified makeup application as an adversarial attack that could fool deepfake detectors. Our experimental results demonstrate that the performance of both the MesoInception-4 and TwoStreamNet models degrades by up to 30% when the input data is perturbed with makeup.

Fairness Evaluation in Deepfake Detection Models using Metamorphic Testing

Mar 14, 2022

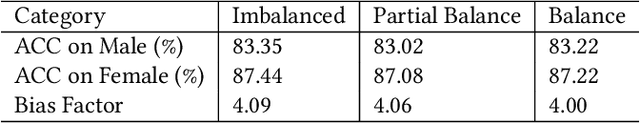

Abstract:The fairness of deepfake detectors in the presence of anomalies is not well investigated, especially if those anomalies are more prominent in either male or female subjects. The primary motivation for this work is to evaluate how a deepfake detection model behaves under such anomalies. However, due to the black-box nature of deep learning (DL) and artificial intelligence (AI) systems, it is hard to predict the performance of a model when the input data is modified. Crucially, if this defect is not addressed properly, it will adversely affect the fairness of the model and unintentionally result in discrimination against certain sub-populations. Therefore, the objective of this work is to adopt metamorphic testing to examine the reliability of the selected deepfake detection model and how transformations of the input influence the output. We have chosen MesoInception-4, a state-of-the-art deepfake detection model, as the target model and makeup as the anomaly. Makeup is applied by using the Dlib library to obtain the 68 facial landmarks and then filling in the RGB values. Metamorphic relations are derived based on the notion that realistic perturbations of the input images, such as makeup involving eyeliner, eyeshadow, blush, and lipstick (which are common cosmetics) applied to male and female images, should not alter the output of the model by a large margin. Furthermore, we narrow down the scope to focus on revealing potential gender biases in DL and AI systems. Specifically, we examine whether the MesoInception-4 model produces unfair decisions, which should be considered a consequence of robustness issues. The findings from our work have the potential to pave the way for new research directions in quality assurance and fairness in DL and AI systems.
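The metamorphic relation described in this abstract (makeup should not shift the model output by a large margin, for male and female images alike) can be sketched as a per-group check. This is an illustrative sketch only: the function names, the 0.1 tolerance, and the example scores are hypothetical, and the abstract does not state how the authors quantify "a large margin" or the gap between groups.

```python
def violation_rate(scores_before, scores_after, tolerance=0.1):
    """Share of inputs whose model score moves by more than `tolerance`
    after the perturbation (a violated metamorphic relation)."""
    if len(scores_before) != len(scores_after):
        raise ValueError("score lists must have the same length")
    if not scores_before:
        return 0.0
    violations = sum(
        1 for before, after in zip(scores_before, scores_after)
        if abs(after - before) > tolerance
    )
    return violations / len(scores_before)


def group_violation_gap(groups, tolerance=0.1):
    """Compute the violation rate per group and the spread between the
    most and least affected groups.

    `groups` maps a group name to a (scores_before, scores_after) pair.
    """
    rates = {
        name: violation_rate(before, after, tolerance)
        for name, (before, after) in groups.items()
    }
    gap = max(rates.values()) - min(rates.values())
    return rates, gap


# Usage with made-up scores (not results from the paper).
example_groups = {
    "male": ([0.9, 0.8, 0.7, 0.6], [0.9, 0.5, 0.7, 0.6]),
    "female": ([0.9, 0.8, 0.7, 0.6], [0.4, 0.3, 0.7, 0.6]),
}
rates, gap = group_violation_gap(example_groups)
```

A non-zero gap under the same perturbation would suggest that the robustness issue falls unevenly across groups, which is the sense in which the abstract treats unfairness as a consequence of robustness problems.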
