Muhammad Ifte Khairul Islam

Tackling Oversmoothing in GNN via Graph Sparsification: A Truss-based Approach

Jul 16, 2024

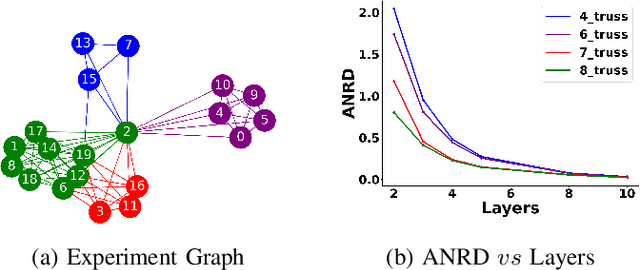

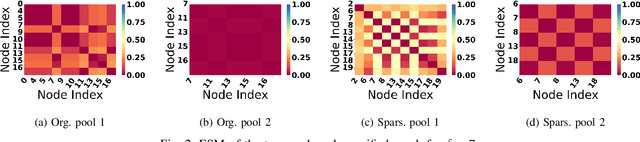

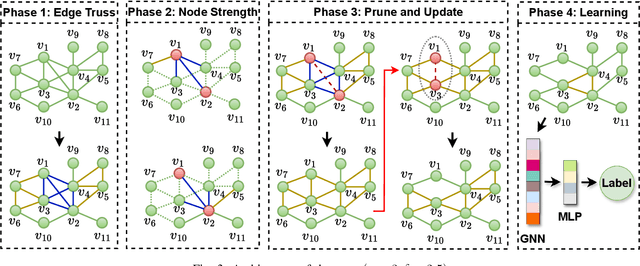

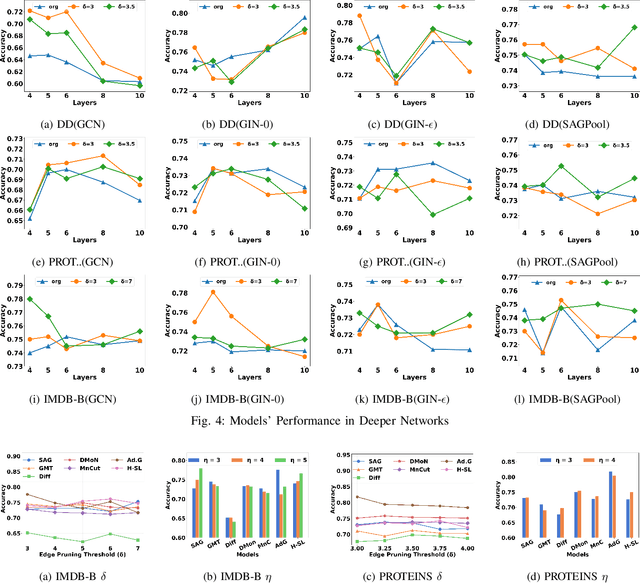

Abstract: Graph Neural Networks (GNNs) achieve great success in node-level and graph-level tasks by encoding meaningful topological structures of networks in various domains, ranging from social to biological networks. However, repeated aggregation operations lead to excessive mixing of node representations, particularly in dense regions when multiple GNN layers are stacked, resulting in nearly indistinguishable embeddings. This phenomenon, known as the oversmoothing problem, hampers downstream graph analytics tasks. To overcome this issue, we propose a novel and flexible truss-based graph sparsification model that prunes edges from dense regions of the graph. Pruning redundant edges in dense regions helps to prevent the aggregation of excessive neighborhood information during hierarchical message passing and pooling in GNN models. We then apply our sparsification model to state-of-the-art baseline GNNs and pooling models, such as GIN, SAGPool, GMT, DiffPool, MinCutPool, HGP-SL, DMonPool, and AdamGNN. Extensive experiments on different real-world datasets show that our model significantly improves the performance of the baseline GNN models in the graph classification task.
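To make the idea of truss-based pruning concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the abstract does not state the pruning rule, so the rule used here (repeatedly drop one edge of maximum trussness until no edge exceeds a threshold) is an assumption, and the names `edge_trussness`, `sparsify`, and `max_truss` are hypothetical. The trussness of an edge is the largest k such that the edge belongs to a subgraph in which every edge lies in at least k - 2 triangles.

```python
from typing import Dict, Iterable, List, Set, Tuple

Edge = Tuple[int, int]


def _key(u: int, v: int) -> Edge:
    """Canonical (small, large) form of an undirected edge."""
    return (u, v) if u < v else (v, u)


def edge_trussness(edges: Iterable[Edge]) -> Dict[Edge, int]:
    """Standard peeling-style truss decomposition on a simple undirected graph."""
    adj: Dict[int, Set[int]] = {}
    for u, v in edges:
        if u == v:
            continue  # ignore self-loops
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)

    # Support of an edge = number of triangles that contain it.
    support = {
        _key(u, v): len(adj[u] & adj[v])
        for u in adj for v in adj[u] if u < v
    }

    trussness: Dict[Edge, int] = {}
    k = 2
    while support:
        queue = [e for e, s in support.items() if s <= k - 2]
        if not queue:
            k += 1
            continue
        while queue:
            e = queue.pop()
            if e not in support:
                continue  # already peeled
            u, v = e
            # Removing (u, v) destroys one triangle for each common neighbour.
            for w in adj[u] & adj[v]:
                for f in (_key(u, w), _key(v, w)):
                    support[f] -= 1
                    if support[f] <= k - 2:
                        queue.append(f)
            adj[u].discard(v)
            adj[v].discard(u)
            del support[e]
            trussness[e] = k
    return trussness


def sparsify(edges: Iterable[Edge], max_truss: int) -> List[Edge]:
    """Assumed rule: drop one max-trussness edge at a time, then recompute."""
    kept = {_key(u, v) for u, v in edges if u != v}
    while kept:
        truss = edge_trussness(kept)
        edge, k = max(truss.items(), key=lambda item: (item[1], item[0]))
        if k <= max_truss:
            break
        kept.discard(edge)
    return sorted(kept)
```

For example, on a 4-clique with a two-edge tail, `sparsify(edges, max_truss=3)` removes a single clique edge and leaves the sparse tail untouched. Recomputing trussness after every removal is simple but expensive, so a practical version would update supports incrementally.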

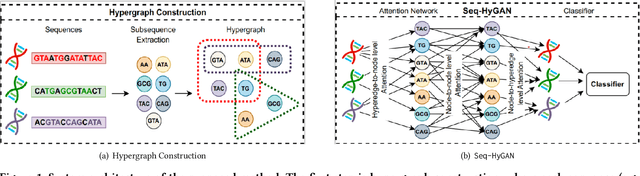

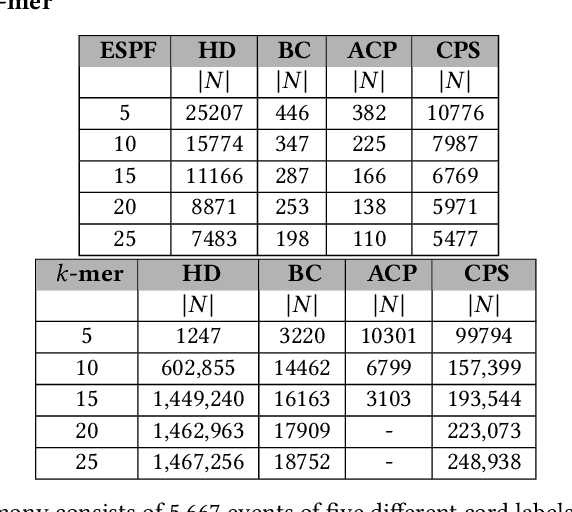

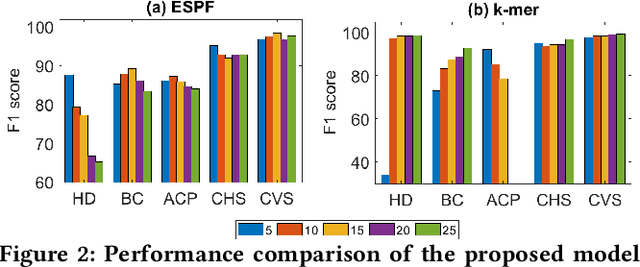

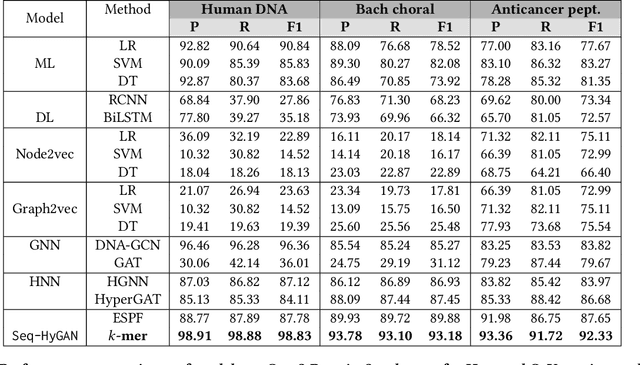

Seq-HyGAN: Sequence Classification via Hypergraph Attention Network

Mar 19, 2023

Abstract: Sequence classification has a wide range of real-world applications in different domains, such as genome classification in health and anomaly detection in business. However, the lack of explicit features in sequence data makes this task difficult for machine learning models. While Neural Network (NN) models address this by learning features automatically, they are limited to capturing adjacent structural connections and ignore global, higher-order information between the sequences. To address these challenges in sequence classification problems, we propose a novel Hypergraph Attention Network model, namely Seq-HyGAN. To capture the complex structural similarity between sequence data, we first create a hypergraph where the sequences are depicted as hyperedges and subsequences extracted from sequences are depicted as nodes. Additionally, we introduce an attention-based Hypergraph Neural Network model that utilizes a two-level attention mechanism. This model generates a sequence representation as a hyperedge while simultaneously learning the crucial subsequences for each sequence. We conduct extensive experiments on four data sets to assess and compare our model with several state-of-the-art methods. Experimental results demonstrate that our proposed Seq-HyGAN model can effectively classify sequence data and significantly outperform the baselines. We also conduct case studies to investigate the contribution of each module in Seq-HyGAN.
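The hypergraph construction described above (sequences as hyperedges, subsequences as nodes) can be sketched as follows. This is an illustrative sketch only: the abstract does not say how subsequences are extracted, so fixed-length k-mers are assumed here, and the names `build_hypergraph` and `incidence_matrix` are hypothetical. The attention layers are not shown.

```python
from typing import Dict, List, Set, Tuple


def build_hypergraph(
    sequences: List[str], k: int = 3
) -> Tuple[Dict[str, int], List[Set[int]]]:
    """Map each distinct k-mer to a node id; each sequence becomes one hyperedge.

    Assumption: subsequences are overlapping k-mers of fixed length k.
    """
    node_index: Dict[str, int] = {}
    hyperedges: List[Set[int]] = []
    for seq in sequences:
        members: Set[int] = set()
        for i in range(len(seq) - k + 1):
            kmer = seq[i:i + k]
            # Assign the next free id the first time a k-mer is seen.
            idx = node_index.setdefault(kmer, len(node_index))
            members.add(idx)
        hyperedges.append(members)
    return node_index, hyperedges


def incidence_matrix(n_nodes: int, hyperedges: List[Set[int]]) -> List[List[int]]:
    """Rows are nodes (subsequences), columns are hyperedges (sequences)."""
    return [
        [1 if node in edge else 0 for edge in hyperedges]
        for node in range(n_nodes)
    ]
```

Two sequences that share many k-mers end up as hyperedges with a large node overlap, which is the structural similarity a hypergraph neural network can then exploit.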

MPool: Motif-Based Graph Pooling

Mar 07, 2023

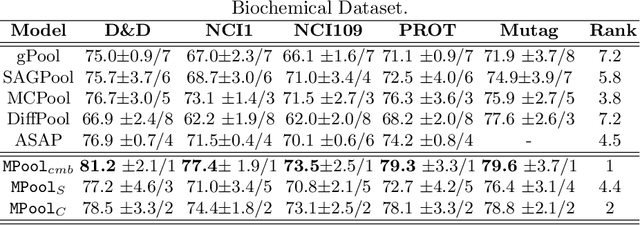

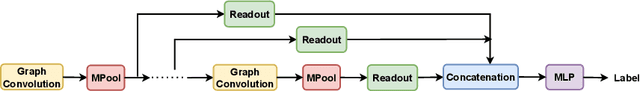

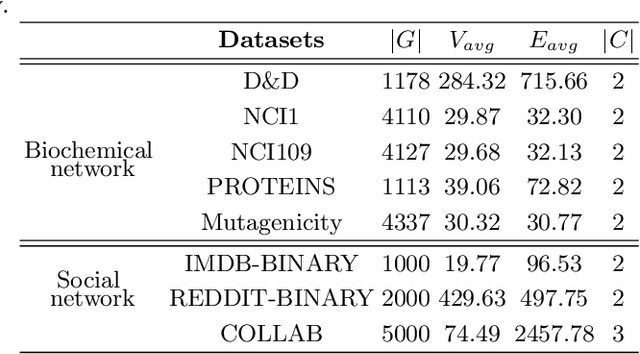

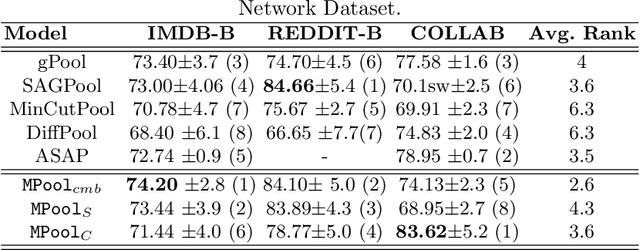

Abstract: Graph Neural Networks (GNNs) have recently become a powerful technique for many graph-related tasks, including graph classification. Current GNN models apply different graph pooling methods that reduce the number of nodes and edges to learn the higher-order structure of the graph in a hierarchical way. However, all these methods primarily rely on the one-hop neighborhood and do not consider the higher-order structure of the graph. In this work, we propose a multi-channel Motif-based Graph Pooling method named MPool, which captures the higher-order graph structure with motifs and the local and global graph structure with a combination of selection-based and clustering-based pooling operations. As the first channel, we develop node selection-based graph pooling by designing a node ranking model that considers the motif adjacency of nodes. As the second channel, we develop cluster-based graph pooling by designing a spectral clustering model using motif adjacency. As the final layer, the result of each channel is aggregated into the final graph representation. We perform extensive experiments on eight benchmark datasets and show that our proposed method achieves better accuracy than the baseline methods for graph classification tasks.
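As a rough illustration of motif adjacency and selection-based pooling, the sketch below builds a triangle-motif adjacency matrix (entry (i, j) counts the triangles containing both i and j) and keeps the top-ranked nodes. It is a simplification under stated assumptions: the triangle is only one possible motif, the motif-degree score stands in for the learned ranking model mentioned in the abstract, and the names `triangle_motif_adjacency` and `select_top_nodes` are hypothetical.

```python
from typing import Iterable, List, Tuple


def triangle_motif_adjacency(n: int, edges: Iterable[Tuple[int, int]]) -> List[List[int]]:
    """Return an n x n matrix whose (i, j) entry is the number of triangles
    that contain the edge (i, j); non-adjacent pairs get 0."""
    adj = [set() for _ in range(n)]
    for u, v in edges:
        if u != v:
            adj[u].add(v)
            adj[v].add(u)

    motif = [[0] * n for _ in range(n)]
    for u in range(n):
        for v in adj[u]:
            if u < v:
                # Each common neighbour closes one triangle over (u, v).
                shared = len(adj[u] & adj[v])
                motif[u][v] = shared
                motif[v][u] = shared
    return motif


def select_top_nodes(motif: List[List[int]], k: int) -> List[int]:
    """Assumed ranking: score each node by its motif degree (row sum) and
    keep the k highest-scoring nodes, breaking ties by node id."""
    scores = [sum(row) for row in motif]
    ranked = sorted(range(len(motif)), key=lambda i: (-scores[i], i))
    return sorted(ranked[:k])
```

On a graph made of two triangles that share an edge, the two endpoints of the shared edge receive the highest motif degree and are the ones retained when `k = 2`.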
