Mossad Helali

Linked Data Science Powered by Knowledge Graphs

Mar 09, 2023

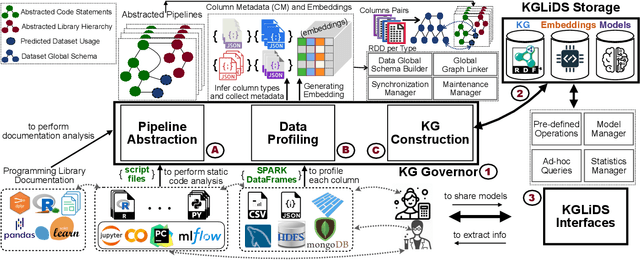

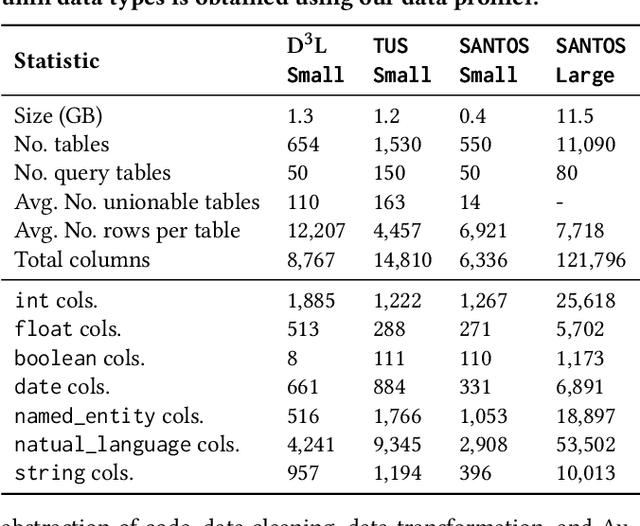

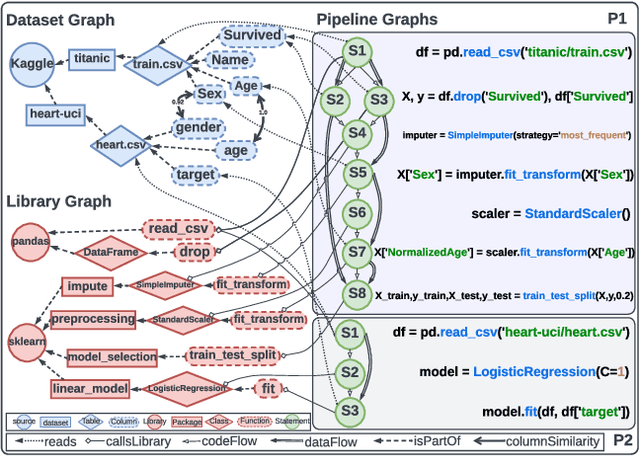

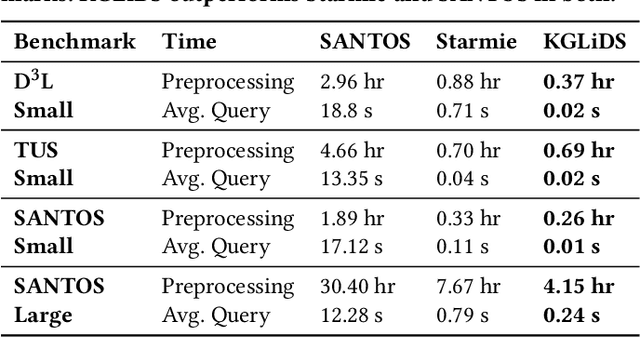

Abstract:In recent years, we have witnessed a growing interest in data science not only from academia but particularly from companies investing in data science platforms to analyze large amounts of data. In this process, a myriad of data science artifacts, such as datasets and pipeline scripts, are created. Yet, there has so far been no systematic attempt to holistically exploit the collected knowledge and experiences that are implicitly contained in the specification of these pipelines, e.g., compatible datasets, cleansing steps, ML algorithms, parameters, etc. Instead, data scientists still spend a considerable amount of their time trying to recover relevant information and experiences from colleagues, trial and error, lengthy exploration, etc. In this paper, we, therefore, propose a scalable system (KGLiDS) that employs machine learning to extract the semantics of data science pipelines and captures them in a knowledge graph, which can then be exploited to assist data scientists in various ways. This abstraction is the key to enabling Linked Data Science since it allows us to share the essence of pipelines between platforms, companies, and institutions without revealing critical internal information and instead focusing on the semantics of what is being processed and how. Our comprehensive evaluation uses thousands of datasets and more than thirteen thousand pipeline scripts extracted from data discovery benchmarks and the Kaggle portal and shows that KGLiDS significantly outperforms state-of-the-art systems on related tasks, such as dataset recommendation and pipeline classification.

Serenity: Library Based Python Code Analysis for Code Completion and Automated Machine Learning

Jan 05, 2023

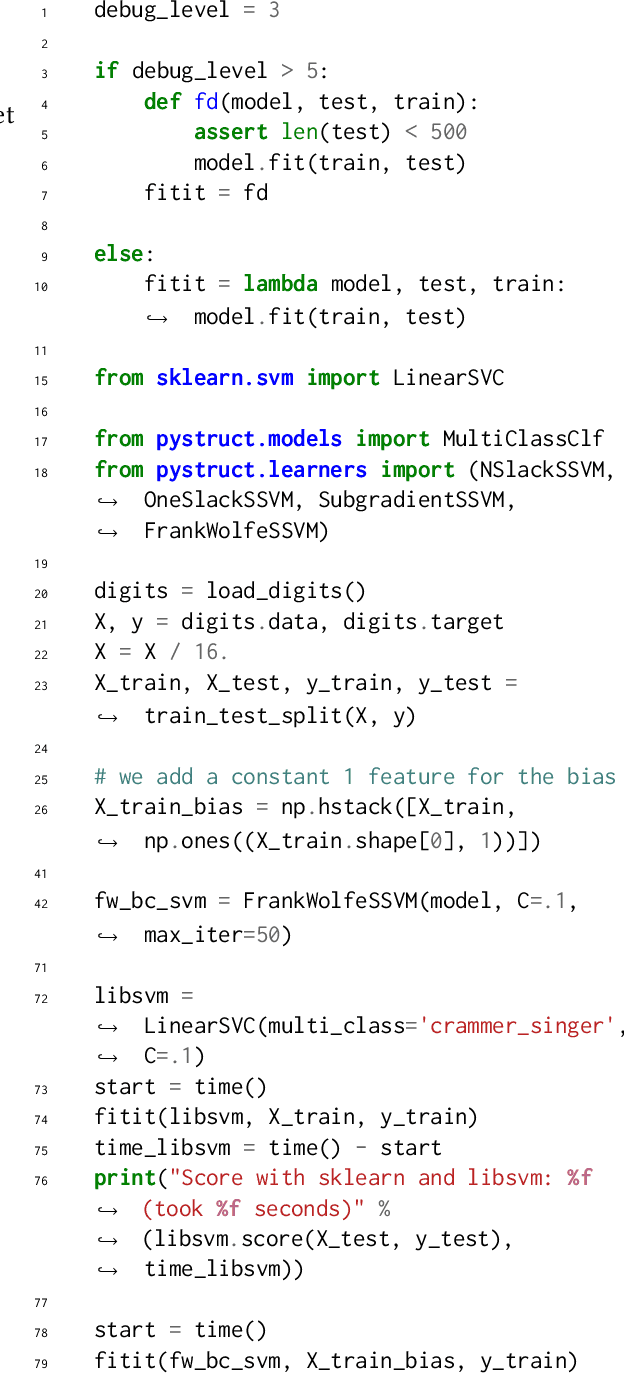

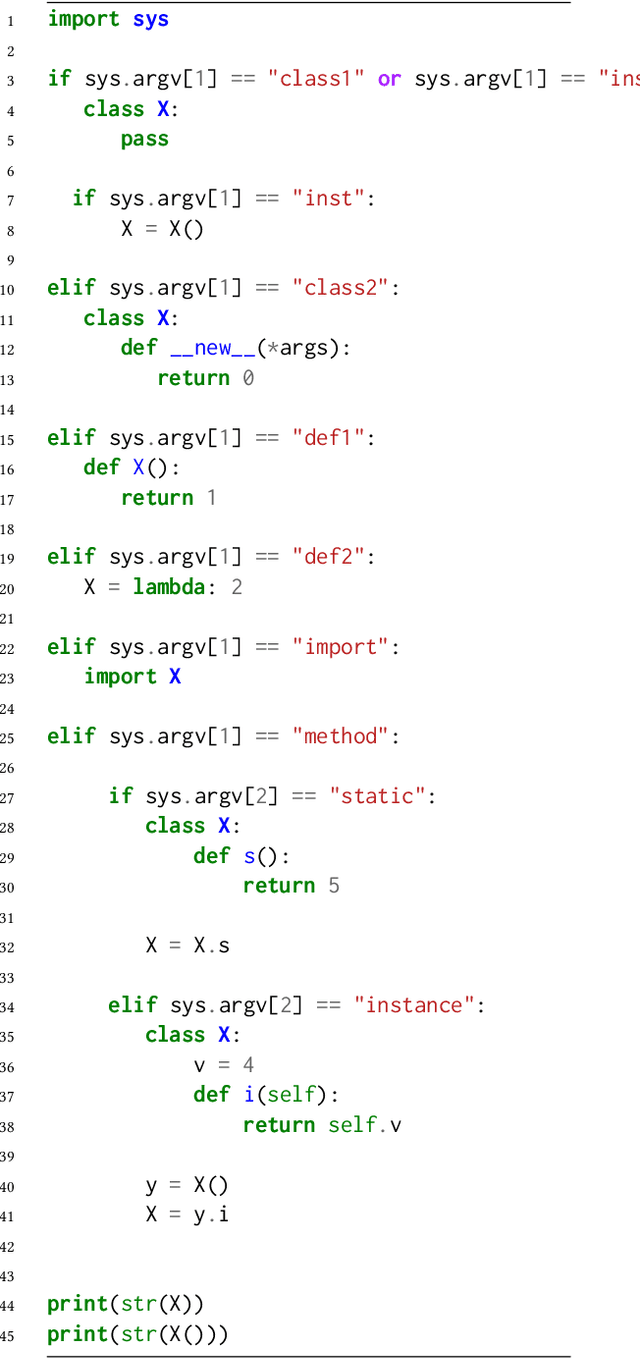

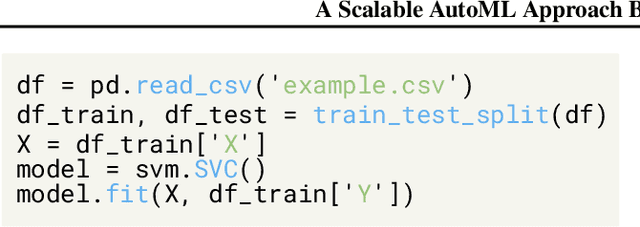

Abstract:Dynamically typed languages such as Python have become very popular. Among other strengths, Python's dynamic nature and its straightforward linking to native code have made it the de-facto language for many research areas such as Artificial Intelligence. This flexibility, however, makes static analysis very hard. While creating a sound, or a soundy, analysis for Python remains an open problem, we present in this work Serenity, a framework for static analysis of Python that turns out to be sufficient for some tasks. The Serenity framework exploits two basic mechanisms: (a) reliance on dynamic dispatch at the core of language translation, and (b) extreme abstraction of libraries, to generate an abstraction of the code. We demonstrate the efficiency and usefulness of Serenity's analysis in two applications: code completion and automated machine learning. In these two applications, we demonstrate that such analysis has a strong signal, and can be leveraged to establish state-of-the-art performance, comparable to neural models and dynamic analysis respectively.

A Scalable AutoML Approach Based on Graph Neural Networks

Oct 29, 2021

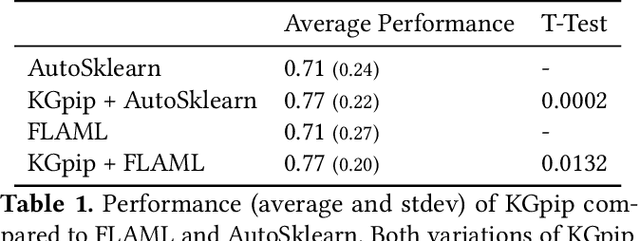

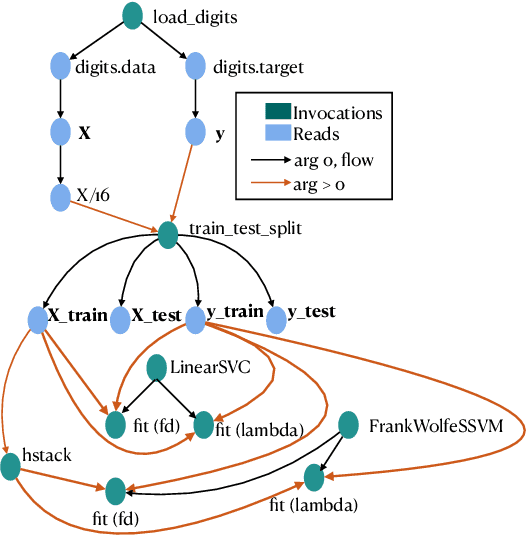

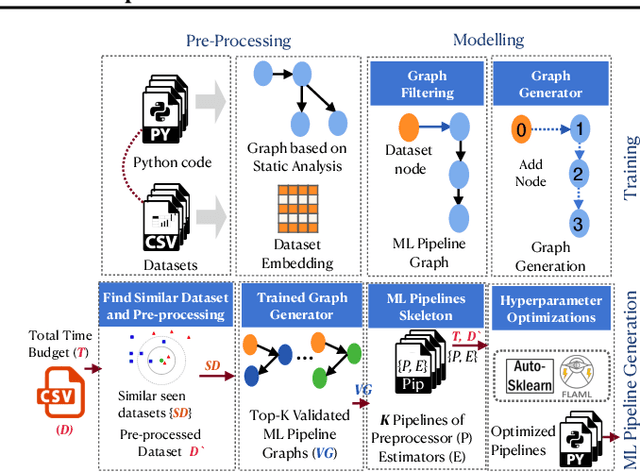

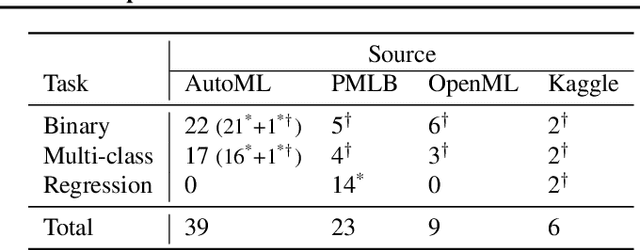

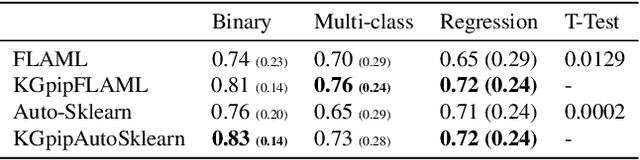

Abstract:AutoML systems build machine learning models automatically by performing a search over valid data transformations and learners, along with hyper-parameter optimization for each learner. We present a system called KGpip for the selection of transformations and learners, which (1) builds a database of datasets and corresponding historically used pipelines using effective static analysis instead of the typical use of actual runtime information, (2) uses dataset embeddings to find similar datasets in the database based on its content instead of metadata-based features, (3) models AutoML pipeline creation as a graph generation problem, to succinctly characterize the diverse pipelines seen for a single dataset. KGpip is designed as a sub-component for AutoML systems. We demonstrate this ability via integrating KGpip with two AutoML systems and show that it does significantly enhance the performance of existing state-of-the-art systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge