Moshe Kravchik

Poisoning Attacks on Cyber Attack Detectors for Industrial Control Systems

Dec 23, 2020

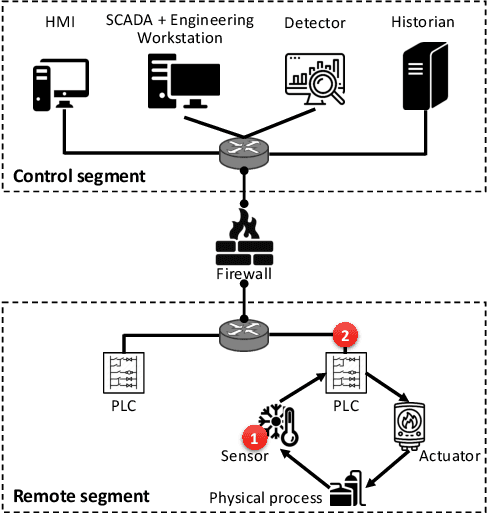

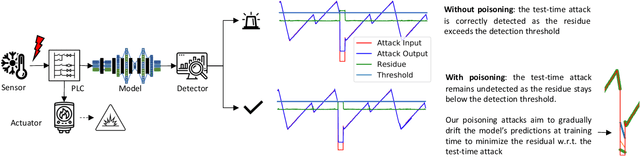

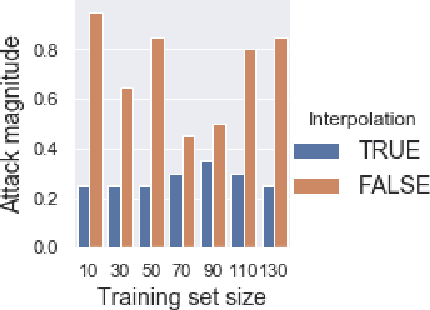

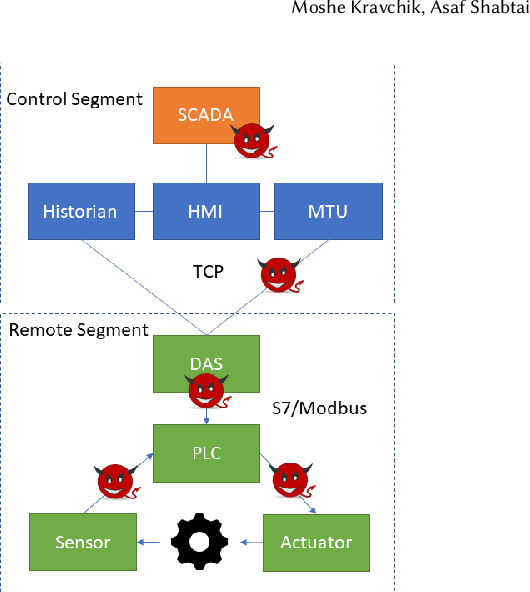

Abstract: Recently, neural network (NN)-based methods, including autoencoders, have been proposed for the detection of cyber attacks targeting industrial control systems (ICSs). Such detectors are often retrained, using data collected during system operation, to cope with the natural evolution (i.e., concept drift) of the monitored signals. However, by exploiting this mechanism, an attacker can fake the signals provided by corrupted sensors at training time and poison the learning process of the detector such that cyber attacks go undetected at test time. With this research, we are the first to demonstrate such poisoning attacks on online-trained NN-based ICS cyber attack detectors. We propose two distinct attack algorithms, namely, interpolation-based and back-gradient-based poisoning, and demonstrate their effectiveness on both synthetic and real-world ICS data. We also discuss and analyze some potential mitigation strategies.

The Translucent Patch: A Physical and Universal Attack on Object Detectors

Dec 23, 2020

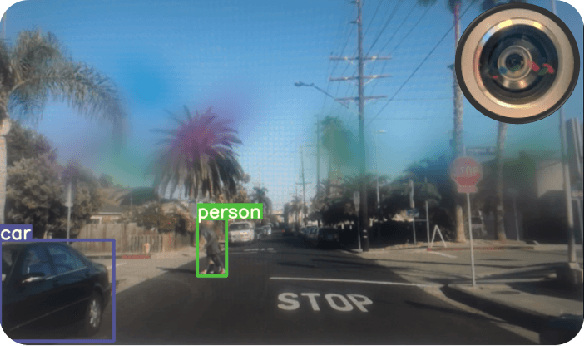

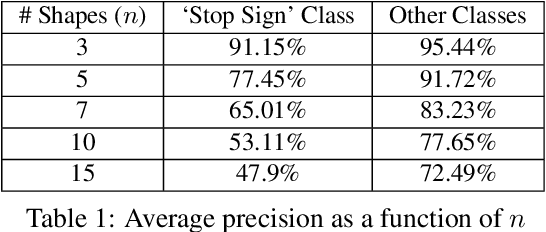

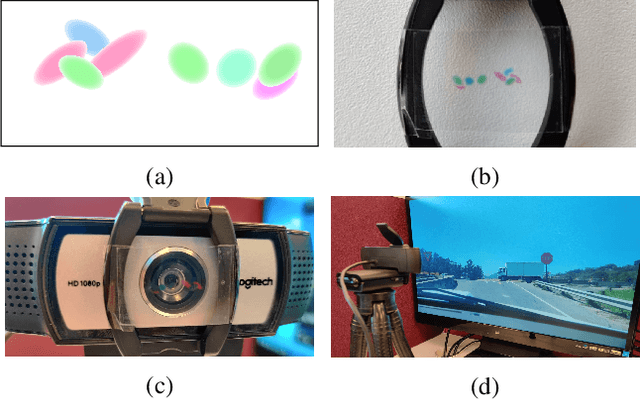

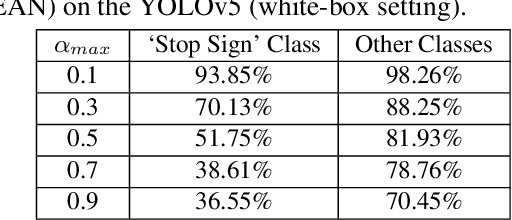

Abstract: Physical adversarial attacks against object detectors have seen increasing success in recent years. However, these attacks require direct access to the object of interest in order to apply a physical patch. Furthermore, to hide multiple objects, an adversarial patch must be applied to each object. In this paper, we propose a contactless translucent physical patch containing a carefully constructed pattern, which is placed on the camera's lens, to fool state-of-the-art object detectors. The primary goal of our patch is to hide all instances of a selected target class. In addition, the optimization method used to construct the patch aims to ensure that the detection of other (untargeted) classes remains unharmed. Therefore, in our experiments, which are conducted on state-of-the-art object detection models used in autonomous driving, we study the effect of the patch on the detection of both the selected target class and the other classes. We show that our patch was able to prevent the detection of 42.27% of all stop sign instances while maintaining high (nearly 80%) detection of the other classes.

Can't Boil This Frog: Robustness of Online-Trained Autoencoder-Based Anomaly Detectors to Adversarial Poisoning Attacks

Feb 07, 2020

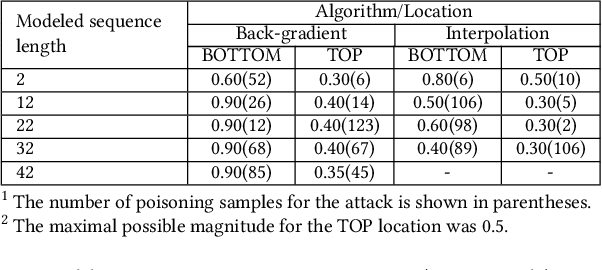

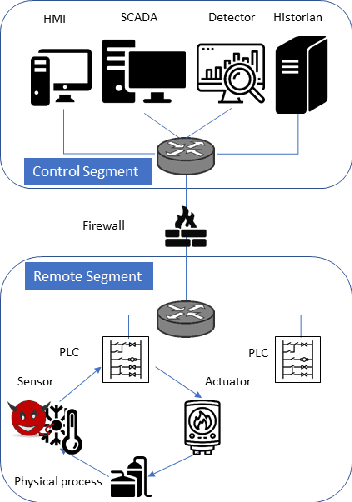

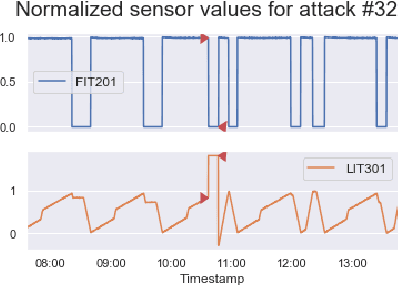

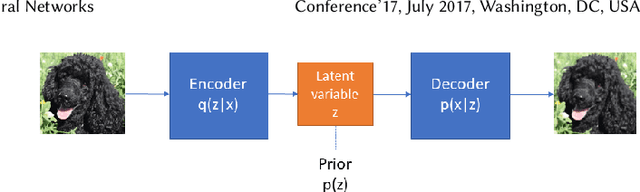

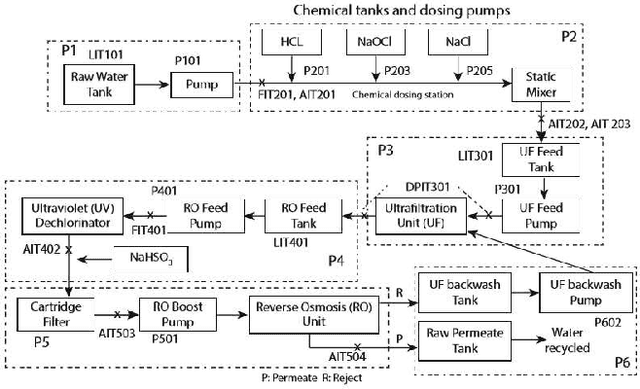

Abstract: In recent years, a variety of effective neural network-based methods for anomaly and cyber attack detection in industrial control systems (ICSs) have been demonstrated in the literature. Given their successful implementation and widespread use, there is a need to study adversarial attacks on such detection methods to better protect the systems that depend upon them. The extensive research performed on adversarial attacks on image and malware classification has little relevance to the physical system state prediction domain, to which most ICS attack detection systems belong. Moreover, such detection systems are typically retrained using new data collected from the monitored system, so the threat of adversarial data poisoning is significant; however, this threat has not yet been addressed by the research community. In this paper, we present the first study focused on poisoning attacks on online-trained autoencoder-based attack detectors. We propose two algorithms for generating poison samples, an interpolation-based algorithm and a back-gradient optimization-based algorithm, which we evaluate on both synthetic and real-world ICS data. We demonstrate that the proposed algorithms can generate poison samples that cause the target attack to go undetected by the autoencoder detector; however, the ability to poison the detector is limited to a small set of attack types and magnitudes. When the poison-generating algorithms are applied to the popular SWaT dataset, we show that the autoencoder detector trained on the physical system state data is resilient to poisoning in the face of all ten of the relevant attacks in the dataset. This finding suggests that neural network-based attack detectors used in the cyber-physical domain are more robust to poisoning than those in other problem domains, such as malware detection and image processing.

Efficient Cyber Attacks Detection in Industrial Control Systems Using Lightweight Neural Networks

Jul 02, 2019

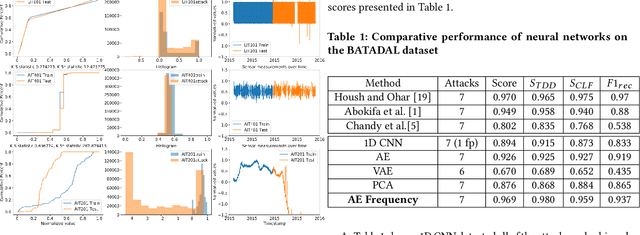

Abstract: Industrial control systems (ICSs) are widely used and vital to industry and society. Their failure can have a severe impact on both the economy and human life. Hence, these systems have become an attractive target for attacks, both physical and cyber. A number of attack detection methods have been proposed; however, they suffer from an insufficient detection rate or a substantial false positive rate, or are system-specific. In this paper, we study an attack detection method based on simple and lightweight neural networks, namely, 1D convolutions and autoencoders. We apply these networks to both the time and frequency domains of the collected data and discuss the pros and cons of each approach. We evaluate the suggested method on three popular public datasets and achieve detection metrics matching or exceeding previously published detection results, while featuring a small footprint, short training and detection times, and generality.
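As a rough illustration of what a frequency-domain representation of a sensor window might look like, the sketch below computes the magnitude spectrum of a single window with a plain discrete Fourier transform. The abstract does not say how the frequency-domain input is constructed, so the function name `dft_magnitudes`, the window length, and the normalization are all assumptions made for this example.

```python
import cmath
import math


def dft_magnitudes(window):
    """Return normalized DFT magnitudes for bins 0..n/2 of a real-valued window.

    Hypothetical helper: a direct O(n^2) DFT, adequate for short windows.
    """
    n = len(window)
    magnitudes = []
    for k in range(n // 2 + 1):
        # Correlate the window with a complex exponential at frequency bin k.
        s = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(window))
        magnitudes.append(abs(s) / n)
    return magnitudes


# Toy sensor window: a sine wave completing 2 cycles over 16 samples.
window = [math.sin(2 * math.pi * 2 * t / 16) for t in range(16)]
mags = dft_magnitudes(window)

# The dominant bin should correspond to the 2-cycle component.
peak_bin = max(range(len(mags)), key=mags.__getitem__)
```

A detector working in the frequency domain would take vectors like `mags` as input in place of the raw samples; whether that helps depends on how periodic the monitored signals are.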

Anomaly detection; Industrial control systems; convolutional neural networks

Jun 21, 2018

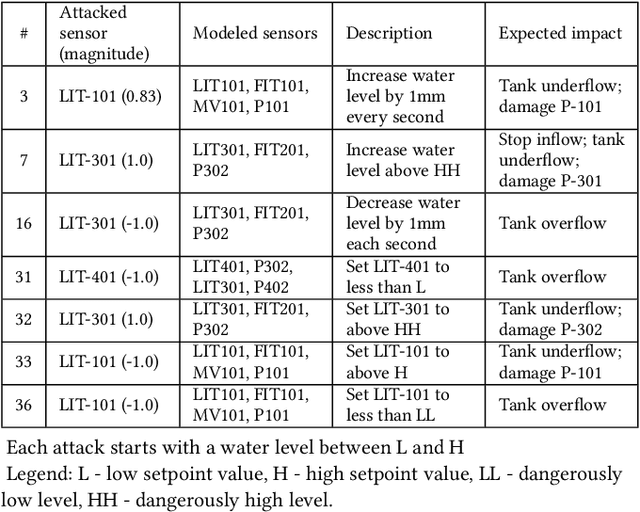

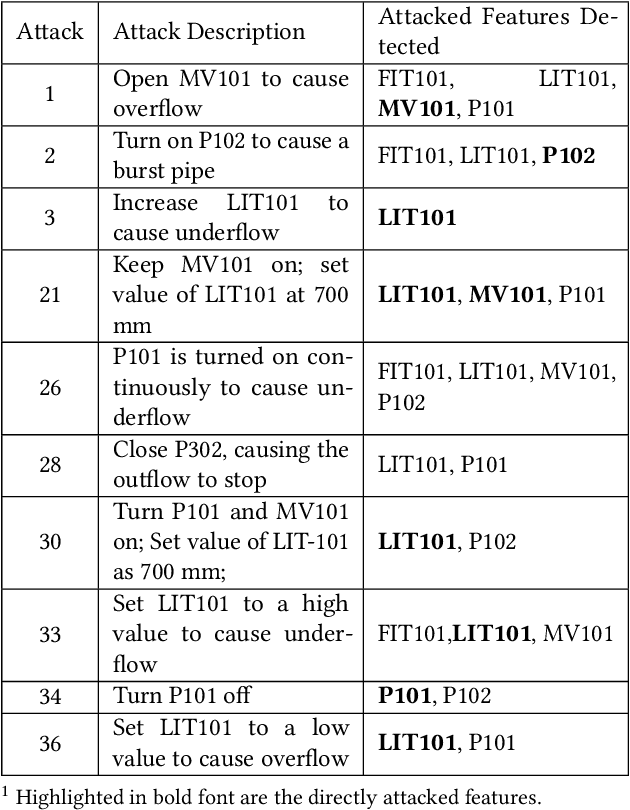

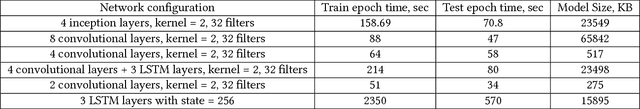

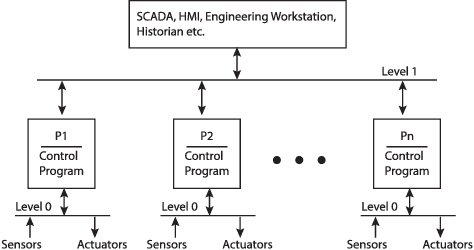

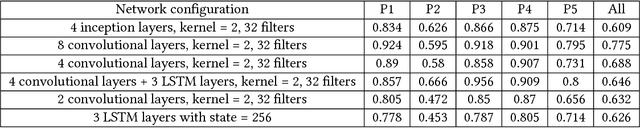

Abstract: This paper presents a study on detecting cyberattacks on industrial control systems (ICS) using unsupervised deep neural networks, specifically, convolutional neural networks. The study was performed on a Secure Water Treatment testbed (SWaT) dataset, which represents a scaled-down version of a real-world industrial water treatment plant. We suggest a method for anomaly detection based on measuring the statistical deviation of the predicted value from the observed value. We applied the proposed method using a variety of deep neural network architectures, including different variants of convolutional and recurrent networks. The test dataset from SWaT included 36 different cyberattacks. The proposed method successfully detects the vast majority of the attacks with a low false positive rate, thus improving on previous works based on this dataset. The results of the study show that 1D convolutional networks can be successfully applied to anomaly detection in industrial control systems and outperform more complex recurrent networks while being much smaller and faster to train.
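The idea of flagging anomalies by the statistical deviation between predicted and observed values can be sketched as follows. This is a minimal illustration, not the paper's implementation: the z-score formulation, the threshold of 3.0, the helper names, and the toy data are assumptions, and the predictions are hard-coded rather than produced by a neural network.

```python
from statistics import mean, stdev


def fit_residual_stats(observed, predicted):
    """Mean and sample standard deviation of |observed - predicted| on attack-free data."""
    residuals = [abs(o - p) for o, p in zip(observed, predicted)]
    return mean(residuals), stdev(residuals)


def flag_anomalies(observed, predicted, mu, sigma, threshold=3.0):
    """Flag time steps whose residual z-score exceeds the (assumed) threshold."""
    flags = []
    for o, p in zip(observed, predicted):
        z = (abs(o - p) - mu) / sigma
        flags.append(z > threshold)
    return flags


# Toy attack-free data; the predictions stand in for a trained model's output.
normal_obs = [1.0, 1.1, 0.9, 1.0, 1.2, 0.8]
normal_pred = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
mu, sigma = fit_residual_stats(normal_obs, normal_pred)

# Toy test data: the last reading deviates sharply from its prediction.
test_obs = [1.0, 1.1, 3.0]
test_pred = [1.0, 1.0, 1.0]
flags = flag_anomalies(test_obs, test_pred, mu, sigma)
```

In this toy run only the last test sample is flagged. In practice the threshold would be tuned on validation data to trade detection rate against false positives.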
