Monjoy Saha

TMA-Grid: An open-source, zero-footprint web application for FAIR Tissue MicroArray De-arraying

Jul 30, 2024

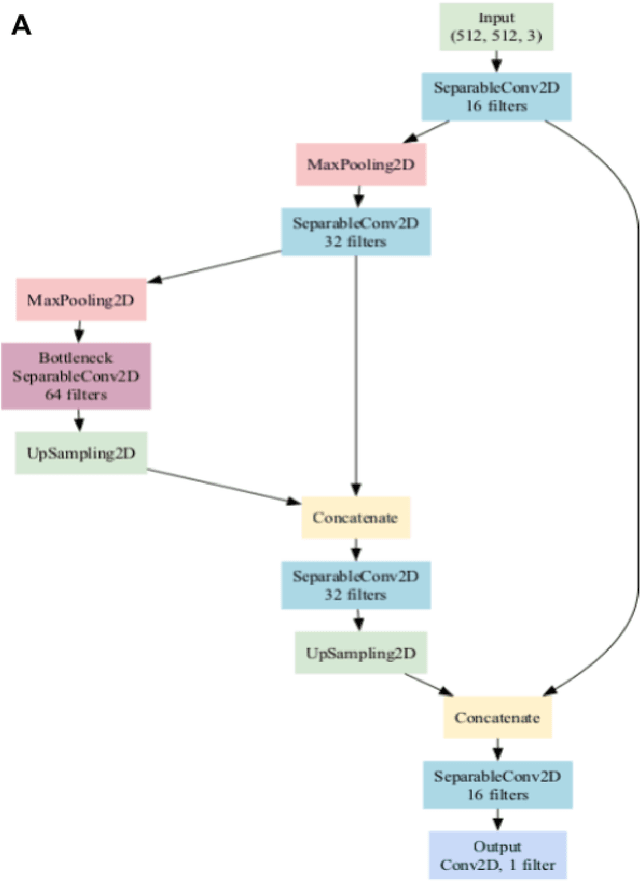

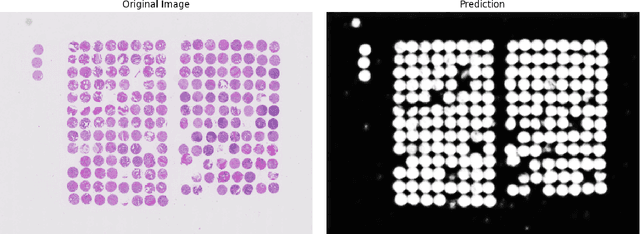

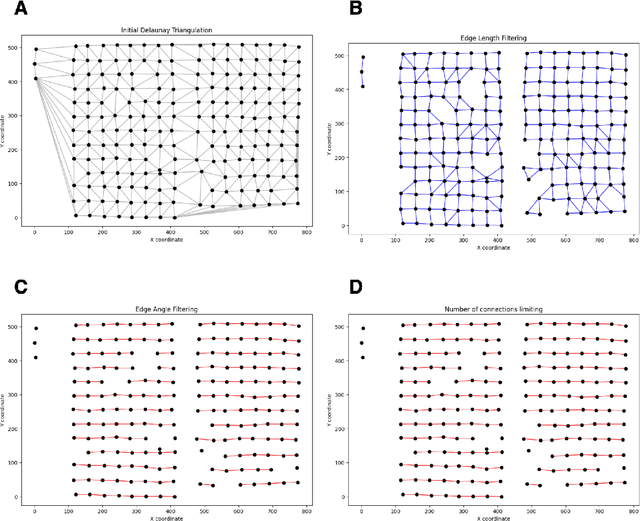

Abstract: Background: Tissue Microarrays (TMAs) significantly increase analytical efficiency in histopathology and large-scale epidemiologic studies by allowing multiple tissue cores to be scanned on a single slide. The individual cores can be digitally extracted and then linked to metadata for analysis in a process known as de-arraying. However, TMAs often contain core misalignments and artifacts due to assembly errors, which can adversely affect the reliability of the extracted cores during the de-arraying process. Moreover, conventional approaches for TMA de-arraying rely on desktop solutions. Therefore, a robust yet flexible de-arraying method is crucial to account for these inaccuracies and ensure effective downstream analyses. Results: We developed TMA-Grid, an in-browser, zero-footprint, interactive web application for TMA de-arraying. This web application integrates a convolutional neural network for precise tissue segmentation and a grid estimation algorithm to match each identified core to its expected location. The application emphasizes interactivity, allowing users to easily adjust segmentation and gridding results. Operating entirely in the web browser, TMA-Grid eliminates the need for downloads or installations and ensures data privacy. Adhering to FAIR principles (Findable, Accessible, Interoperable, and Reusable), the application and its components are designed for seamless integration into TMA research workflows. Conclusions: TMA-Grid provides a robust, user-friendly solution for TMA de-arraying on the web. As an open, freely accessible platform, it lays the foundation for collaborative analyses of TMAs and similar histopathology imaging data. Availability: Web application: https://episphere.github.io/tma-grid ; Code: https://github.com/episphere/tma-grid ; Tutorial: https://youtu.be/miajqyw4BVk
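The step of matching each identified core to its expected location can be illustrated with a minimal sketch. This is not TMA-Grid's grid estimation algorithm: it assumes an ideal, axis-aligned grid with known origin and spacing, and the function names (`expected_grid`, `match_cores`), the `max_dist` threshold, and the coordinates are all hypothetical. Detected centroids are paired greedily with grid positions, closest pairs first.

```python
import math

def expected_grid(origin, spacing, rows, cols):
    """Return {(row, col): (x, y)} for an ideal, axis-aligned grid (assumption)."""
    ox, oy = origin
    return {(r, c): (ox + c * spacing, oy + r * spacing)
            for r in range(rows) for c in range(cols)}

def match_cores(centroids, grid, max_dist):
    """Greedily pair detected centroids with grid positions, closest pairs first.

    Returns (assigned, unmatched): assigned maps (row, col) -> centroid index,
    unmatched lists centroid indices farther than max_dist from any free position.
    """
    pairs = sorted(
        (math.dist(point, pos), i, rc)
        for i, point in enumerate(centroids)
        for rc, pos in grid.items()
    )
    assigned, used_cores = {}, set()
    for dist, i, rc in pairs:
        if dist > max_dist:
            break  # every remaining pair is even farther apart
        if i in used_cores or rc in assigned:
            continue  # this core or this grid slot is already taken
        assigned[rc] = i
        used_cores.add(i)
    unmatched = [i for i in range(len(centroids)) if i not in used_cores]
    return assigned, unmatched

# Hypothetical 2x2 array: three slightly misaligned cores and one stray artifact.
grid = expected_grid(origin=(100.0, 100.0), spacing=200.0, rows=2, cols=2)
centroids = [(108.0, 95.0), (310.0, 102.0), (97.0, 305.0), (900.0, 900.0)]
assigned, unmatched = match_cores(centroids, grid, max_dist=50.0)
```

In this toy input, position `(1, 1)` stays empty and the stray centroid is reported as unmatched, which is the kind of case an interactive tool would leave for the user to correct.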

Finding Regions of Interest in Whole Slide Images Using Multiple Instance Learning

Apr 11, 2024

Abstract: Whole Slide Images (WSI), obtained by high-resolution digital scanning of microscope slides at multiple scales, are the cornerstone of modern Digital Pathology. However, they pose a particular challenge to AI-based/AI-mediated analysis because pathology labeling is typically done at the slide level rather than the tile level. Not only are medical diagnoses recorded at the specimen level; the detection of oncogene mutations is also obtained experimentally and recorded, by initiatives like The Cancer Genome Atlas (TCGA), at the slide level. This poses a dual challenge: a) accurately predicting the overall cancer phenotype and b) finding out which cellular morphologies are associated with it at the tile level. To address these challenges, a weakly supervised Multiple Instance Learning (MIL) approach was explored for two prevalent cancer types, Invasive Breast Carcinoma (TCGA-BRCA) and Lung Squamous Cell Carcinoma (TCGA-LUSC). This approach was explored for tumor detection at low magnification levels and for TP53 mutations at various levels. Our results show that a novel additive implementation of MIL matched the performance of the reference implementation (AUC 0.96) and was only slightly outperformed by Attention MIL (AUC 0.97). More interestingly from the perspective of the molecular pathologist, these different AI architectures show distinct sensitivities to morphological features (through the detection of Regions of Interest, RoI) at different magnification levels. Tellingly, TP53 mutation was most sensitive to features at the higher magnifications, where cellular morphology is resolved.
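The weakly supervised idea, a slide-level prediction assembled from tile-level evidence, can be shown with a toy attention-style pooling sketch. This is not the paper's implementation: the scalar `tile_scores` and `attention_logits` are hypothetical stand-ins for the outputs of a trained network, and `attention_pool` is an illustrative name. Because the slide score is a sum of per-tile contributions, the largest contribution points at a candidate Region of Interest.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(tile_scores, attention_logits):
    """Combine tile scores into one slide score using softmax attention weights.

    Returns the slide score and each tile's contribution to it.
    """
    weights = softmax(attention_logits)
    contributions = [w * s for w, s in zip(weights, tile_scores)]
    return sum(contributions), contributions

# Hypothetical slide with four tiles; only the slide-level label would be known.
tile_scores = [0.1, 0.2, 3.0, 0.0]
attention_logits = [0.0, 0.0, 2.0, 0.0]
slide_score, contributions = attention_pool(tile_scores, attention_logits)

# The tile with the largest contribution is a candidate Region of Interest.
top_tile = max(range(len(contributions)), key=contributions.__getitem__)
```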

Detecting COVID-19 from Chest Computed Tomography Scans using AI-Driven Android Application

Nov 06, 2021

Abstract: The COVID-19 (coronavirus disease 2019) pandemic had affected more than 186 million people, with over 4 million deaths worldwide, by June 2021. Its magnitude has strained global healthcare systems. Chest Computed Tomography (CT) scans have a potential role in the diagnosis and prognostication of COVID-19. A diagnostic system that is cost-efficient and convenient to operate on resource-constrained devices like mobile phones would enhance the clinical usage of chest CT scans and provide swift, mobile, and accessible diagnostic capabilities. This work proposes a novel Android application that detects COVID-19 infection from chest CT scans using a highly efficient and accurate deep learning algorithm. It further creates an attention heatmap, overlaid on the segmented lung parenchyma region in the CT scans through an algorithm developed as part of this work, which shows the regions of infection in the lungs. We propose a selection approach combined with multi-threading for faster generation of heatmaps on Android devices, which reduces the processing time by about 93%. The neural network trained to detect COVID-19 in this work achieves an F1 score and an accuracy of 99.58% each and a sensitivity of 99.69%, which is better than most of the results reported for COVID-19 diagnosis from CT scans. This work will be beneficial in high-volume practices and will help doctors triage patients quickly and efficiently through early diagnosis of COVID-19.
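For reference, the reported metrics follow the standard confusion-matrix definitions, sketched below. The counts in the example are purely illustrative and are not the paper's data; `classification_metrics` is a hypothetical helper name.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute sensitivity, precision, accuracy, and F1 from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)            # recall: share of true cases detected
    precision = tp / (tp + fp)              # share of positive calls that are correct
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }

# Illustrative counts only (not taken from the paper).
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
```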
