Moni Shahar

Medical Data Pecking: A Context-Aware Approach for Automated Quality Evaluation of Structured Medical Data

Jul 03, 2025

Abstract:Background: The use of Electronic Health Records (EHRs) for epidemiological studies and artificial intelligence (AI) training is increasing rapidly. The reliability of the results depends on the accuracy and completeness of EHR data. However, EHR data often contain significant quality issues, including misrepresentations of subpopulations, biases, and systematic errors, as they are primarily collected for clinical and billing purposes. Existing quality assessment methods remain insufficient, lacking systematic procedures to assess data fitness for research. Methods: We present the Medical Data Pecking approach, which adapts unit testing and coverage concepts from software engineering to identify data quality concerns. We demonstrate our approach using the Medical Data Pecking Tool (MDPT), which consists of two main components: (1) an automated test generator that uses large language models and grounding techniques to create a test suite from data and study descriptions, and (2) a data testing framework that executes these tests, reporting potential errors and coverage. Results: We evaluated MDPT on three datasets: All of Us (AoU), MIMIC-III, and SyntheticMass, generating 55-73 tests per cohort across four conditions. These tests correctly identified 20-43 non-aligned or non-conforming data issues. We present a detailed analysis of the LLM-generated test suites in terms of reference grounding and value accuracy. Conclusion: Our approach incorporates external medical knowledge to enable context-sensitive data quality testing as part of the data analysis workflow to improve the validity of its outcomes. Our approach tackles these challenges from a quality assurance perspective, laying the foundation for further development such as additional data modalities and improved grounding methods.
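The idea of applying unit tests and coverage to structured data, as described in the abstract above, can be illustrated with a minimal sketch. This is not the MDPT API: `DataTest`, `run_suite`, the column names, and the value ranges are all hypothetical, the tests are hand-written rather than LLM-generated, and coverage is assumed here to mean the fraction of columns read by at least one test.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass
class DataTest:
    """One data unit test (hypothetical structure, not MDPT's)."""
    name: str
    columns: tuple[str, ...]        # columns the test reads
    check: Callable[[Row], bool]    # returns True when the row passes


def run_suite(rows: list[Row], tests: list[DataTest]):
    """Run every test on every row; return failing row indices and column coverage."""
    failures = {t.name: [] for t in tests}
    for i, row in enumerate(rows):
        for t in tests:
            if not t.check(row):
                failures[t.name].append(i)
    all_columns = {c for row in rows for c in row}
    tested = {c for t in tests for c in t.columns}
    # Assumed coverage definition: share of columns touched by some test.
    coverage = len(tested & all_columns) / len(all_columns) if all_columns else 0.0
    return failures, coverage


# Toy cohort; the values and thresholds are illustrative, not clinical references.
rows = [
    {"age": 54, "sex": "F", "hba1c": 7.2},
    {"age": -3, "sex": "M", "hba1c": 6.1},
    {"age": 61, "sex": "M", "hba1c": 45.0},
]
tests = [
    DataTest("age_in_range", ("age",), lambda r: 0 <= r["age"] <= 120),
    DataTest("hba1c_plausible", ("hba1c",), lambda r: 3.0 <= r["hba1c"] <= 20.0),
]
failures, coverage = run_suite(rows, tests)
```

In this toy run the second row fails the age test, the third row fails the HbA1c test, and the `sex` column is untested, so coverage is two of three columns.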

Neural Fingerprints for Adversarial Attack Detection

Nov 07, 2024

Abstract:Deep learning models for image classification have become standard tools in recent years. A well-known vulnerability of these models is their susceptibility to adversarial examples. These are generated by slightly altering an image of a certain class in a way that is imperceptible to humans but causes the model to classify it wrongly as another class. Many algorithms have been proposed to address this problem, falling generally into one of two categories: (i) building robust classifiers and (ii) directly detecting attacked images. Despite the good performance of these detectors, we argue that in a white-box setting, where the attacker knows the configuration and weights of the network and the detector, the attacker can overcome the detector by running many examples on a local copy, and sending only those that were not detected to the actual model. This problem is common in security applications where even a very good model is not sufficient to ensure safety. In this paper we propose to overcome this inherent limitation of any static defence with randomization. To do so, one must generate a very large family of detectors with consistent performance, and select one or more of them randomly for each input. For the individual detectors, we suggest the method of neural fingerprints. In the training phase, for each class we repeatedly sample a tiny random subset of neurons from certain layers of the network, and if their average is sufficiently different between clean and attacked images of the focal class they are considered a fingerprint and added to the detector bank. During test time, we sample fingerprints from the bank associated with the label predicted by the model, and detect attacks using a likelihood ratio test. We evaluate our detectors on ImageNet with different attack methods and model architectures, and show near-perfect detection with low rates of false detection.
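The training and test phases described in the abstract above can be sketched for a single class as follows. This is an illustration under stated assumptions, not the authors' implementation: the activations are synthetic, the selection rule (a standardized mean difference of at least `min_effect`) and the Gaussian model behind the likelihood ratio are assumptions, and all function names are hypothetical.

```python
import math
import random
from statistics import fmean, pstdev


def subset_mean(activation, idx):
    """Average activation over a small subset of neuron indices."""
    return fmean(activation[i] for i in idx)


def build_bank(clean, attacked, n_trials, subset_size, min_effect, rng):
    """Sample random neuron subsets; keep those separating clean from attacked."""
    n_neurons = len(clean[0])
    bank = []
    for _ in range(n_trials):
        idx = tuple(rng.sample(range(n_neurons), subset_size))
        c = [subset_mean(a, idx) for a in clean]
        t = [subset_mean(a, idx) for a in attacked]
        mu_c, sd_c = fmean(c), pstdev(c)
        mu_a, sd_a = fmean(t), pstdev(t)
        if min(sd_c, sd_a) <= 0:
            continue
        pooled = math.sqrt((sd_c ** 2 + sd_a ** 2) / 2)
        # Assumed criterion: standardized mean difference above a threshold.
        if abs(mu_a - mu_c) / pooled >= min_effect:
            bank.append((idx, mu_c, sd_c, mu_a, sd_a))
    return bank


def log_normal_pdf(x, mu, sd):
    return -0.5 * ((x - mu) / sd) ** 2 - math.log(sd) - 0.5 * math.log(2 * math.pi)


def is_attacked(activation, bank, k, rng):
    """Draw k fingerprints at random and sum their Gaussian log-likelihood ratios."""
    chosen = rng.sample(bank, min(k, len(bank)))
    llr = 0.0
    for idx, mu_c, sd_c, mu_a, sd_a in chosen:
        z = subset_mean(activation, idx)
        llr += log_normal_pdf(z, mu_a, sd_a) - log_normal_pdf(z, mu_c, sd_c)
    return llr > 0.0


# Synthetic stand-in for layer activations of one class: attacks shift
# the first 20 of 40 neurons (purely illustrative data).
rng = random.Random(0)
N_NEURONS, SHIFTED = 40, 20


def fake_activation(is_attack):
    return [rng.gauss(2.0 if is_attack and i < SHIFTED else 0.0, 1.0)
            for i in range(N_NEURONS)]


clean = [fake_activation(False) for _ in range(200)]
attacked = [fake_activation(True) for _ in range(200)]
bank = build_bank(clean, attacked, n_trials=300, subset_size=4,
                  min_effect=1.5, rng=rng)
```

Because `is_attacked` draws a fresh random set of fingerprints for each input, an attacker holding a local copy of the bank cannot know in advance which detectors will be applied. A full version would keep one bank per class and select the bank by the model's predicted label.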

Privacy-Adversarial User Representations in Recommender Systems

Jul 10, 2018

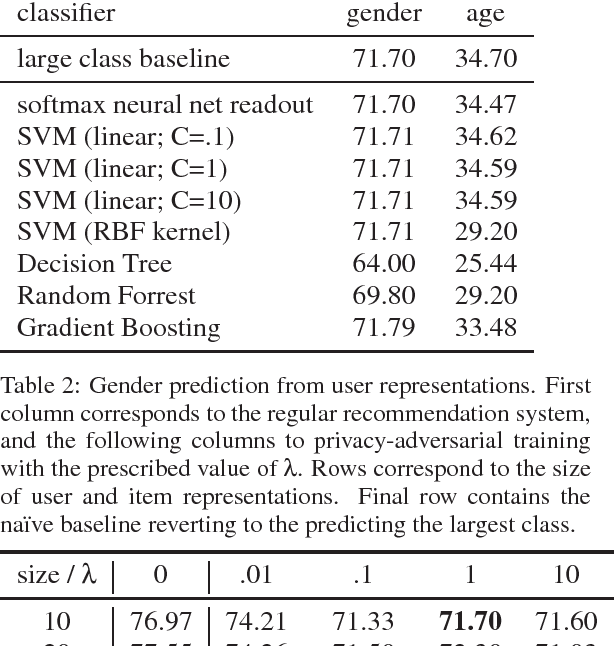

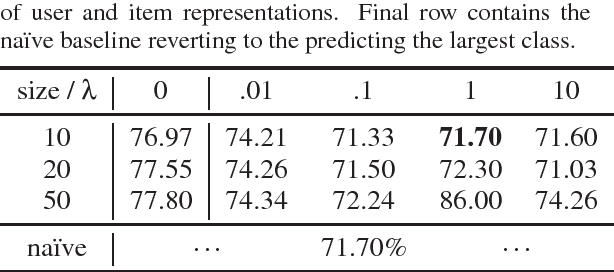

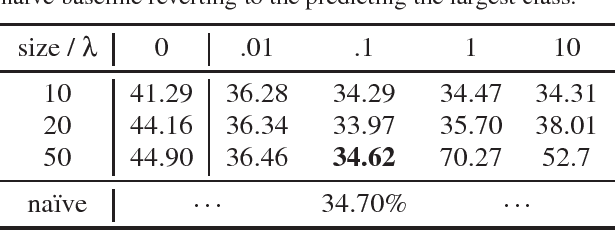

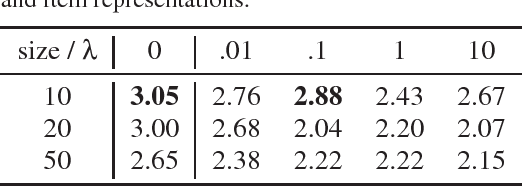

Abstract:Latent factor models for recommender systems represent users and items as low dimensional vectors. Privacy risks have been previously studied mostly in the context of recovery of personal information in the form of usage records from the training data. However, the user representations themselves may be used together with external data to recover private user information such as gender and age. In this paper we show that user vectors calculated by a common recommender system can be exploited in this way. We propose the privacy-adversarial framework to eliminate such leakage, and study the trade-off between recommender performance and leakage both theoretically and empirically using a benchmark dataset. We briefly discuss further applications of this method towards the generation of deeper and more insightful recommendations.

Fusing Multifaceted Transaction Data for User Modeling and Demographic Prediction

Dec 19, 2017

Abstract:Inferring user characteristics such as demographic attributes is of the utmost importance in many user-centric applications. Demographic data is an enabler of personalization, identity security, and other applications. Despite that, this data is sensitive and often hard to obtain. Previous work has shown that purchase history can be used for multi-task prediction of many demographic fields such as gender and marital status. Here we present an embedding based method to integrate multifaceted sequences of transaction data, together with auxiliary relational tables, for better user modeling and demographic prediction.
