Mohan Ren

Granger Causality Detection with Kolmogorov-Arnold Networks

Dec 19, 2024

Abstract: Discovering causal relationships in time series data is central to many scientific areas, ranging from economics to climate science. Granger causality is a powerful tool for causality detection. However, its original formulation is limited by its linear form, and nonlinear machine-learning generalizations have been introduced only recently. This study contributes to the definition of neural Granger causality models by investigating the application of Kolmogorov-Arnold networks (KANs) to Granger causality detection and comparing their capabilities against multilayer perceptrons (MLPs). In this work, we develop a framework called Granger Causality KAN (GC-KAN), along with a tailored training approach designed specifically for Granger causality detection. We test this framework on both Vector Autoregressive (VAR) models and chaotic Lorenz-96 systems, analysing the ability of KANs to sparsify input features by identifying Granger causal relationships, which yields a concise yet accurate model for Granger causality detection. Our findings show the potential of KANs to outperform MLPs in discerning interpretable Granger causal relationships, particularly in identifying sparse Granger causality patterns in high-dimensional settings, and, more generally, the potential of AI in causality discovery for the dynamical laws of physical systems.
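To make the linear starting point concrete, the sketch below illustrates classical Granger causality on a two-variable VAR(1) process. It is not the GC-KAN framework from the abstract: the coefficient matrix `A_TRUE`, the helper names `simulate_var1` and `fit_var1`, and the series length are all hypothetical choices made for illustration. In a linear VAR, series j does not Granger-cause series i when the lagged coefficients of j in the equation for i are zero, so a least-squares fit should recover a near-zero entry where no causal link exists.

```python
import random

# Hypothetical ground-truth VAR(1) coefficients (an assumption for this sketch):
# A_TRUE[i][j] is the effect of series j at time t-1 on series i at time t.
# Series 0 drives series 1, but series 1 does not drive series 0.
A_TRUE = [[0.5, 0.0],
          [0.8, 0.5]]


def simulate_var1(n_steps, seed=0):
    """Simulate x_t = A_TRUE x_{t-1} + e_t with unit-variance Gaussian noise."""
    rng = random.Random(seed)
    x = [0.0, 0.0]
    series = [x]
    for _ in range(n_steps):
        x = [A_TRUE[i][0] * x[0] + A_TRUE[i][1] * x[1] + rng.gauss(0.0, 1.0)
             for i in range(2)]
        series.append(x)
    return series


def fit_var1(series):
    """Least-squares estimate of the 2x2 lag-1 coefficient matrix."""
    past, future = series[:-1], series[1:]
    # Entries of the 2x2 Gram matrix of the lagged values.
    s00 = sum(p[0] * p[0] for p in past)
    s01 = sum(p[0] * p[1] for p in past)
    s11 = sum(p[1] * p[1] for p in past)
    det = s00 * s11 - s01 * s01
    coeffs = []
    for i in range(2):
        b0 = sum(p[0] * f[i] for p, f in zip(past, future))
        b1 = sum(p[1] * f[i] for p, f in zip(past, future))
        # Solve the 2x2 normal equations in closed form.
        coeffs.append([(s11 * b0 - s01 * b1) / det,
                       (s00 * b1 - s01 * b0) / det])
    return coeffs


A_HAT = fit_var1(simulate_var1(5000))
print("estimated effect of series 0 on series 1:", round(A_HAT[1][0], 3))
print("estimated effect of series 1 on series 0:", round(A_HAT[0][1], 3))
```

The estimated entry for the link that is absent from `A_TRUE` should be close to zero, while the entry for the true link should be close to its generating value. Neural approaches such as the one described in the abstract replace the linear map with a nonlinear model and look for a comparable sparsity pattern in the input features.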

Improving PINNs By Algebraic Inclusion of Boundary and Initial Conditions

Jul 30, 2024

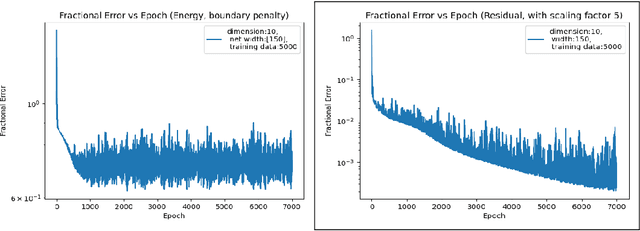

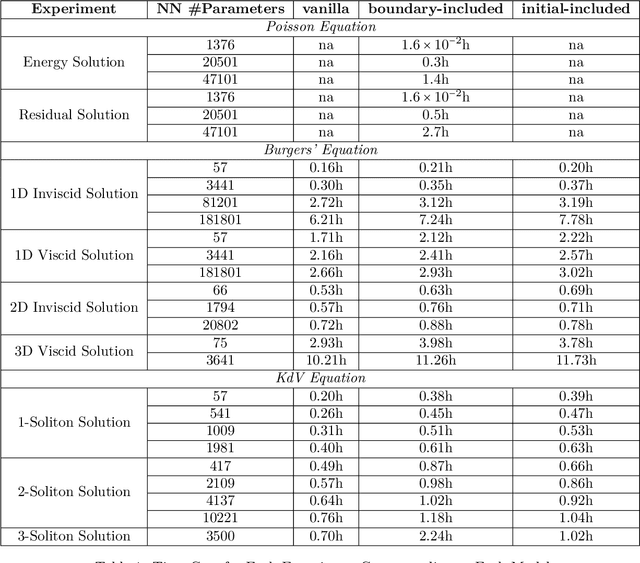

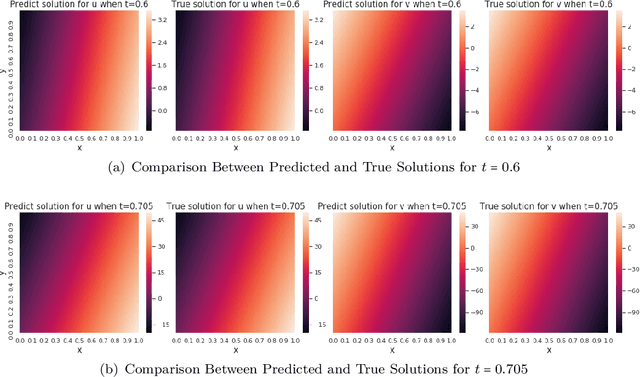

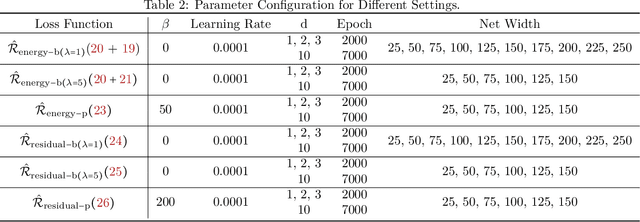

Abstract: "AI for Science" aims to solve fundamental scientific problems using AI techniques. As most physical phenomena can be described by Partial Differential Equations (PDEs), approximating their solutions using neural networks has become a central component of scientific ML. Physics-Informed Neural Networks (PINNs) are the general method that has evolved for this task, but their training is well known to be very unstable. In this work we explore the possibility of changing the model being trained from just a neural network to a non-linear transformation of it, one that algebraically includes the boundary/initial conditions. This reduces the number of terms in the loss function relative to the standard PINN loss. We demonstrate that our modification leads to significant performance gains across a range of benchmark tasks, in various dimensions, and without having to tweak the training algorithm. Our conclusions are based on hundreds of experiments, in the fully unsupervised setting, over multiple linear and non-linear PDEs set to exactly solvable scenarios. This allows a concrete measurement of our performance gains: the fractional errors achieved are order(s) of magnitude lower than those of standard PINNs. The code accompanying this manuscript is publicly available at https://github.com/MorganREN/Improving-PINNs-By-Algebraic-Inclusion-of-Boundary-and-Initial-Conditions
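As an illustration of what algebraically including boundary and initial conditions can look like, the sketch below wraps a stand-in network in a transformation that satisfies the conditions by construction. The specific problem (domain x in [0, 1], zero boundary values, initial profile sin(πx)), the multiplier t·x·(1 − x), and the names `raw_network`, `initial_condition`, and `constrained_model` are assumptions chosen for this example; the abstract does not specify the transformation the authors use.

```python
import math


def raw_network(x, t):
    # Stand-in for a trainable network N(x, t); the weights are arbitrary
    # placeholders, since the constraint holds for any N.
    return math.tanh(1.3 * x - 0.7 * t + 0.2)


def initial_condition(x):
    # Assumed initial profile u(x, 0) = sin(pi * x), which also vanishes
    # at x = 0 and x = 1.
    return math.sin(math.pi * x)


def constrained_model(x, t):
    # The factor t * x * (1 - x) is zero at t = 0, x = 0, and x = 1, so the
    # network term cannot disturb the initial or boundary values.
    return initial_condition(x) + t * x * (1.0 - x) * raw_network(x, t)


print(constrained_model(0.0, 0.7))   # boundary x = 0
print(constrained_model(1.0, 0.7))   # boundary x = 1
print(constrained_model(0.3, 0.0) - initial_condition(0.3))  # initial time
```

Because the conditions hold for every choice of network weights, a loss built on such a model would only need the PDE residual term, which is consistent with the reduction in loss terms the abstract describes.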
