Mohammed Sharafath Abdul Hameed

South Westphalia University of Applied Sciences, Germany

Curiosity Based Reinforcement Learning on Robot Manufacturing Cell

Nov 17, 2020

Abstract: This paper introduces a novel combination of scheduling control on a flexible robot manufacturing cell with curiosity-based reinforcement learning. Reinforcement learning has proved highly successful in solving tasks like robotics and scheduling. However, in these problem domains it requires hand tuning of rewards, even where the solution is not obvious. To alleviate this problem, we apply curiosity-based reinforcement learning, which uses intrinsic motivation as a form of reward, to a flexible robot manufacturing cell. Further, the learning agents are embedded into the transportation robots to enable a generalized learning solution that can be applied to a variety of environments. In the first approach, curiosity-based reinforcement learning is applied to a simple structured robot manufacturing cell. In the second approach, the same algorithm is applied to a graph-structured robot manufacturing cell. Results from the experiments show that the agents are able to solve both environments, and that the curiosity module can be transferred directly from one environment to another. We conclude that curiosity-based learning on scheduling tasks provides a viable alternative to the reward-shaped reinforcement learning traditionally used.
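To illustrate what "intrinsic motivation as a form of reward" can mean in practice, here is a minimal sketch of one common formulation: the intrinsic reward is the prediction error of a forward model that tries to predict the next state from the current state and action. This is not the paper's implementation. The class name `ForwardModelCuriosity`, the linear model, the one-hot action encoding and the learning rate are all hypothetical choices made to keep the example small and dependency-free.

```python
import random


class ForwardModelCuriosity:
    """Hypothetical curiosity module: a linear forward model whose squared
    prediction error on the next state is used as the intrinsic reward."""

    def __init__(self, state_dim, n_actions, lr=0.1, seed=0):
        rng = random.Random(seed)
        self.n_actions = n_actions
        self.lr = lr
        in_dim = state_dim + n_actions
        # weights[i][j]: contribution of input j to predicted next-state component i
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                        for _ in range(state_dim)]

    def _features(self, state, action):
        # Concatenate the state with a one-hot encoding of the discrete action
        one_hot = [1.0 if a == action else 0.0 for a in range(self.n_actions)]
        return list(state) + one_hot

    def predict(self, state, action):
        x = self._features(state, action)
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]

    def intrinsic_reward(self, state, action, next_state):
        """Return the squared prediction error, then take one SGD step so
        that familiar transitions become less rewarding over time."""
        x = self._features(state, action)
        errors = [p - t for p, t in zip(self.predict(state, action), next_state)]
        reward = 0.5 * sum(e * e for e in errors)
        for i, e in enumerate(errors):
            for j, xi in enumerate(x):
                self.weights[i][j] -= self.lr * e * xi
        return reward


if __name__ == "__main__":
    curiosity = ForwardModelCuriosity(state_dim=2, n_actions=3)
    s, a, s_next = [1.0, 0.0], 1, [0.0, 1.0]
    rewards = [curiosity.intrinsic_reward(s, a, s_next) for _ in range(20)]
    print(rewards[0] > rewards[-1])  # True
```

Repeating the same transition shrinks the reward, which is what pushes an agent toward unexplored parts of the environment without a hand-shaped extrinsic reward. Because the module only depends on state and action vectors, it hints at why a curiosity module might be reusable across environments with compatible encodings.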

Reinforcement Learning on Job Shop Scheduling Problems Using Graph Networks

Sep 08, 2020

Abstract: This paper presents a novel approach to job shop scheduling problems (JSSP) using deep reinforcement learning. To account for the complexity of production environments, we employ graph neural networks to model the various relations within them. Furthermore, we cast the JSSP as a distributed optimization problem in which learning agents are individually assigned to resources, which allows for higher flexibility with respect to changing production environments. The proposed distributed RL agents, which optimize production schedules for single resources, run together with a co-simulation framework of the production environment to obtain the required amount of data. The approach is applied to a multi-robot environment and a complex production scheduling benchmark environment. The initial results underline the applicability and performance of the proposed method.
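As a rough illustration of how a graph neural network can model relations in a production environment, the sketch below performs one round of mean-aggregation message passing over a small graph of resources. It is a generic example, not the architecture used in the paper: the node names, the two-dimensional features, the scalar `self_weight`/`neighbor_weight` (standing in for learnable weight matrices) and the ReLU are all hypothetical simplifications.

```python
def message_passing_step(features, edges, self_weight=0.5, neighbor_weight=0.5):
    """One round of mean-aggregation message passing.

    features: dict mapping node name -> list[float] feature vector
    edges: list of (u, v) pairs, treated as undirected
    Returns a new dict of updated node features (ReLU applied).
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        if nbrs:
            # Average each feature dimension over the node's neighbors
            agg = [sum(features[n][k] for n in nbrs) / len(nbrs) for k in range(len(h))]
        else:
            agg = [0.0] * len(h)
        # Combine own state with the aggregated neighbor message, then ReLU
        updated[node] = [max(0.0, self_weight * hk + neighbor_weight * ak)
                         for hk, ak in zip(h, agg)]
    return updated


if __name__ == "__main__":
    feats = {"robot": [1.0, 0.0], "machine_a": [0.0, 1.0], "machine_b": [0.0, 0.0]}
    links = [("robot", "machine_a"), ("robot", "machine_b")]
    print(message_passing_step(feats, links)["robot"])  # [0.5, 0.25]
```

In a distributed setup where one agent is assigned per resource, each agent could (hypothetically) read the embedding of its own node after a few such rounds, so that its decision reflects the state of connected machines and robots.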

Gradient Monitored Reinforcement Learning

May 25, 2020

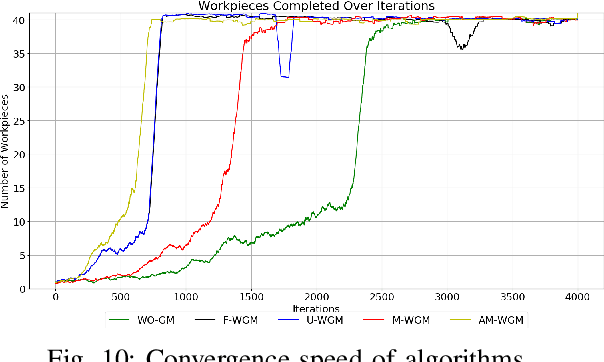

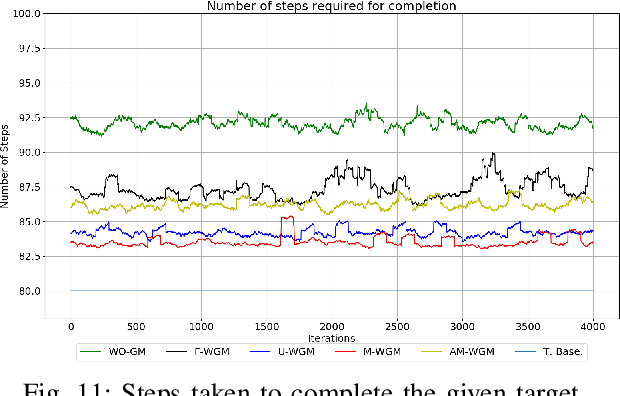

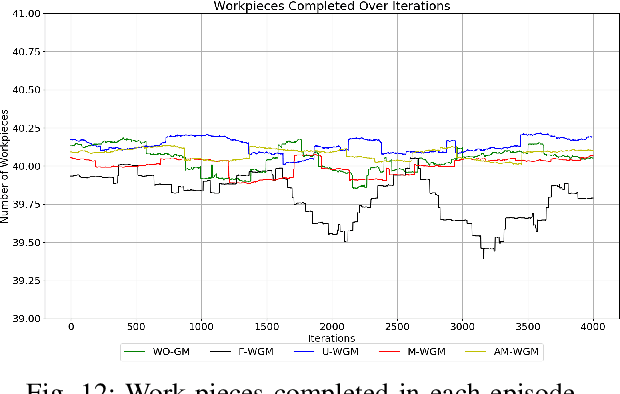

Abstract: This paper presents a novel neural network training approach for faster convergence and better generalization in deep reinforcement learning. In particular, we focus on improving training and evaluation performance in reinforcement learning algorithms by systematically reducing the variance of the gradients, thereby providing a more targeted learning process. The proposed method, which we term Gradient Monitoring (GM), steers learning in the weight parameters of a neural network based on the dynamic development of, and feedback from, the training process itself. We propose different variants of the GM methodology, which have been shown to increase the underlying performance of the model. One of the proposed variants, Momentum with Gradient Monitoring (M-WGM), allows for continuous adjustment of the quantum of back-propagated gradients in the network based on certain learning parameters. We further enhance the method with Adaptive Momentum with Gradient Monitoring (AM-WGM), which automatically adjusts between focused learning of certain weights and more dispersed learning depending on the feedback from the rewards collected. As a by-product, it also allows the required deep network size to be derived automatically during training, as the algorithm automatically freezes trained weights. The approach is applied to two discrete tasks (a multi-robot coordination problem and Atari games) and one continuous control task (MuJoCo) using Advantage Actor-Critic (A2C) and Proximal Policy Optimization (PPO), respectively. The results particularly underline the applicability of the methods and their improvements in generalization capability.
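The general idea of monitoring gradients and freezing some weights can be sketched with a simple mask: keep an exponential moving average of each weight's gradient magnitude and only update weights whose average is large relative to the current maximum. This is a hypothetical illustration of gradient masking, not the M-WGM or AM-WGM update rules from the paper; the function name `monitored_sgd_step` and the parameters `beta` and `keep_ratio` are assumptions introduced for the example.

```python
def monitored_sgd_step(weights, grads, momentum, lr=0.1, beta=0.9, keep_ratio=0.5):
    """One SGD step with a simple gradient mask (hypothetical sketch).

    momentum holds an exponential moving average of |grad| per weight and is
    updated in place. Only weights whose average is at least
    keep_ratio * max(average) are updated; the rest stay frozen this step.
    Returns (new_weights, mask).
    """
    for i, g in enumerate(grads):
        momentum[i] = beta * momentum[i] + (1.0 - beta) * abs(g)
    threshold = keep_ratio * max(momentum)
    mask = [1.0 if m >= threshold else 0.0 for m in momentum]
    new_weights = [w - lr * m * g for w, m, g in zip(weights, mask, grads)]
    return new_weights, mask


if __name__ == "__main__":
    w, avg = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
    w, mask = monitored_sgd_step(w, [1.0, 0.1, 0.6], avg)
    print(mask)  # [1.0, 0.0, 1.0]
```

Here `keep_ratio` controls how focused the learning is: a value near 1 updates only the most active weights, while a value near 0 updates almost all of them. An adaptive variant in the spirit of AM-WGM might tune this ratio from the rewards collected, but how the paper does so is not specified here.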
