Gradient Monitored Reinforcement Learning

May 25, 2020

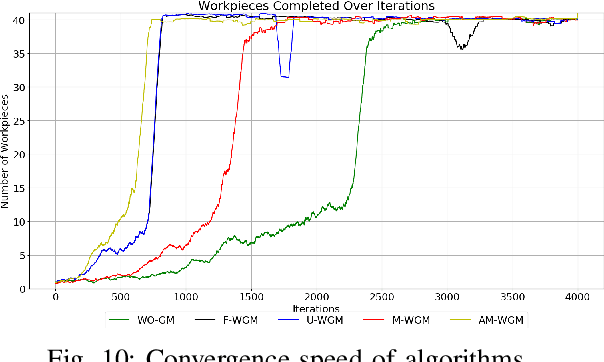

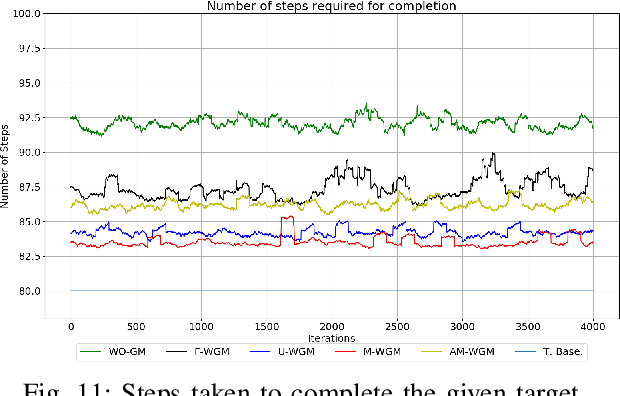

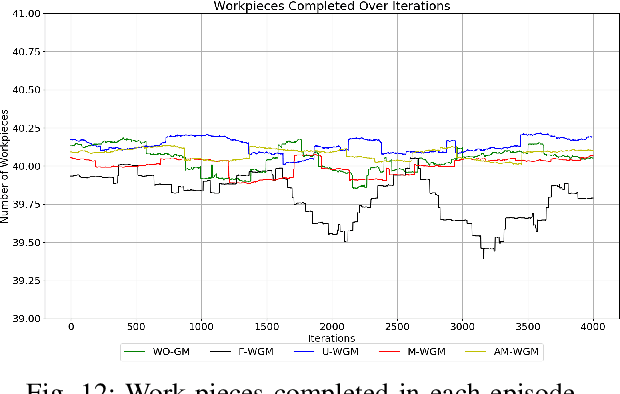

This paper presents a novel neural network training approach for faster convergence and better generalization in deep reinforcement learning. In particular, we focus on improving the training and evaluation performance of reinforcement learning algorithms by systematically reducing the variance of the gradients, thereby providing a more targeted learning process. The proposed method, which we term Gradient Monitoring (GM), steers the learning of a neural network's weight parameters based on the dynamic development of, and feedback from, the training process itself. We propose several variants of the GM methodology, which have been shown to increase the underlying performance of the model. One of the proposed variants, Momentum with Gradient Monitoring (M-WGM), allows for a continuous adjustment of the quantum of back-propagated gradients in the network based on certain learning parameters. We further enhance the method with Adaptive Momentum with Gradient Monitoring (AM-WGM), which automatically adjusts between focused learning of certain weights and more dispersed learning, depending on the feedback from the rewards collected. As a by-product, it also allows the required deep network size to be derived automatically during training, as the algorithm automatically freezes trained weights. The approach is applied to two discrete tasks (a multi-robot coordination problem and Atari games) and one continuous control task (MuJoCo), using Advantage Actor-Critic (A2C) and Proximal Policy Optimization (PPO), respectively. The results underline the applicability of the methods and their performance improvements, particularly in terms of generalization capability.
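
The abstract does not give the update rules, so the following is only a minimal sketch of the general idea of monitoring gradients and freezing some weights. It is not the paper's M-WGM or AM-WGM algorithm. It assumes a simple scheme in which a running average of each weight's absolute gradient decides which gradients are passed on, and the names `update_momentum`, `monitor_gradients`, `beta`, and `keep_fraction` are hypothetical.

```python
# Illustrative gradient-masking sketch (assumed scheme, not the paper's algorithm).

def update_momentum(momentum, grads, beta=0.9):
    """Exponential moving average of absolute gradient magnitudes, per weight."""
    return [beta * m + (1.0 - beta) * abs(g) for m, g in zip(momentum, grads)]


def monitor_gradients(grads, momentum, keep_fraction=0.5):
    """Zero the gradients of the weights with the lowest running magnitude.

    keep_fraction is a hypothetical knob: the share of weights left active.
    """
    n_keep = max(1, round(keep_fraction * len(grads)))
    # Indices of the n_keep weights with the largest running magnitude.
    ranked = sorted(range(len(grads)), key=lambda i: momentum[i], reverse=True)
    active = set(ranked[:n_keep])
    return [g if i in active else 0.0 for i, g in enumerate(grads)]


def sgd_step(weights, grads, lr=0.1):
    """Plain gradient descent step; masked (zero) gradients leave weights frozen."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Toy usage with made-up numbers.
weights = [1.0, 1.0, 1.0, 1.0]
momentum = [0.0, 0.0, 0.0, 0.0]
grads = [0.5, -0.01, 0.2, 0.02]

momentum = update_momentum(momentum, grads)
masked = monitor_gradients(grads, momentum, keep_fraction=0.5)
weights = sgd_step(weights, masked)
```

In this toy run, the two weights with the smallest running gradient magnitude receive a zero gradient and stay unchanged, which is one simple way to picture how freezing weights could focus learning on a subset of the network.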
